Terry Regier

American Sign Language Handshapes Reflect Pressures for Communicative Efficiency

Jun 06, 2024

Abstract: Communicative efficiency is a prominent theory in linguistics and cognitive science. While numerous studies have shown how the pressure to save energy is reflected in the form of spoken languages, few have explored this phenomenon in signed languages. In this paper, we show how handshapes in American Sign Language (ASL) reflect these efficiency pressures and we present new evidence of communicative efficiency in the visual-gestural modality. We focus on handshapes that are used in both native ASL signs and signs borrowed from English to compare efficiency pressures from both ASL and English. First, we design new methodologies to quantify the articulatory effort required to produce handshapes as well as the perceptual effort needed to recognize them. Then, we compare correlations between communicative effort and usage statistics in ASL and English. Our findings reveal that frequent ASL handshapes are easier to produce and that pressures for communicative efficiency mostly come from ASL usage, not from English lexical borrowing.

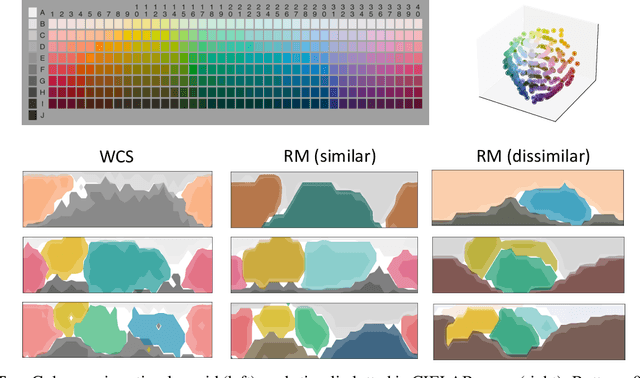

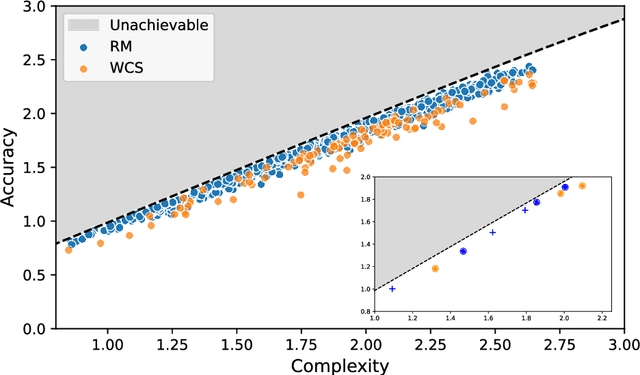

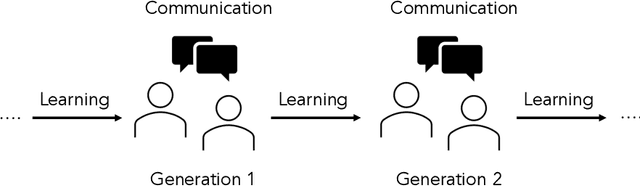

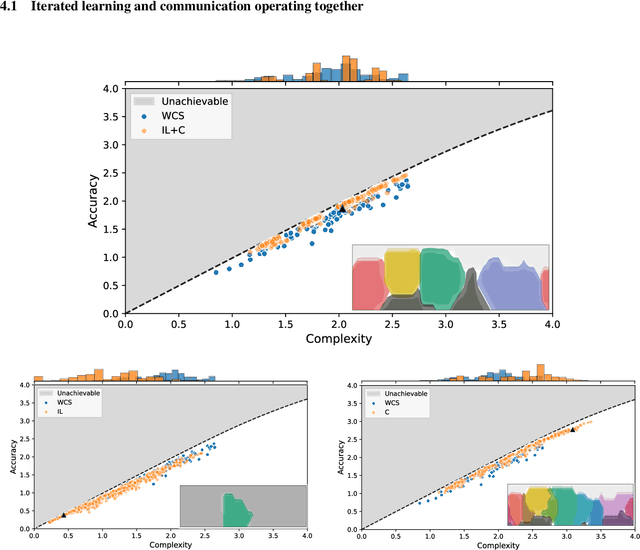

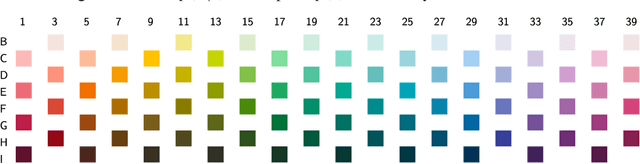

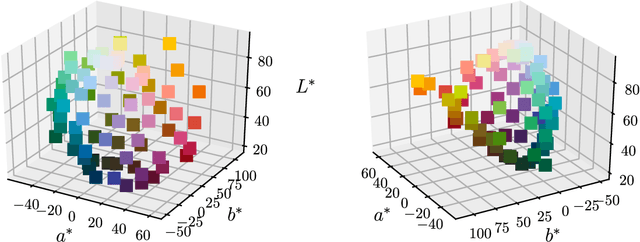

Iterated learning and communication jointly explain efficient color naming systems

May 17, 2023

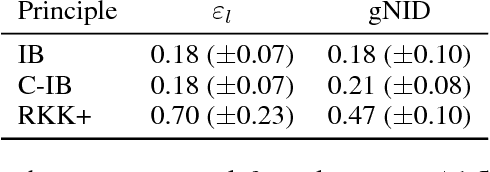

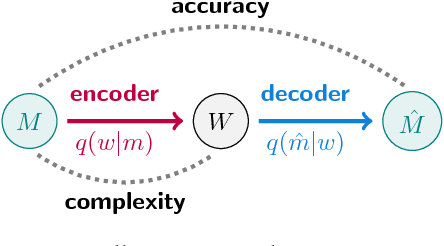

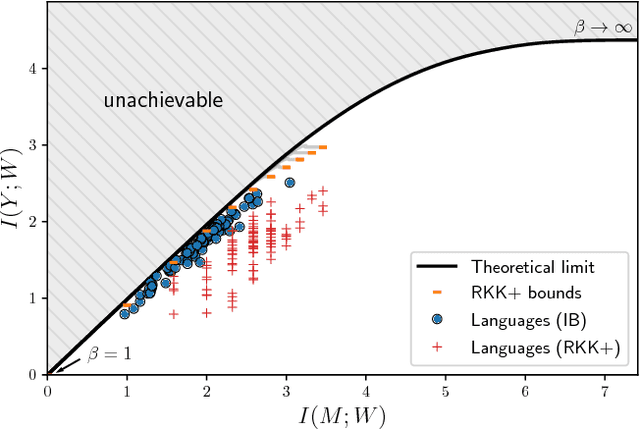

Abstract: It has been argued that semantic systems reflect pressure for efficiency, and a current debate concerns the cultural evolutionary process that produces this pattern. We consider efficiency as instantiated in the Information Bottleneck (IB) principle, and a model of cultural evolution that combines iterated learning and communication. We show that this model, instantiated in neural networks, converges to color naming systems that are efficient in the IB sense and similar to human color naming systems. We also show that iterated learning alone, and communication alone, do not yield the same outcome as clearly.

Does BERT agree? Evaluating knowledge of structure dependence through agreement relations

Aug 26, 2019

Abstract: Learning representations that accurately model semantics is an important goal of natural language processing research. Many semantic phenomena depend on syntactic structure. Recent work examines the extent to which state-of-the-art models for pre-training representations, such as BERT, capture such structure-dependent phenomena, but is largely restricted to one phenomenon in English: number agreement between subjects and verbs. We evaluate BERT's sensitivity to four types of structure-dependent agreement relations in a new semi-automatically curated dataset across 26 languages. We show that both the single-language and multilingual BERT models capture syntax-sensitive agreement patterns well in general, but we also highlight the specific linguistic contexts in which their performance degrades.

Semantic categories of artifacts and animals reflect efficient coding

May 11, 2019

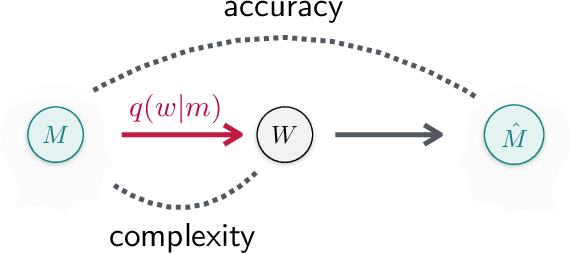

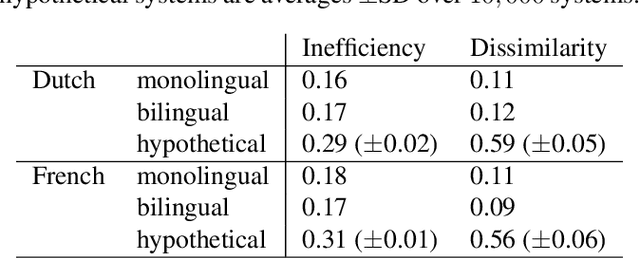

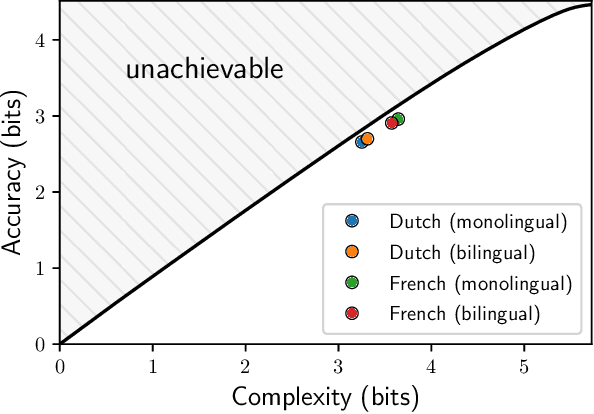

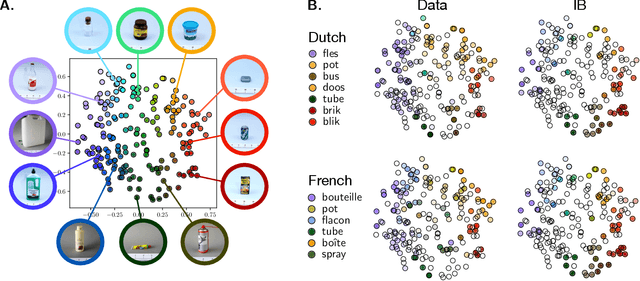

Abstract: It has been argued that semantic categories across languages reflect pressure for efficient communication. Recently, this idea has been cast in terms of a general information-theoretic principle of efficiency, the Information Bottleneck (IB) principle, and it has been shown that this principle accounts for the emergence and evolution of named color categories across languages, including soft structure and patterns of inconsistent naming. However, it is not yet clear to what extent this account generalizes to semantic domains other than color. Here we show that it generalizes to two qualitatively different semantic domains: names for containers, and for animals. First, we show that container naming in Dutch and French is near-optimal in the IB sense, and that IB broadly accounts for soft categories and inconsistent naming patterns in both languages. Second, we show that a hierarchy of animal categories derived from IB captures cross-linguistic tendencies in the growth of animal taxonomies. Taken together, these findings suggest that fundamental information-theoretic principles of efficient coding may shape semantic categories across languages and across domains.

Efficient human-like semantic representations via the Information Bottleneck principle

Aug 09, 2018

Abstract: Maintaining efficient semantic representations of the environment is a major challenge both for humans and for machines. While human languages represent useful solutions to this problem, it is not yet clear what computational principle could give rise to similar solutions in machines. In this work we propose an answer to this open question. We suggest that languages compress percepts into words by optimizing the Information Bottleneck (IB) tradeoff between the complexity and accuracy of their lexicons. We present empirical evidence that this principle may give rise to human-like semantic representations, by exploring how human languages categorize colors. We show that color naming systems across languages are near-optimal in the IB sense, and that these natural systems are similar to artificial IB color naming systems with a single tradeoff parameter controlling the cross-language variability. In addition, the IB systems evolve through a sequence of structural phase transitions, demonstrating a possible adaptation process. This work thus identifies a computational principle that characterizes human semantic systems, and that could usefully inform semantic representations in machines.

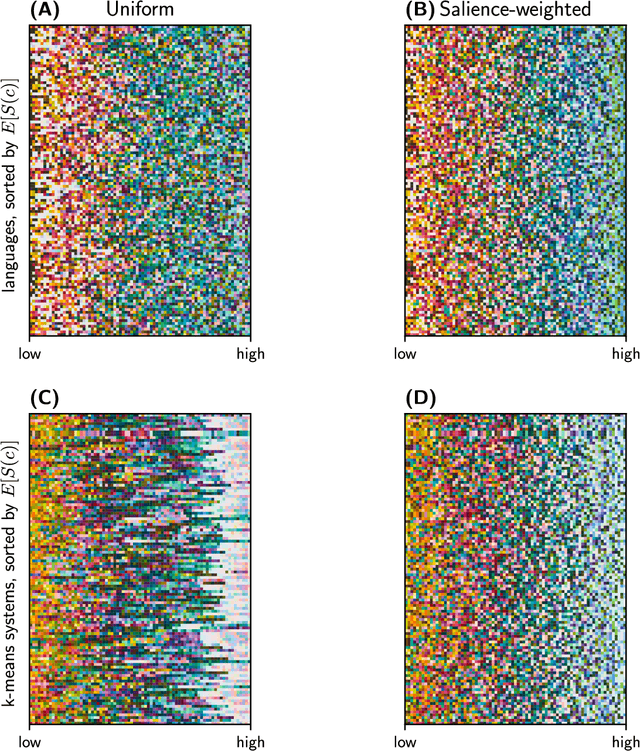

Color naming reflects both perceptual structure and communicative need

Aug 03, 2018

Abstract: Gibson et al. (2017) argued that color naming is shaped by patterns of communicative need. In support of this claim, they showed that color naming systems across languages support more precise communication about warm colors than cool colors, and that the objects we talk about tend to be warm-colored rather than cool-colored. Here, we present new analyses that alter this picture. We show that greater communicative precision for warm than for cool colors, and greater communicative need, may both be explained by perceptual structure. However, using an information-theoretic analysis, we also show that color naming across languages bears signs of communicative need beyond what would be predicted by perceptual structure alone. We conclude that color naming is shaped both by perceptual structure, as has traditionally been argued, and by patterns of communicative need, as argued by Gibson et al., although for reasons other than those they advanced.
