Tarang Chugh

Fingerprint Presentation Attack Detection: A Sensor and Material Agnostic Approach

Apr 06, 2020

Abstract:The vulnerability of automated fingerprint recognition systems to presentation attacks (PA), i.e., spoof or altered fingers, has been a growing concern, warranting the development of accurate and efficient presentation attack detection (PAD) methods. However, one major limitation of the existing PAD solutions is their poor generalization to new PA materials and fingerprint sensors, not used in training. In this study, we propose a robust PAD solution with improved cross-material and cross-sensor generalization. Specifically, we build on top of any CNN-based architecture trained for fingerprint spoof detection combined with cross-material spoof generalization using a style transfer network wrapper. We also incorporate adversarial representation learning (ARL) in deep neural networks (DNN) to learn sensor and material invariant representations for PAD. Experimental results on LivDet 2015 and 2017 public domain datasets exhibit the effectiveness of the proposed approach.

Fingerprint Spoof Detection: Temporal Analysis of Image Sequence

Dec 17, 2019

Abstract:We utilize the dynamics involved in the imaging of a fingerprint on a touch-based fingerprint reader, such as perspiration, changes in skin color (blanching), and skin distortion, to differentiate real fingers from spoof (fake) fingers. Specifically, we utilize a deep learning-based architecture (CNN-LSTM) trained end-to-end using sequences of minutiae-centered local patches extracted from ten color frames captured on a COTS fingerprint reader. A time-distributed CNN (MobileNet-v1) extracts spatial features from each local patch, while a bi-directional LSTM layer learns the temporal relationship between the patches in the sequence. Experimental results on a database of 26,650 live frames from 685 subjects (1,333 unique fingers), and 32,910 spoof frames of 7 spoof materials (with 14 variants) show the superiority of the proposed approach in both known-material and cross-material (generalization) scenarios. For instance, the proposed approach improves the state-of-the-art cross-material performance from TDR of 81.65% to 86.20% @ FDR = 0.2%.
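The "TDR @ FDR = 0.2%" figures quoted throughout these abstracts can be illustrated with a minimal sketch. It assumes that each sample receives a "spoofness" score, that a sample is flagged as a spoof when its score exceeds a threshold, that FDR is the fraction of live samples wrongly flagged, and that TDR is the fraction of spoof samples correctly flagged. The function name `tdr_at_fdr` and the scoring convention are illustrative assumptions, not taken from the papers.

```python
def tdr_at_fdr(live_scores, spoof_scores, target_fdr=0.002):
    """Return (threshold, tdr) at the operating point where FDR <= target_fdr.

    Assumed convention: higher score means "more spoof-like", and a
    sample is flagged as spoof when score > threshold.
    """
    if not live_scores or not spoof_scores:
        raise ValueError("need at least one live and one spoof score")
    n_live = len(live_scores)
    # Number of live samples that may be wrongly flagged within the budget.
    allowed = min(int(target_fdr * n_live), n_live - 1)
    ranked = sorted(live_scores, reverse=True)
    # Only live scores strictly above this value get flagged, so at most
    # `allowed` live samples are false detections (fewer if there are ties).
    threshold = ranked[allowed]
    detected = sum(score > threshold for score in spoof_scores)
    return threshold, detected / len(spoof_scores)


if __name__ == "__main__":
    live = [0.1, 0.2, 0.3, 0.9]
    spoof = [0.95, 0.8, 0.99, 0.5]
    print(tdr_at_fdr(live, spoof, target_fdr=0.0))
```

A very low FDR such as 0.2% needs several hundred live samples before the budget allows even one false detection, which is one reason the evaluations above rely on thousands of live images.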

Universal Material Translator: Towards Spoof Fingerprint Generalization

Dec 08, 2019

Abstract:Spoof detectors are classifiers that are trained to distinguish spoof fingerprints from bonafide ones. However, state-of-the-art spoof detectors do not generalize well to unseen spoof materials. This study proposes a style-transfer-based augmentation wrapper that can be used with any existing spoof detector and can dynamically improve the robustness of the spoof detection system on spoof materials for which very little data is available. Our method synthesizes new spoof images from a few spoof examples by transferring the style, or material properties, of the spoof examples to the content of bonafide fingerprints, generating a larger number of examples on which to train the classifier. We demonstrate the effectiveness of our approach on materials in the publicly available LivDet 2015 dataset and show that the proposed approach leads to robustness to fingerprint spoofs of the target material.

* 8 pages, 6 figures, conference

Fingerprint Spoof Generalization

Dec 05, 2019

Abstract:We present a style-transfer based wrapper, called Universal Material Generator (UMG), to improve the generalization performance of any fingerprint spoof detector against spoofs made from materials not seen during training. Specifically, we transfer the style (texture) characteristics between fingerprint images of known materials with the goal of synthesizing fingerprint images corresponding to unknown materials, that may occupy the space between the known materials in the deep feature space. Synthetic live fingerprint images are also added to the training dataset to force the CNN to learn generative-noise invariant features which discriminate between lives and spoofs. The proposed approach is shown to improve the generalization performance of a state-of-the-art spoof detector, namely Fingerprint Spoof Buster, from TDR of 75.24% to 91.78% @ FDR = 0.2%. These results are based on a large-scale dataset of 5,743 live and 4,912 spoof images fabricated using 12 different materials. Additionally, the UMG wrapper is shown to improve the average cross-sensor spoof detection performance from 67.60% to 80.63% when tested on the LivDet 2017 dataset. Training the UMG wrapper requires only 100 live fingerprint images from the target sensor, alleviating the time and resources required to generate large-scale live and spoof datasets for a new sensor. We also fabricate physical spoof artifacts using a mixture of known spoof materials to explore the role of cross-material style transfer in improving generalization performance.
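To make the idea of transferring style (texture) statistics between images concrete, here is a minimal sketch of adaptive instance normalization (AdaIN), a widely used style-transfer building block. The abstract does not say which mechanism UMG uses, so AdaIN is an assumption chosen for illustration; the function name `adain` and the flat per-channel list layout are also hypothetical.

```python
from statistics import fmean, pstdev


def adain(content, style, eps=1e-5):
    """Re-normalize content features to carry the style's channel statistics.

    `content` and `style` are lists of channels; each channel is a flat
    list of activations. For every channel, the content activations are
    standardized, then rescaled by the style's standard deviation and
    shifted by the style's mean.
    """
    stylized = []
    for c_chan, s_chan in zip(content, style):
        c_mean, c_std = fmean(c_chan), pstdev(c_chan)
        s_mean, s_std = fmean(s_chan), pstdev(s_chan)
        stylized.append(
            [s_std * (v - c_mean) / (c_std + eps) + s_mean for v in c_chan]
        )
    return stylized


if __name__ == "__main__":
    content_features = [[1.0, 2.0, 3.0, 4.0]]   # e.g. from one material
    style_features = [[10.0, 20.0, 30.0, 40.0]]  # e.g. from another material
    print(adain(content_features, style_features))
```

In a full pipeline the stylized features would be passed through a decoder network to produce an image; that part is omitted here.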

OCT Fingerprints: Resilience to Presentation Attacks

Jul 31, 2019

Abstract:Optical coherence tomography (OCT) fingerprint technology provides rich depth information, including internal fingerprint (papillary junction) and sweat (eccrine) glands, in addition to imaging any fake layers (presentation attacks) placed over finger skin. Unlike 2D surface fingerprint scans, the additional depth information provided by the cross-sectional OCT depth profile scans is purported to thwart fingerprint presentation attacks. We develop and evaluate a presentation attack detector (PAD) based on a deep convolutional neural network (CNN). The input to the CNN consists of local patches extracted from the cross-sectional OCT depth profile scans captured using a THORLabs Telesto series spectral-domain fingerprint reader. The proposed approach achieves a TDR of 99.73% @ FDR of 0.2% on a database of 3,413 bonafide and 357 PA OCT scans, fabricated using 8 different PA materials. By employing a visualization technique known as CNN-Fixations, we are able to identify the regions in the OCT scan patches that are crucial for fingerprint PAD.

Fingerprint Presentation Attack Detection: Generalization and Efficiency

Dec 30, 2018

Abstract:We study the problem of fingerprint presentation attack detection (PAD) under unknown PA materials not seen during PAD training. A dataset of 5,743 bonafide and 4,912 PA images of 12 different materials is used to evaluate a state-of-the-art PAD, namely Fingerprint Spoof Buster. We utilize 3D t-SNE visualization and clustering of material characteristics to identify a representative set of PA materials that covers most of the PA feature space. We observe that a set of six PA materials, namely Silicone, 2D Paper, Play Doh, Gelatin, Latex Body Paint, and Monster Liquid Latex, provides a good representative set that should be included in training to achieve generalization of PAD. We also propose an optimized Android app of Fingerprint Spoof Buster that runs on a commodity smartphone (Xiaomi Redmi Note 4) without a significant drop in PAD performance (from TDR = 95.7% to 95.3% @ FDR = 0.2%) and makes a PA prediction in less than 300 ms.

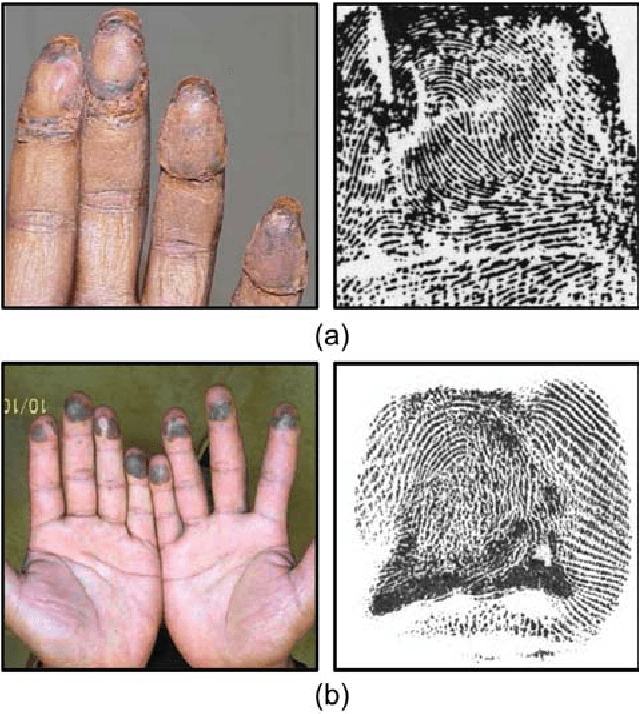

Altered Fingerprints: Detection and Localization

Sep 18, 2018

Abstract:Fingerprint alteration, also referred to as an obfuscation presentation attack, is the intentional tampering with or damaging of the real friction ridge patterns to avoid identification by an AFIS. This paper proposes a method for detection and localization of fingerprint alterations. Our main contributions are: (i) design and train CNN models on fingerprint images and minutiae-centered local patches in the image to detect and localize regions of fingerprint alterations, and (ii) train a Generative Adversarial Network (GAN) to synthesize altered fingerprints whose characteristics are similar to true altered fingerprints. A successfully trained GAN can alleviate the limited availability of altered fingerprint images for research. A database of 4,815 altered fingerprints from 270 subjects and an equal number of rolled fingerprint images is used to train and test our models. The proposed approach achieves a True Detection Rate (TDR) of 99.24% at a False Detection Rate (FDR) of 2%, outperforming published results. The synthetically generated altered fingerprint dataset will be open-sourced.

Matching Fingerphotos to Slap Fingerprint Images

Apr 22, 2018

Abstract:We address the problem of comparing fingerphotos, fingerprint images from a commodity smartphone camera, with the corresponding legacy slap contact-based fingerprint images. Development of robust versions of these technologies would enable the use of the billions of standard Android phones as biometric readers through a simple software download, dramatically lowering the cost and complexity of deployment relative to using a separate fingerprint reader. Two fingerphoto apps running on Android phones and an optical slap reader were utilized for fingerprint collection of 309 subjects who primarily work as construction workers, farmers, and domestic helpers. Experimental results show that a True Accept Rate (TAR) of 95.79% at a False Accept Rate (FAR) of 0.1% can be achieved in matching fingerphotos to slaps (two thumbs and two index fingers) using a COTS fingerprint matcher. By comparison, a baseline TAR of 98.55% at 0.1% FAR is achieved when matching fingerprint images from two different contact-based optical readers. We also report the usability of the two smartphone apps, in terms of failure to acquire rate and fingerprint acquisition time. Our results show that fingerphotos are promising for authenticating individuals (against a national ID database) for banking, welfare distribution, and healthcare applications in developing countries.

Fingerprint Spoof Buster

Dec 12, 2017

Abstract:The primary purpose of a fingerprint recognition system is to ensure reliable and accurate user authentication, but the security of the recognition system itself can be jeopardized by spoof attacks. This study addresses the problem of developing accurate, generalizable, and efficient algorithms for detecting fingerprint spoof attacks. Specifically, we propose a deep convolutional neural network based approach utilizing local patches centered and aligned using fingerprint minutiae. Experimental results on three public-domain LivDet datasets (2011, 2013, and 2015) show that the proposed approach provides state-of-the-art accuracies in fingerprint spoof detection for intra-sensor, cross-material, cross-sensor, as well as cross-dataset testing scenarios. For example, in LivDet 2015, the proposed approach achieves 99.03% average accuracy over all sensors compared to 95.51% achieved by the LivDet 2015 competition winners. Additionally, two new fingerprint presentation attack datasets containing more than 20,000 images, using two different fingerprint readers and over 12 different spoof fabrication materials, are collected. We also present a graphical user interface, called Fingerprint Spoof Buster, that allows the operator to visually examine the local regions of the fingerprint highlighted as live or spoof, instead of relying on only a single score as output by the traditional approaches.
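The phrase "local patches centered and aligned using fingerprint minutiae" can be sketched as follows. This is an illustrative assumption of how such a patch might be sampled, not the authors' implementation: the image is a 2D list of gray values, a minutia is given as column `x`, row `y`, and direction `theta` in radians, sampling is nearest-neighbor, and pixels outside the image are filled with 0. The function name `minutia_patch` is hypothetical.

```python
import math


def minutia_patch(image, x, y, theta, size):
    """Sample a size x size patch centered on (x, y) and rotated by theta.

    The patch's horizontal axis is aligned with the minutia direction, so
    patches from different minutiae share a common orientation.
    """
    height, width = len(image), len(image[0])
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half = (size - 1) / 2.0
    patch = []
    for row in range(size):
        dv = row - half  # vertical offset from the patch center
        out_row = []
        for col in range(size):
            du = col - half  # horizontal offset from the patch center
            # Rotate the patch offset into image coordinates.
            src_x = round(x + du * cos_t - dv * sin_t)
            src_y = round(y + du * sin_t + dv * cos_t)
            inside = 0 <= src_x < width and 0 <= src_y < height
            out_row.append(image[src_y][src_x] if inside else 0)
        patch.append(out_row)
    return patch


if __name__ == "__main__":
    img = [[r * 5 + c for c in range(5)] for r in range(5)]
    print(minutia_patch(img, 2, 2, 0.0, 3))
```

With `theta = 0` the function reduces to a plain crop around the minutia; a production pipeline would more likely use an image library with interpolated rotation.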
