Takeshi Ohashi

Online Data Curation for Object Detection via Marginal Contributions to Dataset-level Average Precision

Nov 18, 2025

Abstract:High-quality data has become a primary driver of progress under scaling laws, with curated datasets often outperforming much larger unfiltered ones at lower cost. Online data curation extends this idea by dynamically selecting training samples based on the model's evolving state. While effective in classification and multimodal learning, existing online sampling strategies rarely extend to object detection because of its structural complexity and domain gaps. We introduce DetGain, an online data curation method specifically for object detection that estimates the marginal perturbation of each image to dataset-level Average Precision (AP) based on its prediction quality. By modeling global score distributions, DetGain efficiently estimates the global AP change and computes teacher-student contribution gaps to select informative samples at each iteration. The method is architecture-agnostic and minimally intrusive, enabling straightforward integration into diverse object detection architectures. Experiments on the COCO dataset with multiple representative detectors show consistent improvements in accuracy. DetGain also demonstrates strong robustness under low-quality data and can be effectively combined with knowledge distillation techniques to further enhance performance, highlighting its potential as a general and complementary strategy for data-efficient object detection.

SemiISP/SemiIE: Semi-Supervised Image Signal Processor and Image Enhancement Leveraging One-to-Many Mapping sRGB-to-RAW

Apr 03, 2025

Abstract:DNN-based methods have been successful in Image Signal Processor (ISP) and image enhancement (IE) tasks. However, the cost of creating training data for these tasks is considerably higher than for other tasks, making it difficult to prepare large-scale datasets. Also, creating personalized ISP and IE with minimal training data can lead to new value streams, since preferred image quality varies depending on the person and use case. While semi-supervised learning could be a potential solution in such cases, it has rarely been utilized for these tasks. In this paper, we realize semi-supervised learning for ISP and IE by leveraging a RAW image reconstruction (sRGB-to-RAW) method. Although existing sRGB-to-RAW methods can generate pseudo-RAW image datasets that improve the accuracy of RAW-based high-level computer vision tasks such as object detection, their quality is not sufficient for ISP and IE tasks that require precise image quality definition. Therefore, we also propose an sRGB-to-RAW method that can improve the image quality of these tasks. The proposed semi-supervised learning with the proposed sRGB-to-RAW method successfully improves the image quality of various models on various datasets.

Extreme Compression of Adaptive Neural Images

May 27, 2024

Abstract:Implicit Neural Representations (INRs) and Neural Fields are a novel paradigm for signal representation, from images and audio to 3D scenes and videos. The fundamental idea is to represent a signal as a continuous and differentiable neural network. This idea offers unprecedented benefits such as continuous resolution and memory efficiency, enabling new compression techniques. However, representing data as neural networks poses new challenges. For instance, given a 2D image as a neural network, how can we further compress such a neural image? In this work, we present a novel analysis of compressing neural fields, with a focus on images. We also introduce Adaptive Neural Images (ANI), an efficient neural representation that enables adaptation to different inference or transmission requirements. Our proposed method reduces the bits-per-pixel (bpp) of the neural image by 4x without losing sensitive details or harming fidelity. We achieve this thanks to our successful implementation of 4-bit neural representations. Our work offers a new framework for developing compressed neural fields.

Multi Positive Contrastive Learning with Pose-Consistent Generated Images

Apr 04, 2024

Abstract:Model pre-training has become essential in various recognition tasks. Meanwhile, with the remarkable advancements in image generation models, pre-training methods utilizing generated images have also emerged, given their ability to produce unlimited training data. However, while existing methods utilizing generated images excel in classification, they fall short in more practical tasks, such as human pose estimation. In this paper, we experimentally demonstrate this shortcoming and propose generating visually distinct images with identical human poses. We then propose a novel multi-positive contrastive learning method, which optimally utilizes the previously generated images to learn structural features of the human body. We term the entire learning pipeline GenPoCCL. Despite using less than 1% of the data used by the current state-of-the-art method, GenPoCCL captures structural features of the human body more effectively, surpassing existing methods in a variety of human-centric perception tasks.

Mixed-precision Supernet Training from Vision Foundation Models using Low Rank Adapter

Mar 29, 2024

Abstract:Compression of large and performant vision foundation models (VFMs) into arbitrary bit-wise operations (BitOPs) allows their deployment on various hardware. We propose to fine-tune a VFM to a mixed-precision quantized supernet. Supernet-based neural architecture search (NAS) can be adopted for this purpose: it trains a supernet, from which subnets within arbitrary hardware budgets can then be extracted. However, existing methods face difficulties in optimizing the mixed-precision search space and incur large memory costs during training. To tackle these challenges, first, we study the effective search space design for fine-tuning a VFM by comparing different operators (such as resolution, feature size, width, depth, and bit-widths) in terms of performance and BitOPs reduction. Second, we propose memory-efficient supernet training using a low-rank adapter (LoRA) and a progressive training strategy. The proposed method is evaluated on the recently proposed VFM, Segment Anything Model, fine-tuned on segmentation tasks. The searched model yields about a 95% reduction in BitOPs without incurring performance degradation.

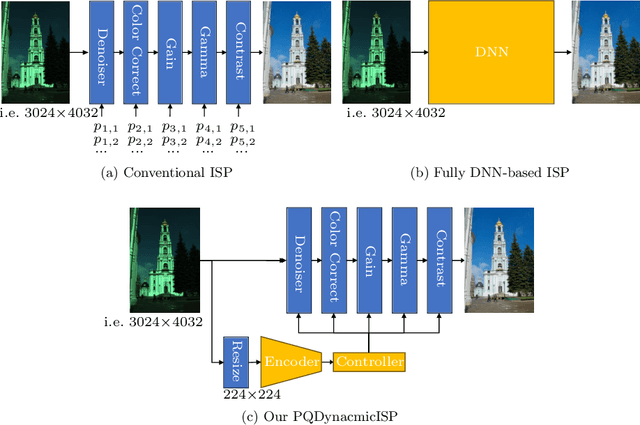

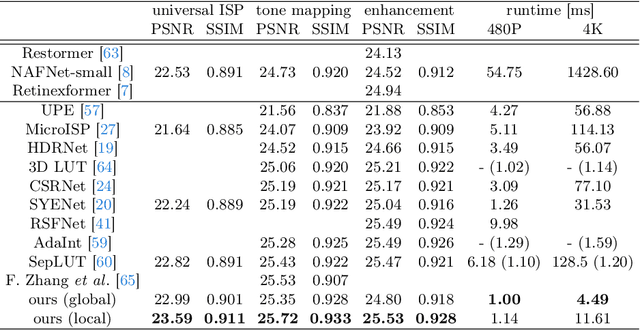

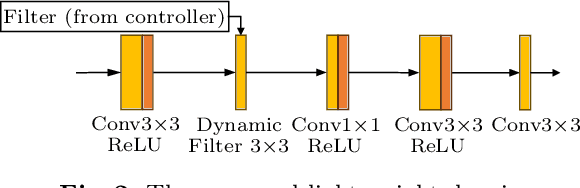

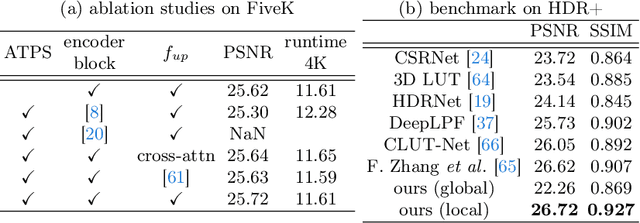

PQDynamicISP: Dynamically Controlled Image Signal Processor for Any Image Sensors Pursuing Perceptual Quality

Mar 15, 2024

Abstract:Full DNN-based image signal processors (ISPs) have been actively studied and have achieved superior image quality compared to conventional ISPs. In contrast to this trend, we propose a lightweight ISP that consists of simple conventional ISP functions but achieves high image quality by increasing expressiveness. Specifically, instead of tuning the parameters of the ISP, we propose to control them dynamically for each environment and even locally. As a result, state-of-the-art accuracy is achieved on various datasets, including other tasks like tone mapping and image enhancement, even though ours is lighter than DNN-based ISPs. Additionally, our method can process different image sensors with a single ISP through dynamic control, whereas conventional methods require training for each sensor.

Self-Supervised Reversed Image Signal Processing via Reference-Guided Dynamic Parameter Selection

Mar 24, 2023

Abstract:Unprocessed sensor outputs (RAW images) potentially improve both low-level and high-level computer vision algorithms, but the lack of large-scale RAW image datasets is a barrier to research. Thus, reversed Image Signal Processing (ISP), which converts existing RGB images into RAW images, has been studied. However, most existing methods require camera-specific metadata or paired RGB and RAW images to model the conversion, and these are not always available. In addition, there are issues in handling diverse ISPs and recovering global illumination. To tackle these limitations, we propose a self-supervised reversed ISP method that requires neither metadata nor paired images. The proposed method converts an RGB image into a RAW-like image taken in the same environment with the same sensor as a reference RAW image by dynamically selecting parameters of the reversed ISP pipeline based on the reference RAW image. The parameter selection is trained via pseudo paired data created from unpaired RGB and RAW images. We show that the proposed method is able to learn various reversed ISPs with accuracy comparable to other state-of-the-art supervised methods and to convert unknown RGB images from COCO and Flickr1M to target RAW-like images more accurately in terms of pixel distribution. We also demonstrate that our generated RAW images improve performance on a real RAW image object detection task.

Efficient Joint Detection and Multiple Object Tracking with Spatially Aware Transformer

Nov 09, 2022

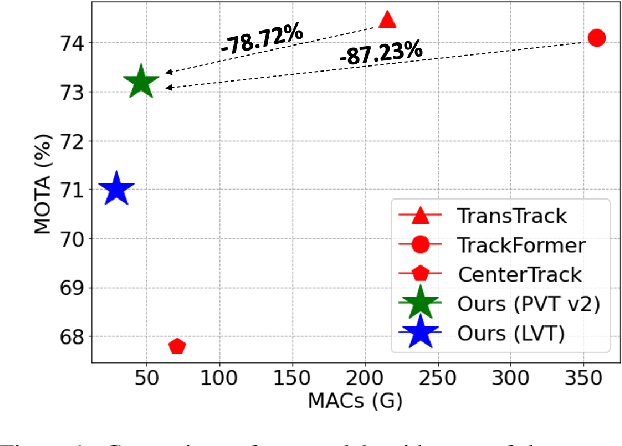

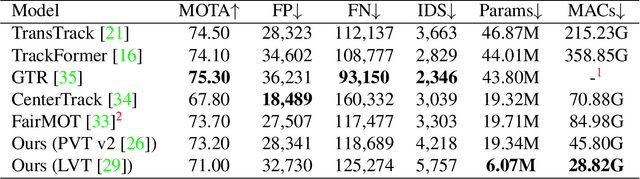

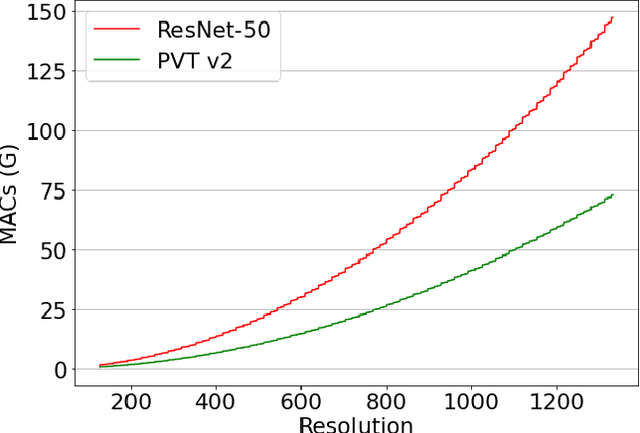

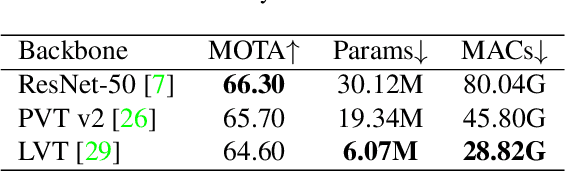

Abstract:We propose a lightweight and highly efficient Joint Detection and Tracking pipeline for the task of Multi-Object Tracking using a fully-transformer architecture. It is a modified version of TransTrack that overcomes the computational bottleneck associated with its design and, at the same time, achieves a state-of-the-art MOTA score of 73.20%. The model design is driven by a transformer-based backbone instead of a CNN, which is highly scalable with the input resolution. We also propose a drop-in replacement for the Feed Forward Network of the transformer encoder layer, using the Butterfly Transform Operation to perform channel fusion and depth-wise convolution to learn spatial context within the feature maps, which is otherwise missing from the attention maps of the transformer. As a result of our modifications, we reduce the overall model size of TransTrack by 58.73% and the complexity by 78.72%. Therefore, we expect our design to provide novel perspectives for architecture optimization in future research related to multi-object tracking.

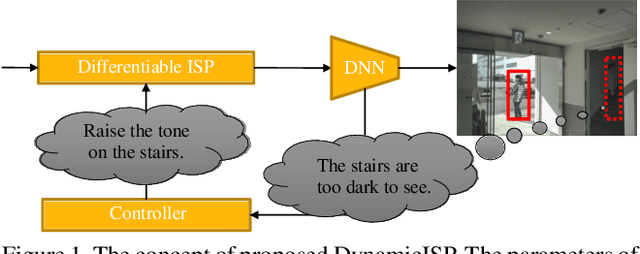

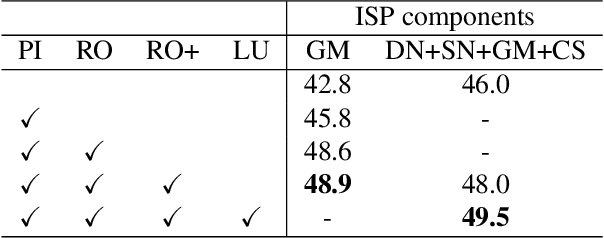

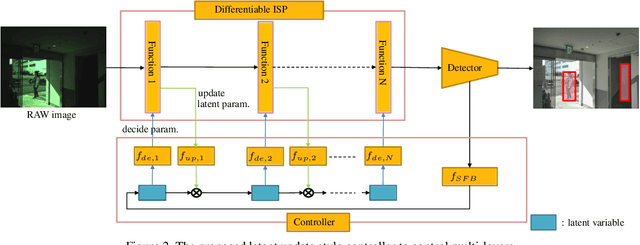

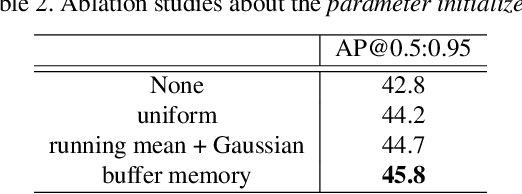

DynamicISP: Dynamically Controlled Image Signal Processor for Image Recognition

Nov 02, 2022

Abstract:The image signal processor (ISP) plays an important role not only in human perceptual quality but also in computer vision. In most cases, experts resort to manual tuning of many ISP parameters for perceptual quality. This yields sub-optimal results, especially for computer vision. To improve ISPs, two approaches have been actively proposed: tuning the parameters with machine learning, or constructing an ISP with a DNN. The former is lightweight but lacks expressive power. The latter has expressive power but is too heavy to run on edge devices. To this end, we propose DynamicISP, which consists of simple traditional ISP functions whose parameters are controlled dynamically per image according to what the downstream image recognition model perceived in the previous frame. Our proposed method successfully controls the parameters of multiple ISP functions and achieves state-of-the-art accuracy at a small computational cost.

Rawgment: Noise-Accounted RAW Augmentation Enables Recognition in a Wide Variety of Environments

Oct 28, 2022

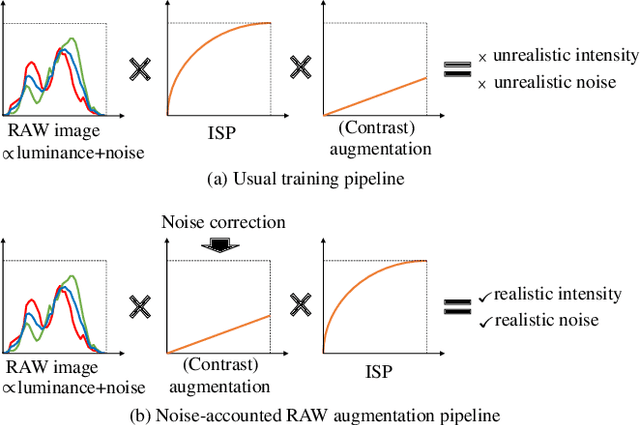

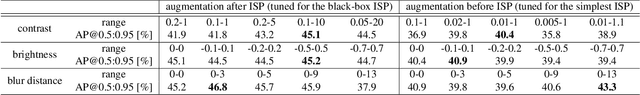

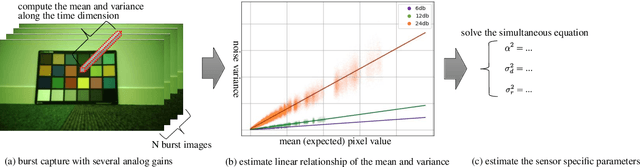

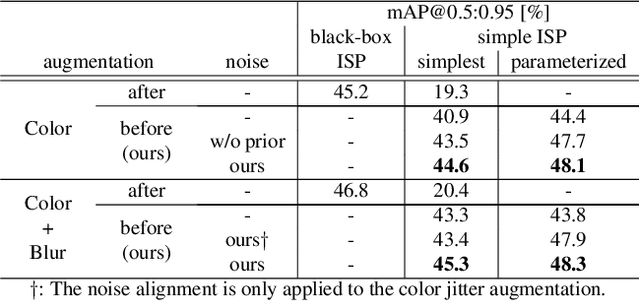

Abstract:Image recognition models that can work in challenging environments (e.g., extremely dark, blurry, or high dynamic range conditions) would be highly useful. However, creating a training dataset for such environments is expensive and hard due to the difficulties of data collection and annotation. It would be desirable to obtain a robust model without needing such hard-to-obtain datasets. One simple approach is to apply data augmentation such as color jitter and blur to standard RGB (sRGB) images in simple scenes. Unfortunately, this approach struggles to yield realistic images in terms of pixel intensity and noise distribution because it does not consider the non-linearity of the Image Signal Processor (ISP) or the noise characteristics of an image sensor. Instead, we propose a noise-accounted RAW image augmentation method. In essence, color jitter and blur augmentation are applied to a RAW image before applying the non-linear ISP, yielding realistic intensity. Furthermore, we introduce a noise amount alignment method that calibrates the domain gap in noise properties caused by the augmentation. We show that our proposed noise-accounted RAW augmentation method doubles the image recognition accuracy in challenging environments using only simple training data.
