Tai Fei

Leveraging Transformer Decoder for Automotive Radar Object Detection

Jan 19, 2026

Abstract: In this paper, we present a Transformer-based architecture for 3D radar object detection that uses a novel Transformer Decoder as the prediction head to directly regress 3D bounding boxes and class scores from radar feature representations. To bridge multi-scale radar features and the decoder, we propose Pyramid Token Fusion (PTF), a lightweight module that converts a feature pyramid into a unified, scale-aware token sequence. By formulating detection as a set prediction problem with learnable object queries and positional encodings, our design models long-range spatial-temporal correlations and cross-feature interactions. This approach eliminates dense proposal generation and heuristic post-processing such as extensive non-maximum suppression (NMS) tuning. We evaluate the proposed framework on the RADDet dataset, where it achieves significant improvements over state-of-the-art radar-only baselines.
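The abstract does not say how PTF builds its token sequence, so the following is only a rough sketch of the general idea of flattening a feature pyramid into scale-aware tokens. The function name `pyramid_to_tokens`, the nested-list layout `fmap[c][h][w]`, and the choice to tag each token with a normalized level index and cell coordinates are all assumptions made for illustration. The sketch also assumes every level has the same number of channels.

```python
def pyramid_to_tokens(pyramid):
    """Flatten a list of feature maps into one token sequence.

    Each feature map is a nested list indexed as fmap[c][h][w]
    (hypothetical layout). Every spatial cell becomes one token:
    its C channel values followed by three scale/position tags.
    """
    tokens = []
    num_levels = len(pyramid)
    for level, fmap in enumerate(pyramid):
        channels = len(fmap)
        height = len(fmap[0])
        width = len(fmap[0][0])
        # Normalized level index in [0, 1]; 0.0 when there is a single level.
        level_tag = level / (num_levels - 1) if num_levels > 1 else 0.0
        for h in range(height):
            for w in range(width):
                features = [fmap[c][h][w] for c in range(channels)]
                # Cell-center coordinates normalized to (0, 1), so tokens
                # from different resolutions share one coordinate frame.
                y = (h + 0.5) / height
                x = (w + 0.5) / width
                tokens.append(features + [level_tag, y, x])
    return tokens


# Two-level toy pyramid with 2 channels: a 2x2 map and a 1x1 map.
fine = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]
coarse = [[[9.0]], [[10.0]]]
tokens = pyramid_to_tokens([fine, coarse])
```

Here the four cells of the fine map and the single cell of the coarse map end up in one sequence of five tokens, which a decoder could then attend over without knowing the original grid shapes.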

Synthetic FMCW Radar Range Azimuth Maps Augmentation with Generative Diffusion Model

Jan 09, 2026

Abstract: The scarcity and low diversity of well-annotated automotive radar datasets often limit the performance of deep-learning-based environmental perception. To overcome these challenges, we propose a conditional generative framework for synthesizing realistic Frequency-Modulated Continuous-Wave (FMCW) radar Range-Azimuth Maps. Our approach leverages a generative diffusion model to generate radar data for multiple object categories, including pedestrians, cars, and cyclists. Specifically, conditioning is achieved via Confidence Maps, where each channel represents a semantic class and encodes Gaussian-distributed annotations at target locations. To address radar-specific characteristics, we incorporate Geometry Aware Conditioning and Temporal Consistency Regularization into the generative process. Experiments on the ROD2021 dataset demonstrate that signal reconstruction quality improves by 3.6 dB in Peak Signal-to-Noise Ratio over baseline methods, while training with a combination of real and synthetic datasets improves overall mean Average Precision by 4.15% compared with conventional image-processing-based augmentation. These results indicate that our generative framework not only produces physically plausible and diverse radar spectra but also substantially improves model generalization in downstream tasks.
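The Confidence Map conditioning described in this abstract (one channel per class, a Gaussian at each target location) can be sketched as follows. This is a minimal illustration, not the authors' code: the function name, the `(class_index, row, col)` target format, the value of `sigma`, the class ordering, and merging overlapping Gaussians with `max()` are all assumptions.

```python
import math


def confidence_map(height, width, num_classes, targets, sigma=1.5):
    """Build a [num_classes][height][width] map with one Gaussian per target.

    `targets` is a list of (class_index, row, col) tuples (hypothetical format).
    Overlapping Gaussians of the same class are merged with max().
    """
    cmap = [[[0.0] * width for _ in range(height)] for _ in range(num_classes)]
    for cls, r0, c0 in targets:
        for r in range(height):
            for c in range(width):
                d2 = (r - r0) ** 2 + (c - c0) ** 2
                value = math.exp(-d2 / (2.0 * sigma ** 2))
                cmap[cls][r][c] = max(cmap[cls][r][c], value)
    return cmap


# Classes (assumed ordering): 0 = pedestrian, 1 = car, 2 = cyclist.
cmap = confidence_map(8, 8, 3, [(0, 2, 3), (1, 5, 5)])
```

Each channel peaks at 1.0 on its target cell and decays smoothly around it, while a class with no targets (here the cyclist channel) stays all zeros.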

High-Resolution Range-Doppler Imaging from One-Bit PMCW Radar via Generative Adversarial Networks

Mar 17, 2025

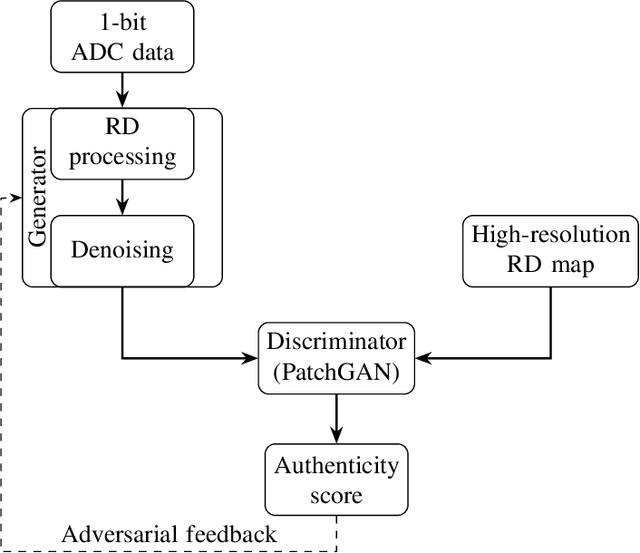

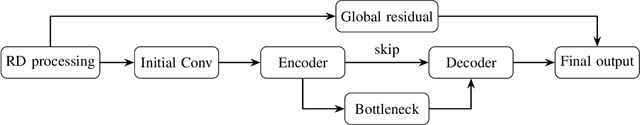

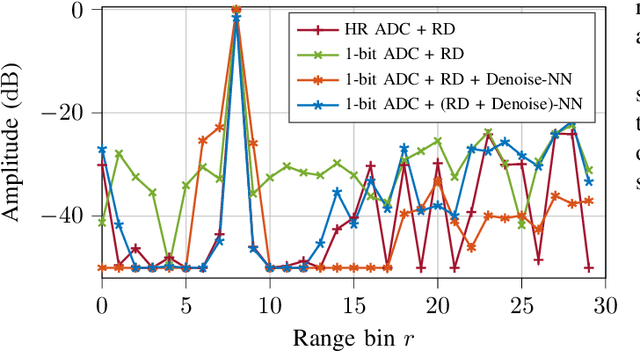

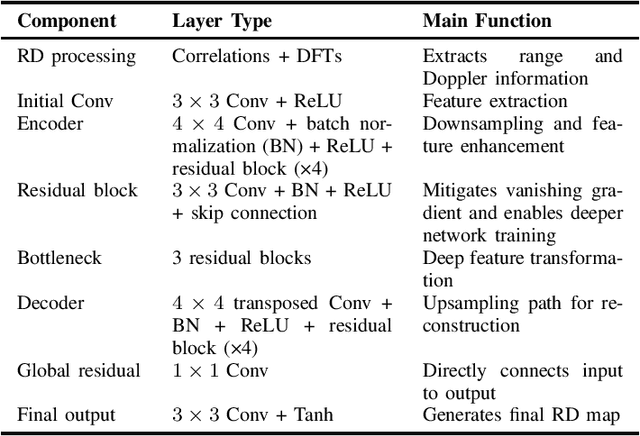

Abstract: Digital modulation schemes such as phase-modulated continuous wave (PMCW) have recently attracted increasing attention as possible replacements for frequency-modulated continuous wave (FMCW) modulation in future automotive radar systems. A significant obstacle to their widespread adoption is the expensive and power-consuming analog-to-digital converter (ADC) required at gigahertz sampling rates. To mitigate these challenges, employing low-resolution ADCs, such as one-bit ADCs, has been suggested. Nonetheless, one-bit sampling results in the loss of essential information. This study explores two neural network (NN) based range-Doppler (RD) imaging methods for PMCW radar systems. The first method merges standard RD signal processing with a generative adversarial network (GAN), whereas the second method uses an end-to-end (E2E) strategy in which traditional signal processing is replaced with an NN-based RD module. The findings indicate that these methods can substantially improve the probability of detecting targets in the range-Doppler domain.
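To make the information loss from one-bit sampling concrete, here is a small sketch that quantizes the in-phase and quadrature components of complex samples to their signs. The function name and the test tone are hypothetical and are not taken from the paper. The sketch only shows that amplitude is discarded, which is one part of the lost information.

```python
import cmath


def one_bit_quantize(samples):
    """Keep only the sign of the I and Q components of each complex sample."""
    def sign(v):
        return 1.0 if v >= 0.0 else -1.0
    return [complex(sign(s.real), sign(s.imag)) for s in samples]


# A weak and a strong copy of the same complex tone (hypothetical test signal).
tone = [cmath.exp(2j * cmath.pi * 0.11 * n + 0.3j) for n in range(16)]
weak = [0.1 * s for s in tone]
strong = [10.0 * s for s in tone]

# After one-bit sampling the two copies are indistinguishable.
same_after_quantization = one_bit_quantize(weak) == one_bit_quantize(strong)
```

Because the 40 dB power difference between `weak` and `strong` vanishes after quantization, any downstream range-Doppler processing has to work without direct amplitude information.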

Sequential Maximum-Likelihood Estimation of Wideband Polynomial-Phase Signals on Sensor Array

Dec 30, 2024

Abstract: This paper presents a novel sequential estimator for the direction-of-arrival and polynomial coefficients of wideband polynomial-phase signals impinging on a sensor array. Addressing the computational challenges of maximum-likelihood estimation for this problem, we propose a method leveraging random sample consensus (RANSAC) applied to the time-frequency spatial signatures of sources. Our approach supports multiple sources and higher-order polynomials by employing coherent array processing and sequential approximations of the maximum-likelihood cost function. We also propose a low-complexity variant that estimates source directions via angular domain random sampling. Numerical evaluations demonstrate that the proposed methods achieve Cramér-Rao bounds in challenging multi-source scenarios, including closely spaced time-frequency spatial signatures, highlighting their suitability for advanced radar signal processing applications.
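As a rough illustration of how RANSAC can pick out a time-frequency signature, the sketch below fits a straight line to noisy (time, frequency) points, which corresponds to the instantaneous frequency of a second-order polynomial-phase signal (a linear chirp). This is plain single-source RANSAC with a least-squares refit, not the sequential array estimator from the paper. The function name, parameters, and toy data are assumptions.

```python
import random


def ransac_line(points, iterations=200, tol=0.5, seed=0):
    """Fit f = a + b * t to (t, f) points with plain RANSAC.

    Returns (a, b, inliers). All parameter names and defaults are illustrative.
    """
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        (t1, f1), (t2, f2) = rng.sample(points, 2)
        if t1 == t2:
            continue  # a vertical pair cannot define f as a function of t
        b = (f2 - f1) / (t2 - t1)
        a = f1 - b * t1
        inliers = [(t, f) for t, f in points if abs(f - (a + b * t)) <= tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Least-squares refit on the consensus set.
    n = len(best_inliers)
    mean_t = sum(t for t, _ in best_inliers) / n
    mean_f = sum(f for _, f in best_inliers) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in best_inliers)
    cov_tf = sum((t - mean_t) * (f - mean_f) for t, f in best_inliers)
    b = cov_tf / var_t
    a = mean_f - b * mean_t
    return a, b, best_inliers


# Time-frequency ridge of a linear chirp (f = 2 + 0.5 t) plus gross outliers.
ridge = [(float(t), 2.0 + 0.5 * t) for t in range(20)]
outliers = [(3.0, 40.0), (7.0, -25.0), (11.0, 60.0), (15.0, -30.0), (18.0, 90.0)]
a, b, inliers = ransac_line(ridge + outliers)
```

The consensus step is what makes the fit robust: the five gross outliers never agree with a line through two ridge points, so they are excluded before the least-squares refit.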

Warping of Radar Data into Camera Image for Cross-Modal Supervision in Automotive Applications

Dec 23, 2020

Abstract: In this paper, we present a novel framework to project the automotive radar range-Doppler (RD) spectrum into the camera image. The warping operation is designed to be fully differentiable, which allows error backpropagation through the operation. This enables the training of neural networks (NN) operating exclusively on the RD spectrum by utilizing labels provided by camera vision models. As the warping operation relies on accurate scene flow, we additionally present a novel scene flow estimation algorithm fed by camera, lidar, and radar data, enabling us to improve the accuracy of the warping operation. We demonstrate the framework in multiple applications such as direction-of-arrival (DoA) estimation, target detection, semantic segmentation, and estimation of radar power from camera data. Extensive evaluations have been carried out for the DoA application and suggest superior quality for NN-based estimators compared to classical estimators. The novel scene flow estimation approach is benchmarked against state-of-the-art scene flow algorithms and outperforms them by roughly a third.
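The abstract does not state how the warping is made differentiable. A common building block for differentiable warps is bilinear sampling, sketched below purely as an assumption about the kind of operation involved. The function name and the border clamping are illustrative choices, and the sketch expects an image with at least two rows and two columns.

```python
def bilinear_sample(image, y, x):
    """Sample image[row][col] at a fractional (y, x) with bilinear weights.

    The output is a weighted sum of the four neighboring pixels, so it is
    piecewise linear in both the pixel values and the sampling coordinates.
    Coordinates outside the image are clamped to the border.
    """
    height, width = len(image), len(image[0])
    y = min(max(y, 0.0), height - 1.0)
    x = min(max(x, 0.0), width - 1.0)
    # Top-left neighbor, capped so that (y0 + 1, x0 + 1) stays in bounds.
    y0 = min(int(y), height - 2)
    x0 = min(int(x), width - 2)
    dy, dx = y - y0, x - x0
    return ((1 - dy) * (1 - dx) * image[y0][x0]
            + (1 - dy) * dx * image[y0][x0 + 1]
            + dy * (1 - dx) * image[y0 + 1][x0]
            + dy * dx * image[y0 + 1][x0 + 1])


image = [[0.0, 1.0], [2.0, 3.0]]
center = bilinear_sample(image, 0.5, 0.5)  # average of the four pixels: 1.5
```

Because the sampled value varies smoothly with `y` and `x` inside each pixel cell, gradients can flow back both to the source values and to the coordinates that a warp predicts.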
