Syed Ishtiaque Ahmed

LLM-Based Multi-Task Bangla Hate Speech Detection: Type, Severity, and Target

Oct 02, 2025

Abstract:Online social media platforms are central to everyday communication and information seeking. While these platforms serve positive purposes, they also provide fertile ground for the spread of hate speech, offensive language, and bullying content targeting individuals, organizations, and communities. Such content undermines safety, participation, and equity online. Reliable detection systems are therefore needed, especially for low-resource languages where moderation tools are limited. In Bangla, prior work has contributed resources and models, but most are single-task (e.g., binary hate/offense) with limited coverage of multi-facet signals (type, severity, target). We address these gaps by introducing the first multi-task Bangla hate-speech dataset, BanglaMultiHate, one of the largest manually annotated corpora to date. Building on this resource, we conduct a comprehensive, controlled comparison spanning classical baselines, monolingual pretrained models, and LLMs under zero-shot prompting and LoRA fine-tuning. Our experiments assess LLM adaptability in a low-resource setting and reveal a consistent trend: although LoRA-tuned LLMs are competitive with BanglaBERT, culturally and linguistically grounded pretraining remains critical for robust performance. Together, our dataset and findings establish a stronger benchmark for developing culturally aligned moderation tools in low-resource contexts. For reproducibility, we will release the dataset and all related scripts.

Human-Aligned Faithfulness in Toxicity Explanations of LLMs

Jun 23, 2025

Abstract:The discourse around toxicity and LLMs in NLP largely revolves around detection tasks. This work shifts the focus to evaluating LLMs' reasoning about toxicity -- from their explanations that justify a stance -- to enhance their trustworthiness in downstream tasks. Despite extensive research on explainability, it is not straightforward to adopt existing methods to evaluate free-form toxicity explanation due to their over-reliance on input text perturbations, among other challenges. To account for these, we propose a novel, theoretically-grounded multi-dimensional criterion, Human-Aligned Faithfulness (HAF), that measures the extent to which LLMs' free-form toxicity explanations align with those of a rational human under ideal conditions. We develop six metrics, based on uncertainty quantification, to comprehensively evaluate HAF of LLMs' toxicity explanations with no human involvement, and highlight how "non-ideal" the explanations are. We conduct several experiments on three Llama models (of size up to 70B) and an 8B Ministral model on five diverse toxicity datasets. Our results show that while LLMs generate plausible explanations to simple prompts, their reasoning about toxicity breaks down when prompted about the nuanced relations between the complete set of reasons, the individual reasons, and their toxicity stances, resulting in inconsistent and nonsensical responses. We open-source our code and LLM-generated explanations at https://github.com/uofthcdslab/HAF.

BTPD: A Multilingual Hand-curated Dataset of Bengali Transnational Political Discourse Across Online Communities

Jun 07, 2025

Abstract:Understanding political discourse in online spaces is crucial for analyzing public opinion and ideological polarization. While social computing and computational linguistics have explored such discussions in English, such research efforts are significantly limited in major yet under-resourced languages like Bengali due to the unavailability of datasets. In this paper, we present a multilingual dataset of Bengali transnational political discourse (BTPD) collected from three online platforms, each representing distinct community structures and interaction dynamics. Besides describing how we hand-curated the dataset through community-informed keyword-based retrieval, this paper also provides a general overview of its topics and multilingual content.

Talking About the Assumption in the Room

Feb 18, 2025

Abstract:The reference to assumptions in how practitioners use or interact with machine learning (ML) systems is ubiquitous in HCI and responsible ML discourse. However, what remains unclear from prior works is the conceptualization of assumptions and how practitioners identify and handle assumptions throughout their workflows. This leads to confusion about what assumptions are and what needs to be done with them. We use the concept of an argument from Informal Logic, a branch of Philosophy, to offer a new perspective to understand and explicate the confusions surrounding assumptions. Through semi-structured interviews with 22 ML practitioners, we find what contributes most to these confusions is how independently assumptions are constructed, how reactively and reflectively they are handled, and how nebulously they are recorded. Our study brings the peripheral discussion of assumptions in ML to the center and presents recommendations for practitioners to better think about and work with assumptions.

Evaluating the Economic Implications of Using Machine Learning in Clinical Psychiatry

Nov 07, 2024

Abstract:With the growing interest in using AI and machine learning (ML) in medicine, there is a growing body of literature covering the application and ethics of using AI and ML in areas of medicine such as clinical psychiatry. The problem is that there is little literature covering the economic aspects associated with using ML in clinical psychiatry. This study addresses this gap by specifically studying the economic implications of using ML in clinical psychiatry. In this paper, we evaluate the economic implications of using ML in clinical psychiatry using three problem-oriented case studies, literature on economics, socioeconomic and medical AI, and two types of health economic evaluations. In addition, we provide details on fairness, legal, ethical and other considerations for ML in clinical psychiatry.

On the Reliability of Large Language Models to Misinformed and Demographically-Informed Prompts

Oct 06, 2024

Abstract:We investigate and observe the behaviour and performance of Large Language Model (LLM)-backed chatbots in addressing misinformed prompts and questions with demographic information within the domains of Climate Change and Mental Health. Through a combination of quantitative and qualitative methods, we assess the chatbots' ability to discern the veracity of statements, their adherence to facts, and the presence of bias or misinformation in their responses. Our quantitative analysis using True/False questions reveals that these chatbots can be relied on to give the right answers to these close-ended questions. However, the qualitative insights, gathered from domain experts, show that there are still concerns regarding privacy, ethical implications, and the necessity for chatbots to direct users to professional services. We conclude that while these chatbots hold significant promise, their deployment in sensitive areas necessitates careful consideration, ethical oversight, and rigorous refinement to ensure they serve as a beneficial augmentation to human expertise rather than an autonomous solution.

Towards a New Participatory Approach for Designing Artificial Intelligence and Data-Driven Technologies

Mar 30, 2021

Abstract:With there being many technical and ethical issues with artificial intelligence (AI) that involve marginalized communities, there is a growing interest in design methods used with marginalized people that may be transferable to the design of AI technologies. Participatory design (PD) is a design method that is often used with marginalized communities for the design of social development, policy, IT and other matters and solutions. However, there are issues with the current PD, raising concerns when it is applied to the design of technologies, including AI technologies. This paper argues for the use of PD for the design of AI technologies, and introduces and proposes a new PD, which we call agile participatory design, that not only can be used for the design of AI and data-driven technologies, but also overcomes issues surrounding current PD and its use in the design of such technologies.

Towards Automated Sexual Violence Report Tracking

Nov 16, 2019

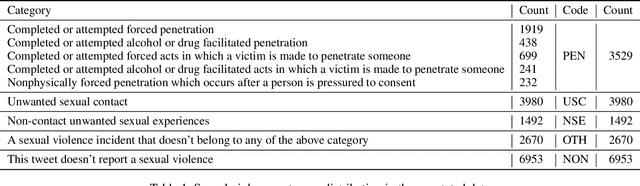

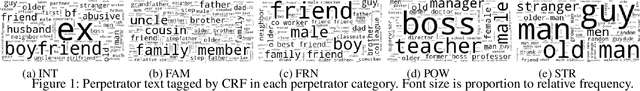

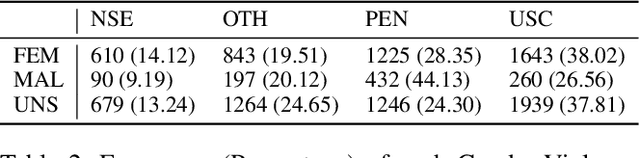

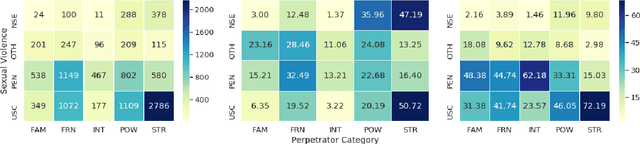

Abstract:Tracking sexual violence is a challenging task. In this paper, we present a supervised learning-based automated sexual violence report tracking model that is more scalable and reliable than its crowdsourced counterparts. We define the sexual violence report tracking problem by considering victim and perpetrator contexts and the nature of the violence. We find that our model could identify sexual violence reports with a precision and recall of 80.4% and 83.4%, respectively. Moreover, we also applied the model during and after the #MeToo movement. Several interesting findings are discovered which are not easily identifiable from a shallow analysis.
