Sung-Jin Kim

Diversify and Conquer: Open-set Disagreement for Robust Semi-supervised Learning with Outliers

May 30, 2025

Abstract:Conventional semi-supervised learning (SSL) ideally assumes that labeled and unlabeled data share an identical class distribution. In practice, however, this assumption is easily violated, as unlabeled data often include unknown-class data, i.e., outliers. The outliers are treated as noise, considerably degrading the performance of SSL models. To address this drawback, we propose a novel framework, Diversify and Conquer (DAC), to enhance SSL robustness in the context of open-set semi-supervised learning. In particular, we note that existing open-set SSL methods rely on prediction discrepancies between inliers and outliers from a single model trained on labeled data. This approach can easily fail when the labeled data is insufficient, leading to performance that is worse than that of naive SSL methods that do not account for outliers. In contrast, our approach exploits prediction disagreements among multiple models that are differently biased towards the unlabeled distribution. By leveraging the discrepancies arising from training on unlabeled data, our method enables robust outlier detection even when the labeled data is underspecified. Our key contribution is constructing a collection of differently biased models through a single training process. By encouraging divergent heads to be differently biased towards outliers while making consistent predictions for inliers, we exploit the disagreement among these heads as a measure to identify unknown concepts. Our code is available at https://github.com/heejokong/DivCon.
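The idea of using disagreement among heads as an outlier measure can be illustrated with a minimal sketch. The abstract does not specify the disagreement measure, so the mean pairwise L1 distance used here, the function name `disagreement_score`, and the array layout are all assumptions made for illustration, not the DAC implementation.

```python
import numpy as np


def disagreement_score(head_probs: np.ndarray) -> np.ndarray:
    """Mean pairwise L1 distance between the predictions of several heads.

    head_probs: array of shape (num_heads, num_samples, num_classes) holding
    softmax outputs, with num_heads >= 2. The L1 distance is an assumed
    choice of measure, used only to illustrate the idea.
    Returns an array of shape (num_samples,); larger means more disagreement.
    """
    num_heads = head_probs.shape[0]
    total = np.zeros(head_probs.shape[1])
    pairs = 0
    for i in range(num_heads):
        for j in range(i + 1, num_heads):
            # L1 distance between the class distributions of heads i and j.
            total += np.abs(head_probs[i] - head_probs[j]).sum(axis=-1)
            pairs += 1
    return total / pairs
```

Under this sketch, samples on which the heads agree score near 0, while samples on which they predict different classes score up to 2; thresholding the score would flag likely outliers.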

Classification of Distraction Levels Using Hybrid Deep Neural Networks From EEG Signals

Dec 13, 2022

Abstract:Non-invasive brain-computer interface technology has been developed for detecting human mental states with high performance. Detection of pilots' mental states is particularly critical because abnormal mental states could cause catastrophic accidents. In this study, we demonstrated the feasibility of classifying distraction levels (namely, normal state, low distraction, and high distraction) by applying a deep learning method. To the best of our knowledge, this study is the first attempt to classify distraction levels in a flight environment. We proposed a model for classifying distraction levels. A total of ten pilots conducted the experiment in a simulated flight environment. The grand-average accuracy was 0.8437 for classifying distraction levels across all subjects. Hence, we believe that this work will contribute significantly to autonomous driving or flight based on artificial intelligence technology in the future.

CropCat: Data Augmentation for Smoothing the Feature Distribution of EEG Signals

Dec 13, 2022

Abstract:Brain-computer interface (BCI) is a communication system between humans and computers reflecting human intention without using a physical control device. Since deep learning is robust in extracting features from data, research on decoding electroencephalograms by applying deep learning has progressed in the BCI domain. However, the application of deep learning in the BCI domain has issues with a lack of data and overconfidence. To solve these issues, we proposed a novel data augmentation method, CropCat. CropCat consists of two versions, CropCat-spatial and CropCat-temporal. The method crops data that have different labels along the spatial or temporal axis and then concatenates the cropped segments. In addition, we adjusted the label based on the ratio of the cropped length. As a result, the data generated by our proposed method helped sharpen the ambiguous decision boundary caused by a lack of data. Due to the effectiveness of the proposed method, the performance of four EEG signal decoding models improved on two public motor imagery datasets compared to when the proposed method was not applied. Hence, we demonstrate that data generated by CropCat smooths the feature distribution of EEG signals when training the model.
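The crop-and-concatenate step with a ratio-based label can be sketched for the temporal case as follows. The abstract does not say where the crops are taken, so the single cut point, the function name `cropcat_temporal`, and the one-hot label format are assumptions for illustration only.

```python
import numpy as np


def cropcat_temporal(x_a, y_a, x_b, y_b, cut):
    """Concatenate the start of trial A with the end of trial B along time.

    x_a, x_b: arrays of shape (channels, time) with identical shapes.
    y_a, y_b: one-hot label vectors.
    cut: number of time samples taken from trial A (assumed single cut point).
    Returns the mixed trial and a soft label weighted by the cropped lengths.
    """
    n_time = x_a.shape[-1]
    # Keep the first `cut` samples of A and the remaining samples of B.
    mixed_x = np.concatenate([x_a[..., :cut], x_b[..., cut:]], axis=-1)
    # Label weights follow the fraction of the trial contributed by each source.
    ratio = cut / n_time
    mixed_y = ratio * y_a + (1.0 - ratio) * y_b
    return mixed_x, mixed_y
```

A spatial variant would presumably cut along the channel axis instead of the time axis, with the label ratio based on the number of channels taken from each trial.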

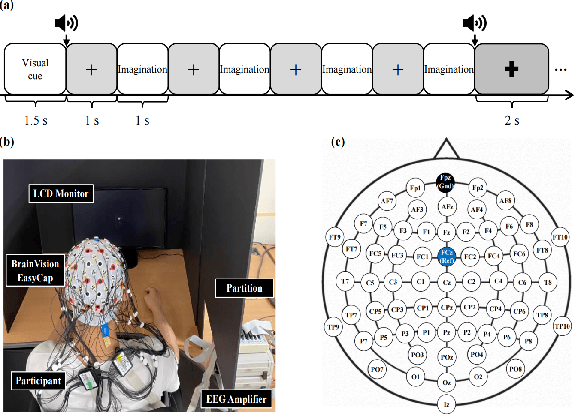

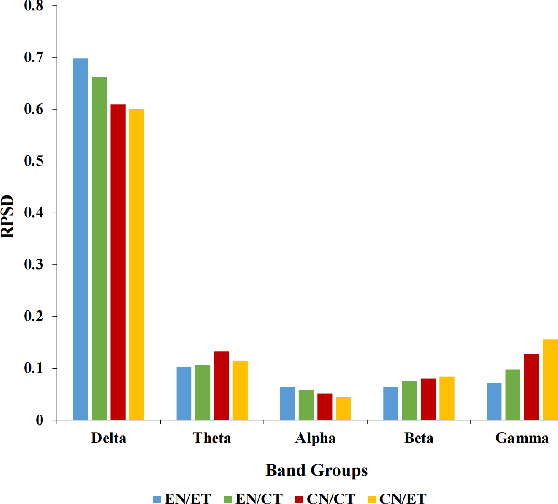

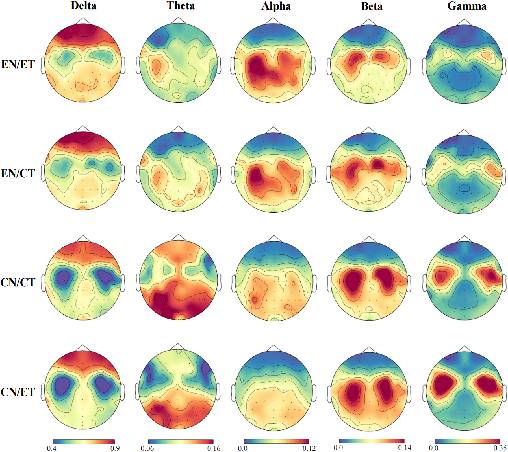

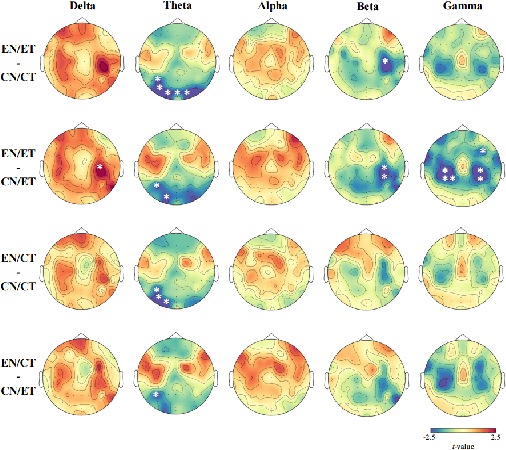

Decoding Neural Correlation of Language-Specific Imagined Speech using EEG Signals

Apr 15, 2022

Abstract:Speech impairments due to cerebral lesions and degenerative disorders can be devastating. For people with severe speech deficits, imagined speech in the brain-computer interface has been a promising hope for reconstructing the neural signals of speech production. However, studies in the EEG-based imagined speech domain still have some limitations due to high variability in spatial and temporal information and a low signal-to-noise ratio. In this paper, we investigated the neural signals of two groups of native speakers performing two tasks in different languages, English and Chinese. Our assumption was that English, a non-tonal and phonogram-based language, would have spectral differences in neural computation compared to Chinese, a tonal and ideogram-based language. The results showed a significant difference in the relative power spectral density between English and Chinese in specific frequency band groups. Also, the spatial evaluation of Chinese native speakers in the theta band was distinctive during the imagination task. Hence, this paper suggests the key spectral and spatial information of word imagination in a specific language for decoding the neural signals of speech.
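Relative power spectral density in a frequency band can be computed as the power in that band divided by the total power. The sketch below uses a plain FFT periodogram; the abstract does not describe the estimator or band edges used in the paper, so the function name `relative_band_power`, the periodogram, and the 4 to 8 Hz theta range in the usage note are assumptions.

```python
import numpy as np


def relative_band_power(signal, fs, band):
    """Fraction of total spectral power that falls inside a frequency band.

    signal: array whose last axis is time.
    fs: sampling rate in Hz.
    band: (low, high) band edges in Hz; low is inclusive, high is exclusive.
    Uses an unwindowed one-sided periodogram, a simplifying assumption.
    """
    freqs = np.fft.rfftfreq(signal.shape[-1], d=1.0 / fs)
    power = np.abs(np.fft.rfft(signal, axis=-1)) ** 2
    low, high = band
    in_band = (freqs >= low) & (freqs < high)
    return power[..., in_band].sum(axis=-1) / power.sum(axis=-1)
```

For example, `relative_band_power(eeg, fs=128, band=(4, 8))` would give the theta-band share per channel for a hypothetical array `eeg` of shape (channels, time).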

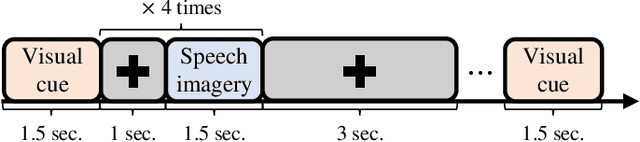

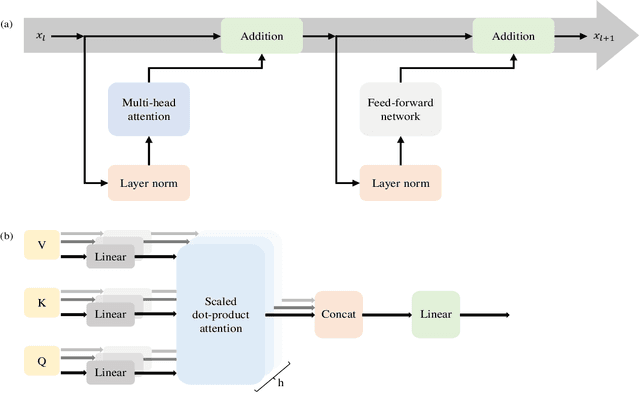

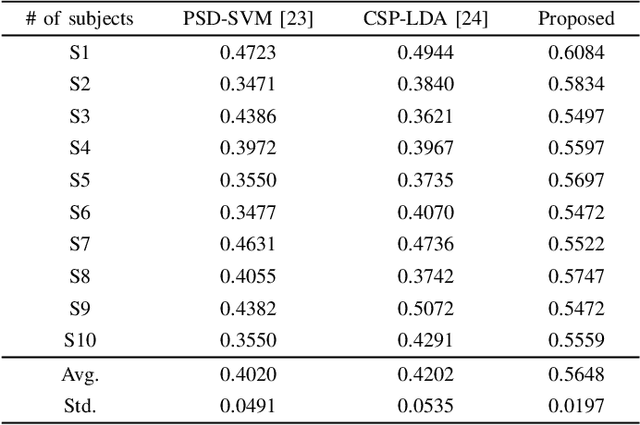

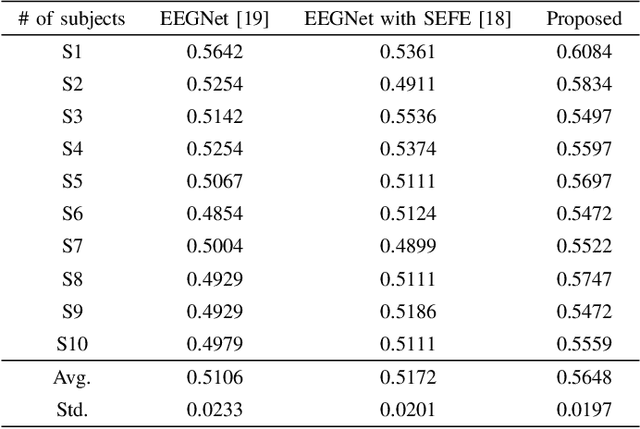

Decoding High-level Imagined Speech using Attention-based Deep Neural Networks

Dec 13, 2021

Abstract:Brain-computer interface (BCI) is a technology that enables communication between humans and devices by reflecting the status and intentions of humans. When conducting imagined speech, the users imagine the pronunciation as if actually speaking. In the case of decoding imagined speech-based EEG signals, complex tasks can be conducted more intuitively, but decoding performance is lower than that of other BCI paradigms. We modified our previous model for decoding imagined speech-based EEG signals. Ten subjects participated in the experiment. The average accuracy of our proposed method was 0.5648 for classifying four words. In other words, our proposed method has significant strength in learning local features. Hence, we demonstrated the feasibility of decoding imagined speech-based EEG signals with robust performance.

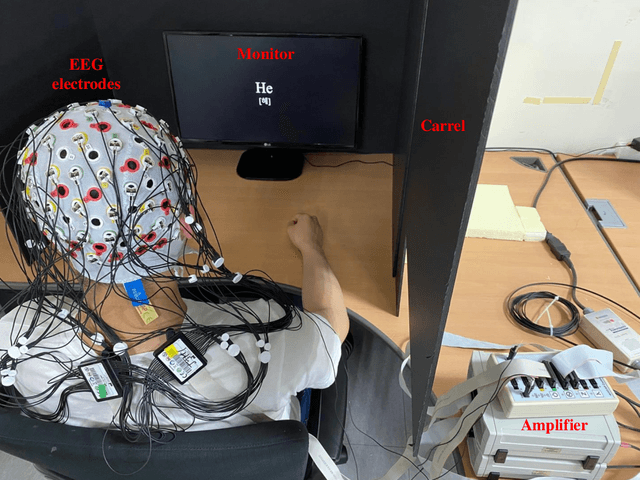

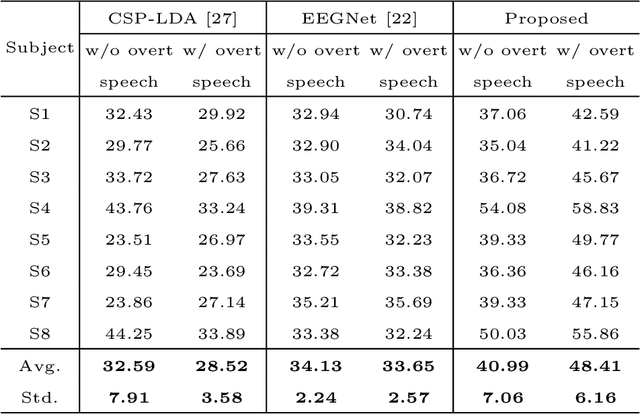

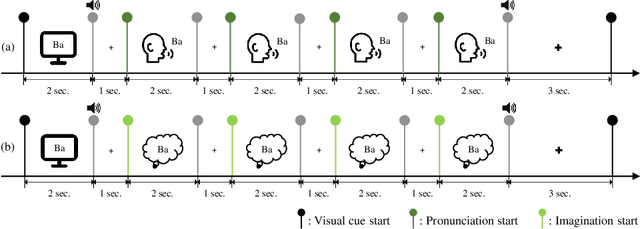

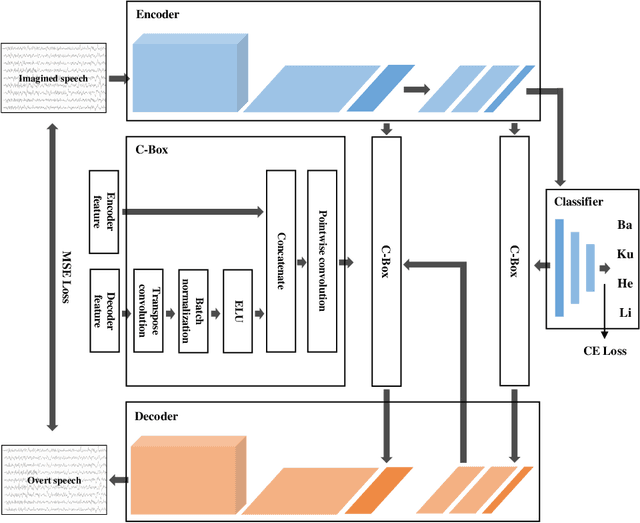

DAL: Feature Learning from Overt Speech to Decode Imagined Speech-based EEG Signals with Convolutional Autoencoder

Jul 15, 2021

Abstract:Brain-computer interface (BCI) is one of the tools that enable communication between humans and devices by reflecting the intention and status of humans. With the development of artificial intelligence, interest in communication between humans and drones using electroencephalogram (EEG) has increased. In particular, controlling drone swarms (e.g., their direction or formation) has many advantages compared with controlling a single drone. Imagined speech is one of the endogenous BCI paradigms, which can identify the intentions of users. When conducting imagined speech, the users imagine the pronunciation as if actually speaking. In contrast, overt speech is a task in which the users directly pronounce the words. When controlling drone swarms using imagined speech, complex commands can be delivered more intuitively, but decoding performance is lower than that of other endogenous BCI paradigms. We proposed the Deep-autoleaner (DAL) to learn EEG features of overt speech for imagined speech-based EEG signal classification. To the best of our knowledge, this study is the first attempt to use EEG features of overt speech to decode imagined speech-based EEG signals with an autoencoder. A total of eight subjects participated in the experiment. When classifying four words, the average accuracy of the DAL was 48.41%. In addition, comparing the performance without and with EEG features of overt speech, there was a performance improvement of 7.42% when including EEG features of overt speech. Hence, we demonstrated that EEG features of overt speech could improve the decoding performance of imagined speech.

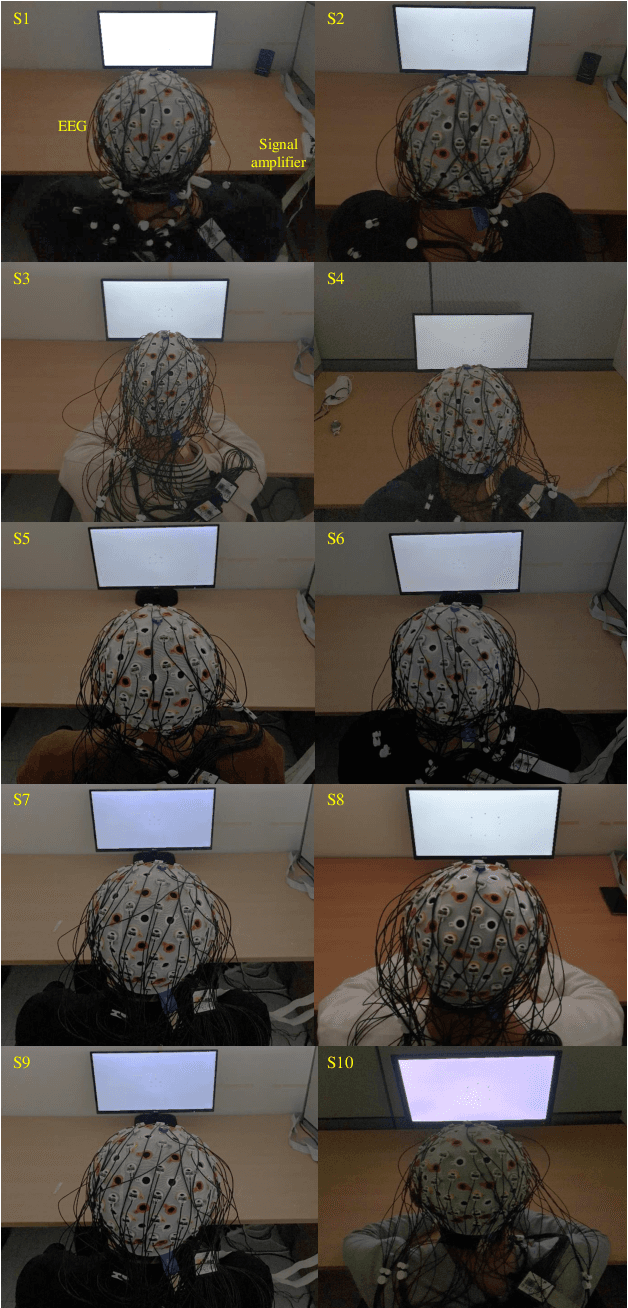

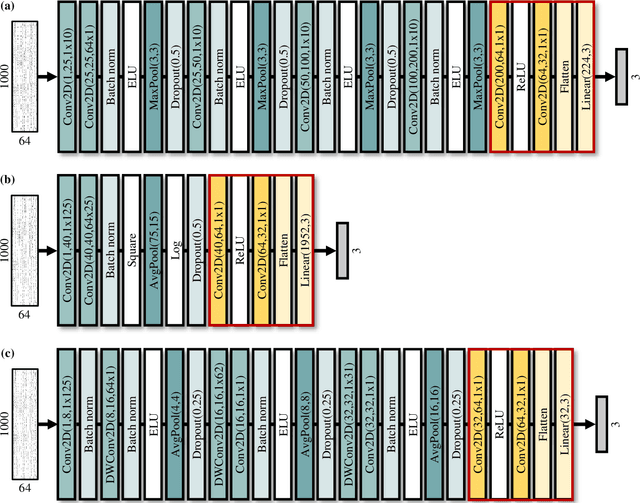

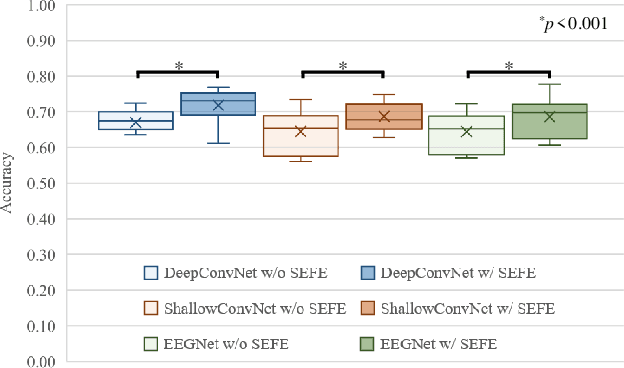

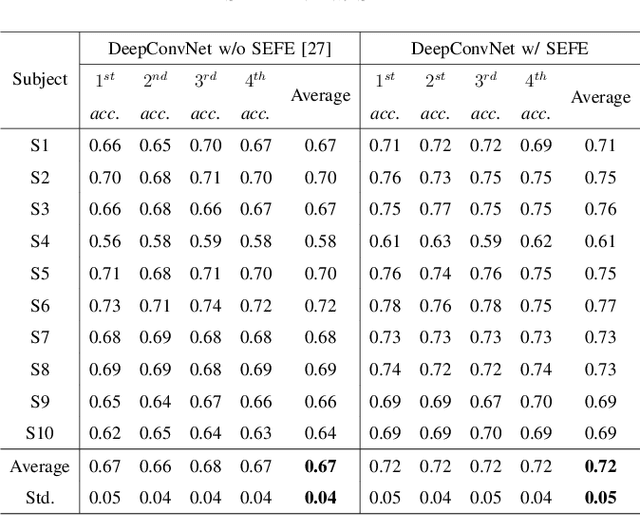

Subject-Independent Brain-Computer Interface for Decoding High-Level Visual Imagery Tasks

Jun 08, 2021

Abstract:Brain-computer interface (BCI) is used for communication between humans and devices by recognizing the status and intention of humans. Communication between humans and a drone using electroencephalogram (EEG) signals is one of the most challenging issues in the BCI domain. In particular, the control of drone swarms (their direction and formation) has more advantages compared to the control of a single drone. In the visual imagery (VI) paradigm, subjects visually imagine specific objects or scenes. Reduction of the variability among EEG signals of subjects is essential for practical BCI-based systems. In this study, we proposed the subepoch-wise feature encoder (SEFE) to improve performance in subject-independent tasks by using the VI dataset. This study is the first attempt to demonstrate the possibility of generalization among subjects in the VI-based BCI. We used leave-one-subject-out cross-validation for evaluating performance. We obtained higher performance when including our proposed module than when excluding it. The DeepConvNet with SEFE showed the highest performance of 0.72 among six different decoding models. Hence, we demonstrated the feasibility of decoding the VI dataset in the subject-independent task with robust performance by using our proposed module.
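Leave-one-subject-out cross-validation holds out every trial of one subject for testing and trains on the trials of all remaining subjects, repeating once per subject. A minimal sketch of the split is given below; the function name `leave_one_subject_out` and the per-trial `subject_ids` array are illustrative assumptions, not part of the paper's code.

```python
import numpy as np


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    subject_ids: one entry per trial, giving the subject that trial came from.
    """
    subject_ids = np.asarray(subject_ids)
    for held_out in np.unique(subject_ids):
        # All trials of the held-out subject form the test set.
        test_idx = np.flatnonzero(subject_ids == held_out)
        # Trials from every other subject form the training set.
        train_idx = np.flatnonzero(subject_ids != held_out)
        yield held_out, train_idx, test_idx
```

Because no trial from the test subject is seen during training, the averaged fold accuracy reflects generalization to unseen subjects rather than to unseen trials of known subjects.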

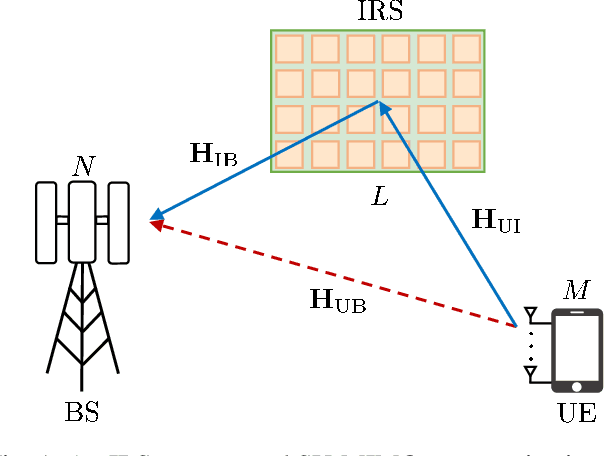

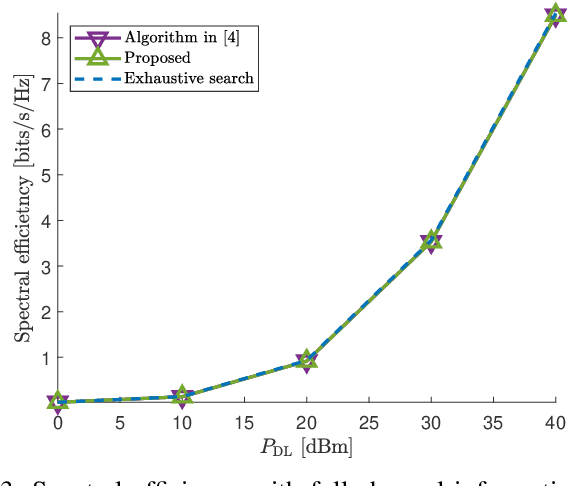

Hybrid Beamforming for Intelligent Reflecting Surface Aided Millimeter Wave MIMO Systems

May 28, 2021

Abstract:While communication systems that employ millimeter wave (mmWave) frequency bands can support extremely high data rates, they must use large antenna arrays in order to overcome the severe propagation loss of mmWave signals. As the cost of traditional fully-digital beamforming at baseband increases rapidly with the number of antennas, hybrid beamforming that requires only a small number of radio frequency (RF) chains has been considered as a key enabling technology for mmWave communications. Intelligent reflecting surface (IRS) is another innovative technology that has been proposed as an integral element of future communication systems, establishing the favorable propagation environment in a timely manner through the use of low-cost passive reflecting elements. In this paper, we study IRS-aided mmWave multiple-input multiple-output (MIMO) systems with hybrid beamforming architectures. We first propose the joint design of IRS reflection pattern and hybrid beamformer for narrowband MIMO systems. Then, by exploiting the sparsity of frequency-selective mmWave channels in the angular domain, we generalize the proposed joint design to broadband MIMO systems with orthogonal frequency division multiplexing (OFDM) modulation. Simulation results demonstrate that the proposed joint designs can significantly enhance the spectral efficiency of the systems of interest and achieve superior performance over the existing designs.

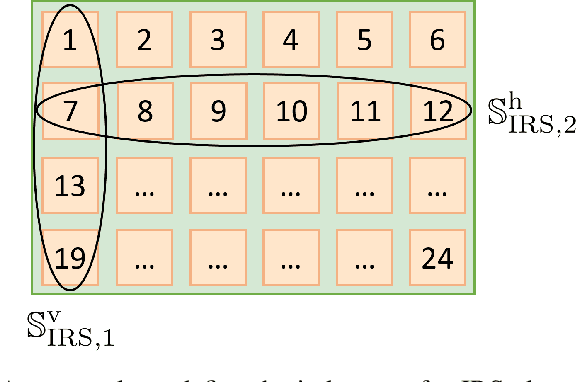

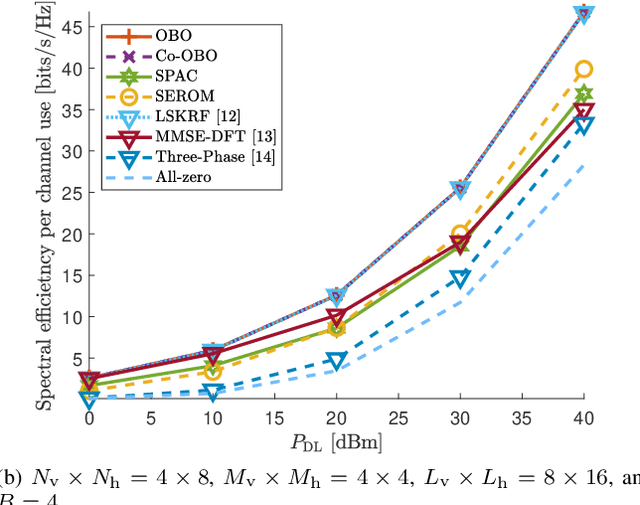

Practical Channel Estimation and Phase Shift Design for Intelligent Reflecting Surface Empowered MIMO Systems

Apr 29, 2021

Abstract:In this paper, channel estimation techniques and phase shift design for intelligent reflecting surface (IRS)-empowered single-user multiple-input multiple-output (SU-MIMO) systems are proposed. Among four channel estimation techniques developed in the paper, the two novel ones, single-path approximated channel (SPAC) and selective emphasis on rank-one matrices (SEROM), have low training overhead to enable practical IRS-empowered SU-MIMO systems. SPAC is mainly based on parameter estimation by approximating IRS-related channels as dominant single-path channels. SEROM exploits IRS phase shifts as well as training signals for channel estimation and easily adjusts its training overhead. A closed-form solution for IRS phase shift design is also developed to maximize spectral efficiency where the solution only requires basic linear operations. Numerical results show that SPAC and SEROM combined with the proposed IRS phase shift design achieve high spectral efficiency even with low training overhead compared to existing methods.
