Seong-Whan Lee

Semantic Prioritization in Visual Counterfactual Explanations with Weighted Segmentation and Auto-Adaptive Region Selection

Nov 17, 2025

Abstract:In the domain of non-generative visual counterfactual explanations (CE), traditional techniques frequently involve the substitution of sections within a query image with corresponding sections from distractor images. Such methods have historically overlooked the semantic relevance of the replacement regions to the target object, thereby impairing the model's interpretability and hindering the editing workflow. Addressing these challenges, the present study introduces an innovative methodology named Weighted Semantic Map with Auto-adaptive Candidate Editing Network (WSAE-Net). It is characterized by two significant advancements: the determination of a weighted semantic map and the auto-adaptive candidate editing sequence. First, the generation of the weighted semantic map is designed to maximize the reduction of non-semantic feature units that need to be computed, thereby optimizing computational efficiency. Second, the auto-adaptive candidate editing sequences are designed to determine the optimal computational order among the feature units to be processed, thereby ensuring the efficient generation of counterfactuals while maintaining the semantic relevance of the replacement feature units to the target object. Through comprehensive experimentation, our methodology demonstrates superior performance, contributing to a more lucid and in-depth understanding of visual counterfactual explanations.

ChemFixer: Correcting Invalid Molecules to Unlock Previously Unseen Chemical Space

Nov 14, 2025

Abstract:Deep learning-based molecular generation models have shown great potential in efficiently exploring vast chemical spaces by generating potential drug candidates with desired properties. However, these models often produce chemically invalid molecules, which limits the usable scope of the learned chemical space and poses significant challenges for practical applications. To address this issue, we propose ChemFixer, a framework designed to correct invalid molecules into valid ones. ChemFixer is built on a transformer architecture, pre-trained using masking techniques, and fine-tuned on a large-scale dataset of valid/invalid molecular pairs that we constructed. Through comprehensive evaluations across diverse generative models, ChemFixer improved molecular validity while effectively preserving the chemical and biological distributional properties of the original outputs. This indicates that ChemFixer can recover molecules that could not be previously generated, thereby expanding the diversity of potential drug candidates. Furthermore, ChemFixer was effectively applied to a drug-target interaction (DTI) prediction task using limited data, improving the validity of generated ligands and discovering promising ligand-protein pairs. These results suggest that ChemFixer is not only effective in data-limited scenarios, but also extensible to a wide range of downstream tasks. Taken together, ChemFixer shows promise as a practical tool for various stages of deep learning-based drug discovery, enhancing molecular validity and expanding accessible chemical space.

* This is the author's preprint version of the article accepted to IEEE JBHI. Final published version: https://doi.org/10.1109/JBHI.2025.3593825. High-quality PDF (publisher version): https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=11106678. Note: Some figures may appear distorted due to arXiv's TeXLive rendering

Text-guided Weakly Supervised Framework for Dynamic Facial Expression Recognition

Nov 14, 2025

Abstract:Dynamic facial expression recognition (DFER) aims to identify emotional states by modeling the temporal changes in facial movements across video sequences. A key challenge in DFER is the many-to-one labeling problem, where a video composed of numerous frames is assigned a single emotion label. A common strategy to mitigate this issue is to formulate DFER as a Multiple Instance Learning (MIL) problem. However, MIL-based approaches inherently suffer from the visual diversity of emotional expressions and the complexity of temporal dynamics. To address this challenge, we propose TG-DFER, a text-guided weakly supervised framework that enhances MIL-based DFER by incorporating semantic guidance and coherent temporal modeling. A vision-language pre-trained (VLP) model is integrated to provide semantic guidance through fine-grained textual descriptions of emotional context. Furthermore, we introduce visual prompts, which align enriched textual emotion labels with visual instance features, enabling fine-grained reasoning and frame-level relevance estimation. In addition, a multi-grained temporal network is designed to jointly capture short-term facial dynamics and long-range emotional flow, ensuring coherent affective understanding across time. Extensive results demonstrate that TG-DFER achieves improved generalization, interpretability, and temporal sensitivity under weak supervision.
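To make the many-to-one labeling problem concrete, the following is a minimal sketch of generic MIL pooling, not the TG-DFER model itself. It assumes each frame already has per-class logits and a scalar relevance score; the function names `softmax` and `mil_video_scores` and both inputs are hypothetical and chosen only for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def mil_video_scores(frame_logits, relevance):
    """Pool per-frame class logits into one video-level score per class.

    frame_logits: list of frames, each a list with one logit per class.
    relevance:    one unnormalized relevance score per frame (assumed given).
    """
    weights = softmax(relevance)  # frame-level relevance, sums to 1
    num_classes = len(frame_logits[0])
    return [
        sum(w * frame[c] for w, frame in zip(weights, frame_logits))
        for c in range(num_classes)
    ]
```

With equal relevance scores this reduces to mean pooling over frames; sharply peaked relevance scores let a few expressive frames dominate the video-level prediction.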

Meta-cognitive Multi-scale Hierarchical Reasoning for Motor Imagery Decoding

Nov 11, 2025

Abstract:Brain-computer interface (BCI) aims to decode motor intent from noninvasive neural signals to enable control of external devices, but practical deployment remains limited by noise and variability in motor imagery (MI)-based electroencephalogram (EEG) signals. This work investigates a hierarchical and meta-cognitive decoding framework for four-class MI classification. We introduce a multi-scale hierarchical signal processing module that reorganizes backbone features into temporal multi-scale representations, together with an introspective uncertainty estimation module that assigns per-cycle reliability scores and guides iterative refinement. We instantiate this framework on three standard EEG backbones (EEGNet, ShallowConvNet, and DeepConvNet) and evaluate four-class MI decoding using the BCI Competition IV-2a dataset under a subject-independent setting. Across all backbones, the proposed components improve average classification accuracy and reduce inter-subject variance compared to the corresponding baselines, indicating increased robustness to subject heterogeneity and noisy trials. These results suggest that combining hierarchical multi-scale processing with introspective confidence estimation can enhance the reliability of MI-based BCI systems.

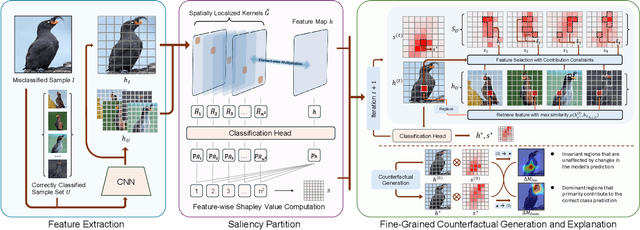

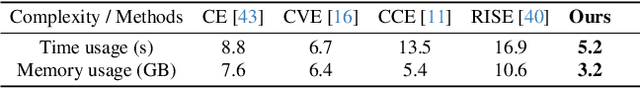

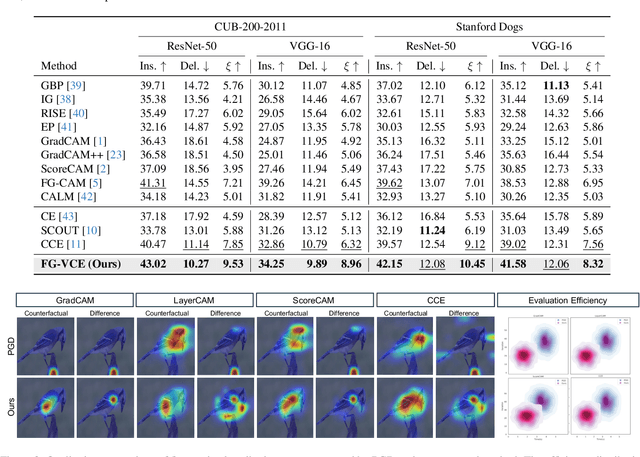

Towards Fine-Grained Interpretability: Counterfactual Explanations for Misclassification with Saliency Partition

Nov 11, 2025

Abstract:Attribution-based explanation techniques capture key patterns to enhance visual interpretability; however, these patterns often lack the granularity needed for insight in fine-grained tasks, particularly in cases of model misclassification, where explanations may be insufficiently detailed. To address this limitation, we propose a fine-grained counterfactual explanation framework that generates both object-level and part-level interpretability, addressing two fundamental questions: (1) which fine-grained features contribute to model misclassification, and (2) where dominant local features influence counterfactual adjustments. Our approach yields explainable counterfactuals in a non-generative manner by quantifying similarity and weighting component contributions within regions of interest between correctly classified and misclassified samples. Furthermore, we introduce a saliency partition module grounded in Shapley value contributions, isolating features with region-specific relevance. Extensive experiments demonstrate the superiority of our approach in capturing more granular, intuitively meaningful regions, surpassing fine-grained methods.
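As background for the Shapley value contributions mentioned above, here is a minimal sketch of the exact Shapley computation over a small set of players (for example, image regions). It is not the paper's saliency partition module; `shapley_values`, the region names, and the toy value function are hypothetical.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values by averaging marginal contributions
    over every ordering of the players.

    players: list of hashable player identifiers (e.g. region names).
    value:   function mapping a frozenset of players to a float.
    """
    contributions = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal gain from adding p to the current coalition.
            contributions[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: total / len(orderings) for p, total in contributions.items()}


# Toy additive game: each region contributes a fixed amount.
weights = {"head": 0.6, "wing": 0.3, "tail": 0.1}
scores = shapley_values(list(weights), lambda s: sum(weights[p] for p in s))
```

The enumeration grows factorially with the number of players, so practical systems usually rely on sampling or other approximations.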

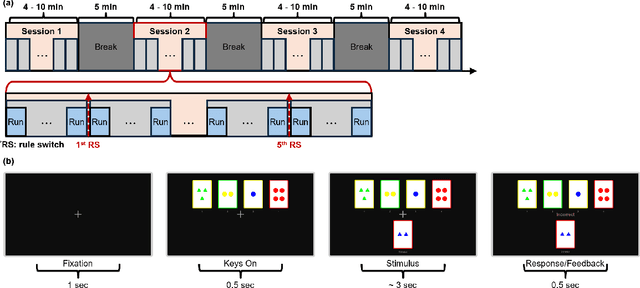

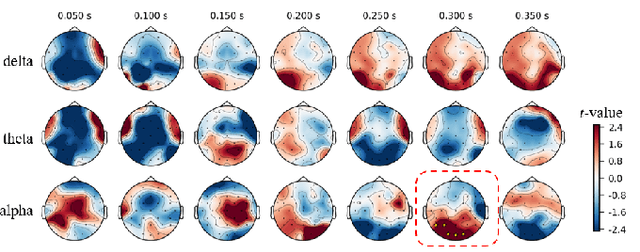

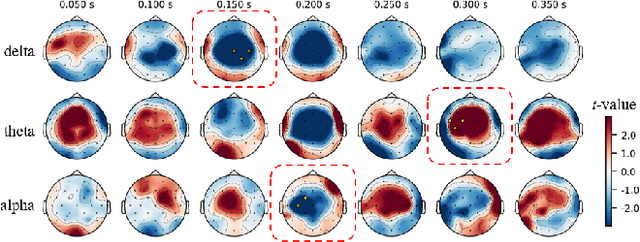

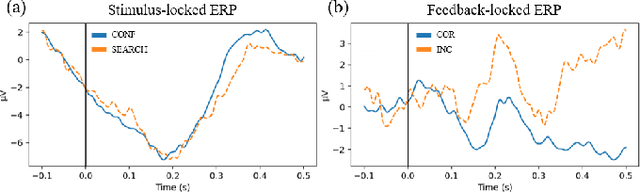

Neurophysiological Characteristics of Adaptive Reasoning for Creative Problem-Solving Strategy

Nov 11, 2025

Abstract:Adaptive reasoning enables humans to flexibly adjust inference strategies when environmental rules or contexts change, yet its underlying neural dynamics remain unclear. This study investigated the neurophysiological mechanisms of adaptive reasoning using a card-sorting paradigm combined with electroencephalography and compared human performance with that of a multimodal large language model. Stimulus- and feedback-locked analyses revealed coordinated delta-theta-alpha dynamics: early delta-theta activity reflected exploratory monitoring and rule inference, whereas occipital alpha engagement indicated confirmatory stabilization of attention after successful rule identification. In contrast, the multimodal large language model exhibited only short-term feedback-driven adjustments without hierarchical rule abstraction or genuine adaptive reasoning. These findings identify the neural signatures of human adaptive reasoning and highlight the need for brain-inspired artificial intelligence that incorporates oscillatory feedback coordination for true context-sensitive adaptation.

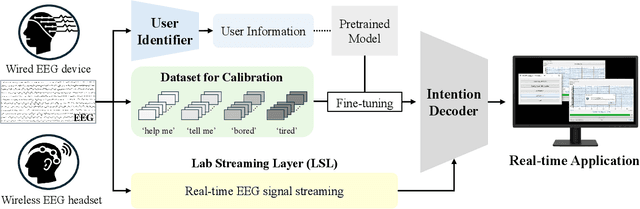

Toward Practical BCI: A Real-time Wireless Imagined Speech EEG Decoding System

Nov 11, 2025

Abstract:Brain-computer interface (BCI) research, while promising, has largely been confined to static and fixed environments, limiting real-world applicability. To move towards practical BCI, we introduce a real-time wireless imagined speech electroencephalogram (EEG) decoding system designed for flexibility and everyday use. Our framework focuses on practicality, demonstrating extensibility beyond wired EEG devices to portable, wireless hardware. A user identification module recognizes the operator and provides a personalized, user-specific service. To achieve seamless, real-time operation, we utilize the lab streaming layer to manage the continuous streaming of live EEG signals to the personalized decoder. This end-to-end pipeline enables a functional real-time application capable of classifying user commands from imagined speech EEG signals, achieving an overall 4-class accuracy of 62.00% on a wired device and 46.67% on a portable wireless headset. This paper demonstrates a significant step towards truly practical and accessible BCI technology, establishing a clear direction for future research in robust, practical, and personalized neural interfaces.

Enhancing Spatio-Temporal Zero-shot Action Recognition with Language-driven Description Attributes

Nov 03, 2025

Abstract:Vision-Language Models (VLMs) have demonstrated impressive capabilities in zero-shot action recognition by learning to associate video embeddings with class embeddings. However, a significant challenge arises when relying solely on action classes to provide semantic context, particularly due to the presence of multi-semantic words, which can introduce ambiguity in understanding the intended concepts of actions. To address this issue, we propose an innovative approach that harnesses web-crawled descriptions, leveraging a large-language model to extract relevant keywords. This method reduces the need for human annotators and eliminates the laborious manual process of attribute data creation. Additionally, we introduce a spatio-temporal interaction module designed to focus on objects and action units, facilitating alignment between description attributes and video content. In our zero-shot experiments, our model achieves impressive results, attaining accuracies of 81.0%, 53.1%, and 68.9% on UCF-101, HMDB-51, and Kinetics-600, respectively, underscoring the model's adaptability and effectiveness across various downstream tasks.
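The association of video embeddings with class embeddings can be illustrated with a minimal sketch of zero-shot prediction by cosine similarity. This is the generic matching step only, not the proposed spatio-temporal interaction module; the embeddings are hand-written toy vectors, and `cosine` and `zero_shot_predict` are hypothetical names.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_predict(video_emb, class_embs):
    """Return the class whose text embedding is closest to the video embedding.

    video_emb:  list of floats (assumed output of a video encoder).
    class_embs: dict mapping class name to its text embedding.
    """
    return max(class_embs, key=lambda name: cosine(video_emb, class_embs[name]))


# Toy embeddings; a real system would obtain these from trained encoders.
class_embs = {"archery": [1.0, 0.0], "bowling": [0.0, 1.0]}
prediction = zero_shot_predict([0.9, 0.1], class_embs)
```

Because no class-specific training is involved, richer class-side text such as description keywords changes only the contents of `class_embs`, which is where ambiguity from multi-semantic class names can be reduced.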

Reconstructing Unseen Sentences from Speech-related Biosignals for Open-vocabulary Neural Communication

Oct 31, 2025

Abstract:Brain-to-speech (BTS) systems represent a groundbreaking approach to human communication by enabling the direct transformation of neural activity into linguistic expressions. While recent non-invasive BTS studies have largely focused on decoding predefined words or sentences, achieving open-vocabulary neural communication comparable to natural human interaction requires decoding unconstrained speech. Additionally, effectively integrating diverse signals derived from speech is crucial for developing personalized and adaptive neural communication and rehabilitation solutions for patients. This study investigates the potential of speech synthesis for previously unseen sentences across various speech modes by leveraging phoneme-level information extracted from high-density electroencephalography (EEG) signals, both independently and in conjunction with electromyography (EMG) signals. Furthermore, we examine the properties affecting phoneme decoding accuracy during sentence reconstruction and offer neurophysiological insights to further enhance EEG decoding for more effective neural communication solutions. Our findings underscore the feasibility of biosignal-based sentence-level speech synthesis for reconstructing unseen sentences, highlighting a significant step toward developing open-vocabulary neural communication systems adapted to diverse patient needs and conditions. Additionally, this study provides meaningful insights into the development of communication and rehabilitation solutions utilizing EEG-based decoding technologies.

Uncertainty-Aware Cross-Modal Knowledge Distillation with Prototype Learning for Multimodal Brain-Computer Interfaces

Jul 17, 2025

Abstract:Electroencephalography (EEG) is a fundamental modality for cognitive state monitoring in brain-computer interfaces (BCIs). However, it is highly susceptible to intrinsic signal errors and human-induced labeling errors, which lead to label noise and ultimately degrade model performance. To enhance EEG learning, multimodal knowledge distillation (KD) has been explored to transfer knowledge from visual models with rich representations to EEG-based models. Nevertheless, KD faces two key challenges: modality gap and soft label misalignment. The former arises from the heterogeneous nature of EEG and visual feature spaces, while the latter stems from label inconsistencies that create discrepancies between ground truth labels and distillation targets. This paper addresses semantic uncertainty caused by ambiguous features and weakly defined labels. We propose a novel cross-modal knowledge distillation framework that mitigates both modality and label inconsistencies. It aligns feature semantics through a prototype-based similarity module and introduces a task-specific distillation head to resolve label-induced inconsistency in supervision. Experimental results demonstrate that our approach improves EEG-based emotion regression and classification performance, outperforming both unimodal and multimodal baselines on a public multimodal dataset. These findings highlight the potential of our framework for BCI applications.
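For readers unfamiliar with soft-label distillation, the following is a minimal sketch of the standard temperature-scaled KD loss between a teacher (for example, a visual model) and a student (for example, an EEG model). It is the generic formulation, not the prototype-based module or task-specific distillation head proposed in the paper; `kd_loss` and the temperature default are illustrative assumptions.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions,
    scaled by temperature**2 as is common for distillation losses."""
    p = softmax([t / temperature for t in teacher_logits])  # soft targets
    q = softmax([s / temperature for s in student_logits])  # student output
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q))
    return temperature ** 2 * kl
```

The loss is zero when the student reproduces the teacher's softened distribution and grows as the two diverge, which is why mismatched soft labels between modalities can mislead the student.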
