Dae-Hyeok Lee

Classification of Distraction Levels Using Hybrid Deep Neural Networks From EEG Signals

Dec 13, 2022

Abstract:Non-invasive brain-computer interface technology has been developed for detecting human mental states with high performance. Detecting pilots' mental states is particularly critical because abnormal mental states could cause catastrophic accidents. In this study, we demonstrated the feasibility of classifying distraction levels (namely, normal state, low distraction, and high distraction) using a deep learning method. To the best of our knowledge, this study is the first attempt to classify distraction levels in a flight environment. We proposed a model for classifying distraction levels. A total of ten pilots conducted the experiment in a simulated flight environment. The grand-average accuracy was 0.8437 for classifying distraction levels across all subjects. Hence, we believe that this work will contribute significantly to autonomous driving or flight based on artificial intelligence technology in the future.

CropCat: Data Augmentation for Smoothing the Feature Distribution of EEG Signals

Dec 13, 2022

Abstract:Brain-computer interface (BCI) is a communication system between humans and computers that reflects human intention without using a physical control device. Since deep learning is robust in extracting features from data, research on decoding electroencephalograms with deep learning has progressed in the BCI domain. However, the application of deep learning in the BCI domain suffers from a lack of data and from overconfidence. To solve these issues, we proposed a novel data augmentation method, CropCat. CropCat consists of two versions, CropCat-spatial and CropCat-temporal. The method crops data that have different labels along the spatial or temporal axis and then concatenates the cropped segments. In addition, we adjusted the label based on the ratio of the cropped length. As a result, the data generated by our proposed method helped turn a decision boundary that was ambiguous because of a lack of data into a clear one. Owing to the effectiveness of the proposed method, the performance of four EEG signal decoding models improved on two public motor imagery datasets compared with when the proposed method was not applied. Hence, we demonstrate that data generated by CropCat smooth the feature distribution of EEG signals when training the model.
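The crop-and-concatenate idea described in this abstract can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `cropcat`, the choice to take the leading part of one trial and the trailing part of the other, and the `(channels, time)` array layout are all assumptions, since the abstract does not specify them.

```python
import numpy as np

def cropcat(x_a, y_a, x_b, y_b, ratio, axis):
    """Hypothetical sketch of a CropCat-style augmentation.

    x_a, x_b : EEG trials of identical shape, assumed (channels, time).
    y_a, y_b : one-hot label vectors of the two trials.
    ratio    : fraction of the chosen axis taken from x_a.
    axis     : 0 for a spatial (channel) crop, 1 for a temporal crop.
    """
    n = x_a.shape[axis]
    cut = int(round(ratio * n))
    # Crop the leading part of trial A and the trailing part of trial B.
    part_a = np.take(x_a, np.arange(cut), axis=axis)
    part_b = np.take(x_b, np.arange(cut, n), axis=axis)
    mixed = np.concatenate([part_a, part_b], axis=axis)
    # Adjust the label by the ratio of the cropped length.
    lam = cut / n
    label = lam * np.asarray(y_a, dtype=float) + (1.0 - lam) * np.asarray(y_b, dtype=float)
    return mixed, label

# Toy trials: 4 channels, 100 time samples, two classes.
trial_a, label_a = np.zeros((4, 100)), np.array([1.0, 0.0])
trial_b, label_b = np.ones((4, 100)), np.array([0.0, 1.0])

temporal_x, temporal_y = cropcat(trial_a, label_a, trial_b, label_b, ratio=0.25, axis=1)
spatial_x, spatial_y = cropcat(trial_a, label_a, trial_b, label_b, ratio=0.5, axis=0)
```

In this sketch the soft label plays the same role as in mixup-style augmentation: a sample built from 25% of one class and 75% of another carries a 0.25/0.75 target rather than a hard label.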

Decoding Neural Correlation of Language-Specific Imagined Speech using EEG Signals

Apr 15, 2022

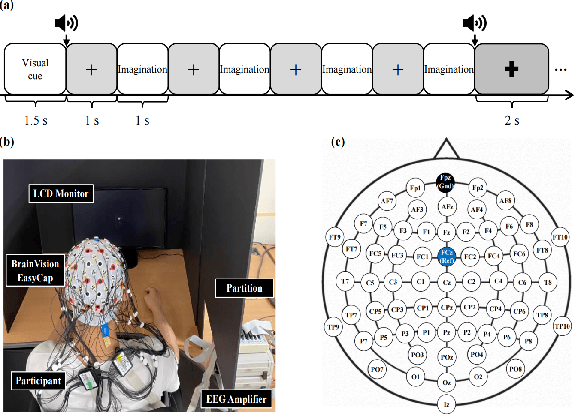

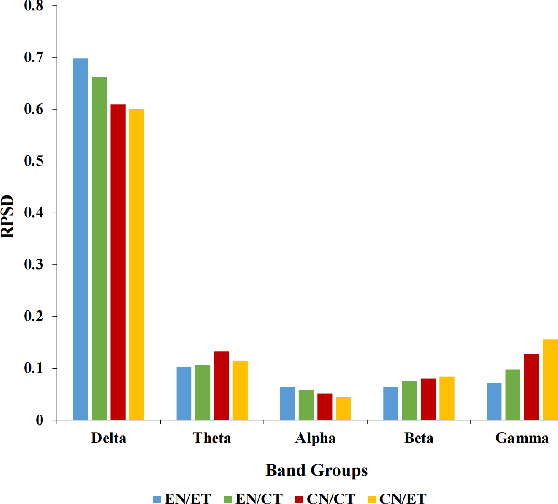

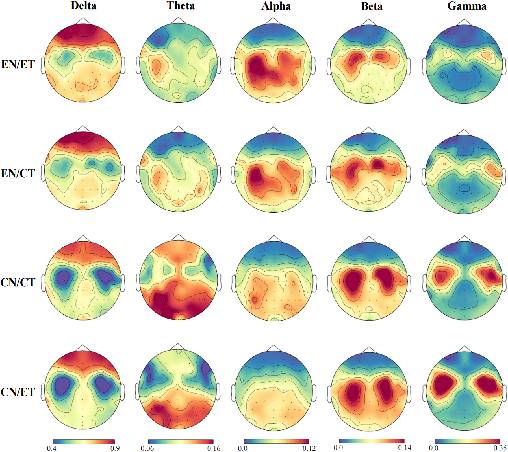

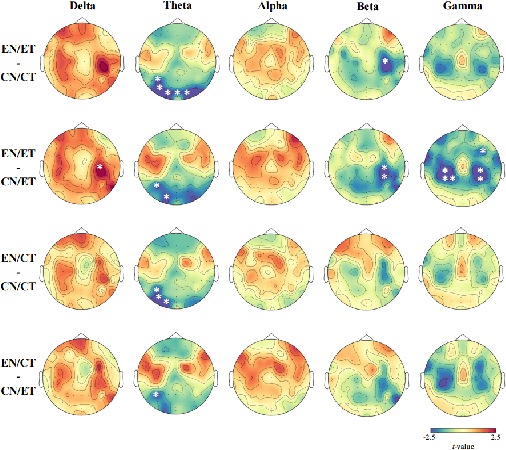

Abstract:Speech impairments due to cerebral lesions and degenerative disorders can be devastating. For people with severe speech deficits, imagined speech in the brain-computer interface has been a promising hope for reconstructing the neural signals of speech production. However, studies in the EEG-based imagined speech domain still have some limitations due to high variability in spatial and temporal information and a low signal-to-noise ratio. In this paper, we investigated the neural signals of two groups of native speakers performing tasks in two different languages, English and Chinese. Our assumption was that English, a non-tonal and phonogram-based language, would show spectral differences in neural computation compared to Chinese, a tonal and ideogram-based language. The results showed a significant difference in the relative power spectral density between English and Chinese in specific frequency band groups. Also, the spatial evaluation of Chinese native speakers in the theta band was distinctive during the imagination task. Hence, this paper suggests key spectral and spatial information of language-specific word imagination for decoding the neural signals of speech.
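The relative power spectral density mentioned in this abstract is, in general terms, the power in one frequency band divided by the total power over a reference range. The sketch below illustrates that ratio with a plain FFT periodogram. The band edges in `BANDS`, the 0.5 to 40 Hz reference range, and the function name are assumptions chosen for illustration; the abstract does not state which estimator or band limits the authors used.

```python
import numpy as np

# Conventional EEG band edges in Hz (assumed, not taken from the paper).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 40.0),
}

def relative_band_power(signal, fs, bands, total_range=(0.5, 40.0)):
    """Return band power divided by total power for each band."""
    signal = np.asarray(signal, dtype=float)
    freqs = np.fft.rfftfreq(signal.shape[-1], d=1.0 / fs)
    # Unnormalized periodogram; the scale cancels in the ratio.
    power = np.abs(np.fft.rfft(signal, axis=-1)) ** 2
    in_total = (freqs >= total_range[0]) & (freqs < total_range[1])
    total = power[..., in_total].sum(axis=-1)
    result = {}
    for name, (lo, hi) in bands.items():
        in_band = (freqs >= lo) & (freqs < hi)
        result[name] = power[..., in_band].sum(axis=-1) / total
    return result

# Toy signal: a 6 Hz sine sampled at 100 Hz for 2 s, which falls in the theta band.
fs = 100
t = np.arange(200) / fs
rel = relative_band_power(np.sin(2 * np.pi * 6 * t), fs, BANDS)
```

For real EEG, an averaged estimator such as Welch's method would usually be preferred over a single periodogram because it reduces the variance of the estimate.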

Decoding High-level Imagined Speech using Attention-based Deep Neural Networks

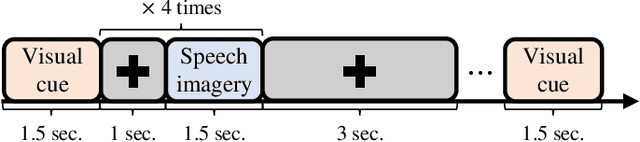

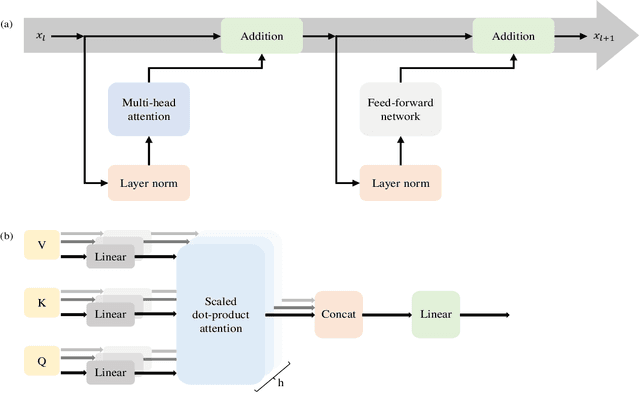

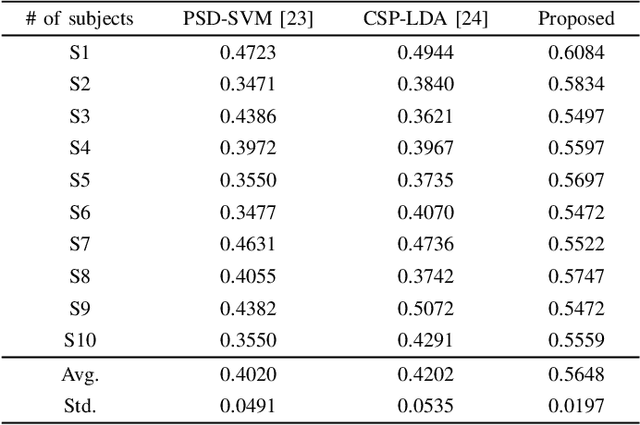

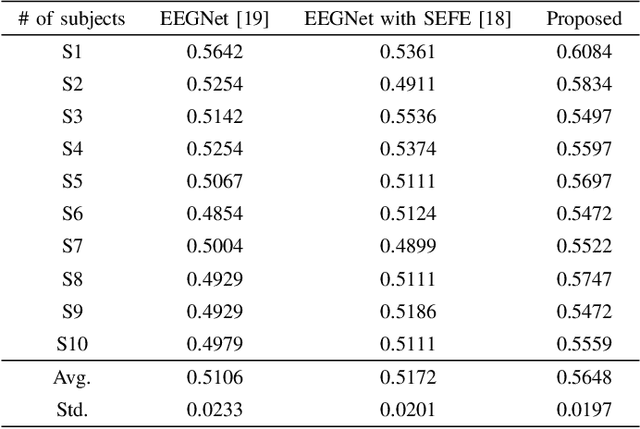

Dec 13, 2021

Abstract:Brain-computer interface (BCI) is a technology that enables communication between humans and devices by reflecting the status and intentions of humans. When conducting imagined speech, users imagine the pronunciation as if actually speaking. When decoding imagined speech-based EEG signals, complex tasks can be conducted more intuitively, but decoding performance is lower than that of other BCI paradigms. We modified our previous model for decoding imagined speech-based EEG signals. Ten subjects participated in the experiment. The average accuracy of our proposed method was 0.5648 for classifying four words. In other words, our proposed method has significant strength in learning local features. Hence, we demonstrated the feasibility of decoding imagined speech-based EEG signals with robust performance.

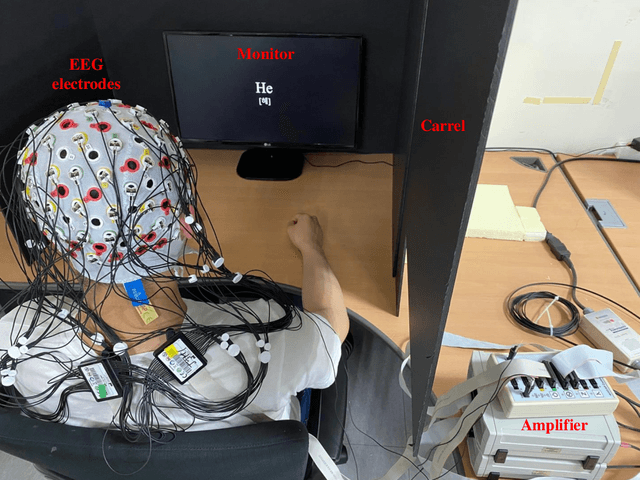

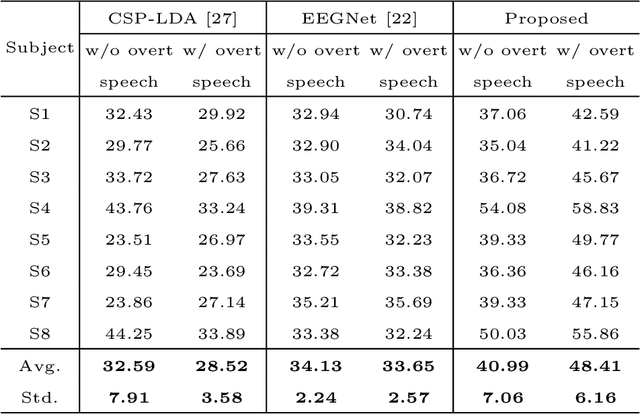

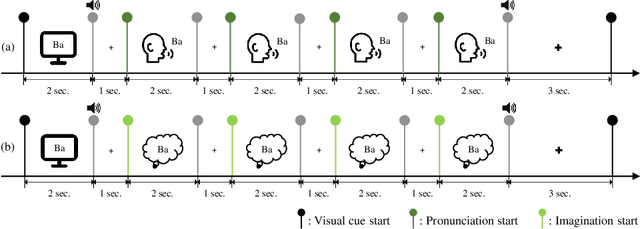

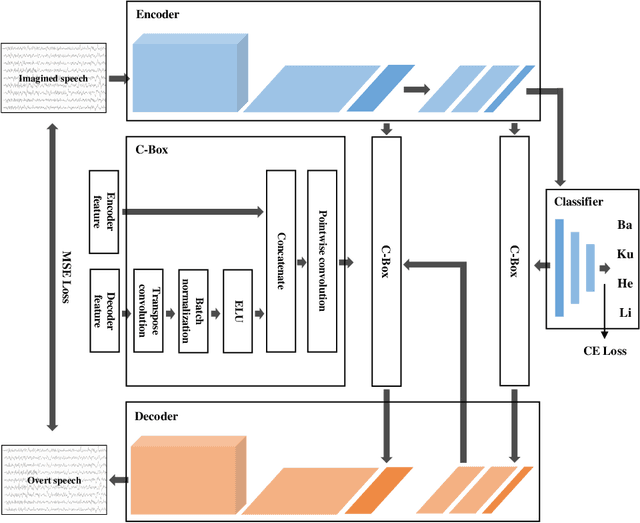

DAL: Feature Learning from Overt Speech to Decode Imagined Speech-based EEG Signals with Convolutional Autoencoder

Jul 15, 2021

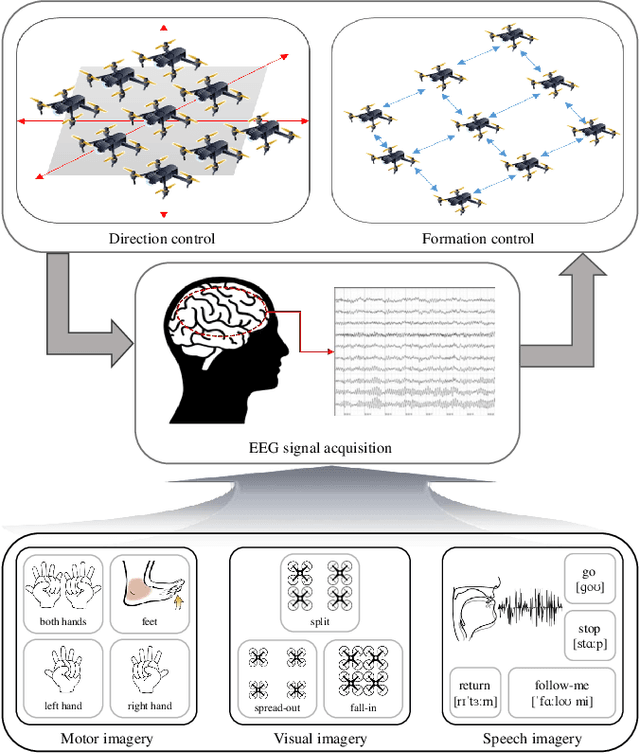

Abstract:Brain-computer interface (BCI) is one of the tools that enable communication between humans and devices by reflecting the intention and status of humans. With the development of artificial intelligence, interest in communication between humans and drones using electroencephalogram (EEG) has increased. In particular, controlling drone swarms (for example, their direction or formation) has many advantages over controlling a single drone. Imagined speech is one of the endogenous BCI paradigms, which can identify the intentions of users. When conducting imagined speech, users imagine the pronunciation as if actually speaking. In contrast, overt speech is a task in which users actually pronounce the words. When controlling drone swarms using imagined speech, complex commands can be delivered more intuitively, but decoding performance is lower than that of other endogenous BCI paradigms. We proposed the Deep-autoleaner (DAL) to learn EEG features of overt speech for classifying imagined speech-based EEG signals. To the best of our knowledge, this study is the first attempt to use EEG features of overt speech to decode imagined speech-based EEG signals with an autoencoder. A total of eight subjects participated in the experiment. When classifying four words, the average accuracy of the DAL was 48.41%. In addition, comparing performance without and with EEG features of overt speech, including these features improved performance by 7.42%. Hence, we demonstrated that EEG features of overt speech could improve the decoding performance of imagined speech.

Towards Natural Brain-Machine Interaction using Endogenous Potentials based on Deep Neural Networks

Jun 25, 2021

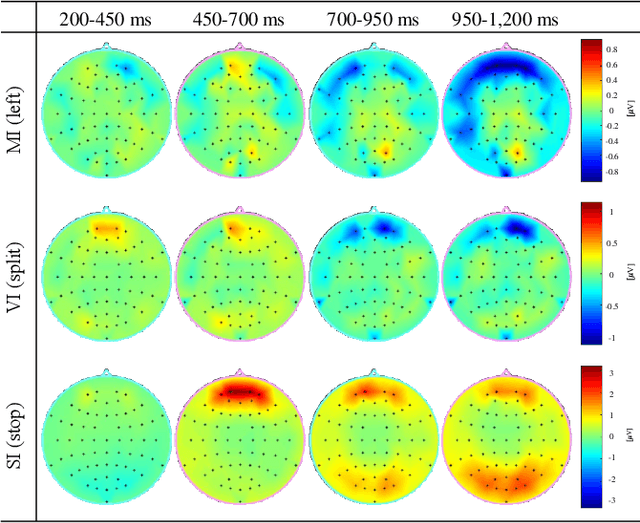

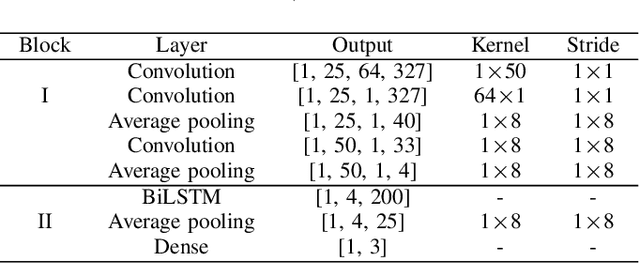

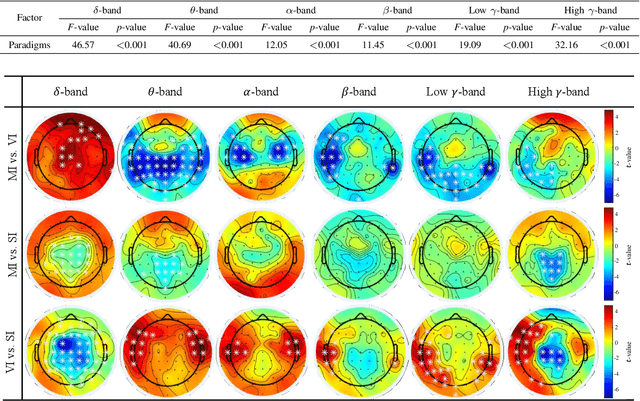

Abstract:Human-robot collaboration has the potential to maximize the efficiency of the operation of autonomous robots. Brain-machine interface (BMI) would be a desirable technology for collaborating with robots since the intention or state of users can be translated from neural activity. However, the electroencephalogram (EEG), which is one of the most widely used non-invasive BMI modalities, has low accuracy and a limited degree of freedom (DoF) due to a low signal-to-noise ratio. Thus, improving the performance of multi-class EEG classification is crucial for developing more flexible BMI-based human-robot collaboration. In this study, we investigated the possibility of inter-paradigm classification of multiple endogenous BMI paradigms, such as motor imagery (MI), visual imagery (VI), and speech imagery (SI), to enhance the limited DoF while maintaining robust accuracy. We conducted statistical and neurophysiological analyses on MI, VI, and SI and classified the three paradigms using the proposed temporal information-based neural network (TINN). We confirmed that statistically significant features could be extracted from different brain regions when classifying the three endogenous paradigms. Moreover, our proposed TINN showed the highest accuracy of 0.93 compared to the previous methods for classifying three different types of mental imagery tasks (MI, VI, and SI).

Subject-Independent Brain-Computer Interface for Decoding High-Level Visual Imagery Tasks

Jun 08, 2021

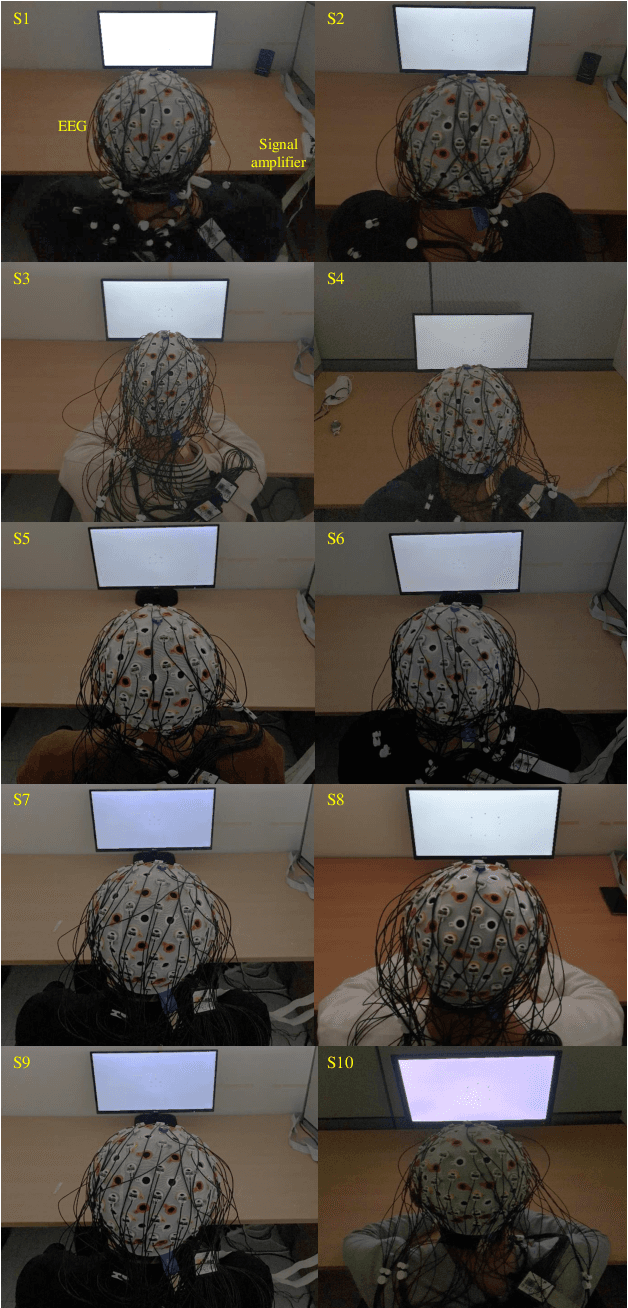

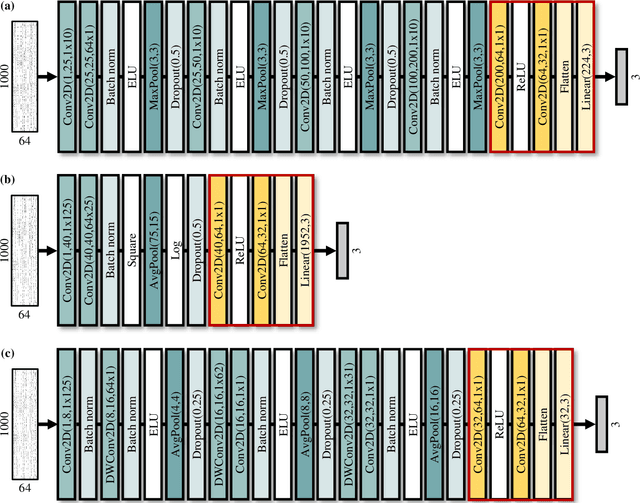

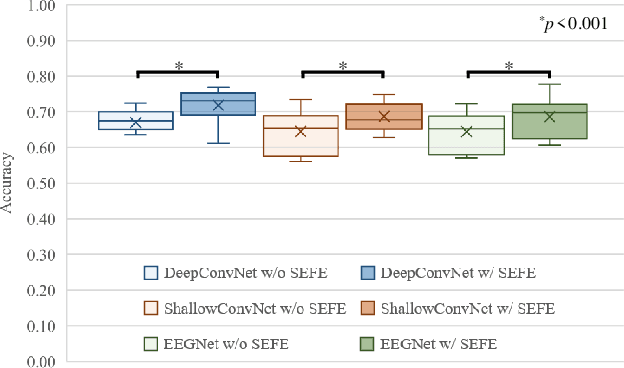

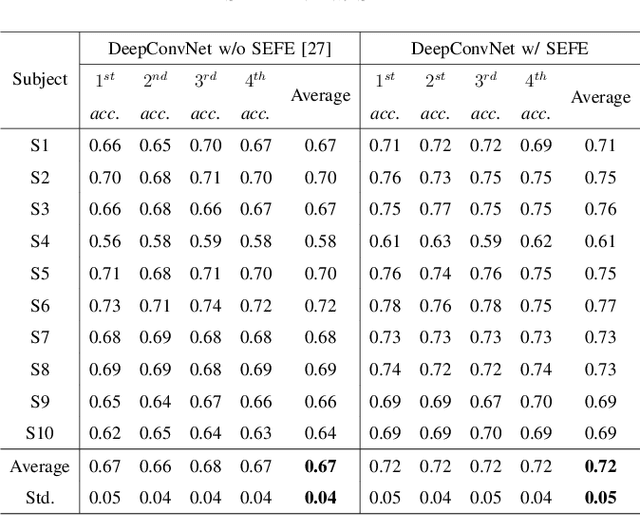

Abstract:Brain-computer interface (BCI) is used for communication between humans and devices by recognizing the status and intention of humans. Communication between humans and a drone using electroencephalogram (EEG) signals is one of the most challenging issues in the BCI domain. In particular, the control of drone swarms (their direction and formation) has more advantages than the control of a single drone. In the visual imagery (VI) paradigm, subjects visually imagine specific objects or scenes. Reducing the variability among the EEG signals of subjects is essential for practical BCI-based systems. In this study, we proposed the subepoch-wise feature encoder (SEFE) to improve performance in subject-independent tasks using the VI dataset. This study is the first attempt to demonstrate the possibility of generalization among subjects in VI-based BCI. We used leave-one-subject-out cross-validation to evaluate performance. Performance was higher with our proposed module than without it. The DeepConvNet with SEFE showed the highest performance of 0.72 among six different decoding models. Hence, we demonstrated the feasibility of decoding the VI dataset in the subject-independent task with robust performance by using our proposed module.
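The leave-one-subject-out cross-validation named in this abstract holds out all trials of one subject for testing and trains on the trials of every other subject, repeating once per subject. A minimal sketch of the split logic follows; the function name and the toy subject labels are assumptions for illustration, and no model or dataset from the paper is reproduced here.

```python
import numpy as np

def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    subject_ids = np.asarray(subject_ids)
    for held_out in np.unique(subject_ids):
        test_mask = subject_ids == held_out
        # Train on every trial that does not belong to the held-out subject.
        yield held_out, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)

# Toy example: five trials recorded from three subjects.
subjects = [0, 0, 1, 1, 2]
splits = list(leave_one_subject_out(subjects))
```

Because no trial of the test subject ever appears in training, the resulting score reflects generalization to an unseen person rather than to unseen trials of a known person.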

Towards Brain-Computer Interfaces for Drone Swarm Control

Feb 03, 2020

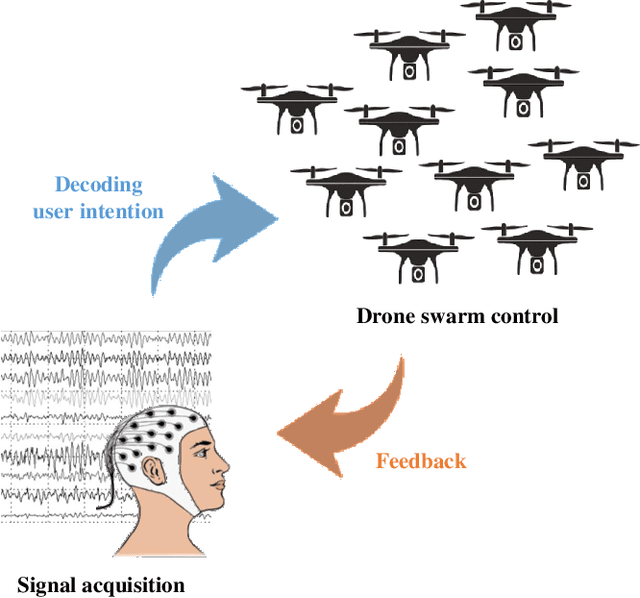

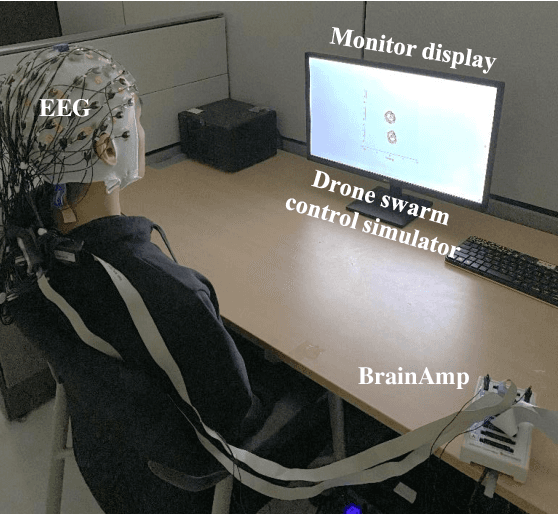

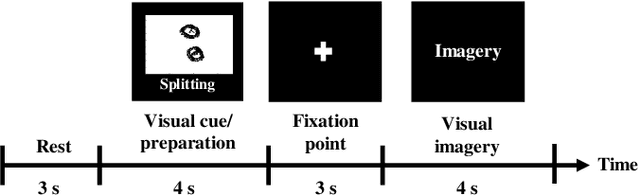

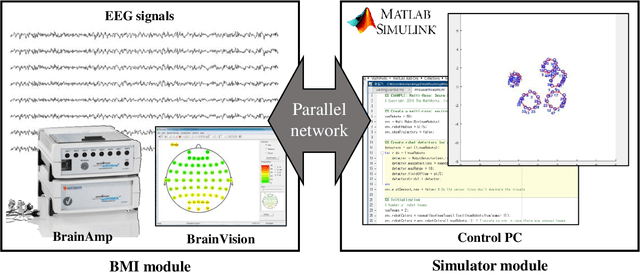

Abstract:Noninvasive brain-computer interface (BCI) decodes brain signals to understand user intention. BCI-based drone control systems have advanced recently as the demand for drone control increases. In particular, drone swarm control based on brain signals could benefit various fields, such as military service or industrial disaster response. This paper presents a prototype of a brain swarm interface system for a variety of scenarios using a visual imagery paradigm. We designed an experimental environment that could acquire brain signals under a drone swarm control simulator. Through the system, we collected electroencephalogram (EEG) signals for four different scenarios. Seven subjects participated in our experiment, and we evaluated classification performance using a basic machine learning algorithm. The grand-average classification accuracy was higher than the chance-level accuracy. Hence, we could confirm the feasibility of the drone swarm control system based on EEG signals for performing high-level tasks.
