Suchendra M. Bhandarkar

Object-Oriented Material Classification and 3D Clustering for Improved Semantic Perception and Mapping in Mobile Robots

Jul 08, 2024

Abstract:Classification of different object surface material types can play a significant role in the decision-making algorithms of mobile robots and autonomous vehicles. RGB-based scene-level semantic segmentation has been well addressed in the literature. However, improving material recognition using the depth modality and integrating it with SLAM algorithms for 3D semantic mapping could bring new benefits to the robotics perception pipeline. To this end, we propose a complementarity-aware deep learning approach for RGB-D-based material classification built on top of an object-oriented pipeline. The approach further integrates the ORB-SLAM2 method for 3D scene mapping with multiscale clustering of the detected material semantics in the point cloud map generated by the visual SLAM algorithm. Extensive experimental results on existing public datasets and newly contributed real-world robot datasets demonstrate a significant improvement in material classification and 3D clustering accuracy compared to state-of-the-art approaches for 3D semantic scene mapping.
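The abstract does not say which clustering algorithm is applied to the labeled point cloud. As a rough illustration only, the sketch below links points that share a material label and lie within a distance threshold, takes connected components, and repeats this at several thresholds to mimic "multiscale" clustering. The function names, the radius-based linkage and the brute-force O(n²) neighbor search are all assumptions, not the paper's method; a real SLAM map would need a spatial index.

```python
import math


def _find(parent, i):
    # Path-halving find for a union-find (disjoint-set) structure.
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i


def cluster_material_points(points, labels, radius):
    """Hypothetical helper: connected components of same-label points.

    Two points are linked if they carry the same material label and lie
    within `radius` of each other. Returns one cluster id per point.
    Brute-force pairwise search: a sketch, not a map-scale implementation.
    """
    n = len(points)
    parent = list(range(n))
    for i in range(n):
        for j in range(i + 1, n):
            if labels[i] == labels[j] and math.dist(points[i], points[j]) <= radius:
                ri, rj = _find(parent, i), _find(parent, j)
                if ri != rj:
                    parent[rj] = ri
    # Relabel component roots as consecutive ids in order of first appearance.
    ids = {}
    return [ids.setdefault(_find(parent, i), len(ids)) for i in range(n)]


def multiscale_clusters(points, labels, radii):
    # Hypothetical helper: run the same clustering at several linkage radii.
    return {r: cluster_material_points(points, labels, r) for r in radii}
```

With two small "wood" blobs one metre apart, a 0.2 m radius keeps them separate while a 1.0 m radius merges them into one cluster.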

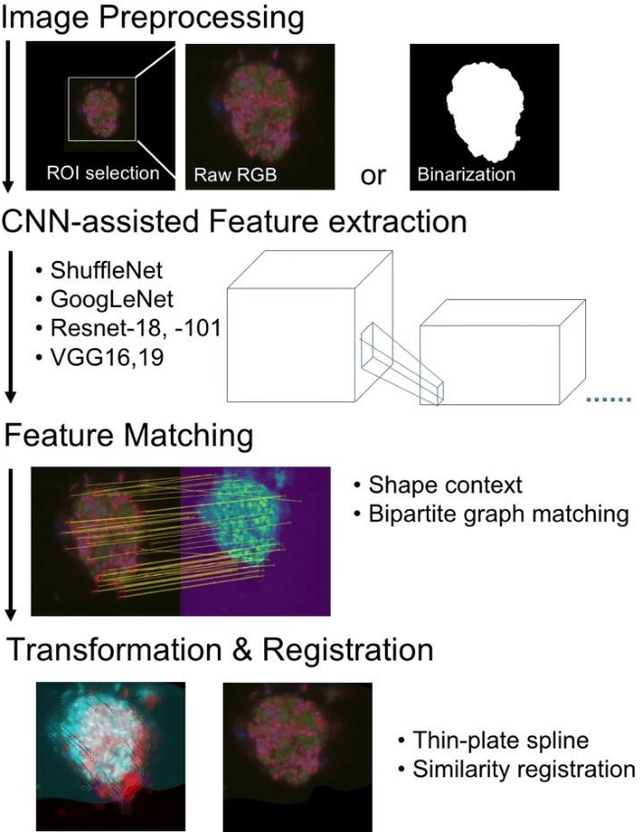

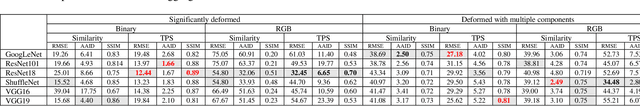

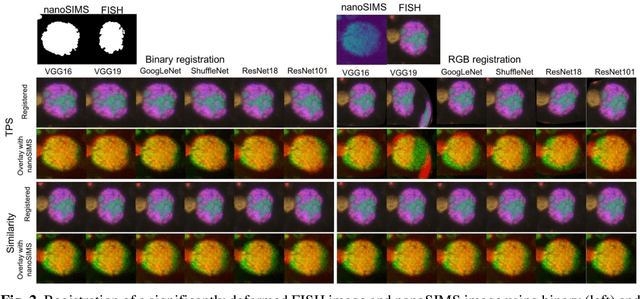

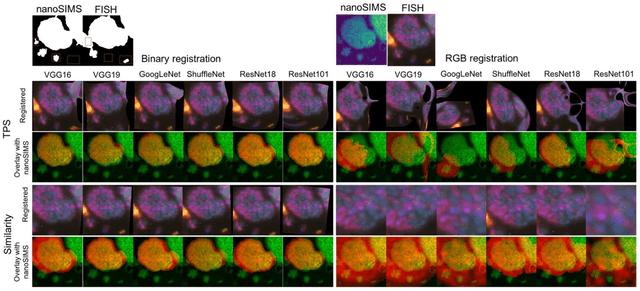

Multimodal registration of FISH and nanoSIMS images using convolutional neural network models

Jan 14, 2022

Abstract:Fluorescence in situ hybridization (FISH) microscopy and nanoscale secondary ion mass spectrometry (nanoSIMS) provide high-resolution, multimodal image representations of the identity and cell activity, respectively, of targeted microbial communities in microbiological research. Despite its importance to microbiologists, multimodal registration of FISH and nanoSIMS images is challenging given the morphological distortion and background noise in both images. In this study, we use convolutional neural networks (CNNs) for multiscale feature extraction, shape context for computing minimum-transformation-cost feature matches, and the thin-plate spline (TPS) model for multimodal registration of the FISH and nanoSIMS images. All six tested CNN models (VGG16, VGG19, GoogLeNet, ShuffleNet, ResNet18 and ResNet101) performed well, demonstrating the utility of CNNs in registering multimodal images with significant background noise and morphological distortion. We also show that aggregate shape, preserved by binarization, is a robust feature for registering multimodal microbiology-related images.
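The final registration step named in the abstract is a thin-plate spline. A minimal sketch of a generic 2D TPS fit is shown below, assuming matched control points are already available (the CNN feature extraction and shape-context matching are not shown). The names `fit_tps` and `reg` are hypothetical and the kernel is the standard r² log r² form; this is not the authors' code.

```python
import numpy as np


def _tps_kernel(r):
    # U(r) = r^2 log(r^2), with U(0) = 0 by continuity.
    r2 = r ** 2
    out = np.zeros_like(r2)
    mask = r2 > 0
    out[mask] = r2[mask] * np.log(r2[mask])
    return out


def fit_tps(src, dst, reg=0.0):
    """Fit a 2D thin-plate spline mapping control points src -> dst.

    src, dst: (n, 2) arrays of matched control points (hypothetical inputs).
    reg: optional smoothing term; 0 gives exact interpolation.
    Returns a function that warps arbitrary (m, 2) point arrays.
    """
    src = np.asarray(src, float)
    dst = np.asarray(dst, float)
    n = len(src)
    K = _tps_kernel(np.linalg.norm(src[:, None, :] - src[None, :, :], axis=-1))
    K += reg * np.eye(n)
    P = np.hstack([np.ones((n, 1)), src])  # affine basis [1, x, y]
    # Standard TPS linear system: [[K, P], [P^T, 0]] [w; a] = [dst; 0].
    A = np.zeros((n + 3, n + 3))
    A[:n, :n] = K
    A[:n, n:] = P
    A[n:, :n] = P.T
    b = np.zeros((n + 3, 2))
    b[:n] = dst
    params = np.linalg.solve(A, b)
    w, a = params[:n], params[n:]

    def warp(pts):
        pts = np.asarray(pts, float)
        U = _tps_kernel(np.linalg.norm(pts[:, None, :] - src[None, :, :], axis=-1))
        return U @ w + np.hstack([np.ones((len(pts), 1)), pts]) @ a

    return warp
```

A useful property to keep in mind: if the target points are an affine transform of the source points, the bending weights vanish and the spline reduces exactly to that affine map.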

High-resolution Ecosystem Mapping in Repetitive Environments Using Dual Camera SLAM

Jan 10, 2022

Abstract:Structure from Motion (SfM) techniques are increasingly used to create 3D maps from images in many domains, including environmental monitoring. However, SfM techniques are often confounded in visually repetitive environments because they rely primarily on globally distinct image features. Simultaneous Localization and Mapping (SLAM) techniques offer a potential solution in visually repetitive environments since they use local feature matching, but SLAM approaches work best with wide-angle cameras that are often unsuitable for documenting the environmental system of interest. We resolve this issue by proposing a dual-camera SLAM approach that uses a forward-facing wide-angle camera for localization and a downward-facing, narrower-angle, high-resolution camera for documentation. Video frames acquired by the forward-facing camera are processed using a standard SLAM approach, yielding a trajectory of the imaging system through the environment that is then used to guide the registration of the documentation camera images. Fragmentary maps, initially produced from the documentation camera images via monocular SLAM, are subsequently scaled and aligned with the localization camera trajectory and finally subjected to a global optimization procedure to produce a unified, refined map. An experimental comparison with several state-of-the-art SfM approaches, based on select samples of ground control point markers, shows that the dual-camera SLAM approach performs better in repetitive environmental systems.
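The abstract says monocular fragments are "scaled and aligned" with the localization trajectory but does not name the alignment method. One standard choice, swapped in here as an assumption, is Umeyama's closed-form least-squares similarity transform between corresponding positions. The sketch below estimates scale, rotation and translation; the function name and the idea of pairing fragment camera centres with trajectory positions are illustrative, and the subsequent global optimization is not shown.

```python
import numpy as np


def umeyama(src, dst):
    """Least-squares similarity transform (s, R, t) with dst ~ s * R @ src + t.

    src, dst: (n, 3) arrays of corresponding positions, e.g. camera centres
    from a monocular SLAM fragment and from the localization trajectory
    (an assumed pairing, for illustration).
    """
    src = np.asarray(src, float)
    dst = np.asarray(dst, float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / len(src)  # cross-covariance between dst and src
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1  # prevent a reflection from being returned as a rotation
    R = U @ S @ Vt
    var_s = (xs ** 2).sum() / len(src)  # variance of the source points
    s = np.trace(np.diag(D) @ S) / var_s
    t = mu_d - s * R @ mu_s
    return s, R, t
```

Once estimated, the whole fragment map can be brought into the trajectory frame with `s * pts @ R.T + t`. The closed form resolves the monocular scale ambiguity in the same step as the rigid alignment, which is why it is a common initializer before a global refinement.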

Matching Disparate Image Pairs Using Shape-Aware ConvNets

Nov 24, 2018

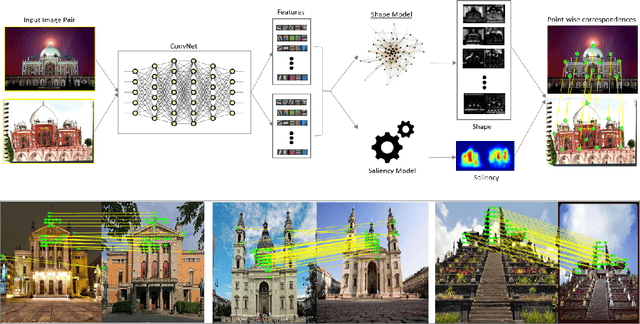

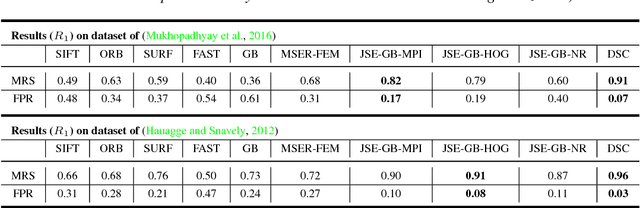

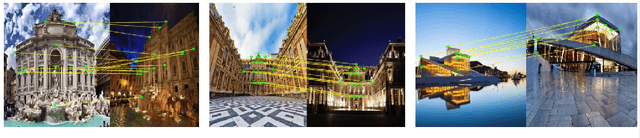

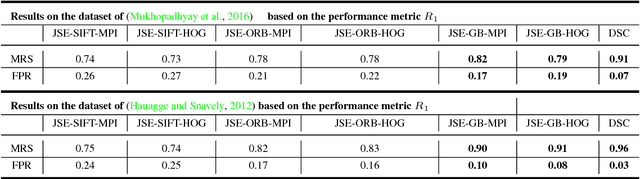

Abstract:An end-to-end trainable ConvNet architecture that learns to harness the power of shape representation for matching disparate image pairs is proposed. Disparate image pairs are those that exhibit strong affine variations in scale, viewpoint and projection parameters, accompanied by partial or complete occlusion of objects and extreme variations in ambient illumination. Under these challenging conditions, neither local nor global feature-based image matching methods, when used in isolation, have been observed to be effective. The proposed correspondence determination scheme for matching disparate images exploits high-level shape cues that are derived from low-level local feature descriptors, thus combining the best of both worlds. A graph-based representation of the disparate image pair is generated by constructing an affinity matrix that embeds the distances between feature points in the two images, thus modeling correspondence determination as a graph matching problem. The eigenspectrum of the affinity matrix, i.e., the learned global shape representation, is then used to regress the transformation or homography that defines the correspondence between the source image and the target image. The proposed scheme is shown to yield state-of-the-art results for both coarse-level shape matching and fine point-wise correspondence determination.
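To make the idea of an affinity-matrix eigenspectrum as a global shape representation concrete, here is a minimal non-learned sketch, assuming a Gaussian affinity over keypoint distances within a single image. The paper learns this representation with a ConvNet and builds the affinity over both images; the function name, `sigma`, `k` and the mean-distance normalization are assumptions for illustration.

```python
import numpy as np


def affinity_spectrum(points, sigma=1.0, k=5):
    """Top-k eigenvalues of a Gaussian affinity matrix over 2D keypoints.

    Pairwise distances are divided by their mean, so the spectrum is
    invariant to translation, rotation, uniform scaling and point order.
    """
    pts = np.asarray(points, float)
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    d = d / d[np.triu_indices(len(pts), 1)].mean()  # scale normalization
    A = np.exp(-d ** 2 / (2 * sigma ** 2))
    evals = np.linalg.eigvalsh(A)  # ascending order for a symmetric matrix
    return evals[::-1][:k]
```

Because the eigenvalues of a symmetric matrix do not change under a simultaneous row and column permutation, the spectrum does not depend on keypoint ordering, which is what makes it usable as a correspondence-free shape summary.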

Deep Spectral Correspondence for Matching Disparate Image Pairs

Sep 12, 2018

Abstract:A novel, non-learning-based, saliency-aware, shape-cognizant correspondence determination technique is proposed for matching image pairs that are significantly disparate in nature. Images in the real world often exhibit high degrees of variation in scale, orientation, viewpoint, illumination and affine projection parameters, and are often accompanied by textureless regions and complete or partial occlusion of scene objects. These conditions confound most correspondence determination techniques by rendering impractical the use of either global contour-based descriptors or local pixel-level features for establishing correspondence. The proposed deep spectral correspondence (DSC) determination scheme harnesses the representational power of local feature descriptors to derive a complex high-level global shape representation, and reasons about correspondence between disparate images using these high-level global shape cues. Consequently, the proposed scheme enjoys the best of both worlds, i.e., a high degree of invariance to scale, orientation, viewpoint and illumination afforded by the global shape cues, and robustness to occlusion provided by the low-level feature descriptors. While the shape-based component within the proposed scheme infers what to look for, an additional saliency-based component dictates where to look, thereby handling the noisy correspondences arising from textureless regions and complex backgrounds. In the proposed scheme, a joint image graph is constructed using distances computed between interest points in the appearance (i.e., image) space. Eigenspectral decomposition of the joint image graph allows reasoning about shape similarity to be performed jointly in the appearance space and the eigenspace.
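Reasoning about correspondence in eigenspace can be illustrated with classic per-image spectral (modal) matching, used here as a simpler stand-in for the paper's joint image graph and without its saliency component. Each point set gets a Gaussian affinity matrix; each point is embedded by its rows in the leading eigenvectors; points are then matched by nearest neighbour in that embedding. The function names, `k`, `sigma` and the sign-fixing rule are assumptions, and the sketch only works for near-isometric point sets.

```python
import numpy as np


def spectral_embedding(points, k=4, sigma=1.0):
    # Rows of the k leading eigenvectors of a Gaussian affinity matrix.
    pts = np.asarray(points, float)
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    d = d / d[np.triu_indices(len(pts), 1)].mean()  # scale normalization
    A = np.exp(-d ** 2 / (2 * sigma ** 2))
    _, vecs = np.linalg.eigh(A)  # eigenvalues ascending
    vecs = vecs[:, ::-1][:, :k]  # keep the k leading eigenvectors
    # Eigenvectors are defined only up to sign: flip each one so that its
    # largest-magnitude entry is positive, making the embedding comparable.
    idx = np.argmax(np.abs(vecs), axis=0)
    signs = np.sign(vecs[idx, np.arange(k)])
    return vecs * signs


def spectral_match(points_a, points_b, k=4):
    """For each point of A, the index of its nearest point of B in eigenspace."""
    ea = spectral_embedding(points_a, k)
    eb = spectral_embedding(points_b, k)
    dist = np.linalg.norm(ea[:, None, :] - eb[None, :, :], axis=-1)
    return dist.argmin(axis=1)
```

For a rotated, translated and shuffled copy of a point set, the recovered indices equal the inverse of the shuffle. Occlusion, unequal point counts and non-rigid distortion break this simple scheme, which is the gap the abstract's saliency-aware joint-graph formulation targets.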

Local Geometry Inclusive Global Shape Representation

Jul 20, 2017

Abstract:Knowledge of shape geometry plays a pivotal role in many shape analysis applications. In this paper, we introduce a local geometry-inclusive global representation of 3D shapes based on computing the shortest quasi-geodesic paths between all possible pairs of points on the 3D shape manifold. In the proposed representation, the normal curvature along the quasi-geodesic path between any two points on the shape surface is preserved. We employ the eigenspectrum of the proposed global representation to address two problems: determining region-based correspondence between isometric shapes, and characterizing self-symmetry without prior knowledge in the form of user-defined correspondence maps. We further exploit the commutative property of the resulting shape descriptor to extract stable regions between isometric shapes that differ from one another by a large isometric transformation. We also propose several shape characterization metrics, based on the eigenvector decomposition of the shape descriptor spectrum, to quantify the correspondence and self-symmetry of 3D shapes. The performance of the proposed 3D shape descriptor is experimentally compared with that of other relevant state-of-the-art 3D shape descriptors.
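A simplified version of an all-pairs geodesic representation can be sketched as follows, under loud assumptions. Geodesics are approximated by Dijkstra shortest paths along mesh edges, and the normal-curvature term that the abstract says the representation preserves is omitted entirely. The descriptor is taken as the largest eigenvalues of the resulting symmetric distance matrix. `geodesic_matrix` and `geodesic_spectrum` are hypothetical names.

```python
import heapq
import math

import numpy as np


def geodesic_matrix(vertices, edges):
    """All-pairs shortest-path lengths over a mesh edge graph.

    Edge-graph distances only approximate true surface geodesics, and no
    curvature information is included (a simplification for illustration).
    """
    n = len(vertices)
    adj = [[] for _ in range(n)]
    for i, j in edges:
        w = math.dist(vertices[i], vertices[j])
        adj[i].append((j, w))
        adj[j].append((i, w))
    D = np.full((n, n), np.inf)
    for s in range(n):  # one Dijkstra run per source vertex
        D[s, s] = 0.0
        heap = [(0.0, s)]
        while heap:
            d, u = heapq.heappop(heap)
            if d > D[s, u]:
                continue  # stale heap entry
            for v, w in adj[u]:
                if d + w < D[s, v]:
                    D[s, v] = d + w
                    heapq.heappush(heap, (d + w, v))
    return D


def geodesic_spectrum(vertices, edges, k=3):
    # Largest k eigenvalues of the symmetric geodesic distance matrix.
    evals = np.linalg.eigvalsh(geodesic_matrix(vertices, edges))
    return evals[::-1][:k]
```

Relabeling the vertices permutes the rows and columns of the distance matrix together, so the spectrum is unchanged; this is the basic reason eigenspectra of such matrices are attractive for correspondence without user-defined maps.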

Morphological Analysis of the Left Ventricular Endocardial Surface Using a Bag-of-Features Descriptor

Feb 13, 2013

Abstract:The limitations of conventional imaging techniques have hitherto precluded a thorough and formal investigation of the complex morphology of the left ventricular (LV) endocardial surface and its relation to the severity of Coronary Artery Disease (CAD). Recent developments in high-resolution Multirow-Detector Computed Tomography (MDCT) scanner technology have enabled the imaging of LV endocardial surface morphology in a single heartbeat. Analysis of high-resolution Computed Tomography (CT) images from a 320-MDCT scanner allows the study of the relationship between percent Diameter Stenosis (DS) of the major coronary arteries and the localization of the cardiac segments affected by coronary arterial stenosis. In this paper, a novel analysis approach that combines rigid transformation-invariant shape descriptors with a more generalized isometry-invariant Bag-of-Features (BoF) descriptor is proposed and implemented. The proposed approach is shown to be successful in identifying, localizing and quantifying the incidence and extent of CAD, and thus potentially has significant clinical impact. Specifically, the association between the incidence and extent of CAD, determined via the percent DS measurements of the major coronary arteries, and the alterations in the endocardial surface morphology is formally quantified. A multivariate regression test performed on a strict leave-one-out basis is shown to exhibit a distinct pattern in the correlation coefficient within the cardiac segments where coronary arterial stenosis is localized.
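The abstract does not detail how the Bag-of-Features descriptor is built. In its common formulation, each local surface descriptor is assigned to its nearest codeword in a codebook (often learned with k-means), and the normalized counts form a global histogram. The sketch below shows only that quantization step, assuming a precomputed codebook. `bof_histogram` is a hypothetical name, and the isometry-invariant local descriptors and codebook learning are not shown.

```python
import math


def bof_histogram(descriptors, codebook):
    """Normalized bag-of-features histogram (hypothetical helper).

    Each local descriptor votes for its nearest codeword (Euclidean distance).
    Returns raw zero counts if no descriptors are given.
    """
    counts = [0] * len(codebook)
    for d in descriptors:
        nearest = min(range(len(codebook)), key=lambda c: math.dist(d, codebook[c]))
        counts[nearest] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else counts
```

Because the histogram discards where each descriptor came from, it remains comparable across surfaces with different vertex counts, which is what allows per-segment histograms to serve as inputs to a regression against stenosis measurements.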
