Steven Landgraf

Rethinking Semi-supervised Segmentation Beyond Accuracy: Reliability and Robustness

Jun 06, 2025

Abstract:Semantic segmentation is critical for scene understanding but demands costly pixel-wise annotations, attracting increasing attention to semi-supervised approaches to leverage abundant unlabeled data. While semi-supervised segmentation is often promoted as a path toward scalable, real-world deployment, it is astonishing that current evaluation protocols exclusively focus on segmentation accuracy, entirely overlooking reliability and robustness. These qualities, which ensure consistent performance under diverse conditions (robustness) and well-calibrated model confidences as well as meaningful uncertainties (reliability), are essential for safety-critical applications like autonomous driving, where models must handle unpredictable environments and avoid sudden failures at all costs. To address this gap, we introduce the Reliable Segmentation Score (RSS), a novel metric that combines predictive accuracy, calibration, and uncertainty quality measures via a harmonic mean. RSS penalizes deficiencies in any of its components, providing an easy and intuitive way of holistically judging segmentation models. Comprehensive evaluations of UniMatchV2 against its predecessor and a supervised baseline show that semi-supervised methods often trade reliability for accuracy. While out-of-domain evaluations demonstrate UniMatchV2's robustness, they further expose persistent reliability shortcomings. We advocate for a shift in evaluation protocols toward more holistic metrics like RSS to better align semi-supervised learning research with real-world deployment needs.
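To illustrate why a harmonic mean penalizes a deficiency in any single component, here is a minimal sketch. It is not the paper's definition of RSS: the function name, the three input scores, and the assumption that each one is already normalized to [0, 1] with higher meaning better are all hypothetical, since the abstract does not name the exact component metrics.

```python
def harmonic_score(accuracy, calibration, uncertainty_quality):
    """Harmonic mean of three scores, each assumed to lie in [0, 1].

    Hypothetical stand-in for an RSS-style metric; the real component
    metrics and their normalization are not given in the abstract.
    """
    components = [accuracy, calibration, uncertainty_quality]
    for value in components:
        if not 0.0 <= value <= 1.0:
            raise ValueError("each component must lie in [0, 1]")
    # A component of zero drives the harmonic mean to zero.
    if any(value == 0.0 for value in components):
        return 0.0
    return len(components) / sum(1.0 / value for value in components)


balanced = harmonic_score(0.8, 0.8, 0.8)
lopsided = harmonic_score(0.9, 0.9, 0.1)
```

In this sketch the lopsided model scores far below its arithmetic mean of about 0.63, because the weak third component dominates the sum of reciprocals.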

A Critical Synthesis of Uncertainty Quantification and Foundation Models in Monocular Depth Estimation

Jan 14, 2025

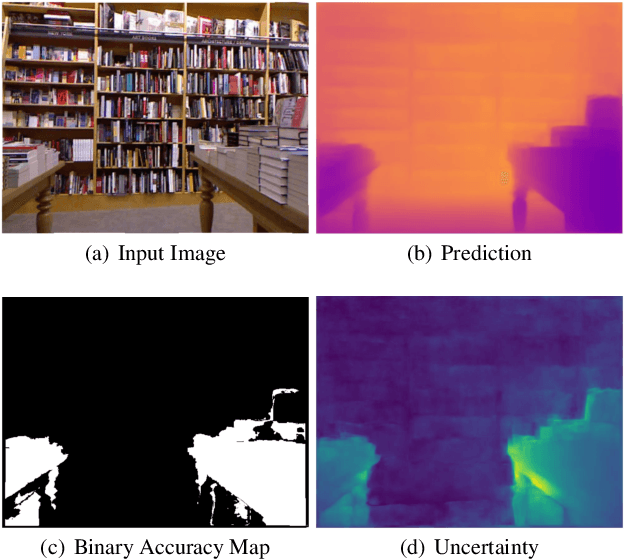

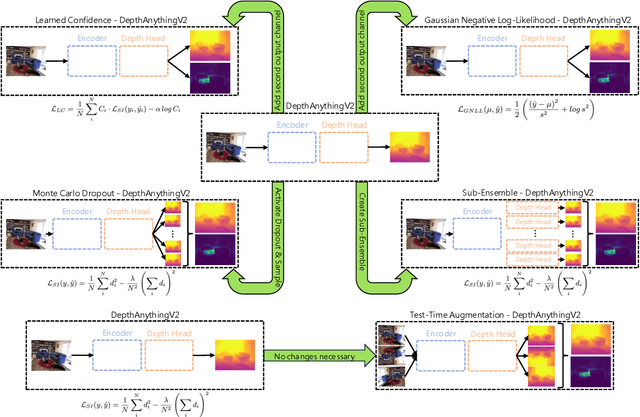

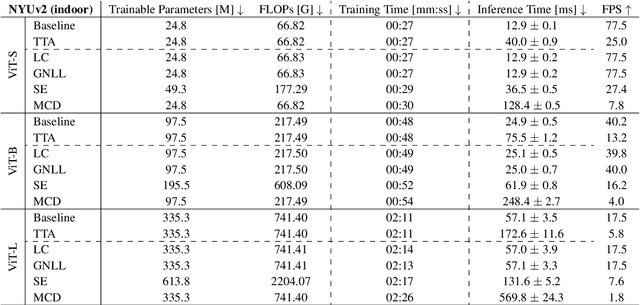

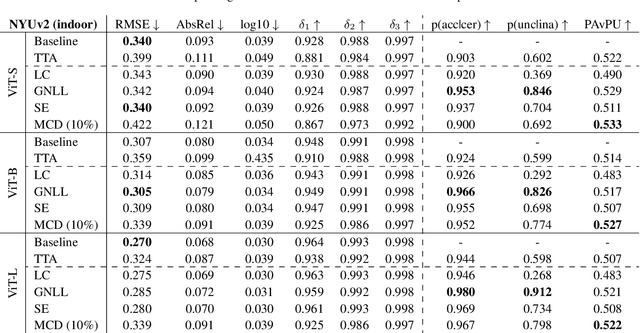

Abstract:While recent foundation models have enabled significant breakthroughs in monocular depth estimation, a clear path towards safe and reliable deployment in the real world remains elusive. Metric depth estimation, which involves predicting absolute distances, poses particular challenges, as even the most advanced foundation models remain prone to critical errors. Since quantifying the uncertainty has emerged as a promising endeavor to address these limitations and enable trustworthy deployment, we fuse five different uncertainty quantification methods with the current state-of-the-art DepthAnythingV2 foundation model. To cover a wide range of metric depth domains, we evaluate their performance on four diverse datasets. Our findings identify fine-tuning with the Gaussian Negative Log-Likelihood Loss (GNLL) as a particularly promising approach, offering reliable uncertainty estimates while maintaining predictive performance and computational efficiency on par with the baseline, encompassing both training and inference time. By fusing uncertainty quantification and foundation models within the context of monocular depth estimation, this paper lays a critical foundation for future research aimed at improving not only model performance but also its explainability. Extending this critical synthesis of uncertainty quantification and foundation models into other crucial tasks, such as semantic segmentation and pose estimation, presents exciting opportunities for safer and more reliable machine vision systems.
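For readers unfamiliar with the Gaussian Negative Log-Likelihood Loss, the following is a minimal per-pixel sketch of the standard formulation, with the constant term dropped. The function name, the variance clamp `eps`, and the scalar interface are illustrative assumptions; the abstract does not describe how the paper parameterizes or stabilizes the variance.

```python
import math


def gaussian_nll(mean, variance, target, eps=1e-6):
    """Negative log-likelihood of `target` under N(mean, variance).

    The constant 0.5 * log(2 * pi) is omitted. `eps` clamps the variance
    for numerical stability (an assumed choice, not taken from the paper).
    """
    var = max(variance, eps)
    return 0.5 * (math.log(var) + (target - mean) ** 2 / var)


# Same 3 m depth error, once with a confident and once with an
# uncertain prediction (values are made up for illustration).
confident = gaussian_nll(mean=0.0, variance=1.0, target=3.0)
uncertain = gaussian_nll(mean=0.0, variance=4.0, target=3.0)
```

The loss is lower when a large error is accompanied by a large predicted variance, which is what pushes a model trained this way to report higher uncertainty where it tends to be wrong, while the log-variance term discourages inflating the variance everywhere.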

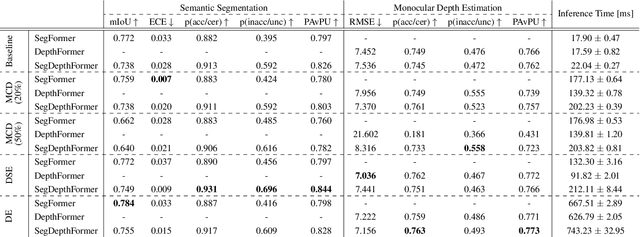

Evaluation of Multi-task Uncertainties in Joint Semantic Segmentation and Monocular Depth Estimation

May 27, 2024

Abstract:While a number of promising uncertainty quantification methods have been proposed to address the prevailing shortcomings of deep neural networks like overconfidence and lack of explainability, quantifying predictive uncertainties in the context of joint semantic segmentation and monocular depth estimation has not been explored yet. Since many real-world applications are multi-modal in nature and, hence, have the potential to benefit from multi-task learning, this is a substantial gap in current literature. To this end, we conduct a comprehensive series of experiments to study how multi-task learning influences the quality of uncertainty estimates in comparison to solving both tasks separately.

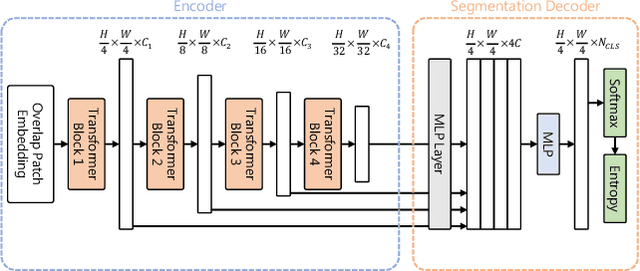

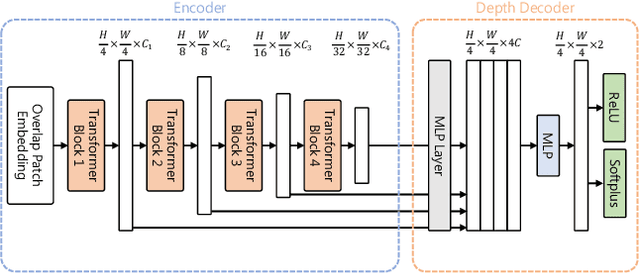

Efficient Multi-task Uncertainties for Joint Semantic Segmentation and Monocular Depth Estimation

Feb 16, 2024

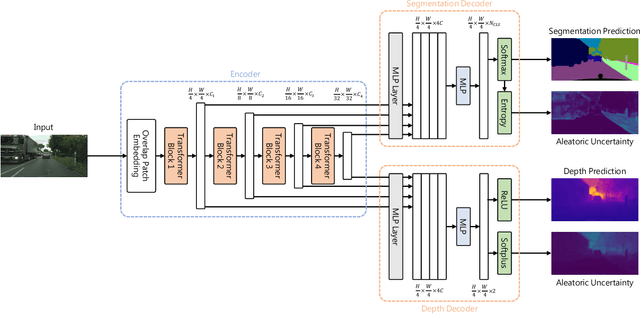

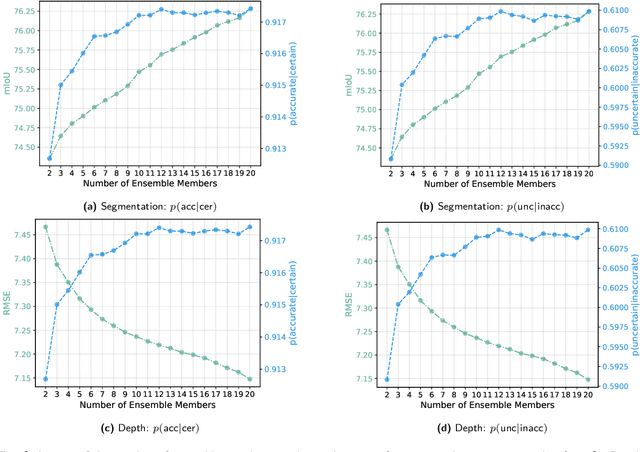

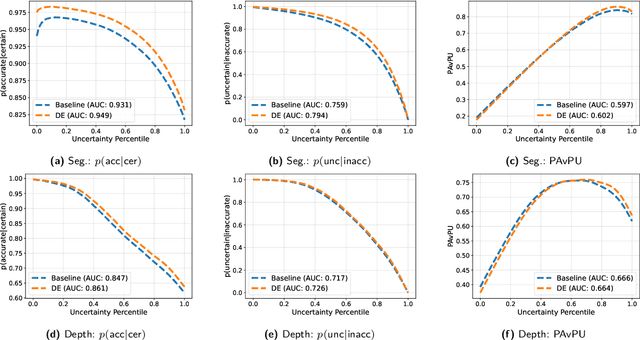

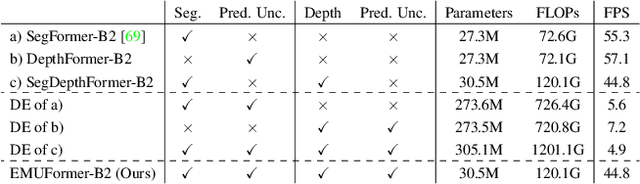

Abstract:Quantifying the predictive uncertainty has emerged as a possible solution to common challenges like overconfidence or lack of explainability and robustness of deep neural networks, albeit one that is often computationally expensive. Many real-world applications are multi-modal in nature and hence benefit from multi-task learning. In autonomous driving, for example, the joint solution of semantic segmentation and monocular depth estimation has proven to be valuable. In this work, we first combine different uncertainty quantification methods with joint semantic segmentation and monocular depth estimation and evaluate how they perform in comparison to each other. Additionally, we reveal the benefits of multi-task learning with regard to the uncertainty quality compared to solving both tasks separately. Based on these insights, we introduce EMUFormer, a novel student-teacher distillation approach for joint semantic segmentation and monocular depth estimation as well as efficient multi-task uncertainty quantification. By implicitly leveraging the predictive uncertainties of the teacher, EMUFormer achieves new state-of-the-art results on Cityscapes and NYUv2 and additionally estimates high-quality predictive uncertainties for both tasks that are comparable or superior to a Deep Ensemble despite being an order of magnitude more efficient.

Density Uncertainty Quantification with NeRF-Ensembles: Impact of Data and Scene Constraints

Dec 22, 2023

Abstract:In the fields of computer graphics, computer vision and photogrammetry, Neural Radiance Fields (NeRFs) are a major topic driving current research and development. However, the quality of NeRF-generated 3D scene reconstructions and subsequent surface reconstructions relies heavily on the network output, particularly the density. Regarding this critical aspect, we propose to utilize NeRF-Ensembles that provide a density uncertainty estimate alongside the mean density. We demonstrate that data constraints such as low-quality images and poses lead to a degradation of the training process, increased density uncertainty and decreased predicted density. Even with high-quality input data, the density uncertainty varies based on scene constraints such as acquisition constellations, occlusions and material properties. NeRF-Ensembles not only provide a tool for quantifying the uncertainty but also exhibit two promising advantages: enhanced robustness and artifact removal. Through the utilization of NeRF-Ensembles instead of single NeRFs, small outliers are removed, yielding a smoother output with improved completeness of structures. Furthermore, applying percentile-based thresholds on density uncertainty outliers proves to be effective for the removal of large (foggy) artifacts in post-processing. We evaluate our methodology on three different datasets: (i) a synthetic benchmark dataset, (ii) a real benchmark dataset, and (iii) real data under realistic recording conditions and sensors.
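The ensemble statistics and percentile-based filtering described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' pipeline: the input layout (one list of sampled densities per ensemble member), the use of the population standard deviation as the uncertainty, the nearest-rank percentile, and the choice to zero out filtered densities are all hypothetical.

```python
import math
from statistics import mean, pstdev


def ensemble_density_stats(member_densities):
    """Per-point mean and standard deviation across ensemble members.

    `member_densities[k][i]` is the density that member k predicts at
    sample point i (an assumed layout).
    """
    per_point = list(zip(*member_densities))
    means = [mean(values) for values in per_point]
    stds = [pstdev(values) for values in per_point]
    return means, stds


def filter_by_uncertainty(means, stds, percentile=95.0):
    """Zero out densities whose uncertainty exceeds a percentile threshold."""
    ranked = sorted(stds)
    # Nearest-rank percentile; other definitions would work as well.
    rank = max(1, math.ceil(percentile / 100.0 * len(ranked)))
    threshold = ranked[rank - 1]
    return [m if s <= threshold else 0.0 for m, s in zip(means, stds)]


# Three members agree everywhere except at the third point, where one
# member hallucinates a dense "foggy" artifact.
members = [
    [1.0, 5.0, 0.0, 2.0],
    [1.0, 5.0, 8.0, 2.0],
    [1.0, 5.0, 0.0, 2.0],
]
means, stds = ensemble_density_stats(members)
cleaned = filter_by_uncertainty(means, stds, percentile=75.0)
```

Here the point on which the members disagree has the highest standard deviation and is the only one removed by the threshold.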

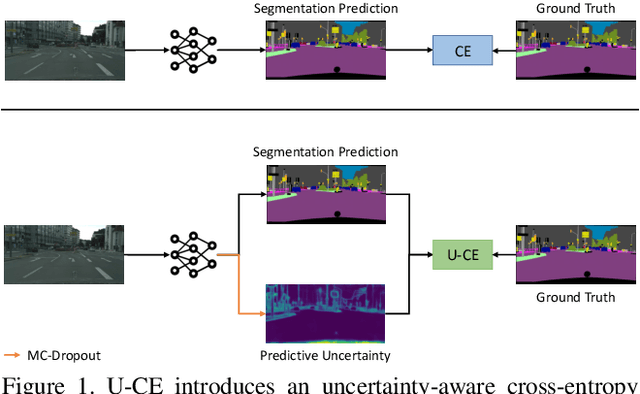

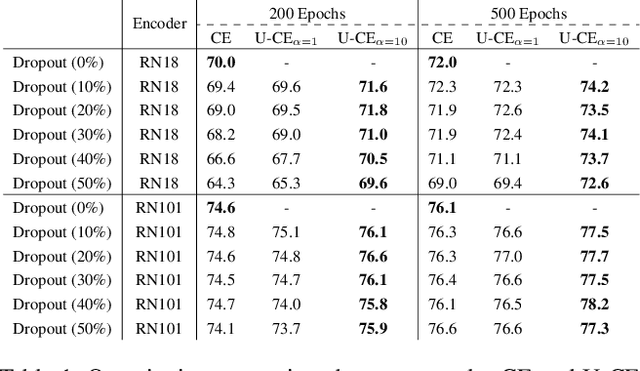

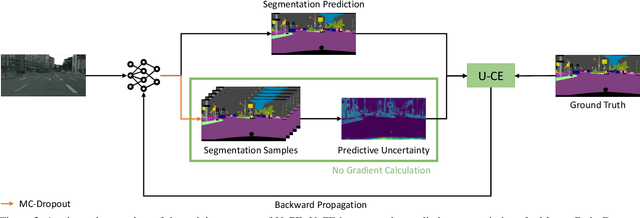

U-CE: Uncertainty-aware Cross-Entropy for Semantic Segmentation

Jul 19, 2023

Abstract:Deep neural networks have shown exceptional performance in various tasks, but their lack of robustness, reliability, and tendency to be overconfident pose challenges for their deployment in safety-critical applications like autonomous driving. In this regard, quantifying the uncertainty inherent to a model's prediction is a promising endeavour to address these shortcomings. In this work, we present a novel Uncertainty-aware Cross-Entropy loss (U-CE) that incorporates dynamic predictive uncertainties into the training process by pixel-wise weighting of the well-known cross-entropy loss (CE). Through extensive experimentation, we demonstrate the superiority of U-CE over regular CE training on two benchmark datasets, Cityscapes and ACDC, using two common backbone architectures, ResNet-18 and ResNet-101. With U-CE, we manage to train models that not only improve their segmentation performance but also provide meaningful uncertainties after training. Consequently, we contribute to the development of more robust and reliable segmentation models, ultimately advancing the state-of-the-art in safety-critical applications and beyond.
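A minimal sketch of what pixel-wise uncertainty weighting of cross-entropy can look like is given below. The weighting function `(1 + u) ** alpha`, the parameter `alpha`, and the list-based interface are assumptions made for illustration; the abstract only states that the cross-entropy loss is weighted per pixel by dynamic predictive uncertainties and does not specify the form of the weight or how the uncertainties are obtained.

```python
import math


def cross_entropy(probs, label):
    """Cross-entropy of one pixel given its class probabilities."""
    return -math.log(max(probs[label], 1e-12))


def uncertainty_weighted_ce(pixel_probs, labels, uncertainties, alpha=1.0):
    """Mean cross-entropy with an assumed per-pixel weight (1 + u) ** alpha.

    `uncertainties[i]` is a non-negative predictive uncertainty for pixel i,
    for example a standard deviation over stochastic forward passes.
    """
    weighted = [
        (1.0 + u) ** alpha * cross_entropy(p, y)
        for p, y, u in zip(pixel_probs, labels, uncertainties)
    ]
    return sum(weighted) / len(weighted)


probs = [[0.5, 0.5], [0.25, 0.75]]
labels = [0, 1]
plain = uncertainty_weighted_ce(probs, labels, [0.0, 0.0])
weighted = uncertainty_weighted_ce(probs, labels, [1.0, 0.0])
```

With all uncertainties at zero the sketch reduces to regular cross-entropy, and raising the uncertainty of a pixel increases that pixel's contribution to the loss.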

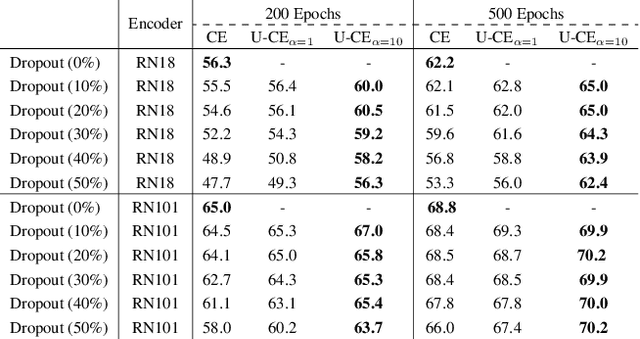

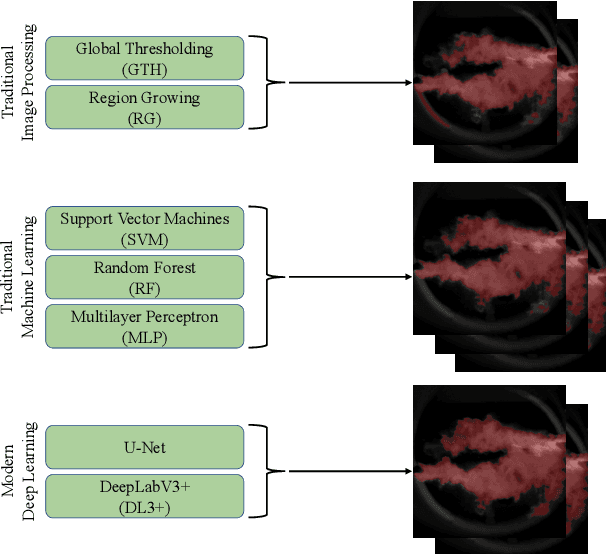

Segmentation of Industrial Burner Flames: A Comparative Study from Traditional Image Processing to Machine and Deep Learning

Jun 26, 2023

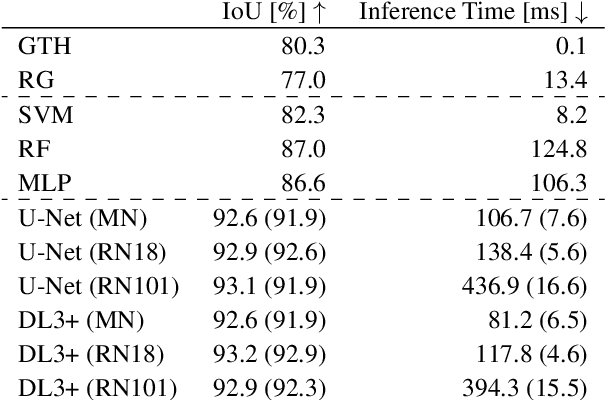

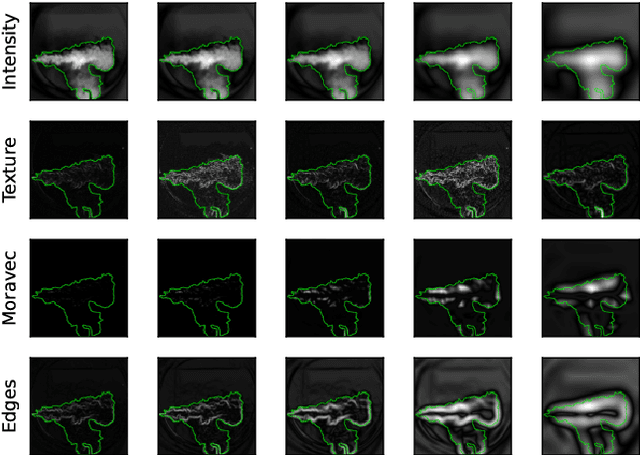

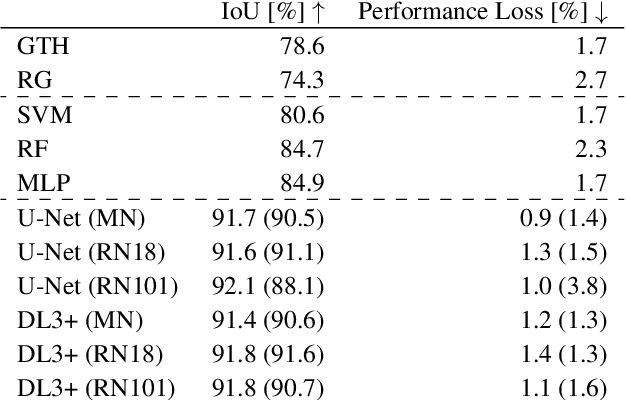

Abstract:In many industrial processes, such as power generation, chemical production, and waste management, accurately monitoring industrial burner flame characteristics is crucial for safe and efficient operation. A key step involves separating the flames from the background through binary segmentation. Decades of machine vision research have produced a wide range of possible solutions, from traditional image processing to traditional machine learning and modern deep learning methods. In this work, we present a comparative study of multiple segmentation approaches, namely Global Thresholding, Region Growing, Support Vector Machines, Random Forest, Multilayer Perceptron, U-Net, and DeepLabV3+, that are evaluated on a public benchmark dataset of industrial burner flames. We provide helpful insights and guidance for researchers and practitioners aiming to select an appropriate approach for the binary segmentation of industrial burner flames and beyond. For the highest accuracy, deep learning is the leading approach, while for fast and simple solutions, traditional image processing techniques remain a viable option.

DUDES: Deep Uncertainty Distillation using Ensembles for Semantic Segmentation

Mar 17, 2023

Abstract:Deep neural networks lack interpretability and tend to be overconfident, which poses a serious problem in safety-critical applications like autonomous driving, medical imaging, or machine vision tasks with high demands on reliability. Quantifying the predictive uncertainty is a promising endeavour to open up the use of deep neural networks for such applications. Unfortunately, currently available methods are computationally expensive. In this work, we present a novel approach for efficient and reliable uncertainty estimation which we call Deep Uncertainty Distillation using Ensembles for Segmentation (DUDES). DUDES applies student-teacher distillation with a Deep Ensemble to accurately approximate predictive uncertainties with a single forward pass while maintaining simplicity and adaptability. Experimentally, DUDES accurately captures predictive uncertainties without sacrificing performance on the segmentation task and indicates impressive capabilities of identifying wrongly classified pixels and out-of-domain samples on the Cityscapes dataset. With DUDES, we manage to simultaneously simplify and outperform previous work on Deep Ensemble-based Uncertainty Distillation.
