Steven Davy

WaveHiT-SR: Hierarchical Wavelet Network for Efficient Image Super-Resolution

Aug 27, 2025

Abstract:Transformers have demonstrated promising performance in computer vision tasks, including image super-resolution (SR). The quadratic computational complexity of window self-attention mechanisms in many transformer-based SR methods forces the use of small, fixed windows, limiting the receptive field. In this paper, we propose a new approach, called WaveHiT-SR, that embeds the wavelet transform within a hierarchical transformer framework. First, using adaptive hierarchical windows instead of static small windows allows the network to capture features across different levels and greatly improves its ability to model long-range dependencies. Second, the proposed model uses wavelet transforms to decompose images into multiple frequency subbands, allowing the network to focus on both global and local features while preserving structural details. By progressively reconstructing high-resolution images through hierarchical processing, the network reduces computational complexity without sacrificing performance. The multi-level decomposition strategy enables the network to capture fine-grained information in low-frequency components while enhancing high-frequency textures. Through extensive experimentation, we confirm the effectiveness and efficiency of WaveHiT-SR. Our refined versions of SwinIR-Light, SwinIR-NG, and SRFormer-Light deliver cutting-edge SR results, achieving higher efficiency with fewer parameters, lower FLOPs, and faster speeds.
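
To illustrate the subband decomposition the abstract refers to, here is a minimal sketch of one level of a 2D Haar wavelet transform in plain Python. The Haar basis, the function names, and the subband labels are assumptions made for illustration; the abstract does not state which wavelet WaveHiT-SR uses or how the subbands are fed into the network.

```python
def haar_dwt2(img):
    """One level of an orthonormal 2D Haar transform (illustrative only).

    img: list of rows with even height and width.
    Returns four half-resolution subbands (ll, lh, hl, hh).
    Subband naming conventions vary between libraries.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    ll, lh, hl, hh = [], [], [], []
    for i in range(0, h, 2):
        rows = ([], [], [], [])
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            rows[0].append((a + b + c + d) / 2)  # low-pass average
            rows[1].append((a + b - c - d) / 2)  # top row minus bottom row
            rows[2].append((a - b + c - d) / 2)  # left column minus right column
            rows[3].append((a - b - c + d) / 2)  # diagonal detail
        for band, row in zip((ll, lh, hl, hh), rows):
            band.append(row)
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Invert haar_dwt2, recovering the original image."""
    out = []
    for r in range(len(ll)):
        top, bottom = [], []
        for s, v, u, x in zip(ll[r], lh[r], hl[r], hh[r]):
            top += [(s + v + u + x) / 2, (s + v - u - x) / 2]
            bottom += [(s - v + u - x) / 2, (s - v - u + x) / 2]
        out += [top, bottom]
    return out
```

Because the transform is invertible, the four subbands together carry the same information as the input, which is consistent with the abstract's point about preserving structural details while separating low- and high-frequency content.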

Tailored-LLaMA: Optimizing Few-Shot Learning in Pruned LLaMA Models with Task-Specific Prompts

Oct 24, 2024

Abstract:Large language models demonstrate impressive proficiency in language understanding and generation. Nonetheless, training these models from scratch, even the least complex billion-parameter variant, demands significant computational resources, rendering it economically impractical for many organizations. With large language models functioning as general-purpose task solvers, this paper investigates their task-specific fine-tuning. We employ task-specific datasets and prompts to fine-tune two pruned LLaMA models with 5 billion and 4 billion parameters. This process uses the pre-trained weights and focuses on a subset of weights using the LoRA method. One challenge in fine-tuning the LLaMA model is crafting a precise prompt tailored to the specific task. To address this, we propose a novel approach to fine-tune the LLaMA model under two primary constraints: task specificity and prompt effectiveness. Our approach, Tailored LLaMA, first employs structural pruning to reduce the model size from 7B to 5B and 4B parameters. It then applies a carefully designed task-specific prompt and uses the LoRA method to accelerate fine-tuning. Moreover, fine-tuning a model pruned by 50% for less than one hour restores the mean accuracy of classification tasks to 95.68% at a 20% compression ratio and to 86.54% at a 50% compression ratio through few-shot learning with 50 shots. Our validation of Tailored LLaMA on these two pruned variants demonstrates that even when compressed to 50%, the models maintain over 65% of the baseline model accuracy in few-shot classification and generation tasks. These findings highlight the efficacy of our tailored approach in maintaining high performance with significantly reduced model sizes.
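
As a rough illustration of why LoRA makes fine-tuning cheap, the sketch below compares the trainable parameters of a full weight matrix with those of a rank-r LoRA update, which trains two small factors of shapes (d_out, r) and (r, d_in) in place of the full (d_out, d_in) matrix. The layer size and rank used here are illustrative assumptions; the abstract does not give the LoRA configuration used for Tailored LLaMA.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable-parameter counts for one linear layer.

    full: every entry of the d_out x d_in weight matrix is trained.
    lora: only the low-rank factors B (d_out x rank) and A (rank x d_in)
          are trained; the original weights stay frozen.
    """
    full = d_out * d_in
    lora = rank * (d_in + d_out)
    return full, lora


# Hypothetical example: a 4096 x 4096 projection with rank 8.
full, lora = lora_param_counts(4096, 4096, 8)
print(f"full: {full}, LoRA: {lora}, fraction trained: {lora / full:.4%}")
```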

SpikeBottleNet: Energy Efficient Spike Neural Network Partitioning for Feature Compression in Device-Edge Co-Inference Systems

Oct 11, 2024

Abstract:The advent of intelligent mobile applications highlights the crucial demand for deploying powerful deep learning models on resource-constrained mobile devices. An effective solution in this context is the device-edge co-inference framework, which partitions a deep neural network between a mobile device and a nearby edge server. This approach requires balancing on-device computations and communication costs, often achieved through compressed intermediate feature transmission. Conventional deep neural network architectures require continuous data processing, leading to substantial energy consumption by edge devices. This motivates exploring binary, event-driven activations enabled by spiking neural networks (SNNs), known for their extreme energy efficiency. In this research, we propose a novel architecture named SpikeBottleNet, which significantly improves on the existing architecture by integrating SNNs. A key aspect of our investigation is the development of an intermediate feature compression technique specifically designed for SNNs. This technique leverages a split computing approach for SNNs to partition complex architectures, such as Spike ResNet50. By incorporating SNNs within device-edge co-inference systems, our SpikeBottleNet achieves a bit compression ratio of up to 256x in the final convolutional layer while maintaining high classification accuracy, with only a 2.5% reduction. Moreover, compared to the baseline BottleNet++ architecture, our framework reduces the transmitted feature size at earlier splitting points by 75%. Furthermore, in terms of the energy efficiency of edge devices, our methodology surpasses the baseline by a factor of up to 98, demonstrating significant enhancements in both efficiency and performance.

Energy-Efficient Uncertainty-Aware Biomass Composition Prediction at the Edge

Apr 17, 2024

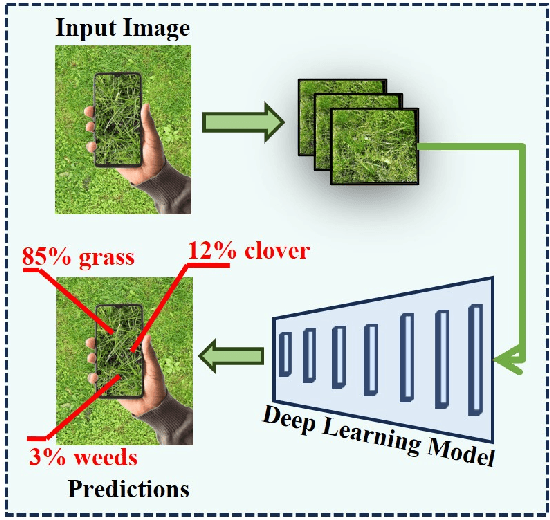

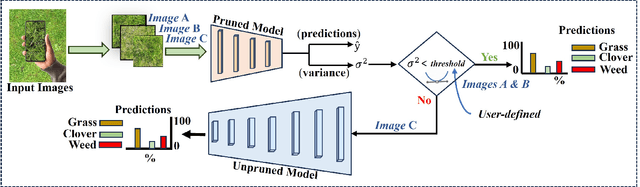

Abstract:Clover fixes nitrogen from the atmosphere into the ground, making grass-clover mixtures highly desirable for reducing external nitrogen fertilization. Herbage containing clover additionally promotes higher food intake, resulting in higher milk production. Herbage probing, however, remains largely unused because it requires time-intensive manual laboratory analysis. Without this information, farmers are unable to perform localized clover sowing or make targeted fertilization decisions. Deep learning algorithms have been proposed to estimate the dry biomass composition from images of the grass taken directly in the fields. The energy-intensive nature of deep learning, however, limits deployment on practical edge devices such as smartphones. This paper proposes to fill this gap by applying filter pruning to reduce the energy requirement of existing deep learning solutions. We report that although pruned networks are accurate on controlled, high-quality images of the grass, they struggle to generalize to real-world smartphone images that are blurry or taken from challenging angles. We address this challenge by training filter-pruned models using a variance attenuation loss so they can predict the uncertainty of their predictions. When the uncertainty exceeds a threshold, we re-infer using a more accurate unpruned model. This hybrid approach allows us to reduce energy consumption while retaining high accuracy. We evaluate our algorithm on two datasets, GrassClover and Irish clover, using an NVIDIA Jetson Nano edge device. We find that we reduce energy consumption with respect to state-of-the-art solutions by 50% on average with only 4% accuracy loss.
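
The hybrid scheme described in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions: the loss is written in the common heteroscedastic Gaussian form with a predicted log-variance, which may differ from the exact formulation in the paper, and `pruned_model`, `full_model`, and `threshold` are hypothetical placeholders for the two networks and the uncertainty cut-off.

```python
import math


def variance_attenuation_loss(y_true, mu, log_var):
    """Per-sample loss for a model that predicts a mean and a log-variance.

    A large predicted variance down-weights the squared error, while the
    0.5 * log_var term penalizes claiming high uncertainty everywhere.
    (Assumed form; not taken from the paper.)
    """
    return 0.5 * math.exp(-log_var) * (y_true - mu) ** 2 + 0.5 * log_var


def predict_with_fallback(x, pruned_model, full_model, threshold):
    """Run the cheap pruned model first; re-infer with the unpruned model
    only when the predicted variance exceeds the threshold."""
    mu, log_var = pruned_model(x)
    if math.exp(log_var) > threshold:
        return full_model(x), "full"
    return mu, "pruned"


# Hypothetical stand-ins for the two networks.
confident = lambda x: (0.42, math.log(0.01))  # low predicted variance
uncertain = lambda x: (0.42, math.log(0.50))  # high predicted variance
full = lambda x: 0.40

print(predict_with_fallback(None, confident, full, threshold=0.1))
print(predict_with_fallback(None, uncertain, full, threshold=0.1))
```

The energy saving comes from the fact that the unpruned model only runs on the inputs the pruned model flags as uncertain, so the threshold trades energy against accuracy.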

Complexity-Driven CNN Compression for Resource-constrained Edge AI

Aug 26, 2022

Abstract:Recent advances in Artificial Intelligence (AI) on the Internet of Things (IoT)-enabled network edge have realized edge intelligence in several applications such as smart agriculture, smart hospitals, and smart factories by enabling low latency and computational efficiency. However, deploying state-of-the-art Convolutional Neural Networks (CNNs) such as VGG-16 and ResNets on resource-constrained edge devices is practically infeasible due to their large number of parameters and floating-point operations (FLOPs). Thus, network pruning as a type of model compression is gaining attention for accelerating CNNs on low-power devices. State-of-the-art pruning approaches, whether structured or unstructured, do not consider the different kinds of complexity exhibited by convolutional layers, and they follow a training-pruning-retraining pipeline, which results in additional computational overhead. In this work, we propose a novel and computationally efficient pruning pipeline that exploits the inherent layer-level complexities of CNNs. Unlike typical methods, our proposed complexity-driven algorithm selects a particular layer for filter pruning based on its contribution to overall network complexity. We follow a procedure that directly trains the pruned model and avoids the computationally complex ranking and fine-tuning steps. Moreover, we define three modes of pruning, namely parameter-aware (PA), FLOPs-aware (FA), and memory-aware (MA), to introduce versatile compression of CNNs. Our results show the competitive performance of our approach in terms of accuracy and acceleration. Lastly, we present a trade-off between different resources and accuracy, which can help developers make the right decisions in resource-constrained IoT environments.
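
To make the idea of selecting a layer by its contribution to overall complexity concrete, here is a small sketch. The metric definitions (weights for PA, multiply-accumulates for FA, output feature-map size for MA), the "pick the largest share" rule, and the example layer shapes are all assumptions for illustration; the abstract does not specify how the paper defines or ranks these quantities.

```python
from dataclasses import dataclass


@dataclass
class ConvLayer:
    name: str
    kernel: int  # square kernel size
    c_in: int
    c_out: int
    out_h: int
    out_w: int

    @property
    def params(self):
        # Weights only; bias terms ignored for simplicity.
        return self.kernel ** 2 * self.c_in * self.c_out

    @property
    def flops(self):
        # Multiply-accumulates: one kernel application per output position.
        return self.params * self.out_h * self.out_w

    @property
    def memory(self):
        # Size of the output feature map, in elements.
        return self.c_out * self.out_h * self.out_w


def select_layer(layers, mode):
    """Return (layer name, share of total) for the layer that contributes
    most to the chosen complexity measure: 'PA', 'FA', or 'MA'."""
    metric = {"PA": "params", "FA": "flops", "MA": "memory"}[mode]
    total = sum(getattr(layer, metric) for layer in layers)
    best = max(layers, key=lambda layer: getattr(layer, metric))
    return best.name, getattr(best, metric) / total


# Hypothetical three-layer network.
net = [
    ConvLayer("conv1", 3, 3, 64, 32, 32),
    ConvLayer("conv2", 3, 64, 128, 16, 16),
    ConvLayer("conv3", 3, 128, 256, 4, 4),
]
for mode in ("PA", "FA", "MA"):
    print(mode, select_layer(net, mode))
```

In this toy network each mode picks a different layer, which shows why a single pruning criterion cannot serve parameter, FLOPs, and memory budgets at once.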

AI and 6G into the Metaverse: Fundamentals, Challenges and Future Research Trends

Aug 23, 2022

Abstract:Since Facebook was renamed Meta, attention, debate, and exploration have intensified around what the Metaverse is, how it works, and the possible ways to exploit it. It is anticipated that the Metaverse will be a continuum of rapidly emerging technologies, use cases, capabilities, and experiences that together make up the next evolution of the Internet. Several researchers have already surveyed the literature on artificial intelligence (AI) and wireless communications in realizing the Metaverse. However, due to the rapid emergence of technologies, there is a need for a comprehensive and in-depth review of the role of AI, 6G, and the nexus of both in realizing the immersive experiences of the Metaverse. Therefore, in this survey, we first introduce the background and ongoing progress in augmented reality (AR), virtual reality (VR), mixed reality (MR), and spatial computing, followed by the technical aspects of AI and 6G. Then, we survey the role of AI in the Metaverse by reviewing the state of the art in deep learning, computer vision, and edge AI. Next, we investigate the promising services of B5G/6G for the Metaverse, followed by identifying the role of AI in 6G networks and of 6G networks for AI in support of Metaverse applications. Finally, we list the existing and potential applications, use cases, and projects to highlight the importance of progress in the Metaverse. Moreover, to provide potential research directions, we list the challenges, research gaps, and lessons learned from the literature review of the aforementioned technologies.

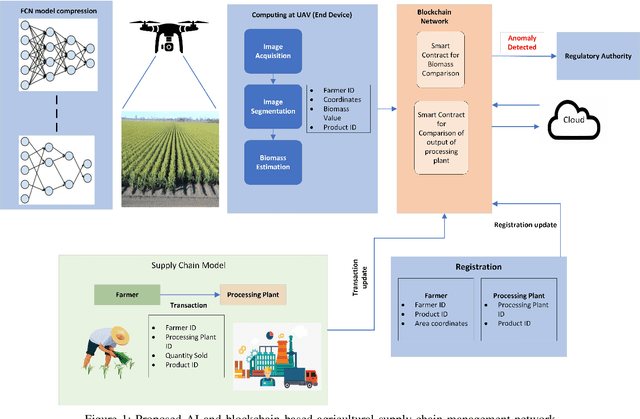

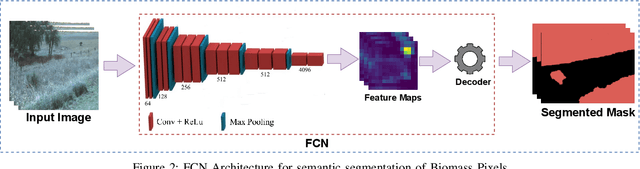

Towards On-Device AI and Blockchain for 6G enabled Agricultural Supply-chain Management

Mar 12, 2022

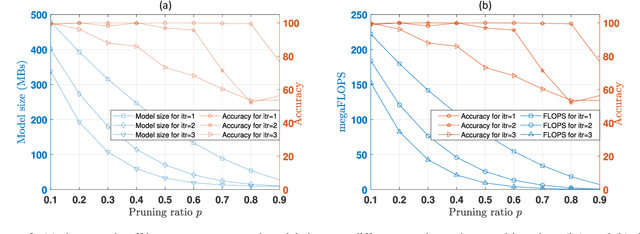

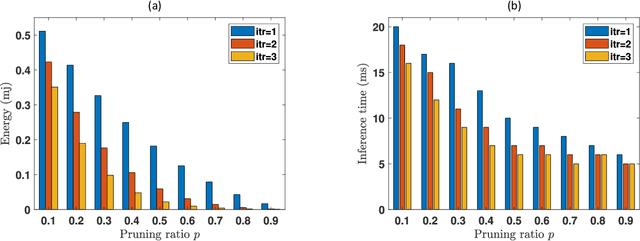

Abstract:6G envisions artificial intelligence (AI) powered solutions for enhancing the quality-of-service (QoS) in the network and ensuring optimal utilization of resources. In this work, we propose an architecture based on the combination of unmanned aerial vehicles (UAVs), AI, and blockchain for agricultural supply-chain management, with the purpose of ensuring traceability and transparency and of tracking inventories and contracts. We propose a solution to facilitate on-device AI by generating a roadmap of models with various resource-accuracy trade-offs. A fully convolutional neural network (FCN) model is used for biomass estimation from images captured by the UAV. Instead of a single compressed FCN model for deployment on the UAV, we motivate the idea of iterative pruning to provide multiple task-specific models with varying complexity and accuracy. To alleviate the impact of flight failure in a 6G-enabled dynamic UAV network, the proposed model selection strategy will assist UAVs in updating the model based on runtime resource requirements.
