Stefan Ultes

Retrieval-Augmented Neural Response Generation Using Logical Reasoning and Relevance Scoring

Oct 20, 2023

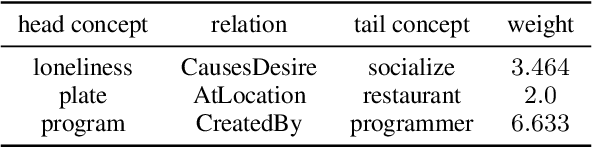

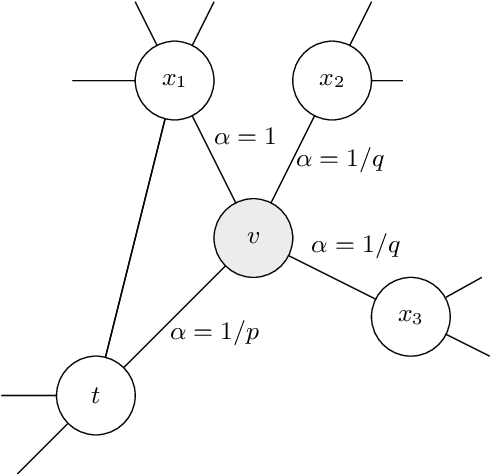

Abstract: Constructing responses in task-oriented dialogue systems typically relies on information sources such as the current dialogue state or external databases. This paper presents a novel approach to knowledge-grounded response generation that combines retrieval-augmented language models with logical reasoning. The approach revolves around a knowledge graph representing the current dialogue state and background information, and proceeds in three steps. The knowledge graph is first enriched with logically derived facts inferred using probabilistic logical programming. A neural model is then employed at each turn to score the conversational relevance of each node and edge of this extended graph. Finally, the elements with the highest relevance scores are converted to a natural language form and integrated into the prompt for the neural conversational model employed to generate the system response. We investigate the benefits of the proposed approach on two datasets (KVRET and GraphWOZ) along with a human evaluation. Experimental results show that combining (probabilistic) logical reasoning with conversational relevance scoring increases both the factuality and fluency of the responses.
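The three steps described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the single deterministic rule stands in for probabilistic logical programming, the token-overlap `relevance` function stands in for the neural relevance scorer, and all names (`derive_facts`, `relevance`, `build_prompt`), the example triples, and the prompt layout are assumptions made for illustration.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


def derive_facts(triples: List[Triple]) -> List[Triple]:
    """Step 1 (toy version): enrich the graph with one hand-written rule,
    attends(X, E) and located_in(E, R) -> is_in(X, R).
    The paper uses probabilistic logic; this rule is deterministic."""
    located = {s: o for s, p, o in triples if p == "located_in"}
    derived = [
        (s, "is_in", located[o])
        for s, p, o in triples
        if p == "attends" and o in located
    ]
    return triples + [t for t in derived if t not in triples]


def relevance(triple: Triple, utterance: str) -> float:
    """Step 2 (stand-in): fraction of the triple's tokens that occur in the
    user utterance. The paper uses a neural scorer instead."""
    words = set(utterance.lower().replace("?", " ").split())
    tokens = " ".join(triple).replace("_", " ").lower().split()
    return sum(tok in words for tok in tokens) / len(tokens)


def build_prompt(triples: List[Triple], utterance: str, k: int = 2) -> str:
    """Step 3: verbalise the k highest-scoring facts and place them in the
    prompt of the response generation model."""
    enriched = derive_facts(triples)
    ranked = sorted(enriched, key=lambda t: relevance(t, utterance), reverse=True)
    facts = [f"{s} {p.replace('_', ' ')} {o}." for s, p, o in ranked[:k]]
    return "Facts: " + " ".join(facts) + f"\nUser: {utterance}\nSystem:"


if __name__ == "__main__":
    graph = [("Anna", "attends", "standup"), ("standup", "located_in", "room_3")]
    print(build_prompt(graph, "Where is Anna?"))
```

In this toy example the derived fact about Anna's location is ranked first for the question "Where is Anna?", even though it is not stored explicitly in the original graph.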

Fostering User Engagement in the Critical Reflection of Arguments

Aug 17, 2023

Abstract: A natural way to resolve different points of view and form opinions is through exchanging arguments and knowledge. Facing the vast amount of information available on the internet, people tend to focus on information consistent with their beliefs. Especially when the issue is controversial, people often select information that does not challenge their beliefs. To support a fair and unbiased opinion-building process, we propose a chatbot system that engages in a deliberative dialogue with a human. In contrast to persuasive systems, the envisioned chatbot aims to provide a diverse and representative overview - embedded in a conversation with the user. To support a reflective and unbiased exploration of the topic, we enable the system to intervene if the user is too focused on their pre-existing opinion. To this end, we propose a model to estimate the users' reflective engagement (RUE), defined as their critical thinking and open-mindedness. We report on a user study with 58 participants to test our model and the effect of the intervention mechanism, discuss the implications of the results, and present perspectives for future work. The results show a significant effect on both user reflection and total user focus, which supports the validity of the proposed approach.

System-Initiated Transitions from Chit-Chat to Task-Oriented Dialogues with Transition Info Extractor and Transition Sentence Generator

Aug 06, 2023

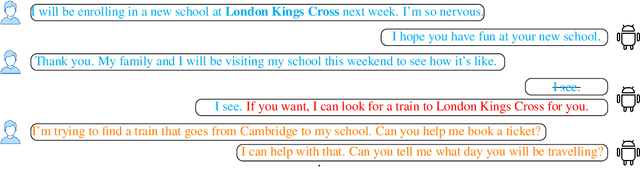

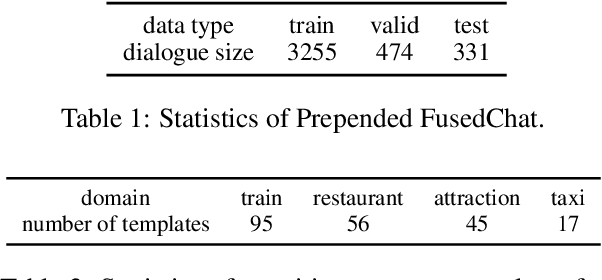

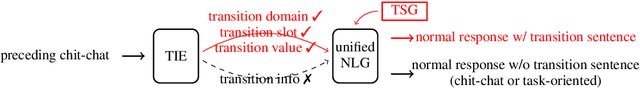

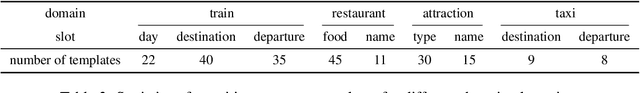

Abstract: In this work, we study dialogue scenarios that start from chit-chat but eventually switch to task-related services, and investigate how a unified dialogue model, which can engage in both chit-chat and task-oriented dialogues, takes the initiative during the dialogue mode transition from chit-chat to task-oriented in a coherent and cooperative manner. We first build a transition info extractor (TIE) that keeps track of the preceding chit-chat interaction and detects the potential user intention to switch to a task-oriented service. Meanwhile, in the unified model, a transition sentence generator (TSG) is added through efficient Adapter tuning and transition prompt learning. When the TIE successfully finds task-related information in the preceding chit-chat, such as a transition domain, the TSG is activated automatically in the unified model to initiate the transition by generating a transition sentence guided by the transition information extracted by the TIE. The experimental results show promising performance regarding the proactive transitions. We achieve an additional large improvement on the TIE model by utilizing Conditional Random Fields (CRF). The TSG can flexibly generate transition sentences while maintaining the unified capabilities of normal chit-chat and task-oriented response generation.

Unified Conversational Models with System-Initiated Transitions between Chit-Chat and Task-Oriented Dialogues

Jul 04, 2023

Abstract: Spoken dialogue systems (SDSs) have been developed separately under two different categories, task-oriented and chit-chat. The former focuses on achieving functional goals and the latter aims at creating engaging social conversations without special goals. Creating a unified conversational model that can engage in both chit-chat and task-oriented dialogue has become a promising research topic in recent years. However, the potential "initiative" that occurs when there is a change between dialogue modes within one dialogue has rarely been explored. In this work, we investigate two kinds of dialogue scenarios: one starts from chit-chat implicitly involving task-related topics and finally switches to task-oriented requests; the other starts from task-oriented interaction and eventually changes to casual chat after all requested information is provided. We contribute two efficient prompt models which can proactively generate a transition sentence to trigger system-initiated transitions in a unified dialogue model. One is a discrete prompt model trained with two discrete tokens; the other is a continuous prompt model using continuous prompt embeddings automatically generated by a classifier. We furthermore show that the continuous prompt model can also be used to guide the proactive transitions between particular domains in a multi-domain task-oriented setting.

GraphWOZ: Dialogue Management with Conversational Knowledge Graphs

Nov 23, 2022

Abstract: We present a new approach to dialogue management using conversational knowledge graphs as the core representation of the dialogue state. To this end, we introduce a new dataset, GraphWOZ, which comprises Wizard-of-Oz dialogues in which human participants interact with a robot acting as a receptionist. In contrast to most existing work on dialogue management, GraphWOZ relies on a dialogue state explicitly represented as a dynamic knowledge graph instead of a fixed set of slots. This graph is composed of a varying number of entities (such as individuals, places, events, utterances and mentions) and relations between them (such as persons being part of a group or attending an event). The graph is then regularly updated on the basis of new observations and system actions. GraphWOZ is released along with detailed manual annotations related to the user intents, system responses, and reference relations occurring in both user and system turns. Based on GraphWOZ, we present experimental results for two dialogue management tasks, namely conversational entity linking and response ranking. For conversational entity linking, we show how to connect utterance mentions to their corresponding entity in the knowledge graph with a neural model relying on a combination of both string and graph-based features. Response ranking is then performed by summarizing the relevant content of the graph into a text, which is concatenated with the dialogue history and employed as input to score possible responses to a given dialogue state.
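As a rough illustration of a dialogue state kept as a dynamic graph rather than a fixed set of slots, the following Python sketch stores typed entities and labelled relations, and builds a text input for a response ranker by concatenating a graph summary with the dialogue history. The class `GraphState`, the function `ranker_input`, the `[SEP]` separator, and the example entities are all hypothetical; the sketch summarises every relation, whereas the abstract describes summarising only the relevant content, and the actual GraphWOZ schema and models are not reproduced here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class GraphState:
    """Dialogue state as a graph: typed entities plus labelled relations."""
    entities: Dict[str, str] = field(default_factory=dict)  # id -> entity type
    relations: List[Tuple[str, str, str]] = field(default_factory=list)

    def add_entity(self, entity_id: str, entity_type: str) -> None:
        self.entities[entity_id] = entity_type

    def add_relation(self, source: str, label: str, target: str) -> None:
        # Both endpoints must already exist in the graph.
        for node in (source, target):
            if node not in self.entities:
                raise KeyError(f"unknown entity: {node}")
        self.relations.append((source, label, target))

    def summarize(self) -> str:
        """Turn every relation into a short sentence (no relevance filtering)."""
        return " ".join(f"{s} {label} {t}." for s, label, t in self.relations)


def ranker_input(state: GraphState, history: List[str]) -> str:
    """Concatenate the graph summary with the dialogue history.
    The "[SEP]" separator is an assumption, not taken from the paper."""
    return state.summarize() + " [SEP] " + " ".join(history)


if __name__ == "__main__":
    state = GraphState()
    state.add_entity("Anna", "person")
    state.add_entity("standup", "event")
    state.add_relation("Anna", "attends", "standup")
    print(ranker_input(state, ["Where is Anna?"]))
```

New entities and relations can be added at any turn, so the number of state elements is not fixed in advance, which is the contrast with slot-based states drawn in the abstract.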

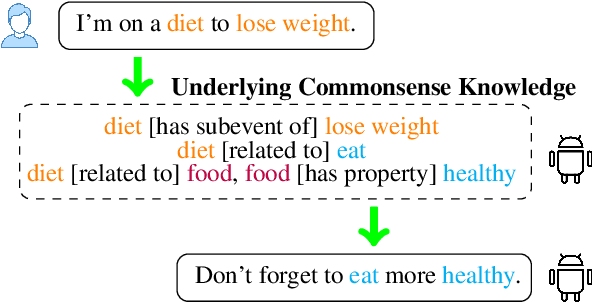

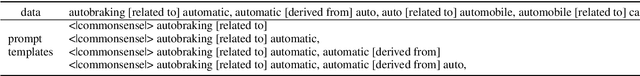

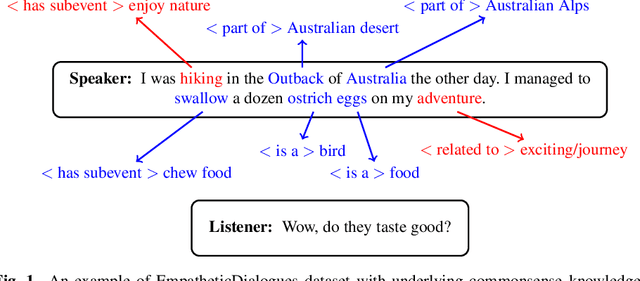

ConceptNet infused DialoGPT for Underlying Commonsense Understanding and Reasoning in Dialogue Response Generation

Sep 29, 2022

Abstract: Pre-trained conversational models still fail to capture the implicit commonsense (CS) knowledge hidden in the dialogue interaction, even though they were pre-trained on enormous datasets. In order to build a dialogue agent with CS capability, we first inject external knowledge into a pre-trained conversational model to establish basic commonsense through efficient Adapter tuning (Section 4). Secondly, we propose the "two-way learning" method to enable a bidirectional relationship between CS knowledge and sentence pairs, so that the model can generate a sentence given the CS triplets and also generate the underlying CS knowledge given a sentence (Section 5). Finally, we leverage this integrated CS capability to improve open-domain dialogue response generation, so that the dialogue agent is capable of understanding the CS knowledge hidden in the dialogue history and of inferring other related knowledge to further guide response generation (Section 6). The experimental results demonstrate that CS_Adapter fusion enables DialoGPT to generate a series of CS knowledge, and that the DialoGPT+CS_Adapter response model adapted from CommonGen training can generate underlying CS triplets that fit the dialogue context better.

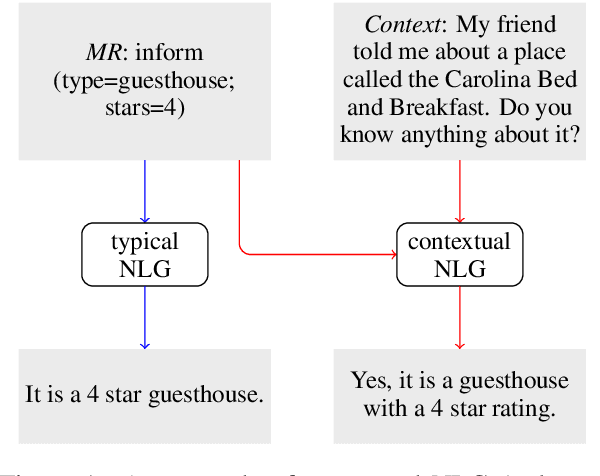

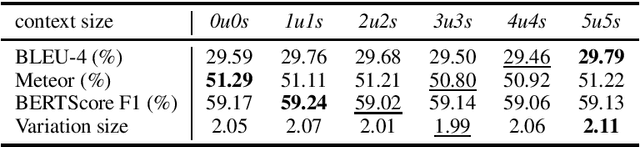

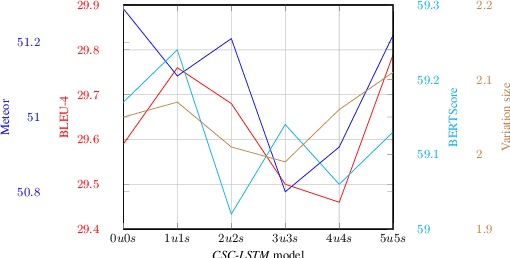

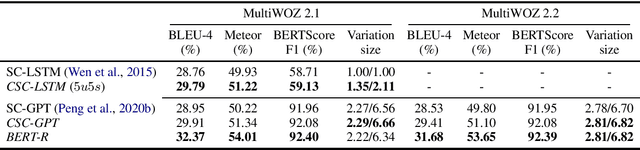

Context Matters in Semantically Controlled Language Generation for Task-oriented Dialogue Systems

Nov 28, 2021

Abstract: This work combines information about the dialogue history encoded by a pre-trained model with a meaning representation of the current system utterance to realize contextual language generation in task-oriented dialogues. We utilize the pre-trained multi-context ConveRT model for context representation in a model trained from scratch, and leverage the immediately preceding user utterance for context generation in a model adapted from the pre-trained GPT-2. Both experiments with the MultiWOZ dataset show that contextual information encoded by a pre-trained model improves the performance of response generation in both automatic metrics and human evaluation. Our contextual generator enables a higher variety of generated responses that fit the ongoing dialogue better. Analysing the context size shows that a longer context does not automatically lead to better performance, but the immediately preceding user utterance plays an essential role in contextual generation. In addition, we propose a re-ranker for the GPT-based generation model. The experiments show that the response selected by the re-ranker significantly improves the automatic metrics.

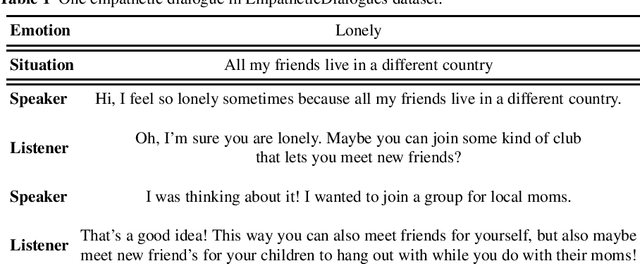

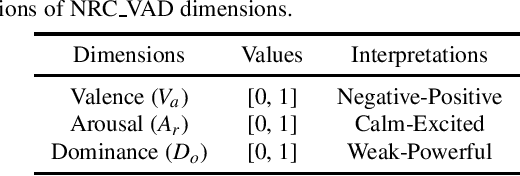

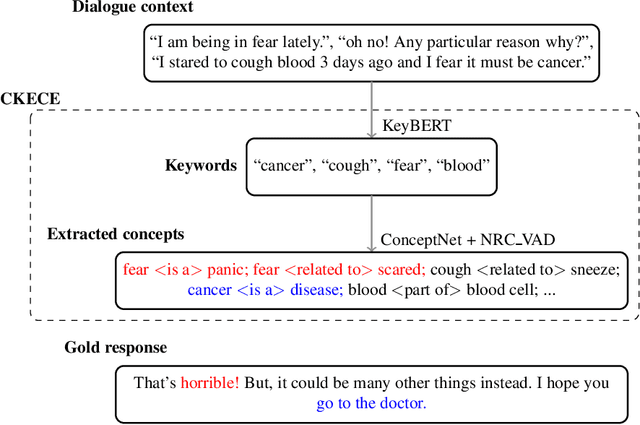

Empathetic Dialogue Generation with Pre-trained RoBERTa-GPT2 and External Knowledge

Sep 07, 2021

Abstract: One challenge for dialogue agents is to recognize the feelings of the conversation partner and respond accordingly. In this work, RoBERTa-GPT2 is proposed for empathetic dialogue generation, where the pre-trained auto-encoding RoBERTa is utilised as encoder and the pre-trained auto-regressive GPT-2 as decoder. With the combination of the pre-trained RoBERTa and GPT-2, our model achieves a new state-of-the-art emotion accuracy. To enable the empathetic ability of the RoBERTa-GPT2 model, we propose a commonsense knowledge and emotional concepts extractor, in which the commonsense and emotional concepts of the dialogue context are extracted for the GPT-2 decoder. The experimental results demonstrate that empathetic dialogue generation benefits from both the pre-trained encoder-decoder architecture and external knowledge.

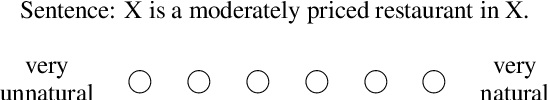

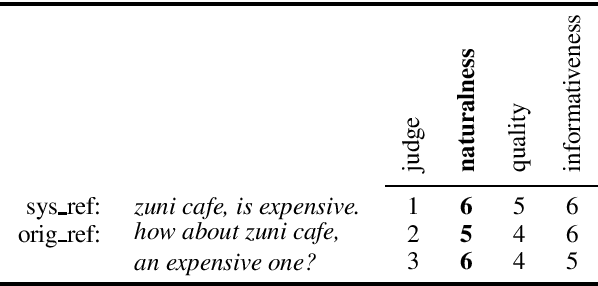

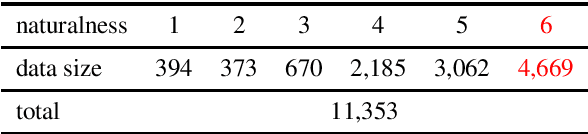

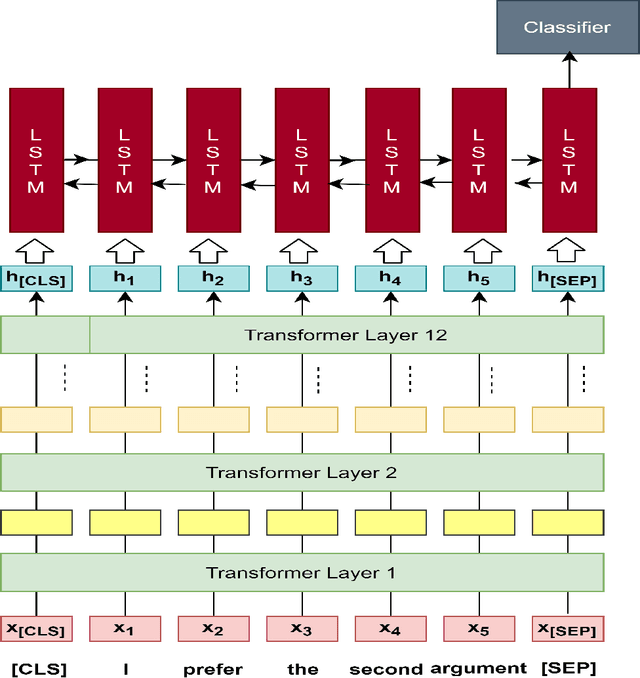

Naturalness Evaluation of Natural Language Generation in Task-oriented Dialogues using BERT

Sep 07, 2021

Abstract: This paper presents an automatic method to evaluate the naturalness of natural language generation in dialogue systems. While this evaluation was previously carried out through expensive and time-consuming human labor, we introduce the novel task of automatic naturalness evaluation of generated language. By fine-tuning the BERT model, our proposed naturalness evaluation method shows robust results and outperforms the baselines: support vector machines, bi-directional LSTMs, and BLEURT. In addition, the training speed and evaluation performance of the naturalness model are improved by transfer learning from quality and informativeness linguistic knowledge.

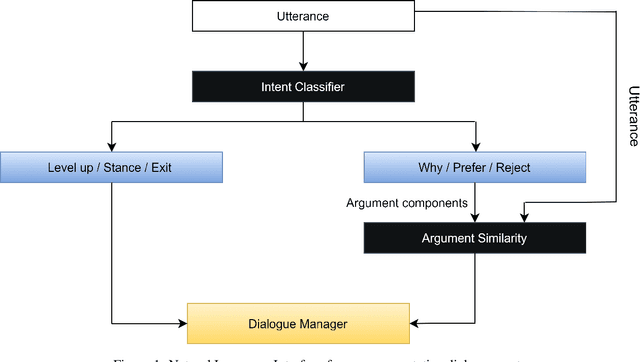

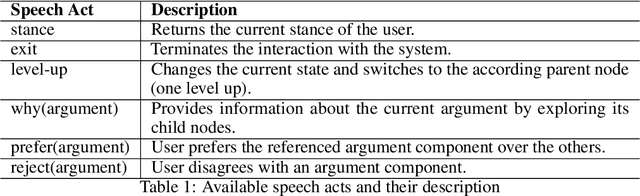

Natural Language Understanding for Argumentative Dialogue Systems in the Opinion Building Domain

Mar 03, 2021

Abstract: This paper introduces a natural language understanding (NLU) framework for argumentative dialogue systems in the information-seeking and opinion building domain. Our approach distinguishes multiple user intents and identifies the system arguments that users refer to in their natural language utterances. Our model is applicable in an argumentative dialogue system that allows users to inform themselves about a controversial topic and build their opinion towards it. In order to evaluate the proposed approach, we collect user utterances for the interaction with the respective system in an extensive online study and label them with intent and reference argument. The data collection includes multiple topics and two different user types (native speakers from the UK and non-native speakers from China). The evaluation indicates a clear advantage of the utilized techniques over baseline approaches, as well as robustness of the proposed approach to new topics and to differences in the language proficiency and cultural background of the user.
