Stefan Schneider

Transformer-based nowcasting of radar composites from satellite images for severe weather

Oct 30, 2023

Abstract:Weather radar data are critical for nowcasting and an integral component of numerical weather prediction models. While weather radar data provide valuable information at high resolution, their ground-based nature limits their availability, which impedes large-scale applications. In contrast, meteorological satellites cover larger domains but with coarser resolution. However, with the rapid advancements in data-driven methodologies and modern sensors aboard geostationary satellites, new opportunities are emerging to bridge the gap between ground- and space-based observations, ultimately leading to more skillful weather prediction with high accuracy. Here, we present a Transformer-based model for nowcasting ground-based radar image sequences using satellite data up to two hours lead time. Trained on a dataset reflecting severe weather conditions, the model predicts radar fields occurring under different weather phenomena and shows robustness against rapidly growing/decaying fields and complex field structures. Model interpretation reveals that the infrared channel centered at 10.3 $\mu m$ (C13) contains skillful information for all weather conditions, while lightning data have the highest relative feature importance in severe weather conditions, particularly in shorter lead times. The model can support precipitation nowcasting across large domains without an explicit need for radar towers, enhance numerical weather prediction and hydrological models, and provide radar proxy for data-scarce regions. Moreover, the open-source framework facilitates progress towards operational data-driven nowcasting.

Counting Fish and Dolphins in Sonar Images Using Deep Learning

Jul 24, 2020

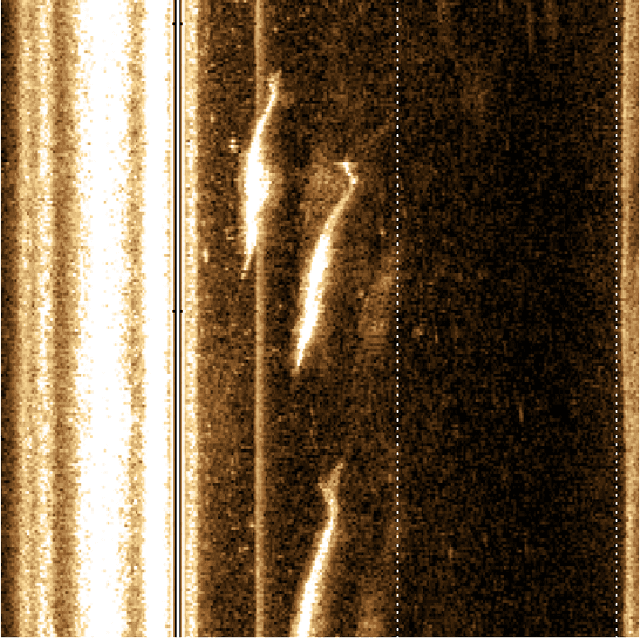

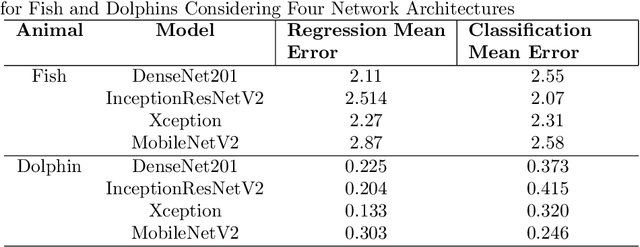

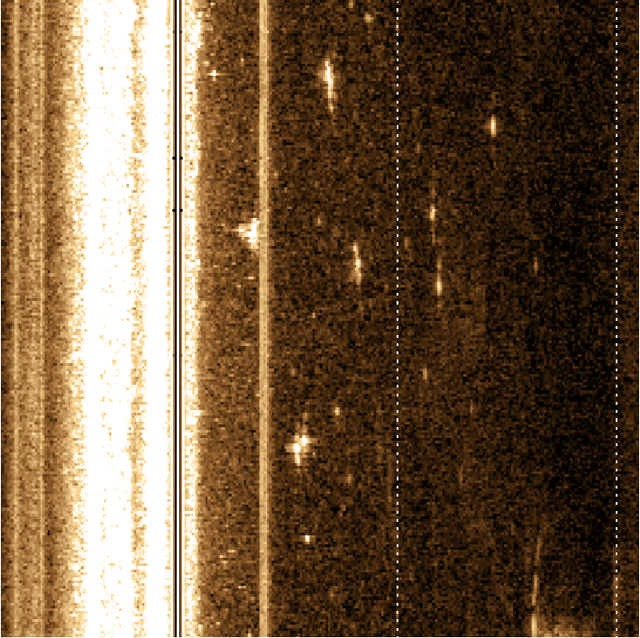

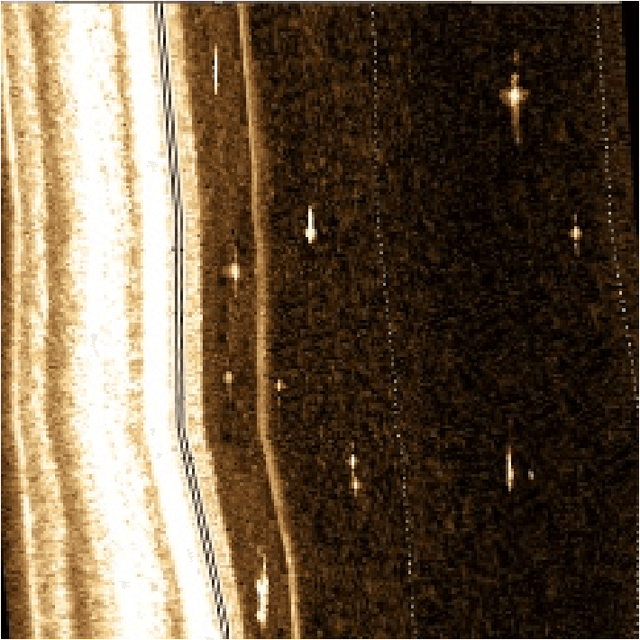

Abstract:Deep learning provides the opportunity to improve upon conflicting reports concerning the relationship between the Amazon river's fish and dolphin abundance and reduced canopy cover as a result of deforestation. Current fish and dolphin abundance estimates are obtained by on-site sampling using visual and capture/release strategies. We propose a novel approach that uses deep learning to estimate fish and dolphin abundance from sonar images taken from the back of a trolling boat. We consider a data set of 143 images containing 0-34 fish and 0-3 dolphins, provided by the Fund Amazonia research group. To overcome the data limitation, we test the capabilities of data augmentation on an unconventional 15/85 training/testing split. Using 20 training images, we simulate a gradient of data up to 25,000 images using augmented backgrounds and randomly placed and rotated crops of fish and dolphins taken from the training set. We then train four multitask network architectures: DenseNet201, InceptionNetV2, Xception, and MobileNetV2 to predict fish and dolphin numbers using two function approximation methods: regression and classification. For regression, DenseNet201 performed best for fish and Xception best for dolphins, with mean squared errors of 2.11 and 0.133 respectively. For classification, InceptionResNetV2 performed best for fish and MobileNetV2 best for dolphins, with mean errors of 2.07 and 0.245 respectively. Considering the 123 testing images, our results show the success of data simulation for limited sonar data sets. We find DenseNet201 is able to identify dolphins after approximately 5000 training images, while fish required the full 25,000. Our method can be used to lower costs and expedite the data analysis of fish and dolphin abundance to real-time along the Amazon river and river systems worldwide.
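The simulation step described in the abstract (pasting randomly placed and rotated crops onto a background to produce labelled training images) might look roughly like the sketch below. It is an illustration under stated assumptions, not the authors' pipeline: the function name `simulate_image`, the restriction to 90-degree rotations, the grayscale 2D arrays, and the pixel-wise maximum used for blending are all hypothetical choices.

```python
import numpy as np


def simulate_image(background, crops, n_objects, rng):
    """Paste n_objects randomly chosen, randomly rotated crops onto a copy of background.

    Assumptions (not from the paper): images are 2D grayscale arrays, every crop
    fits inside the background in both orientations, and rotations are limited
    to multiples of 90 degrees.
    """
    canvas = background.copy()
    height, width = canvas.shape
    for _ in range(n_objects):
        crop = crops[int(rng.integers(len(crops)))]
        crop = np.rot90(crop, k=int(rng.integers(4)))  # 0, 90, 180 or 270 degrees
        ch, cw = crop.shape
        top = int(rng.integers(0, height - ch + 1))
        left = int(rng.integers(0, width - cw + 1))
        region = canvas[top:top + ch, left:left + cw]
        # Keep the brighter pixel so the pasted object overlays the background.
        canvas[top:top + ch, left:left + cw] = np.maximum(region, crop)
    # The object count doubles as the regression or classification label.
    return canvas, n_objects


rng = np.random.default_rng(0)
background = np.zeros((64, 64))
fish_crops = [np.ones((5, 3))]  # stand-in for crops cut from real sonar images
image, label = simulate_image(background, fish_crops, n_objects=3, rng=rng)
```

Because the label is known by construction, a small set of real crops can be expanded into an arbitrarily large labelled training set; pasted objects may overlap, which is one reason counting from such images is not trivial.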

Similarity Learning Networks for Animal Individual Re-Identification - Beyond the Capabilities of a Human Observer

Feb 21, 2019

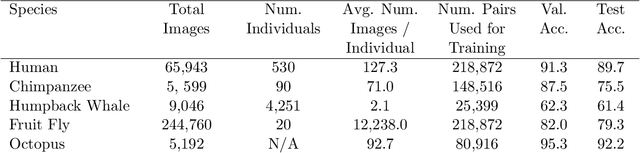

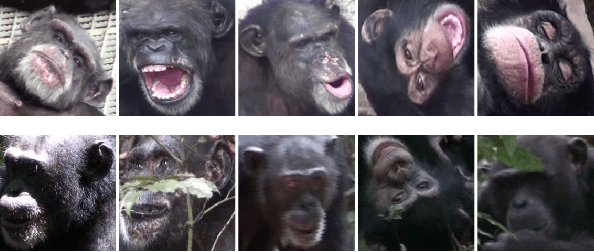

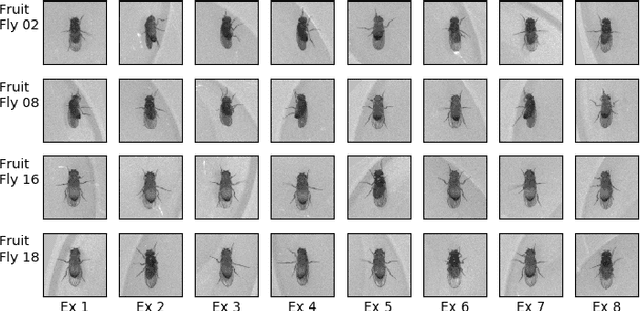

Abstract:The ability of a researcher to re-identify (re-ID) an animal individual upon re-encounter is fundamental for addressing a broad range of questions in the study of ecosystem function, community and population dynamics, and behavioural ecology. Tagging animals during mark and recapture studies is the most common method for reliable animal re-ID; however, camera traps are a desirable alternative, requiring less labour and much less intrusion while allowing prolonged and continuous monitoring of an environment. Despite these advantages, the analyses of camera traps and video for re-ID by humans are criticized for their biases related to human judgment and inconsistencies between analyses. Recent years have witnessed the emergence of deep learning systems which re-ID humans based on image and video data with near perfect accuracy. Despite this success, there are limited examples of this approach for animal re-ID. Here, we demonstrate the viability of novel deep similarity learning methods on five species: humans, chimpanzees, humpback whales, octopus and fruit flies. Our implementation demonstrates the generality of this framework as the same process provides accurate results beyond the capabilities of a human observer. In combination with a species object detection model, this methodology will allow ecologists with camera/video trap data to re-identify individuals that exit and re-enter the camera frame. Our expectation is that this is just the beginning of a major trend that could stand to revolutionize the analysis of camera trap data and, ultimately, our approach to animal ecology.

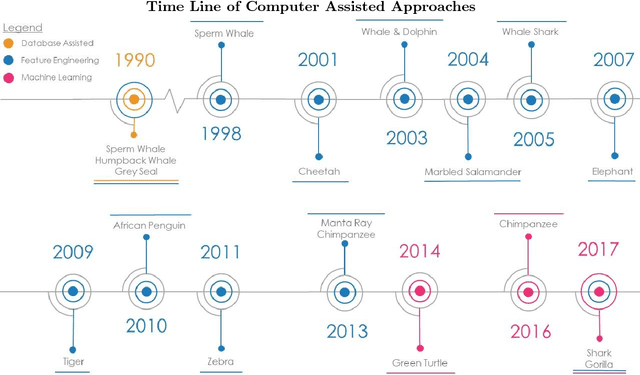

Past, Present, and Future Approaches Using Computer Vision for Animal Re-Identification from Camera Trap Data

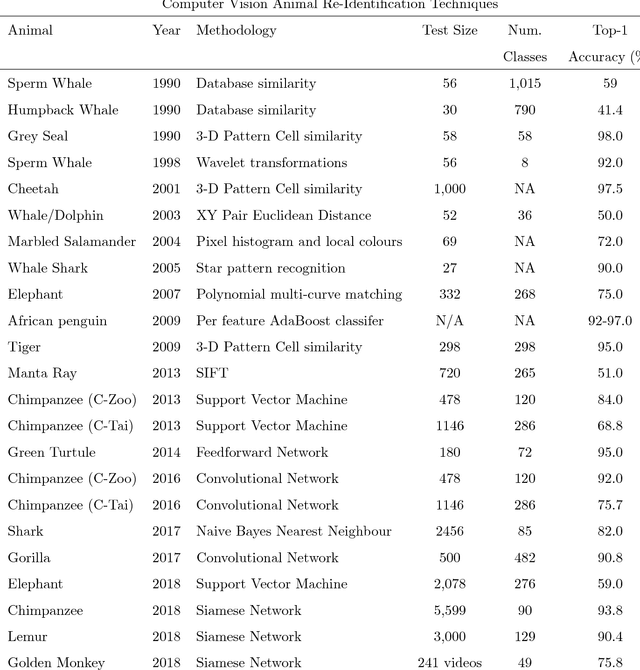

Nov 19, 2018

Abstract:The ability of a researcher to re-identify (re-ID) an individual animal upon re-encounter is fundamental for addressing a broad range of questions in the study of ecosystem function, community and population dynamics, and behavioural ecology. In this review, we describe a brief history of camera traps for re-ID, present a collection of computer vision feature engineering methodologies previously used for animal re-ID, provide an introduction to the underlying mechanisms of deep learning relevant to animal re-ID, highlight the success of deep learning methods for human re-ID, describe the few ecological studies currently utilizing deep learning for camera trap analyses, and offer our predictions for near future methodologies based on the rapid development of deep learning methods. By utilizing novel deep learning methods for object detection and similarity comparisons, ecologists can extract animals from image/video data and train deep learning classifiers to re-ID animal individuals beyond the capabilities of a human observer. This methodology will allow ecologists with camera/video trap data to re-identify individuals that exit and re-enter the camera frame. Our expectation is that this is just the beginning of a major trend that could stand to revolutionize the analysis of camera trap data and, ultimately, our approach to animal ecology.

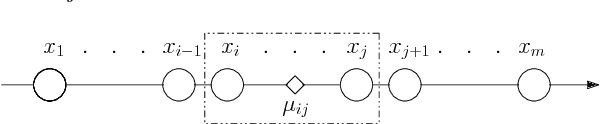

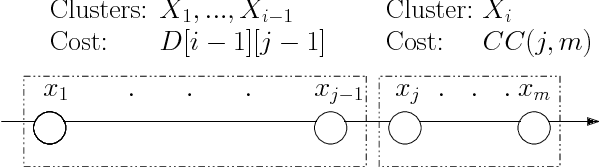

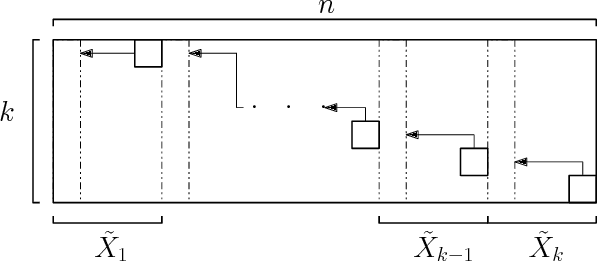

Fast Exact k-Means, k-Medians and Bregman Divergence Clustering in 1D

Apr 25, 2018

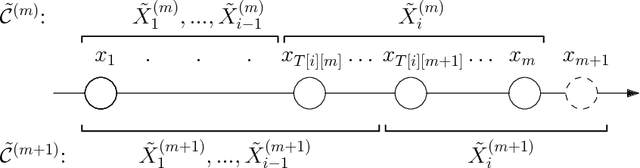

Abstract:The $k$-Means clustering problem on $n$ points is NP-Hard for any dimension $d\ge 2$; however, for the 1D case there exist exact polynomial-time algorithms. Previous literature reported an $O(kn^2)$ time dynamic programming algorithm that uses $O(kn)$ space. It turns out that the problem was considered under a different name more than twenty years ago. We present all the existing work that had been overlooked and compare the various solutions theoretically. Moreover, we show how to reduce the space usage for some of them, as well as generalize them to data structures that can quickly report an optimal $k$-Means clustering for any $k$. Finally, we also generalize all the algorithms to work for the absolute distance and for any Bregman Divergence. We complement our theoretical contributions by experiments that compare the practical performance of the various algorithms.
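For orientation, the baseline $O(kn^2)$ time, $O(kn)$ space dynamic program mentioned in the abstract can be sketched as follows. This is a minimal illustration of that textbook baseline, not the faster algorithms the paper surveys; the function name `kmeans_1d_dp` and its return format are assumptions made for this example. It relies on the fact that in 1D an optimal clustering consists of contiguous runs of the sorted points.

```python
from typing import List, Sequence, Tuple


def kmeans_1d_dp(points: Sequence[float], k: int) -> Tuple[float, List[List[float]]]:
    """Exact 1D k-means via an O(k n^2)-time, O(k n)-space dynamic program."""
    xs = sorted(points)
    n = len(xs)
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= len(points)")

    # Prefix sums of x and x^2 give any interval's squared error in O(1).
    pre = [0.0] * (n + 1)
    pre_sq = [0.0] * (n + 1)
    for i, x in enumerate(xs):
        pre[i + 1] = pre[i] + x
        pre_sq[i + 1] = pre_sq[i] + x * x

    def cost(i: int, j: int) -> float:
        """Sum of squared distances of xs[i:j] to their mean (requires i < j)."""
        s = pre[j] - pre[i]
        return (pre_sq[j] - pre_sq[i]) - s * s / (j - i)

    inf = float("inf")
    # dp[c][j]: best cost of splitting the first j sorted points into c clusters.
    dp = [[inf] * (n + 1) for _ in range(k + 1)]
    cut = [[0] * (n + 1) for _ in range(k + 1)]
    dp[0][0] = 0.0
    for c in range(1, k + 1):
        for j in range(c, n + 1):
            for i in range(c - 1, j):  # the last cluster is xs[i:j]
                cand = dp[c - 1][i] + cost(i, j)
                if cand < dp[c][j]:
                    dp[c][j] = cand
                    cut[c][j] = i

    # Walk the stored cut points backwards to recover the clusters.
    clusters: List[List[float]] = []
    j = n
    for c in range(k, 0, -1):
        i = cut[c][j]
        clusters.append(xs[i:j])
        j = i
    clusters.reverse()
    return dp[k][n], clusters


total_cost, clusters = kmeans_1d_dp([1, 2, 3, 10, 11, 12], k=2)
```

The table `dp` also holds the optimal cost for every smaller number of clusters, which hints at why one run can answer queries for any $k$ up to the chosen maximum.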

Deep Learning Object Detection Methods for Ecological Camera Trap Data

Mar 28, 2018

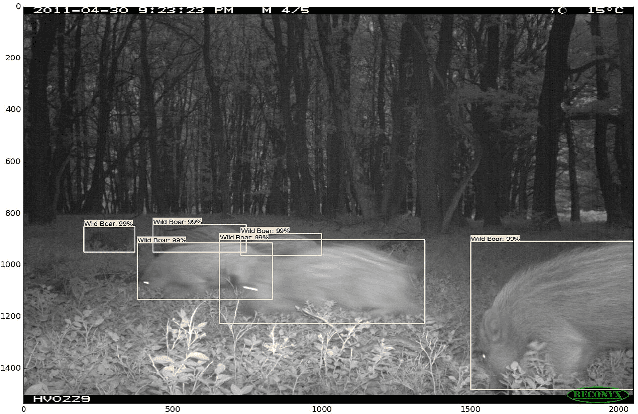

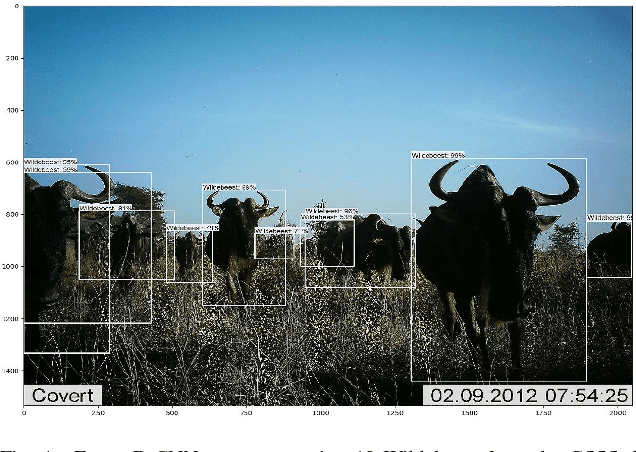

Abstract:Deep learning methods for computer vision tasks show promise for automating the data analysis of camera trap images. Ecological camera traps are a common approach for monitoring an ecosystem's animal population, as they provide continual insight into an environment without being intrusive. However, the analysis of camera trap images is expensive, labour-intensive, and time-consuming. Recent advances in the field of deep learning for object detection show promise towards automating the analysis of camera trap images. Here, we demonstrate their capabilities by training and comparing two deep learning object detection classifiers, Faster R-CNN and YOLO v2.0, to identify, quantify, and localize animal species within camera trap images using the Reconyx Camera Trap and the self-labeled Gold Standard Snapshot Serengeti data sets. When trained on large labeled datasets, object recognition methods have shown success. We demonstrate their use, in the context of realistically sized ecological data sets, by testing if object detection methods are applicable for ecological research scenarios when utilizing transfer learning. Faster R-CNN outperformed YOLO v2.0 with average accuracies of 93.0\% and 76.7\% on the two data sets, respectively. Our findings show promising steps towards the automation of the laborious task of labeling camera trap images, which can be used to improve our understanding of the population dynamics of ecosystems across the planet.
