Stefan Engblom

Robust and integrative Bayesian neural networks for likelihood-free parameter inference

Feb 12, 2021

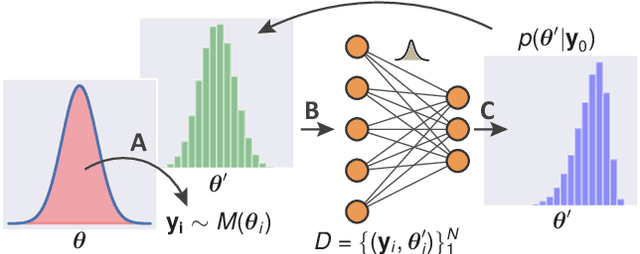

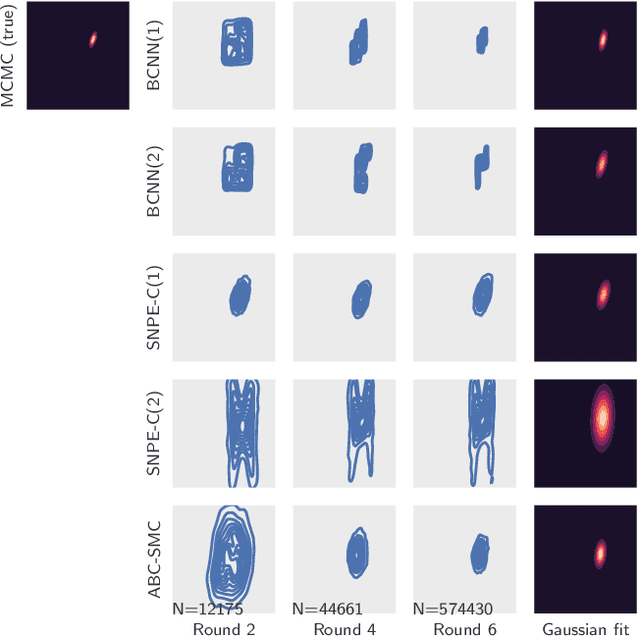

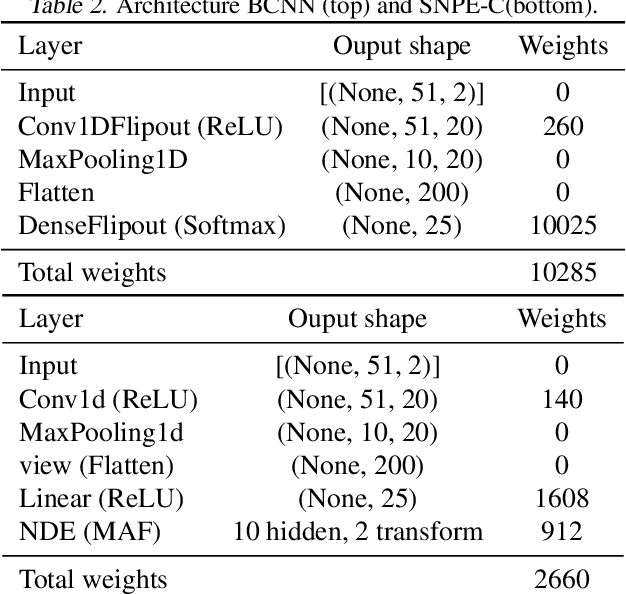

Abstract: State-of-the-art neural network-based methods for learning summary statistics have delivered promising results for simulation-based likelihood-free parameter inference. Existing approaches require density estimation as a post-processing step building upon deterministic neural networks, and do not take network prediction uncertainty into account. This work proposes a robust integrated approach that learns summary statistics using Bayesian neural networks, and directly estimates the posterior density using categorical distributions. An adaptive sampling scheme selects simulation locations to efficiently and iteratively refine the predictive posterior of the network conditioned on observations. This allows for more efficient and robust convergence on comparatively large prior spaces. We demonstrate our approach on benchmark examples and compare against related methods.

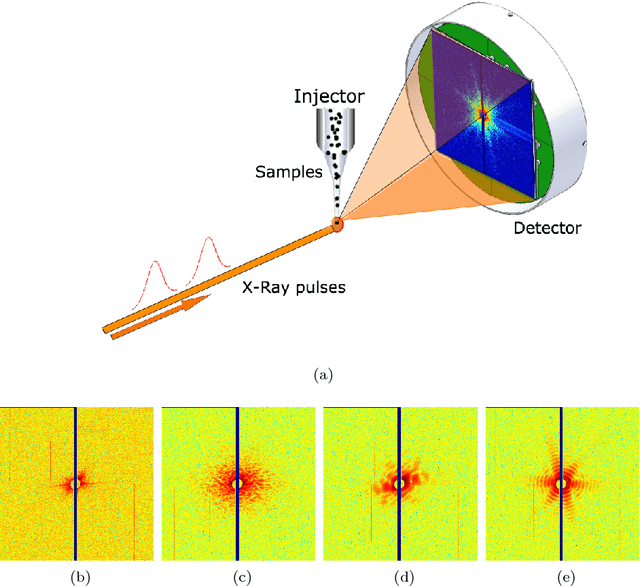

Flash X-ray diffraction imaging in 3D: a proposed analysis pipeline

Oct 30, 2019

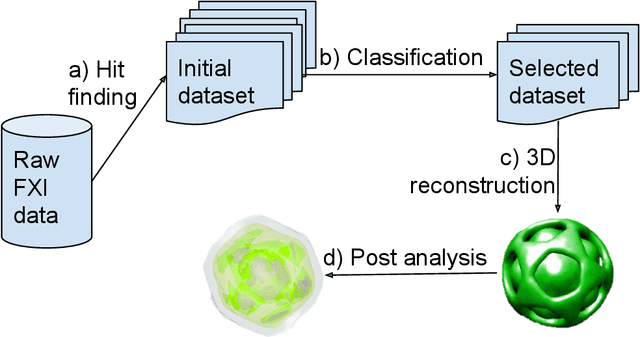

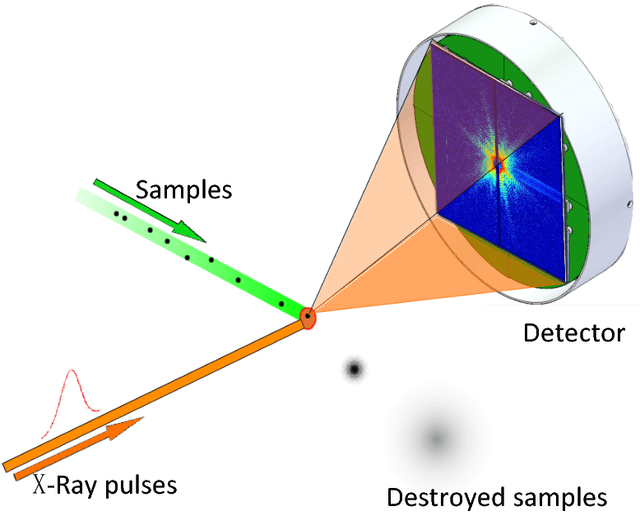

Abstract: Modern Flash X-ray diffraction imaging (FXI) acquires diffraction signals from single biomolecules at a high repetition rate from X-ray Free Electron Lasers (XFELs), easily obtaining millions of 2D diffraction patterns from a single experiment. Due to the stochastic nature of FXI experiments and the massive volumes of data, retrieving 3D electron densities from raw 2D diffraction patterns is a challenging and time-consuming task. We propose a semi-automatic data analysis pipeline for FXI experiments, which includes four steps: hit finding and preliminary filtering, pattern classification, 3D Fourier reconstruction, and post-analysis. We also include a recently developed bootstrap methodology in the post-analysis step for uncertainty analysis and quality control. To achieve the best possible resolution, we further suggest using background subtraction, signal windowing, and convex optimization techniques when retrieving the Fourier phases in the post-analysis step. As an application example, we quantified the 3D electron structure of the PR772 virus using the proposed data-analysis pipeline. The retrieved structure was above the detector-edge resolution and clearly showed the pseudo-icosahedral capsid of the PR772.

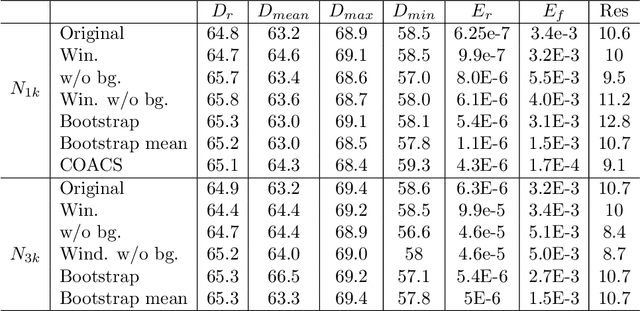

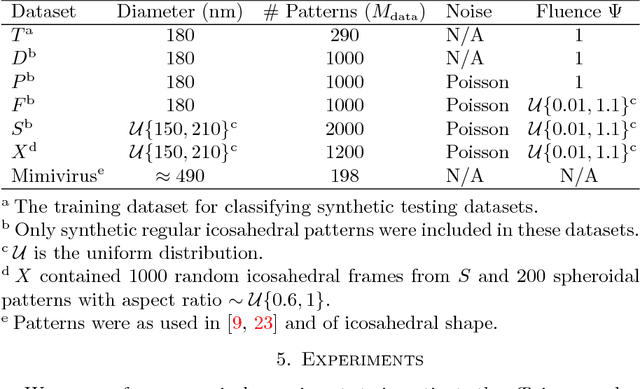

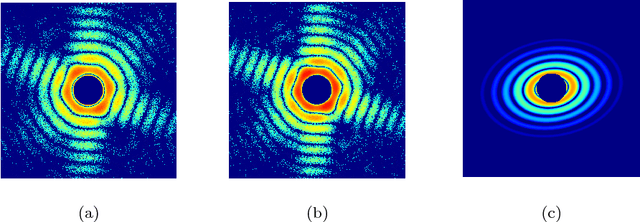

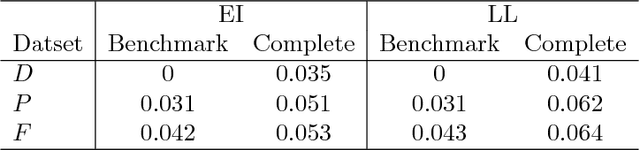

Supervised Classification Methods for Flash X-ray single particle diffraction Imaging

Oct 25, 2018

Abstract: Current Flash X-ray single-particle diffraction Imaging (FXI) experiments, which operate on modern X-ray Free Electron Lasers (XFELs), can record millions of interpretable diffraction patterns from individual biomolecules per day. Due to the stochastic nature of the XFELs, those patterns will to a varying degree include scattering from contaminated samples. The heterogeneity of the sample biomolecules is also unavoidable and complicates data processing. Reducing the data volumes and selecting high-quality single-molecule patterns are therefore critical steps in the experimental set-up. In this paper, we present two supervised template-based learning methods for classifying FXI patterns. Our Eigen-Image and Log-Likelihood classifiers can find the best-matched template for a single-molecule pattern within a few milliseconds. Both are also straightforward to parallelize so as to fully match the XFEL repetition rate, thereby enabling processing on site.
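As a rough illustration of template matching by log-likelihood, the sketch below scores a pattern of photon counts against a set of templates and picks the best match. The Poisson noise model, the function names `poisson_log_likelihood` and `best_template`, and the toy four-pixel data are all assumptions made for this example; the abstract does not specify how the paper's classifier is defined.

```python
import math


def poisson_log_likelihood(pattern, template):
    """Log-likelihood of integer photon counts given expected counts.

    Assumes independent Poisson noise per pixel (an assumption of this
    sketch) and strictly positive template intensities.
    """
    total = 0.0
    for k, lam in zip(pattern, template):
        # log P(k | lam) = k*log(lam) - lam - log(k!)
        total += k * math.log(lam) - lam - math.lgamma(k + 1)
    return total


def best_template(pattern, templates):
    """Return the index of the template with the highest log-likelihood."""
    scores = [poisson_log_likelihood(pattern, t) for t in templates]
    return max(range(len(templates)), key=scores.__getitem__)


# Toy data: two hypothetical templates with four pixels each.
templates = [
    [5.0, 1.0, 0.5, 0.2],
    [0.2, 0.5, 1.0, 5.0],
]
pattern = [6, 1, 0, 0]
best = best_template(pattern, templates)
```

Each pattern is scored independently of the others, which is the property that makes this kind of classifier easy to parallelize across incoming patterns.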

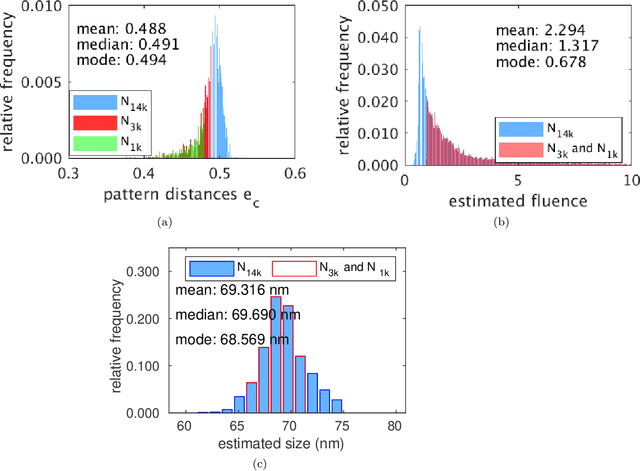

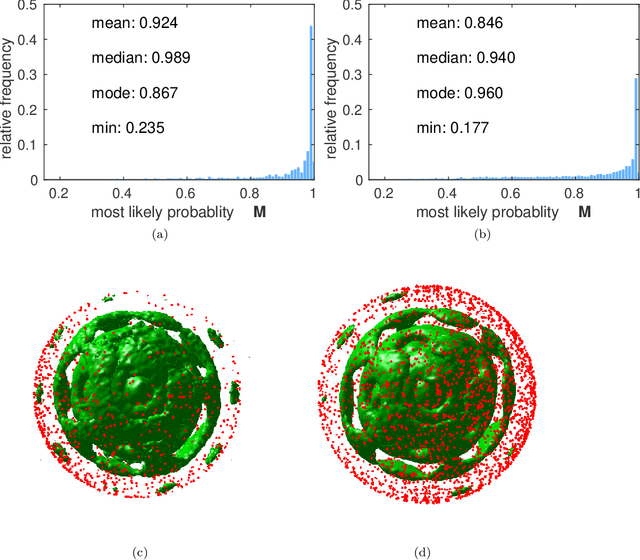

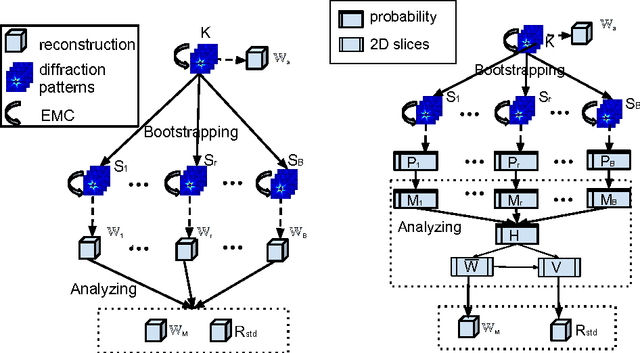

Assessing Uncertainties in X-ray Single-particle Three-dimensional reconstructions

Jan 02, 2017

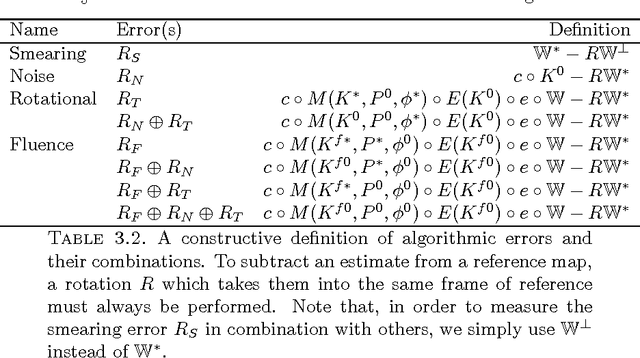

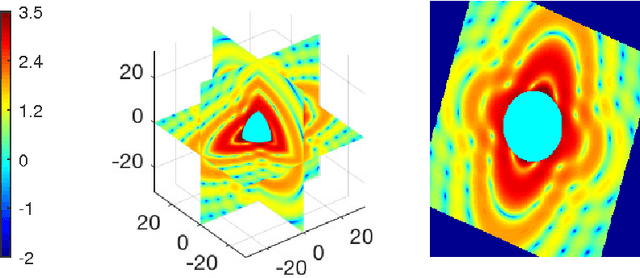

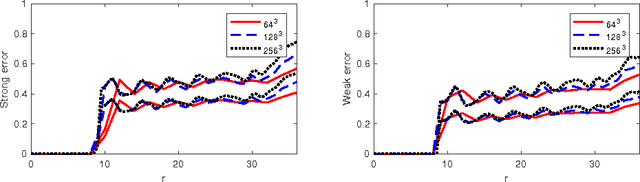

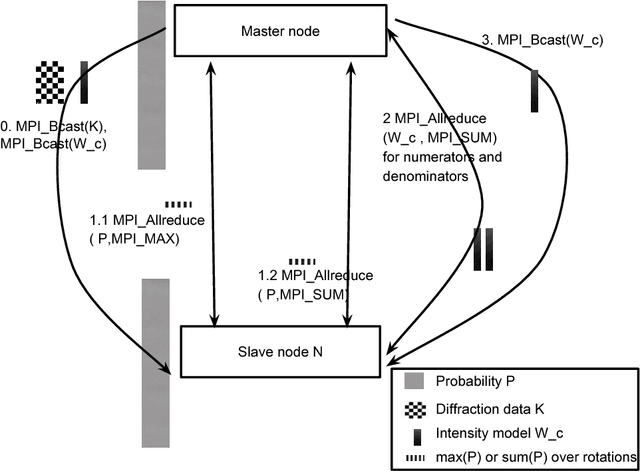

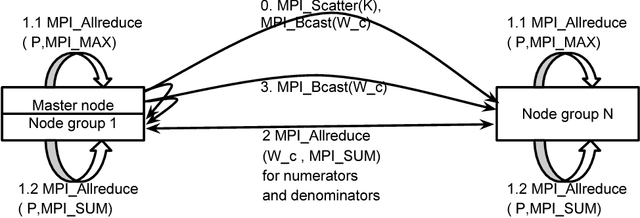

Abstract: Modern technology for producing extremely bright and coherent X-ray laser pulses provides the possibility to acquire a large number of diffraction patterns from individual biological nanoparticles, including proteins, viruses, and DNA. These two-dimensional diffraction patterns can be practically reconstructed and retrieved down to a resolution of a few ångströms. In principle, a sufficiently large collection of diffraction patterns will contain the required information for a full three-dimensional reconstruction of the biomolecule. The computational methodology for this reconstruction task is still under development and highly resolved reconstructions have not yet been produced. We analyze the Expansion-Maximization-Compression scheme, the current state-of-the-art approach for this very challenging application, by isolating different sources of uncertainty. Through numerical experiments on synthetic data we evaluate their respective impact. We reach conclusions of relevance for handling actual experimental data, and point out certain improvements to the underlying estimation algorithm. We also introduce a practically applicable computational methodology in the form of bootstrap procedures for assessing reconstruction uncertainty in the real data case. We evaluate the sharpness of this approach and argue that this type of procedure will be critical in the near future when handling the increasing amount of data.

* 21 pages
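The general idea of a bootstrap procedure for uncertainty assessment can be sketched as follows: resample the data set with replacement, rerun the estimator on each resample, and read the spread of the results as an uncertainty estimate. This is a generic textbook bootstrap and not the paper's procedure; the function name `bootstrap_std` is hypothetical, and the mean of noisy scalars is only a toy stand-in for a three-dimensional reconstruction.

```python
import random
import statistics


def bootstrap_std(samples, estimator, n_resamples=200, seed=0):
    """Bootstrap estimate of the standard deviation of estimator(samples)."""
    rng = random.Random(seed)
    n = len(samples)
    replicates = []
    for _ in range(n_resamples):
        # Draw n items with replacement from the original data set.
        resample = [samples[rng.randrange(n)] for _ in range(n)]
        replicates.append(estimator(resample))
    return statistics.stdev(replicates)


# Toy stand-in for a reconstruction: the mean of noisy scalar measurements.
data_rng = random.Random(1)
data = [data_rng.gauss(10.0, 2.0) for _ in range(400)]
spread = bootstrap_std(data, statistics.fmean)
```

For this toy case the spread should land near the analytical standard error of the mean, 2.0 / sqrt(400) = 0.1, which gives a simple sanity check before replacing the estimator with something more expensive.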

Machine learning for ultrafast X-ray diffraction patterns on large-scale GPU clusters

Dec 16, 2014

Abstract: The classical method of determining the atomic structure of complex molecules by analyzing diffraction patterns is currently undergoing drastic developments. Modern techniques for producing extremely bright and coherent X-ray lasers allow a beam of streaming particles to be intercepted and hit by an ultrashort high-energy X-ray beam. Through machine learning methods the data thus collected can be transformed into a three-dimensional volumetric intensity map of the particle itself. The computational complexity associated with this problem is so high that clusters of data-parallel accelerators are required. We have implemented a distributed and highly efficient algorithm for inversion of large collections of diffraction patterns targeting clusters of hundreds of GPUs. With the expected enormous amount of diffraction data to be produced in the foreseeable future, this is the required scale to approach real-time processing of data at the beam site. Using both real and synthetic data we look at the scaling properties of the application and discuss the overall computational viability of this exciting and novel imaging technique.