Stefan Brandl

Simultaneous Unlearning of Multiple Protected User Attributes From Variational Autoencoder Recommenders Using Adversarial Training

Oct 28, 2024

Abstract: In widely used neural network-based collaborative filtering models, users' history logs are encoded into latent embeddings that represent the users' preferences. In this setting, the models are capable of mapping users' protected attributes (e.g., gender or ethnicity) from these user embeddings even without explicit access to them, resulting in models that may treat specific demographic user groups unfairly and raise privacy issues. While prior work has approached the removal of a single protected attribute of a user at a time, multiple attributes might come into play in real-world scenarios. In the work at hand, we present AdvXMultVAE which aims to unlearn multiple protected attributes (exemplified by gender and age) simultaneously to improve fairness across demographic user groups. For this purpose, we couple a variational autoencoder (VAE) architecture with adversarial training (AdvMultVAE) to support simultaneous removal of the users' protected attributes with continuous and/or categorical values. Our experiments on two datasets, LFM-2b-100k and Ml-1m, from the music and movie domains, respectively, show that our approach can yield better results than its singular removal counterparts (based on AdvMultVAE) in effectively mitigating demographic biases whilst improving the anonymity of latent embeddings.

The Importance of Cognitive Biases in the Recommendation Ecosystem

Aug 22, 2024

Abstract: Cognitive biases have been studied in psychology, sociology, and behavioral economics for decades. Traditionally, they have been considered a negative human trait that leads to inferior decision-making, reinforces stereotypes, or can be exploited to manipulate consumers. We argue that cognitive biases also manifest in different parts of the recommendation ecosystem and at different stages of the recommendation process. More importantly, we contest this traditional detrimental perspective on cognitive biases and claim that certain cognitive biases can be beneficial when accounted for by recommender systems. Concretely, we provide empirical evidence that biases such as feature-positive effect, Ikea effect, and cultural homophily can be observed in various components of the recommendation pipeline, including input data (such as ratings or side information), recommendation algorithm or model (and consequently recommended items), and user interactions with the system. In three small experiments covering recruitment and entertainment domains, we study the pervasiveness of the aforementioned biases. We ultimately advocate for a prejudice-free consideration of cognitive biases to improve user and item models as well as recommendation algorithms.

Analyzing Item Popularity Bias of Music Recommender Systems: Are Different Genders Equally Affected?

Aug 16, 2021

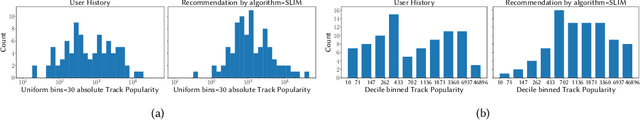

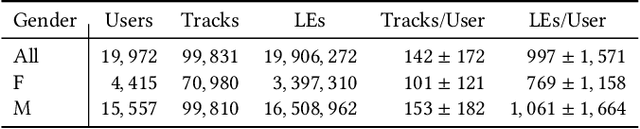

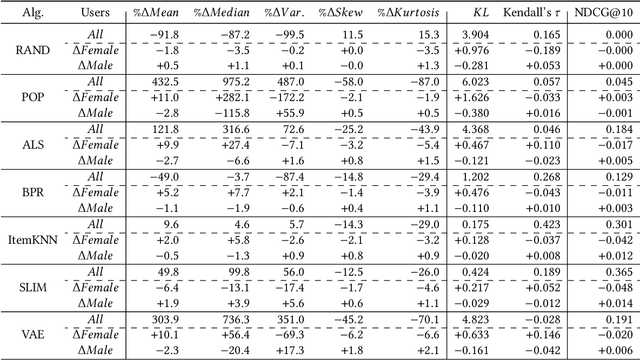

Abstract: Several studies have identified discrepancies between the popularity of items in user profiles and the corresponding recommendation lists. Such behavior, which concerns a variety of recommendation algorithms, is referred to as popularity bias. Existing work predominantly adopts simple statistical measures, such as the difference of mean or median popularity, to quantify popularity bias. Moreover, it does so irrespective of user characteristics other than the inclination to popular content. In this work, in contrast, we propose to investigate popularity differences (between the user profile and recommendation list) in terms of median, a variety of statistical moments, as well as similarity measures that consider the entire popularity distributions (Kullback-Leibler divergence and Kendall's tau rank-order correlation). This results in a more detailed picture of the characteristics of popularity bias. Furthermore, we investigate whether such algorithmic popularity bias affects users of different genders in the same way. We focus on music recommendation and conduct experiments on the recently released standardized LFM-2b dataset, containing listening profiles of Last.fm users. We investigate the algorithmic popularity bias of seven common recommendation algorithms (five collaborative filtering and two baselines). Our experiments show that (1) the studied metrics provide novel insights into popularity bias in comparison with only using average differences, (2) algorithms less inclined towards popularity bias amplification do not necessarily perform worse in terms of utility (NDCG), and (3) the majority of the investigated recommenders intensify the popularity bias of the female users.
