Stavros Nousias

BIMgent: Towards Autonomous Building Modeling via Computer-use Agents

Jun 08, 2025

Abstract:Existing computer-use agents primarily focus on general-purpose desktop automation tasks, with limited exploration of their application in highly specialized domains. In particular, the 3D building modeling process in the Architecture, Engineering, and Construction (AEC) sector involves open-ended design tasks and complex interaction patterns within Building Information Modeling (BIM) authoring software, which has yet to be thoroughly addressed by current studies. In this paper, we propose BIMgent, an agentic framework powered by multimodal large language models (LLMs), designed to enable autonomous building model authoring via graphical user interface (GUI) operations. BIMgent automates the architectural building modeling process, including multimodal input for conceptual design, planning of software-specific workflows, and efficient execution of the authoring GUI actions. We evaluate BIMgent on real-world building modeling tasks, including both text-based conceptual design generation and reconstruction from existing building design. The design quality achieved by BIMgent was found to be reasonable. Its operations achieved a 32% success rate, whereas all baseline models failed to complete the tasks (0% success rate). Results demonstrate that BIMgent effectively reduces manual workload while preserving design intent, highlighting its potential for practical deployment in real-world architectural modeling scenarios.

VectorGraphNET: Graph Attention Networks for Accurate Segmentation of Complex Technical Drawings

Oct 02, 2024

Abstract:This paper introduces a new approach to extract and analyze vector data from technical drawings in PDF format. Our method involves converting PDF files into SVG format and creating a feature-rich graph representation, which captures the relationships between vector entities using geometrical information. We then apply a graph attention transformer with hierarchical label definition to achieve accurate line-level segmentation. Our approach is evaluated on two datasets, including the public FloorplanCAD dataset, on which it achieves state-of-the-art results on the weighted F1 (wF1) score for the semantic segmentation task, surpassing existing methods. The proposed vector-based method offers a more scalable solution for large-scale technical drawing analysis compared to vision-based approaches, while also requiring significantly less GPU power than current state-of-the-art vector-based techniques. Our results demonstrate the effectiveness of our approach in extracting meaningful information from technical drawings, enabling new applications, and improving existing workflows in the AEC industry. Potential applications of our approach include automated building information modeling (BIM) and construction planning, which could significantly impact the efficiency and productivity of the industry.

Text2BIM: Generating Building Models Using a Large Language Model-based Multi-Agent Framework

Aug 15, 2024

Abstract:The conventional BIM authoring process typically requires designers to master complex and tedious modeling commands in order to materialize their design intentions within BIM authoring tools. This additional cognitive burden complicates the design process and hinders the adoption of BIM and model-based design in the AEC (Architecture, Engineering, and Construction) industry. To facilitate the expression of design intentions more intuitively, we propose Text2BIM, an LLM-based multi-agent framework that can generate 3D building models from natural language instructions. This framework orchestrates multiple LLM agents to collaborate and reason, transforming textual user input into imperative code that invokes the BIM authoring tool's APIs, thereby generating editable BIM models with internal layouts, external envelopes, and semantic information directly in the software. Furthermore, a rule-based model checker is introduced into the agentic workflow, utilizing predefined domain knowledge to guide the LLM agents in resolving issues within the generated models and iteratively improving model quality. Extensive experiments were conducted to compare and analyze the performance of three different LLMs under the proposed framework. The evaluation results demonstrate that our approach can effectively generate high-quality, structurally rational building models that are aligned with the abstract concepts specified by user input. Finally, an interactive software prototype was developed to integrate the framework into the BIM authoring software Vectorworks, showcasing the potential of modeling by chatting.

Coordinating robotized construction using advanced robotic simulation: The case of collaborative brick wall assembly

May 27, 2024

Abstract:Utilizing robotic systems in the construction industry is gaining popularity due to their build time, precision, and efficiency. In this paper, we introduce a system that allows the coordination of multiple manipulator robots for construction activities. As a case study, we chose robotic brick wall assembly. By utilizing a multi-robot system where arm manipulators collaborate with each other, the entirety of a potentially long wall can be assembled simultaneously. However, the reduction of overall bricklaying time depends on minimizing the time required by each individual manipulator. In this paper, we run the simulation with various placements of the material and the robot base, as well as different robot configurations, to determine the optimal position of the robot and material and the best configuration for the robot. The simulation results provide users with insights into how to find the best placement of robots and raw materials for brick wall assembly.

Towards predicting Pedestrian Evacuation Time and Density from Floorplans using a Vision Transformer

Jun 27, 2023

Abstract:Conventional pedestrian simulators are indispensable tools in the design process of a building, as they enable project engineers to prevent overcrowding situations and plan escape routes for evacuation. However, simulation runtime and the multiple cumbersome steps in generating simulation results are potential bottlenecks during the building design process. Data-driven approaches have demonstrated their capability to outperform conventional methods in speed while delivering similar or even better results across many disciplines. In this work, we present a deep learning approach based on a Vision Transformer to predict density heatmaps over time and total evacuation time from a given floorplan. Specifically, due to the limited availability of public datasets, we implement a parametric data generation pipeline including a conventional simulator. This enables us to build a large synthetic dataset that we use to train our architecture. Furthermore, we seamlessly integrate our model into a BIM-authoring tool to generate simulation results instantly and automatically.

Patient-specific modelling, simulation and real time processing for respiratory diseases

Jul 13, 2022

Abstract:Asthma is a common chronic disease of the respiratory system causing significant disability and societal burden. It affects over 500 million people worldwide and generated costs exceeding USD 56 billion in 2011 in the United States. Managing asthma involves controlling symptoms, preventing exacerbations, and maintaining lung function. Improving asthma control affects the daily life of patients, is associated with a reduced risk of exacerbations and lung function impairment, and reduces the cost of asthma care and the indirect costs associated with reduced productivity. Understanding the complex dynamics of the pulmonary system and the lung's response to disease, injury, and treatment is fundamental to the advancement of asthma treatment. Computational models of the respiratory system seek to provide a theoretical framework to understand the interaction between structure and function. Their application can improve pulmonary medicine through a patient-specific approach that optimizes drug delivery given the personalized geometry and personalized ventilation patterns. A three-fold objective is addressed within this dissertation. The first part refers to the comprehension of pulmonary pathophysiology and the mechanics of asthma, and subsequently of constrictive pulmonary conditions in general. The second part refers to the design and implementation of tools that facilitate personalized medicine to improve delivery and effectiveness. Finally, the third part refers to the self-management of the condition, meaning that medical personnel and patients have access to tools and methods that allow the first party to easily track the course of the condition and the second party, i.e. the patient, to easily self-manage it, alleviating the significant burden on the health system.

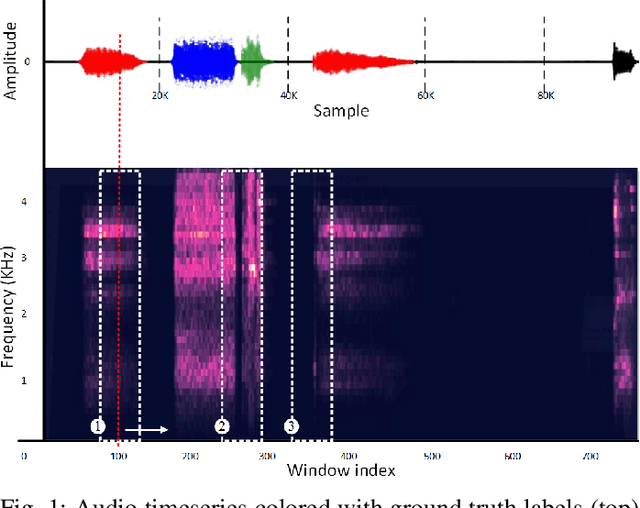

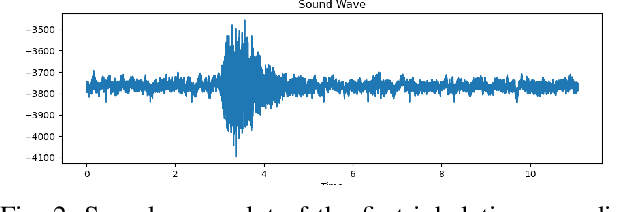

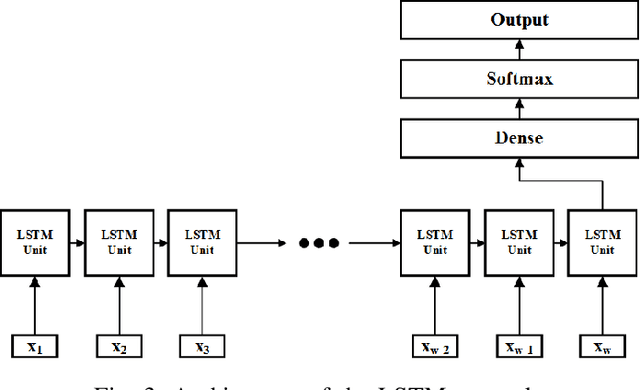

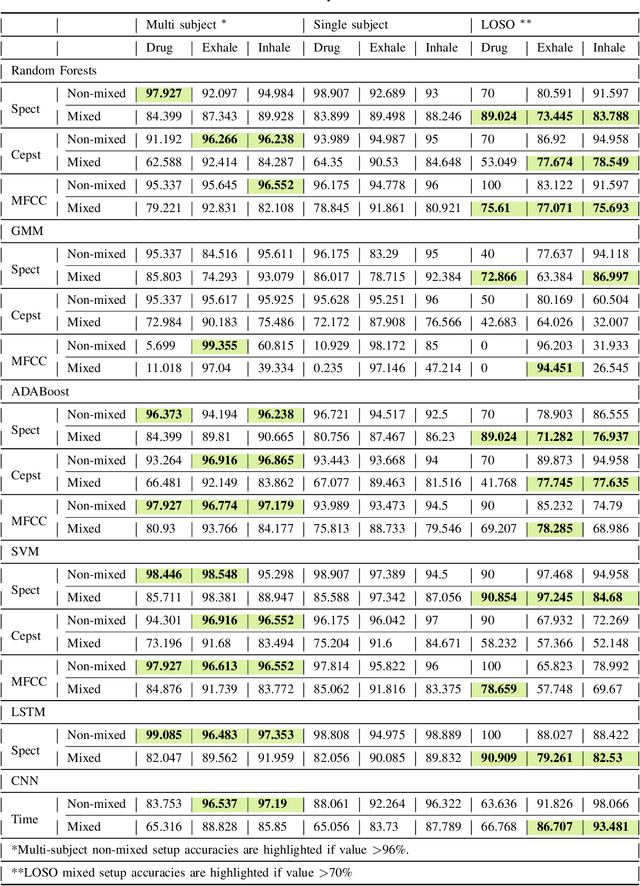

Revisiting Audio Pattern Recognition for Asthma Medication Adherence: Evaluation with the RDA Benchmark Suite

Jun 01, 2022

Abstract:Asthma is a common, usually long-term respiratory disease with negative impact on society and the economy worldwide. Treatment involves using medical devices (inhalers) that distribute medication to the airways, and its efficiency depends on the precision of the inhalation technique. Health monitoring systems equipped with sensors and embedded with sound signal detection enable the recognition of drug actuation and could be powerful tools for reliable audio content analysis. This paper revisits audio pattern recognition and machine learning techniques for asthma medication adherence assessment and presents the Respiratory and Drug Actuation (RDA) Suite (https://gitlab.com/vvr/monitoring-medication-adherence/rda-benchmark) for benchmarking and further research. The RDA Suite includes a set of tools for audio processing, feature extraction and classification and is provided along with a dataset consisting of respiratory and drug actuation sounds. The classification models in RDA are implemented based on conventional and advanced machine learning and deep network architectures. This study provides a comparative evaluation of the implemented approaches, examines potential improvements and discusses challenges and future tendencies.

Fast mesh denoising with data driven normal filtering using deep variational autoencoders

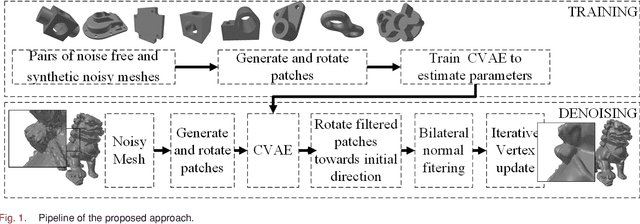

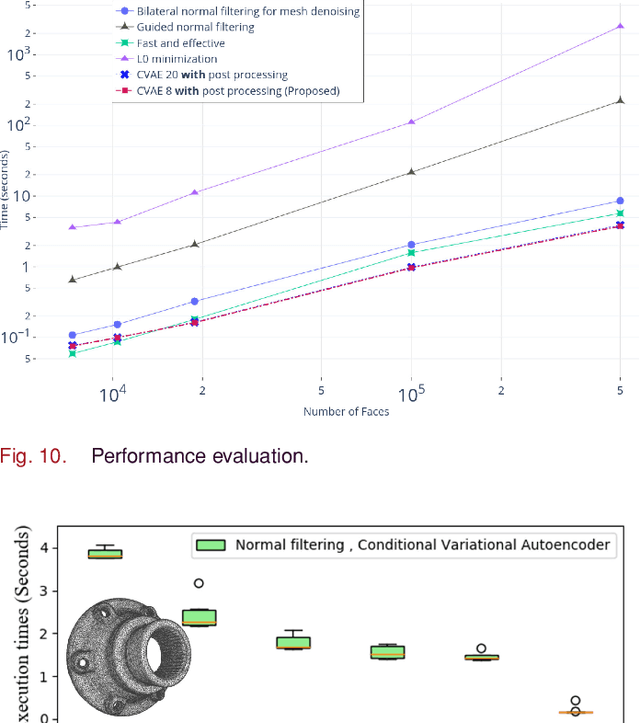

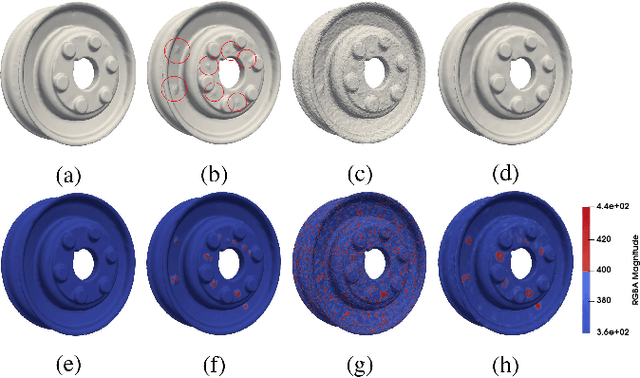

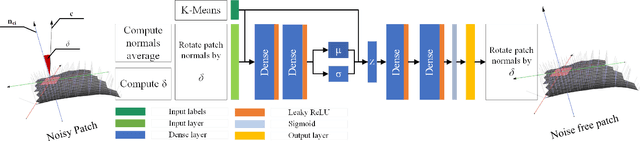

Nov 24, 2021

Abstract:Recent advances in 3D scanning technology have enabled the deployment of 3D models in various industrial applications like digital twins, remote inspection and reverse engineering. Despite their evolving performance, 3D scanners still introduce noise and artifacts in the acquired dense models. In this work, we propose a fast and robust denoising method for dense 3D scanned industrial models. The proposed approach employs conditional variational autoencoders to effectively filter face normals. Training and inference are performed in a sliding patch setup, reducing the size of the required training data and execution times. We conducted extensive evaluation studies using 3D scanned and CAD models. The results verify plausible denoising outcomes, demonstrating similar or higher reconstruction accuracy compared to other state-of-the-art approaches. Specifically, for 3D models with more than 1e4 faces, the presented pipeline is twice as fast as methods with equivalent reconstruction error.

* 12 pages, 12 figures

Accelerating deep neural networks for efficient scene understanding in automotive cyber-physical systems

Jul 19, 2021

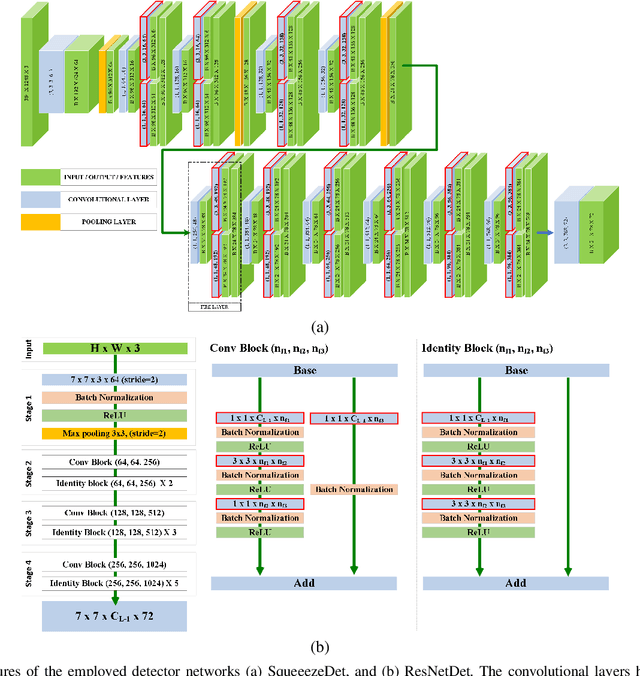

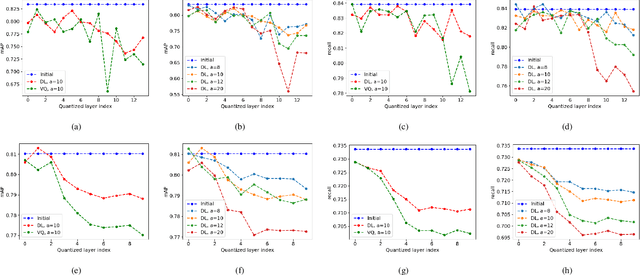

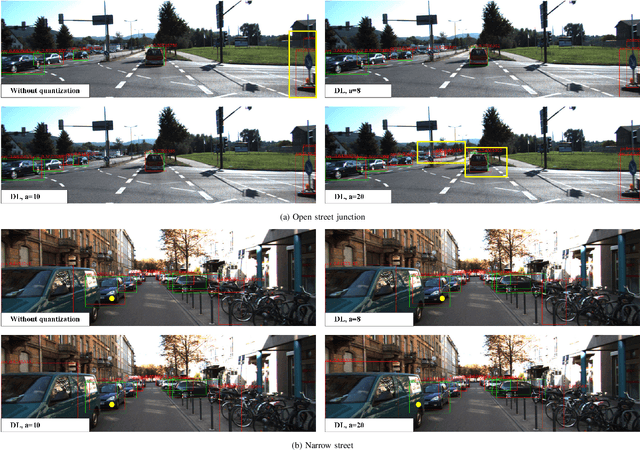

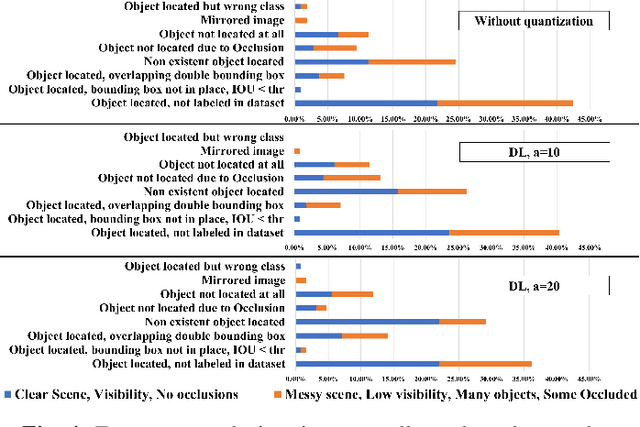

Abstract:Automotive Cyber-Physical Systems (ACPS) have attracted a significant amount of interest in the past few decades, and one of the most critical operations in these systems is the perception of the environment. Deep learning and, especially, the use of Deep Neural Networks (DNNs) provide impressive results in analyzing and understanding complex and dynamic scenes from visual data. The prediction horizons for those perception systems are very short and inference must often be performed in real time, stressing the need to transform the original large pre-trained networks into new smaller models by utilizing Model Compression and Acceleration (MCA) techniques. Our goal in this work is to investigate best practices for appropriately applying novel weight sharing techniques, optimizing the available variables and the training procedures towards the significant acceleration of widely adopted DNNs. Extensive evaluation studies carried out using various state-of-the-art DNN models in object detection and tracking experiments provide details about the type of errors that manifest after the application of weight sharing techniques, resulting in significant acceleration gains with negligible accuracy losses.

Efficient automated U-Net based tree crown delineation using UAV multi-spectral imagery on embedded devices

Jul 16, 2021

Abstract:Delineation approaches provide significant benefits to various domains, including agriculture, environmental monitoring, and natural disaster monitoring. Most of the work in the literature utilizes traditional segmentation methods that require a large amount of computational and storage resources. Deep learning has transformed computer vision and dramatically improved machine translation, though it requires massive datasets for training and significant resources for inference. More importantly, energy-efficient embedded vision hardware delivering real-time and robust performance is crucial in the aforementioned applications. In this work, we propose a U-Net based tree delineation method, which is effectively trained using multi-spectral imagery but can then delineate single-spectrum images. The deep architecture, which also performs localization, i.e., a class label corresponds to each pixel, has been successfully used to allow training with a small set of segmented images. The ground truth data were generated using traditional image denoising and segmentation approaches. To be able to execute the proposed DNN efficiently on embedded platforms designed for deep learning approaches, we employ traditional model compression and acceleration methods. Extensive evaluation studies using data collected from UAVs equipped with multi-spectral cameras demonstrate the effectiveness of the proposed methods in terms of delineation accuracy and execution efficiency.
