André Borrmann

A Modular Reference Architecture for MCP-Servers Enabling Agentic BIM Interaction

Dec 21, 2025

Abstract: Agentic workflows driven by large language models (LLMs) are increasingly applied to Building Information Modelling (BIM), enabling natural-language retrieval, modification and generation of IFC models. Recent work has begun adopting the emerging Model Context Protocol (MCP) as a uniform tool-calling interface for LLMs, simplifying the agent side of BIM interaction. While MCP standardises how LLMs invoke tools, current BIM-side implementations are still authoring tool-specific and ad hoc, limiting reuse, evaluation, and workflow portability across environments. This paper addresses this gap by introducing a modular reference architecture for MCP servers that enables API-agnostic, isolated and reproducible agentic BIM interactions. From a systematic analysis of recurring capabilities in recent literature, we derive a core set of requirements. These inform a microservice architecture centred on an explicit adapter contract that decouples the MCP interface from specific BIM-APIs. A prototype implementation using IfcOpenShell demonstrates feasibility across common modification and generation tasks. Evaluation across representative scenarios shows that the architecture enables reliable workflows, reduces coupling, and provides a reusable foundation for systematic research.
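The idea of an explicit adapter contract can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the names `BimAdapter`, `InMemoryAdapter` and `rename_walls`, and the three contract methods, are hypothetical and are not taken from the paper or from any MCP or IfcOpenShell API. The point is only that tool-layer code is written against the contract, so the backend can be swapped.

```python
from __future__ import annotations

from typing import Any, Protocol


class BimAdapter(Protocol):
    """Hypothetical adapter contract: the only surface the tool layer sees."""

    def list_elements(self, ifc_class: str) -> list[str]: ...

    def get_property(self, element_id: str, name: str) -> Any: ...

    def set_property(self, element_id: str, name: str, value: Any) -> None: ...


class InMemoryAdapter:
    """Toy backend standing in for a real BIM API; illustrative only."""

    def __init__(self) -> None:
        self._elements: dict[str, dict[str, Any]] = {}

    def add_element(self, element_id: str, ifc_class: str, **props: Any) -> None:
        self._elements[element_id] = {"ifc_class": ifc_class, **props}

    def list_elements(self, ifc_class: str) -> list[str]:
        # Sorted so that results are deterministic across runs.
        return sorted(
            eid for eid, props in self._elements.items()
            if props["ifc_class"] == ifc_class
        )

    def get_property(self, element_id: str, name: str) -> Any:
        return self._elements[element_id][name]

    def set_property(self, element_id: str, name: str, value: Any) -> None:
        self._elements[element_id][name] = value


def rename_walls(adapter: BimAdapter, prefix: str) -> int:
    """Tool-layer function written against the contract, not a specific API."""
    walls = adapter.list_elements("IfcWall")
    for index, element_id in enumerate(walls, start=1):
        adapter.set_property(element_id, "Name", f"{prefix}-{index}")
    return len(walls)
```

A different backend would only need to provide the same three methods; `rename_walls` would not change.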

BIMgent: Towards Autonomous Building Modeling via Computer-use Agents

Jun 08, 2025

Abstract: Existing computer-use agents primarily focus on general-purpose desktop automation tasks, with limited exploration of their application in highly specialized domains. In particular, the 3D building modeling process in the Architecture, Engineering, and Construction (AEC) sector involves open-ended design tasks and complex interaction patterns within Building Information Modeling (BIM) authoring software, which has yet to be thoroughly addressed by current studies. In this paper, we propose BIMgent, an agentic framework powered by multimodal large language models (LLMs), designed to enable autonomous building model authoring via graphical user interface (GUI) operations. BIMgent automates the architectural building modeling process, including multimodal input for conceptual design, planning of software-specific workflows, and efficient execution of the authoring GUI actions. We evaluate BIMgent on real-world building modeling tasks, including both text-based conceptual design generation and reconstruction from existing building design. The design quality achieved by BIMgent was found to be reasonable. Its operations achieved a 32% success rate, whereas all baseline models failed to complete the tasks (0% success rate). Results demonstrate that BIMgent effectively reduces manual workload while preserving design intent, highlighting its potential for practical deployment in real-world architectural modeling scenarios.

BIMCaP: BIM-based AI-supported LiDAR-Camera Pose Refinement

Dec 04, 2024

Abstract: This paper introduces BIMCaP, a novel method to integrate mobile 3D sparse LiDAR data and camera measurements with pre-existing building information models (BIMs), enhancing fast and accurate indoor mapping with affordable sensors. BIMCaP refines sensor poses by leveraging a 3D BIM and employing a bundle adjustment technique to align real-world measurements with the model. Experiments using real-world open-access data show that BIMCaP achieves superior accuracy, reducing translational error by over 4 cm compared to current state-of-the-art methods. This advancement enhances the accuracy and cost-effectiveness of 3D mapping methodologies like SLAM. BIMCaP's improvements benefit various fields, including construction site management and emergency response, by providing up-to-date, aligned digital maps for better decision-making and productivity. Link to the repository: https://github.com/MigVega/BIMCaP
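For readers unfamiliar with the metric, a translational error of this kind is commonly computed as the mean Euclidean distance between estimated and reference sensor positions. The sketch below assumes that definition; the abstract does not state which exact metric the paper uses, and the function name is hypothetical.

```python
import math
from typing import Sequence

Point3 = tuple[float, float, float]


def mean_translational_error(
    estimated: Sequence[Point3], ground_truth: Sequence[Point3]
) -> float:
    """Mean Euclidean distance (in the input unit) between matched positions.

    Assumes both sequences are already expressed in the same frame and are
    matched index by index; this is an illustrative definition only.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("need two non-empty trajectories of equal length")
    total = sum(math.dist(e, g) for e, g in zip(estimated, ground_truth))
    return total / len(estimated)
```

With positions in metres, a drop of 0.04 in the returned value corresponds to the 4 cm scale mentioned in the abstract.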

VectorGraphNET: Graph Attention Networks for Accurate Segmentation of Complex Technical Drawings

Oct 02, 2024

Abstract: This paper introduces a new approach to extract and analyze vector data from technical drawings in PDF format. Our method involves converting PDF files into SVG format and creating a feature-rich graph representation, which captures the relationships between vector entities using geometrical information. We then apply a graph attention transformer with hierarchical label definition to achieve accurate line-level segmentation. Our approach is evaluated on two datasets, including the public FloorplanCAD dataset, on which it achieves state-of-the-art results in weighted F1 score, surpassing existing methods. The proposed vector-based method offers a more scalable solution for large-scale technical drawing analysis compared to vision-based approaches, while also requiring significantly less GPU power than current state-of-the-art vector-based techniques. Moreover, it demonstrates improved performance in terms of the weighted F1 (wF1) score on the semantic segmentation task. Our results demonstrate the effectiveness of our approach in extracting meaningful information from technical drawings, enabling new applications, and improving existing workflows in the AEC industry. Potential applications of our approach include automated building information modeling (BIM) and construction planning, which could significantly impact the efficiency and productivity of the industry.

SLAM2REF: Advancing Long-Term Mapping with 3D LiDAR and Reference Map Integration for Precise 6-DoF Trajectory Estimation and Map Extension

Aug 28, 2024

Abstract: This paper presents a pioneering solution to the task of integrating mobile 3D LiDAR and inertial measurement unit (IMU) data with existing building information models or point clouds, which is crucial for achieving precise long-term localization and mapping in indoor, GPS-denied environments. Our proposed framework, SLAM2REF, introduces a novel approach for automatic alignment and map extension utilizing reference 3D maps. The methodology is supported by a sophisticated multi-session anchoring technique, which integrates novel descriptors and registration methodologies. Real-world experiments reveal the framework's remarkable robustness and accuracy, surpassing current state-of-the-art methods. Our open-source framework's significance lies in its contribution to resilient map data management, enhancing processes across diverse sectors such as construction site monitoring, emergency response, disaster management, and others, where fast-updated digital 3D maps contribute to better decision-making and productivity. Moreover, it offers advancements in localization and mapping research. Link to the repository: https://github.com/MigVega/SLAM2REF, Data: https://doi.org/10.14459/2024mp1743877.

BIM-SLAM: Integrating BIM Models in Multi-session SLAM for Lifelong Mapping using 3D LiDAR

Aug 28, 2024

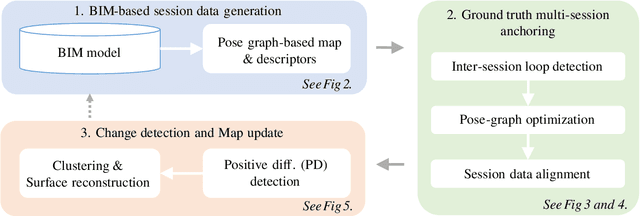

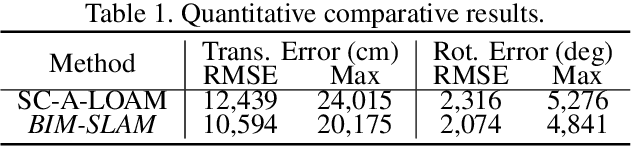

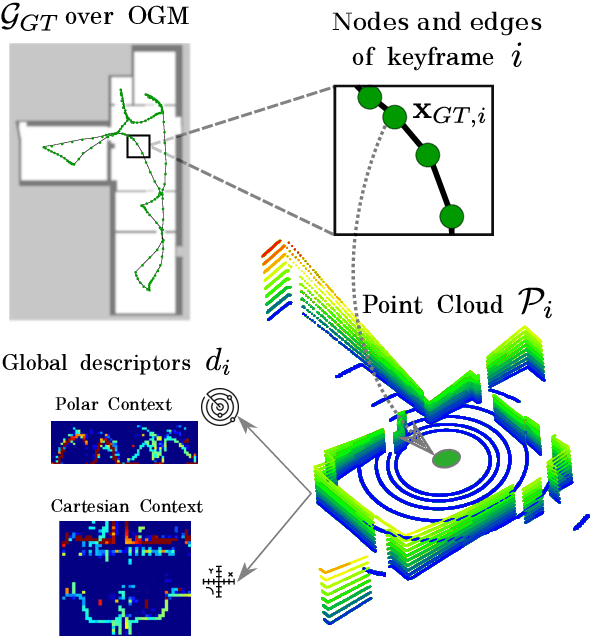

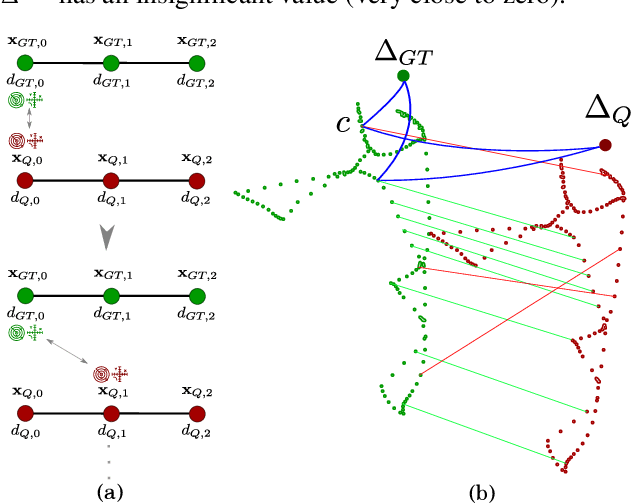

Abstract: While 3D LiDAR sensor technology is becoming more advanced and cheaper every day, the growth of digitalization in the AEC industry contributes to the fact that 3D building information models (BIM models) are now available for a large part of the built environment. These two facts open the question of how 3D models can support 3D LiDAR long-term SLAM in indoor, GPS-denied environments. This paper proposes a methodology that leverages BIM models to create an updated map of indoor environments with sequential LiDAR measurements. Session data (pose graph-based map and descriptors) are initially generated from BIM models. Then, real-world data is aligned with the session data from the model using multi-session anchoring while minimizing the drift on the real-world data. Finally, the new elements not present in the BIM model are identified, grouped, and reconstructed in a surface representation, allowing a better visualization next to the BIM model. The framework enables the creation of a coherent map aligned with the BIM model that does not require prior knowledge of the initial pose of the robot, and it does not need to be inside the map.

* Conference paper in ISARC 2023

Text2BIM: Generating Building Models Using a Large Language Model-based Multi-Agent Framework

Aug 15, 2024

Abstract: The conventional BIM authoring process typically requires designers to master complex and tedious modeling commands in order to materialize their design intentions within BIM authoring tools. This additional cognitive burden complicates the design process and hinders the adoption of BIM and model-based design in the AEC (Architecture, Engineering, and Construction) industry. To facilitate the expression of design intentions more intuitively, we propose Text2BIM, an LLM-based multi-agent framework that can generate 3D building models from natural language instructions. This framework orchestrates multiple LLM agents to collaborate and reason, transforming textual user input into imperative code that invokes the BIM authoring tool's APIs, thereby generating editable BIM models with internal layouts, external envelopes, and semantic information directly in the software. Furthermore, a rule-based model checker is introduced into the agentic workflow, utilizing predefined domain knowledge to guide the LLM agents in resolving issues within the generated models and iteratively improving model quality. Extensive experiments were conducted to compare and analyze the performance of three different LLMs under the proposed framework. The evaluation results demonstrate that our approach can effectively generate high-quality, structurally rational building models that are aligned with the abstract concepts specified by user input. Finally, an interactive software prototype was developed to integrate the framework into the BIM authoring software Vectorworks, showcasing the potential of modeling by chatting.
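The generate, check, repair loop described in this abstract can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: the `Room` data model, the two rules with their thresholds, and `stub_agent_repair` (a deterministic stand-in for an LLM agent) are all hypothetical and do not reflect the Text2BIM implementation or any Vectorworks API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Room:
    name: str
    area_m2: float
    has_door: bool


Rule = Callable[[list[Room]], list[str]]


def rule_min_area(rooms: list[Room]) -> list[str]:
    # Hypothetical rule: the 4 m2 threshold is made up for the example.
    return [f"{r.name}: area below 4 m2" for r in rooms if r.area_m2 < 4.0]


def rule_has_door(rooms: list[Room]) -> list[str]:
    return [f"{r.name}: no door" for r in rooms if not r.has_door]


def check(rooms: list[Room], rules: list[Rule]) -> list[str]:
    """Run every rule and collect the reported issues."""
    return [issue for rule in rules for issue in rule(rooms)]


def stub_agent_repair(rooms: list[Room], issues: list[str]) -> list[Room]:
    """Stand-in for an LLM agent: applies naive fixes to every flagged room."""
    flagged = {issue.split(":")[0] for issue in issues}
    return [
        Room(r.name, max(r.area_m2, 4.0), True) if r.name in flagged else r
        for r in rooms
    ]


def generate_with_checker(
    draft: list[Room],
    rules: list[Rule],
    repair: Callable[[list[Room], list[str]], list[Room]],
    max_rounds: int = 3,
) -> tuple[list[Room], list[str]]:
    """Feed checker issues back to the repair step until clean or out of rounds."""
    rooms = draft
    for _ in range(max_rounds):
        issues = check(rooms, rules)
        if not issues:
            break
        rooms = repair(rooms, issues)
    return rooms, check(rooms, rules)
```

The bounded `max_rounds` is a design choice of this sketch: it keeps the loop from running forever if the repair step cannot satisfy a rule, and the remaining issues are returned to the caller.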

OpenSU3D: Open World 3D Scene Understanding using Foundation Models

Jul 19, 2024

Abstract: In this paper, we present a novel, scalable approach for constructing open set, instance-level 3D scene representations, advancing open world understanding of 3D environments. Existing methods require pre-constructed 3D scenes and face scalability issues due to per-point feature vector learning, limiting their efficacy with complex queries. Our method overcomes these limitations by incrementally building instance-level 3D scene representations using 2D foundation models, efficiently aggregating instance-level details such as masks, feature vectors, names, and captions. We introduce fusion schemes for feature vectors to enhance their contextual knowledge and performance on complex queries. Additionally, we explore large language models for robust automatic annotation and spatial reasoning tasks. We evaluate our proposed approach on multiple scenes from the ScanNet and Replica datasets, demonstrating zero-shot generalization capabilities and exceeding current state-of-the-art methods in open world 3D scene understanding.

Coordinating robotized construction using advanced robotic simulation: The case of collaborative brick wall assembly

May 27, 2024

Abstract: Utilizing robotic systems in the construction industry is gaining popularity due to their build time, precision, and efficiency. In this paper, we introduce a system that allows the coordination of multiple manipulator robots for construction activities. As a case study, we chose robotic brick wall assembly. By utilizing a multi-robot system where arm manipulators collaborate with each other, the entirety of a potentially long wall can be assembled simultaneously. However, the reduction of overall bricklaying time is dependent on the minimization of time required for each individual manipulator. In this paper, we execute the simulation with various placements of the material and the robot's base, as well as different robot configurations, to determine the optimal position of the robot and material and the best configuration for the robot. The simulation results provide users with insights into how to find the best placement of robots and raw materials for brick wall assembly.

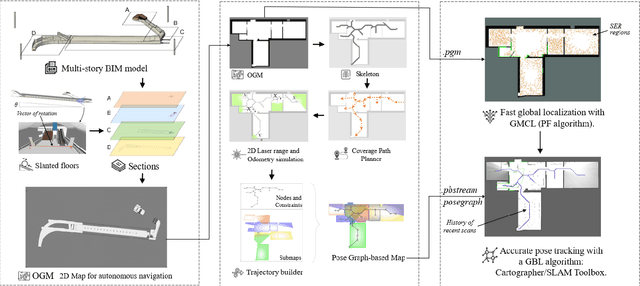

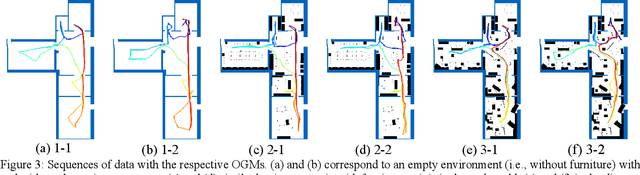

Occupancy Grid Map to Pose Graph-based Map: Robust BIM-based 2D-LiDAR Localization for Lifelong Indoor Navigation in Changing and Dynamic Environments

Aug 10, 2023

Abstract: Several studies rely on the de facto standard Adaptive Monte Carlo Localization (AMCL) method to localize a robot in an Occupancy Grid Map (OGM) extracted from a building information model (BIM model). However, most of these studies assume that the BIM model precisely represents the real world, which is rarely true. Discrepancies between the reference BIM model and the real world (Scan-BIM deviations) are not only due to furniture or clutter but also the usual as-planned and as-built deviations that exist with any model created in the design phase. These deviations affect the accuracy of AMCL drastically. This paper proposes an open-source method to generate appropriate Pose Graph-based maps from BIM models for robust 2D-LiDAR localization in changing and dynamic environments. First, 2D OGMs are automatically generated from complex BIM models. These OGMs only represent structural elements allowing indoor autonomous robot navigation. Then, an efficient technique converts these 2D OGMs into Pose Graph-based maps enabling more accurate robot pose tracking. Finally, we leverage the different map representations for accurate, robust localization with a combination of state-of-the-art algorithms. Moreover, we provide a quantitative comparison of various state-of-the-art localization algorithms in three simulated scenarios with varying levels of Scan-BIM deviations and dynamic agents. More precisely, we compare two Particle Filter (PF) algorithms: AMCL and General Monte Carlo Localization (GMCL); and two Graph-based Localization (GBL) methods: Google's Cartographer and SLAM Toolbox, solving the global localization and pose tracking problems. The numerous experiments demonstrate that the proposed method contributes to a robust localization with an as-designed BIM model or a sparse OGM in changing and dynamic environments, outperforming the conventional AMCL in accuracy and robustness.
