Miguel Arturo Vega Torres

BIMCaP: BIM-based AI-supported LiDAR-Camera Pose Refinement

Dec 04, 2024

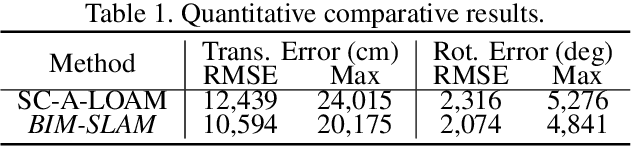

Abstract: This paper introduces BIMCaP, a novel method to integrate mobile 3D sparse LiDAR data and camera measurements with pre-existing building information models (BIMs), enhancing fast and accurate indoor mapping with affordable sensors. BIMCaP refines sensor poses by leveraging a 3D BIM and employing a bundle adjustment technique to align real-world measurements with the model. Experiments using real-world open-access data show that BIMCaP achieves superior accuracy, reducing translational error by over 4 cm compared to current state-of-the-art methods. This advancement enhances the accuracy and cost-effectiveness of 3D mapping methodologies like SLAM. BIMCaP's improvements benefit various fields, including construction site management and emergency response, by providing up-to-date, aligned digital maps for better decision-making and productivity. Link to the repository: https://github.com/MigVega/BIMCaP
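To make the reported translational error concrete, the following is a minimal sketch of one common way to measure it: the root-mean-square distance between estimated and reference sensor positions. The abstract does not state which metric was used, so the function name `translational_rmse`, the RMSE formulation, and the assumption that both trajectories are already time-associated and expressed in the same frame are illustrative choices, not the authors' evaluation code.

```python
import numpy as np


def translational_rmse(estimated_xyz, reference_xyz):
    """RMSE of per-pose position error, in the unit of the inputs.

    Hypothetical helper: assumes both arrays have shape (N, 3), are
    matched pose-by-pose, and live in the same coordinate frame.
    """
    est = np.asarray(estimated_xyz, dtype=float)
    ref = np.asarray(reference_xyz, dtype=float)
    if est.shape != ref.shape:
        raise ValueError("trajectories must have identical shapes")
    # Euclidean distance between each estimated and reference position.
    per_pose_error = np.linalg.norm(est - ref, axis=1)
    return float(np.sqrt(np.mean(per_pose_error ** 2)))
```

With positions given in metres, a difference of 0.04 between the results of two methods would correspond to the kind of 4 cm gap the abstract mentions.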

SLAM2REF: Advancing Long-Term Mapping with 3D LiDAR and Reference Map Integration for Precise 6-DoF Trajectory Estimation and Map Extension

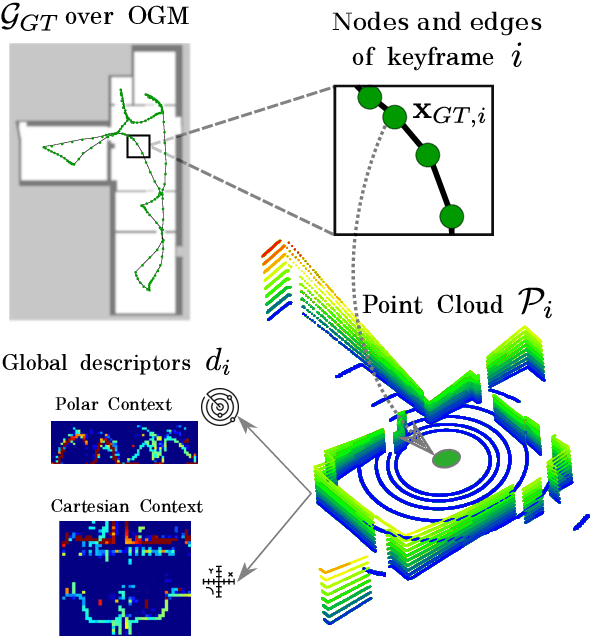

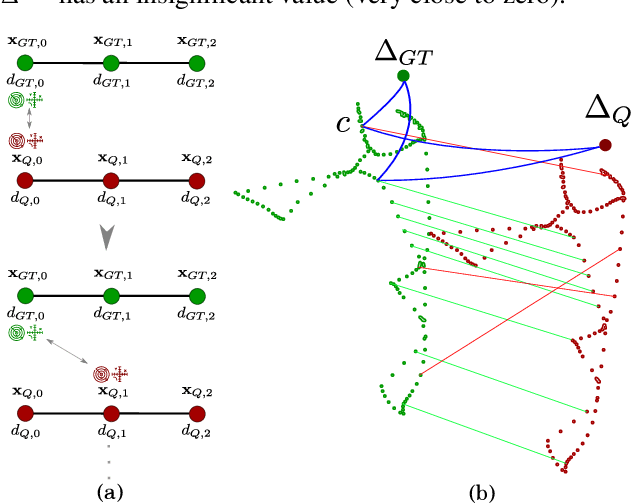

Aug 28, 2024

Abstract: This paper presents a pioneering solution to the task of integrating mobile 3D LiDAR and inertial measurement unit (IMU) data with existing building information models or point clouds, which is crucial for achieving precise long-term localization and mapping in indoor, GPS-denied environments. Our proposed framework, SLAM2REF, introduces a novel approach for automatic alignment and map extension utilizing reference 3D maps. The methodology is supported by a sophisticated multi-session anchoring technique, which integrates novel descriptors and registration methodologies. Real-world experiments reveal the framework's remarkable robustness and accuracy, surpassing current state-of-the-art methods. Our open-source framework's significance lies in its contribution to resilient map data management, enhancing processes across diverse sectors such as construction site monitoring, emergency response, disaster management, and others, where fast-updated digital 3D maps contribute to better decision-making and productivity. Moreover, it offers advancements in localization and mapping research. Link to the repository: https://github.com/MigVega/SLAM2REF, Data: https://doi.org/10.14459/2024mp1743877.

BIM-SLAM: Integrating BIM Models in Multi-session SLAM for Lifelong Mapping using 3D LiDAR

Aug 28, 2024

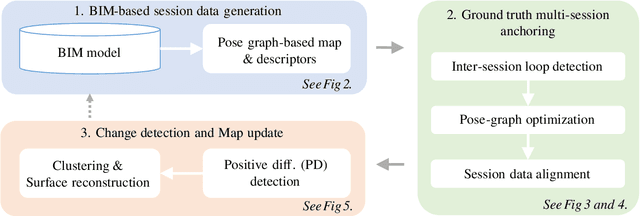

Abstract: While 3D LiDAR sensor technology is becoming more advanced and cheaper every day, the growth of digitalization in the AEC industry contributes to the fact that 3D building information models (BIM models) are now available for a large part of the built environment. These two facts open the question of how 3D models can support 3D LiDAR long-term SLAM in indoor, GPS-denied environments. This paper proposes a methodology that leverages BIM models to create an updated map of indoor environments with sequential LiDAR measurements. Session data (pose graph-based map and descriptors) are initially generated from BIM models. Then, real-world data is aligned with the session data from the model using multi-session anchoring while minimizing the drift on the real-world data. Finally, the new elements not present in the BIM model are identified, grouped, and reconstructed in a surface representation, allowing a better visualization next to the BIM model. The framework enables the creation of a coherent map aligned with the BIM model that does not require prior knowledge of the initial pose of the robot, and it does not need to be inside the map.
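The step of identifying elements that are not present in the BIM model can be illustrated with a simple distance test: once the scan is aligned, any scan point farther than a threshold from every model point is a candidate new element. This is only a sketch under stated assumptions. The abstract does not describe the actual detection procedure, and the function name `flag_new_points`, the point-sampled model, the brute-force search, and the 0.1 m default threshold are all hypothetical.

```python
import numpy as np


def flag_new_points(scan_points, model_points, max_distance=0.1):
    """Return a boolean mask marking scan points far from the model.

    Hypothetical helper: both inputs are (N, 3) and (M, 3) arrays in
    the same frame, i.e. the scan has already been aligned to the model.
    """
    scan = np.asarray(scan_points, dtype=float)
    model = np.asarray(model_points, dtype=float)
    # Pairwise differences, shape (N, M, 3); fine for small clouds only.
    diffs = scan[:, None, :] - model[None, :, :]
    nearest = np.sqrt((diffs ** 2).sum(axis=2)).min(axis=1)
    # True where the closest model point is beyond the threshold.
    return nearest > max_distance
```

For realistic cloud sizes the brute-force distance matrix would be replaced by a spatial index such as a k-d tree; the flagged points could then be clustered before any surface reconstruction.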

* Conference paper in ISARC 2023

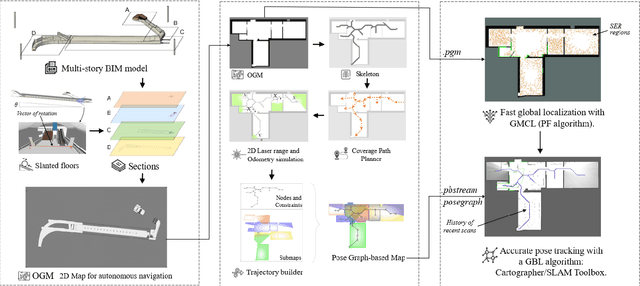

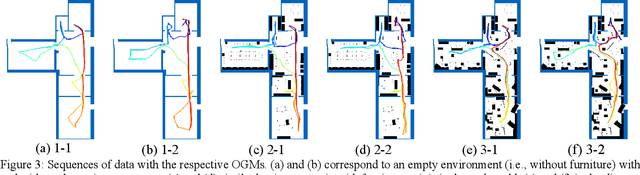

Occupancy Grid Map to Pose Graph-based Map: Robust BIM-based 2D-LiDAR Localization for Lifelong Indoor Navigation in Changing and Dynamic Environments

Aug 10, 2023

Abstract: Several studies rely on the de facto standard Adaptive Monte Carlo Localization (AMCL) method to localize a robot in an Occupancy Grid Map (OGM) extracted from a building information model (BIM model). However, most of these studies assume that the BIM model precisely represents the real world, which is rarely true. Discrepancies between the reference BIM model and the real world (Scan-BIM deviations) are not only due to furniture or clutter but also the usual as-planned and as-built deviations that exist with any model created in the design phase. These deviations affect the accuracy of AMCL drastically. This paper proposes an open-source method to generate appropriate Pose Graph-based maps from BIM models for robust 2D-LiDAR localization in changing and dynamic environments. First, 2D OGMs are automatically generated from complex BIM models. These OGMs only represent structural elements allowing indoor autonomous robot navigation. Then, an efficient technique converts these 2D OGMs into Pose Graph-based maps enabling more accurate robot pose tracking. Finally, we leverage the different map representations for accurate, robust localization with a combination of state-of-the-art algorithms. Moreover, we provide a quantitative comparison of various state-of-the-art localization algorithms in three simulated scenarios with varying levels of Scan-BIM deviations and dynamic agents. More precisely, we compare two Particle Filter (PF) algorithms: AMCL and General Monte Carlo Localization (GMCL); and two Graph-based Localization (GBL) methods: Google's Cartographer and SLAM Toolbox, solving the global localization and pose tracking problems. The numerous experiments demonstrate that the proposed method contributes to a robust localization with an as-designed BIM model or a sparse OGM in changing and dynamic environments, outperforming the conventional AMCL in accuracy and robustness.
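As a rough illustration of the first step, producing a 2D OGM that contains only structural elements, the sketch below rasterizes wall centerlines into a binary grid. It assumes the walls have already been extracted from the BIM model as 2D line segments in metres; that extraction, the function name `walls_to_grid`, and the 0.05 m default cell size are assumptions made for the example and are not taken from the paper's open-source implementation.

```python
import numpy as np


def walls_to_grid(wall_segments, width_m, height_m, resolution=0.05):
    """Rasterize 2D wall segments into a binary occupancy grid.

    Hypothetical helper: wall_segments is an iterable of
    ((x0, y0), (x1, y1)) pairs in metres; cell value 1 means occupied.
    """
    rows = int(np.ceil(height_m / resolution))
    cols = int(np.ceil(width_m / resolution))
    grid = np.zeros((rows, cols), dtype=np.uint8)
    for (x0, y0), (x1, y1) in wall_segments:
        length = np.hypot(x1 - x0, y1 - y0)
        # Sample at half-cell spacing so no cell along the wall is skipped.
        n = max(int(np.ceil(length / (resolution * 0.5))) + 1, 2)
        xs = np.linspace(x0, x1, n)
        ys = np.linspace(y0, y1, n)
        c = np.clip((xs / resolution).astype(int), 0, cols - 1)
        r = np.clip((ys / resolution).astype(int), 0, rows - 1)
        grid[r, c] = 1
    return grid
```

A grid like this could be exported in the image-plus-metadata form that occupancy-grid localizers typically consume; the conversion to a Pose Graph-based map described in the abstract is a separate step not sketched here.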
