Sowon Hahn

Chain of Empathy: Enhancing Empathetic Response of Large Language Models Based on Psychotherapy Models

Nov 02, 2023

Abstract: We present a novel method, Chain of Empathy (CoE) prompting, that uses insights from psychotherapy to induce Large Language Models (LLMs) to reason about human emotional states. The method is inspired by several psychotherapy approaches, including Cognitive Behavioral Therapy (CBT), Dialectical Behavior Therapy (DBT), Person-Centered Therapy (PCT), and Reality Therapy (RT), each of which leads to a different pattern of interpreting clients' mental states. LLMs without reasoning generated predominantly exploratory responses. However, when LLMs used CoE reasoning, we found a more comprehensive range of empathetic responses aligned with the different reasoning patterns of each psychotherapy model. The CBT-based CoE resulted in the most balanced generation of empathetic responses. The findings underscore the importance of understanding the emotional context and how it affects human and AI communication. Our research contributes to understanding how psychotherapeutic models can be incorporated into LLMs, facilitating the development of context-specific, safer, and empathetic AI.
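
As a rough illustration of the idea in this abstract, a CoE-style prompt might ask the model to reason about the client's emotional state through a therapy-specific lens before it replies. The sketch below is an assumption made for illustration: the names `THERAPY_FOCUS` and `build_coe_prompt`, the wording of each lens, and the three-step layout are hypothetical and are not taken from the paper's actual prompts.

```python
# Hypothetical reasoning lenses, paraphrased from general descriptions of each
# therapy. These are NOT the prompts used in the paper.
THERAPY_FOCUS = {
    "CBT": "unhelpful thoughts or beliefs behind the feeling",
    "DBT": "how intense the emotion is and how well it is being regulated",
    "PCT": "the person's sense of self and need for acceptance",
    "RT": "unmet needs and the choices available to the person",
}


def build_coe_prompt(message: str, therapy: str) -> str:
    """Build a prompt that requests emotional reasoning before the reply."""
    focus = THERAPY_FOCUS[therapy]  # raises KeyError for an unknown therapy
    return (
        f"Client message: {message}\n"
        "Step 1: Identify the emotion the client is expressing.\n"
        f"Step 2: Reason about {focus}.\n"
        "Step 3: Write an empathetic reply grounded in Steps 1 and 2."
    )


if __name__ == "__main__":
    print(build_coe_prompt("I failed my exam and feel worthless.", "CBT"))
```

Swapping the `therapy` key changes only the reasoning step, which mirrors the abstract's point that each psychotherapy model leads to a different pattern of interpretation.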

Developing Social Robots with Empathetic Non-Verbal Cues Using Large Language Models

Aug 31, 2023

Abstract: We propose augmenting the empathetic capacities of social robots by integrating non-verbal cues. Our primary contribution is the design and labeling of four types of empathetic non-verbal cues, abbreviated as SAFE: Speech, Action (gesture), Facial expression, and Emotion, in a social robot. These cues are generated using a Large Language Model (LLM). We developed an LLM-based conversational system for the robot and assessed its alignment with social cues as defined by human counselors. Preliminary results show distinct patterns in the robot's responses, such as a preference for calm and positive social emotions like 'joy' and 'lively', and frequent nodding gestures. Despite these tendencies, our approach has led to the development of a social robot capable of context-aware and more authentic interactions. Our work lays the groundwork for future studies on human-robot interactions, emphasizing the essential role of both verbal and non-verbal cues in creating social and empathetic robots.

Social Robots As Companions for Lonely Hearts: The Role of Anthropomorphism and Robot Appearances

Jun 05, 2023

Abstract: Loneliness is a distressing personal experience and a growing social issue. Social robots could alleviate the pain of loneliness, particularly for those who lack in-person interaction. This paper investigated how the effect of loneliness on anthropomorphizing social robots differs by robot appearance, and how it leads to the intention to purchase social robots. Participants viewed a video of one of three robots (machine-like, animal-like, and human-like) moving and interacting with a human counterpart. The results revealed that when individuals were lonelier, the tendency to anthropomorphize human-like robots increased more than the tendency to anthropomorphize animal-like robots. The moderating effect remained significant after covariates were included. The increase in anthropomorphic tendency predicted heightened purchase intent. The findings imply that human-like robots, more than animal-like robots, induce lonely individuals' desire to replenish their sense of connectedness through robots, and that anthropomorphic tendency reveals the potential of social robots as real-life companions for lonely individuals.

A Portrait of Emotion: Empowering Self-Expression through AI-Generated Art

Apr 26, 2023

Abstract: We investigated the potential and limitations of generative artificial intelligence (AI) in reflecting the authors' cognitive processes through creative expression. The focus is on the AI-generated artwork's ability to understand human intent (alignment) and visually represent emotions based on criteria such as creativity, aesthetics, novelty, amusement, and depth. Results show a preference for images based on the descriptions of the authors' emotions over the main events. We also found that images that overrepresent specific elements or stereotypes negatively impact AI alignment. Our findings suggest that AI could facilitate creativity and the self-expression of emotions. Our research framework with generative AIs can help design AI-based interventions in related fields (e.g., mental health education, therapy, and counseling).

When Crowd Meets Persona: Creating a Large-Scale Open-Domain Persona Dialogue Corpus

Apr 01, 2023

Abstract: Building a natural language dataset requires caution since word semantics is vulnerable to subtle text change or the definition of the annotated concept. Such a tendency can be seen in generative tasks like question-answering and dialogue generation and also in tasks that create a categorization-based corpus, like topic classification or sentiment analysis. Open-domain conversations involve two or more crowdworkers freely conversing about any topic, and collecting such data is particularly difficult for two reasons: 1) the dataset should be "crafted" rather than "obtained" due to privacy concerns, and 2) paid creation of such dialogues may differ from how crowdworkers behave in real-world settings. In this study, we tackle these issues when creating a large-scale open-domain persona dialogue corpus, where persona implies that the conversation is performed by several actors with a fixed persona and user-side workers from an unspecified crowd.

A Computational Approach to Measure Empathy and Theory-of-Mind from Written Texts

Aug 26, 2021

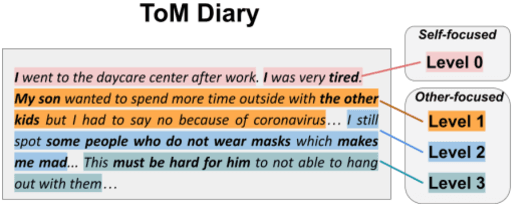

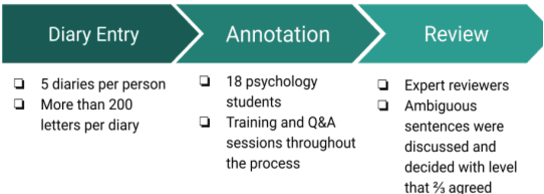

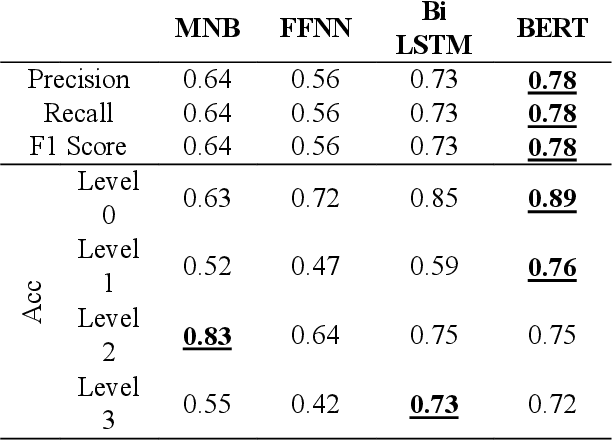

Abstract: Theory-of-mind (ToM), the human ability to infer the intentions and thoughts of others, is an essential part of empathetic experiences. We provide here a framework for using NLP models to measure ToM expressed in written texts. For this purpose, we introduce ToM-Diary, a crowdsourced collection of 18,238 diaries with 74,014 Korean sentences annotated with different ToM levels. Each diary was annotated with ToM levels by trained psychology students and reviewed by selected psychology experts. The annotators first divided the diaries into self-focused and other-focused, based on whether they mentioned other people. An example of a self-focused sentence is "I am feeling good". The other-focused sentences were further classified into different levels. These levels differ by whether the writer 1) mentions the presence of others without inferring their mental state (e.g., I saw a man walking down the street), 2) fails to take the perspective of others (e.g., I don't understand why they refuse to wear masks), or 3) successfully takes the perspective of others (e.g., It must have been hard for them to continue working). We tested whether state-of-the-art transformer-based models (e.g., BERT) could predict underlying ToM levels in sentences. We found that BERT more successfully detected self-focused sentences than other-focused ones. Sentences that successfully take the perspective of others (the highest ToM level) were the most difficult to predict. Our study suggests a promising direction for large-scale and computational approaches for identifying the ability of authors to empathize and take the perspective of others. The dataset is available at [URL](https://github.com/humanfactorspsych/covid19-tom-empathy-diary)

Gentlemen on the Road: Effect of Yielding Behavior of Autonomous Vehicle on Pedestrian Head Orientation

May 16, 2020

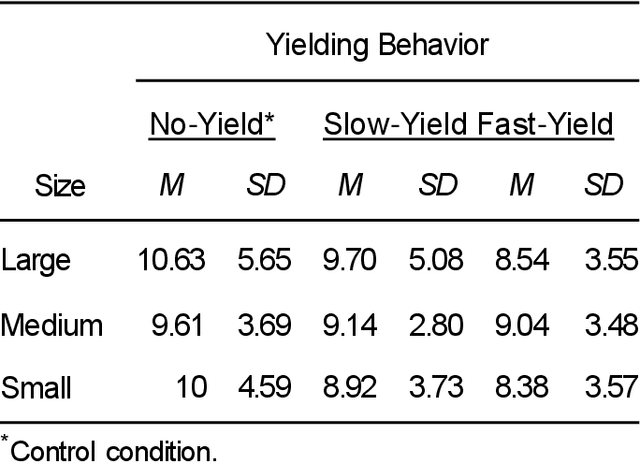

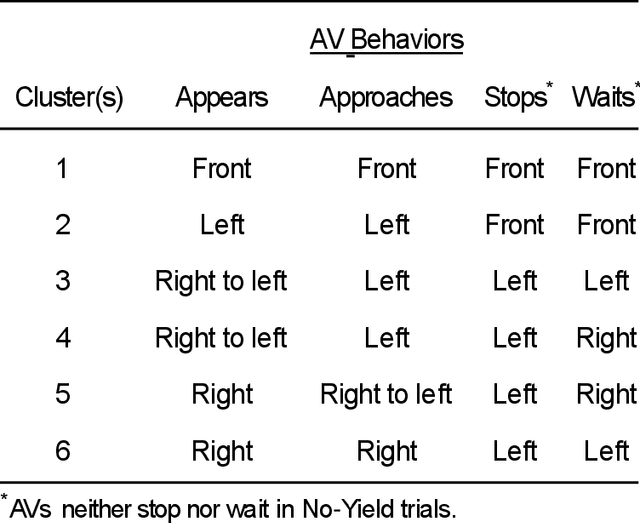

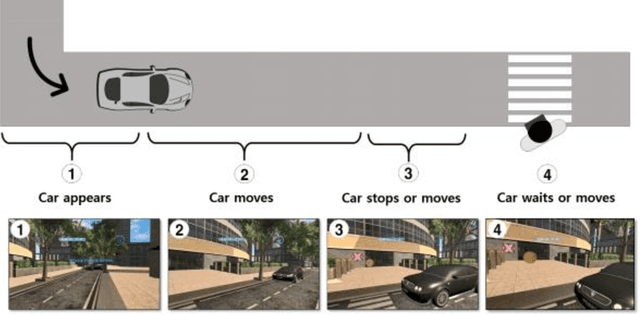

Abstract: Autonomous vehicles (AVs) can improve pedestrian safety by learning human-like social behaviors (e.g., yielding). We conducted a virtual reality experiment with 39 participants and measured crossing times (seconds) and head orientation (yaw degrees). We manipulated AV yielding behavior (no-yield, slow-yield, and fast-yield) and AV size (small, medium, and large). Using dynamic time warping and K-means clustering, we classified pedestrians' head orientation changes over time into 6 clusters of patterns. Results indicate that pedestrians' head orientation change was influenced by AV yielding behavior as well as by the size of the AV. Participants fixated on the front most of the time, even when the car came close. Participants changed head orientation most frequently when a large AV did not yield (no-yield). In post-experiment interviews, participants reported that yielding behavior and size affected their decision to cross and their perceived safety. For autonomous vehicles to be perceived as safer and more trustworthy, vehicle-specific factors such as size and yielding behavior should be considered in the design process.
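
To make the time-series step in this abstract more concrete, the sketch below shows a textbook dynamic time warping (DTW) distance between two head-yaw traces, plus a helper that assigns a trace to the nearest of several prototype traces. It is a minimal illustration only: the function names, the absolute-difference cost, and the sample yaw values are assumptions, and the abstract does not say how DTW was combined with K-means, so the clustering step itself is not reproduced here.

```python
from math import inf


def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) DTW distance between two 1-D sequences."""
    n, m = len(a), len(b)
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])  # assumed local cost: absolute difference
            cost[i][j] = d + min(
                cost[i - 1][j],      # repeat b[j-1] against the next element of a
                cost[i][j - 1],      # repeat a[i-1] against the next element of b
                cost[i - 1][j - 1],  # advance both sequences
            )
    return cost[n][m]


def nearest_prototype(trace, prototypes):
    """Return the index of the prototype with the smallest DTW distance."""
    return min(range(len(prototypes)), key=lambda k: dtw_distance(trace, prototypes[k]))


if __name__ == "__main__":
    # Made-up yaw traces in degrees: "keeps looking ahead" vs. "glances aside once".
    prototypes = [[0, 0, 0, 0], [0, 30, 60, 30]]
    late_glance = [0, 0, 30, 60, 30]  # same glance, started one sample later
    print(nearest_prototype(late_glance, prototypes))
```

Because DTW lets samples stretch in time, a glance that starts slightly later still matches the glancing prototype, which is the usual motivation for preferring DTW over a point-by-point distance on traces of this kind.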
