Songlin Xu

Classroom Simulacra: Building Contextual Student Generative Agents in Online Education for Learning Behavioral Simulation

Feb 04, 2025

Abstract: Student simulation helps educators improve their teaching by letting them interact with virtual students. However, most existing approaches ignore the modulating effects of course materials because of two challenges: the lack of datasets with granularly annotated course materials, and the inability of existing simulation models to process extremely long textual data. To address these challenges, we first ran a 6-week education workshop with N = 60 students to collect fine-grained data using a custom-built online education system, which logs students' learning behaviors as they interact with lecture materials over time. Second, we propose a transferable iterative reflection (TIR) module that augments both prompting-based and finetuning-based large language models (LLMs) for simulating learning behaviors. Our comprehensive experiments show that TIR enables the LLMs to simulate students more accurately than classical deep learning models, even with limited demonstration data. Our TIR approach better captures the granular dynamism of learning performance and inter-student correlations in classrooms, paving the way towards a "digital twin" for online education.

Peer attention enhances student learning

Dec 04, 2023

Abstract: Human visual attention is susceptible to social influences. In education, peer effects impact student learning, but their precise role in modulating attention remains unclear. Our experiment (N=311) demonstrates that displaying peer visual attention regions when students watch online course videos enhances their focus and engagement. However, students retain adaptability in following peer attention cues. Overall, guided peer attention improves learning experiences and outcomes. These findings elucidate how peer visual attention shapes students' gaze patterns, deepening our understanding of peer influence on learning. They also offer insights into designing adaptive online learning interventions that leverage peer attention modelling to optimize student attentiveness and success.

Leveraging generative artificial intelligence to simulate student learning behavior

Oct 30, 2023

Abstract: Student simulation presents a transformative approach to enhancing learning outcomes, advancing educational research, and ultimately shaping the future of effective pedagogy. We explore the feasibility of using large language models (LLMs), a remarkable achievement in AI, to simulate student learning behaviors. Unlike conventional machine-learning-based prediction, we leverage LLMs to instantiate virtual students with specific demographics and uncover intricate correlations among learning experiences, course materials, understanding levels, and engagement. Our objective is not merely to predict learning outcomes but to replicate the learning behaviors and patterns of real students. We validate this hypothesis through three experiments. The first experiment, based on a dataset of N = 145, simulates student learning outcomes from demographic data, revealing parallels with actual students across various demographic factors. In the second experiment (N = 4524), simulated behaviors become increasingly realistic as more assessment history is used to model the virtual students. The third experiment (N = 27), incorporating prior knowledge and course interactions, indicates a strong link between virtual students' learning behaviors and fine-grained mappings from test questions, course materials, engagement, and understanding levels. Collectively, these findings deepen our understanding of LLMs and demonstrate their viability for student simulation, enabling more adaptable curriculum design that enhances inclusivity and educational effectiveness.

Modeling Human Cognition with a Hybrid Deep Reinforcement Learning Agent

Jan 18, 2023

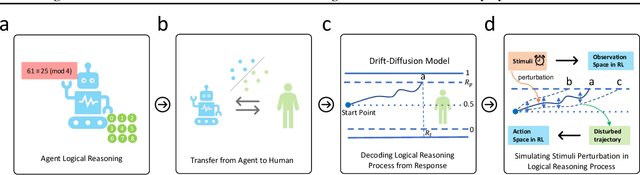

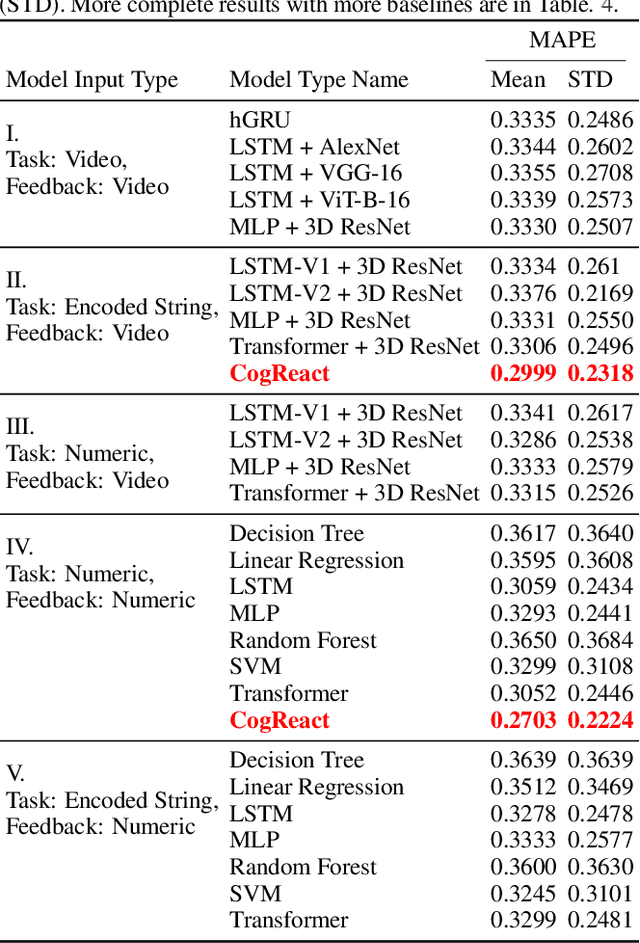

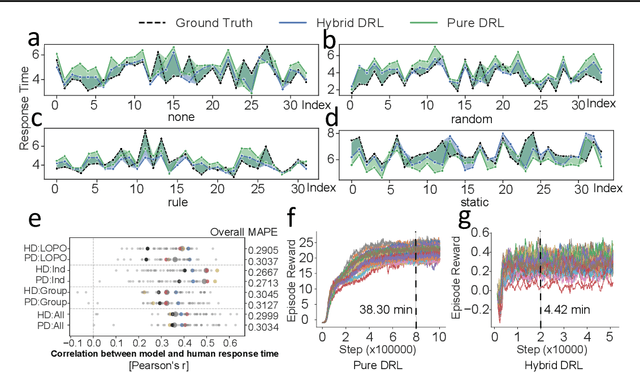

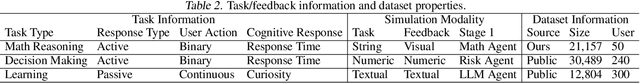

Abstract: A model of human cognition could help us understand how human cognitive behavior responds to external stimuli, pave the way for synthetic data generation, and assist in designing adaptive interventions for cognition regulation. When the external stimuli are highly dynamic, it becomes hard to model how they influence human cognitive behavior. Here we propose a novel hybrid deep reinforcement learning (HDRL) framework that integrates a drift-diffusion model to simulate the effect of dynamic time pressure on human cognitive performance. We start with an N=50 user study investigating how different factors affect human performance, which provides prior knowledge for the framework design. The evaluation demonstrates that this framework could improve human cognition modeling quantitatively and capture the general trend of human cognitive behavior qualitatively. Our framework could also be extended to explore and simulate how different external factors influence human behavior.
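
The drift-diffusion component mentioned in this abstract can be illustrated with a minimal Python sketch. It is not the authors' HDRL framework: it only simulates a standard two-boundary drift-diffusion process with Euler steps, treats the upper boundary as the correct response, and uses a hypothetical `deadline` argument as a simplified stand-in for time pressure (a trial that has not reached a boundary by the deadline counts as a timeout). All function and parameter names are illustrative assumptions.

```python
import math
import random


def simulate_ddm_trial(drift, threshold, noise=1.0, dt=0.001,
                       non_decision=0.3, deadline=None, rng=None):
    """Simulate one two-boundary drift-diffusion trial with Euler steps.

    Evidence starts at 0 and accumulates noisily until it reaches
    +threshold (choice 1, treated as correct) or -threshold (choice 0).
    Returns (choice, reaction_time). If the hypothetical `deadline`
    (seconds, including non-decision time) passes first, returns (None, deadline).
    """
    if rng is None:
        rng = random.Random()
    x, t = 0.0, 0.0
    step_sd = noise * math.sqrt(dt)  # std. dev. of the Gaussian increment per step
    while abs(x) < threshold:
        if deadline is not None and t + non_decision >= deadline:
            return None, deadline  # time ran out before a boundary was reached
        x += drift * dt + rng.gauss(0.0, step_sd)
        t += dt
    choice = 1 if x >= threshold else 0
    return choice, t + non_decision


def summarize(n_trials, seed=0, **kwargs):
    """Run many trials; return (accuracy, mean RT of answered trials, timeout rate)."""
    rng = random.Random(seed)
    results = [simulate_ddm_trial(rng=rng, **kwargs) for _ in range(n_trials)]
    answered = [(c, rt) for c, rt in results if c is not None]
    if not answered:
        return 0.0, float("nan"), 1.0
    accuracy = sum(c for c, _ in answered) / len(answered)
    mean_rt = sum(rt for _, rt in answered) / len(answered)
    timeout_rate = (n_trials - len(answered)) / n_trials
    return accuracy, mean_rt, timeout_rate


print(summarize(500, drift=2.0, threshold=1.0))                # no time pressure
print(summarize(500, drift=2.0, threshold=1.0, deadline=0.5))  # tight deadline
```

With a positive drift, most trials end at the upper boundary; adding a tight deadline turns slow trials into timeouts, which is one simple way time pressure can show up in simulated accuracy and reaction times.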

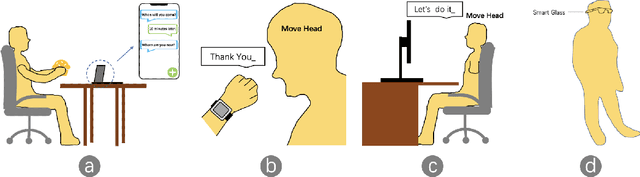

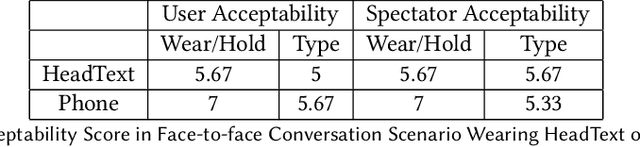

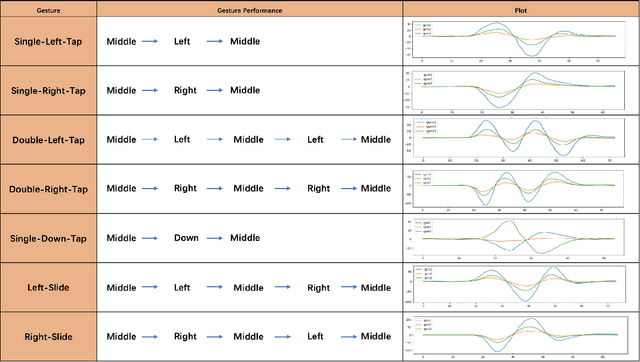

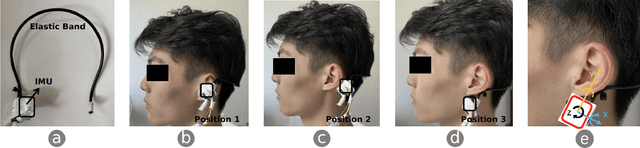

HeadText: Exploring Hands-free Text Entry using Head Gestures by Motion Sensing on a Smart Earpiece

May 23, 2022

Abstract: We present HeadText, a hands-free text entry technique that uses motion sensing on a smart earpiece. Users input text using only 7 head gestures for key selection, word selection, word commitment, and word cancellation. Head gesture recognition combines motion sensing on the smart earpiece, which captures head movement signals, with a machine learning classifier: K-Nearest-Neighbor (KNN) with a Dynamic Time Warping (DTW) distance measure. A 10-participant user study showed that HeadText could recognize the 7 head gestures with an accuracy of 94.29%. A second user study showed that HeadText could achieve a maximum text entry speed of 10.65 WPM and an average speed of 9.84 WPM. Finally, we demonstrate potential applications of HeadText in hands-free scenarios: (a) text entry for people with motor impairments, (b) private text entry, and (c) socially acceptable text entry.
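
The recognition approach named in this abstract, KNN with a DTW distance, can be sketched in Python as follows. This is a generic illustration under stated assumptions, not HeadText's implementation: each gesture sample is assumed to be a sequence of (pitch, yaw) motion readings, and the two template gestures ("nod", "shake") and their values are hypothetical toy data.

```python
import math
from collections import Counter


def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) dynamic time warping distance between two
    sequences of equal-dimension feature vectors, using Euclidean local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def knn_classify(query, templates, k=1):
    """templates: list of (sequence, label). Majority vote among the k
    templates nearest to the query under DTW distance."""
    ranked = sorted(templates, key=lambda item: dtw_distance(query, item[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical (pitch, yaw) templates for two head gestures.
templates = [
    ([(0, 0), (-1, 0), (-2, 0), (-1, 0), (0, 0)], "nod"),
    ([(0, 0), (0, 1), (0, 2), (0, 1), (0, 0)], "shake"),
]
# A slower, stretched nod still aligns perfectly with the nod template under DTW.
query = [(0, 0), (-1, 0), (-1, 0), (-2, 0), (-2, 0), (-1, 0), (0, 0)]
print(knn_classify(query, templates))  # → nod
```

DTW lets a slower or stretched performance of a gesture still match its template, which is why it pairs naturally with a nearest-neighbor classifier over a small set of recorded examples.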

Estimating Risk Levels of Driving Scenarios through Analysis of Driving Styles for Autonomous Vehicles

Apr 23, 2019

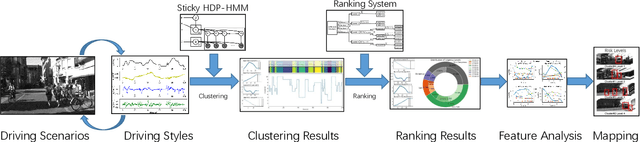

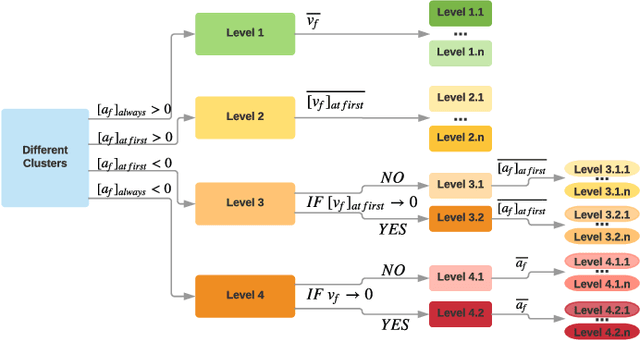

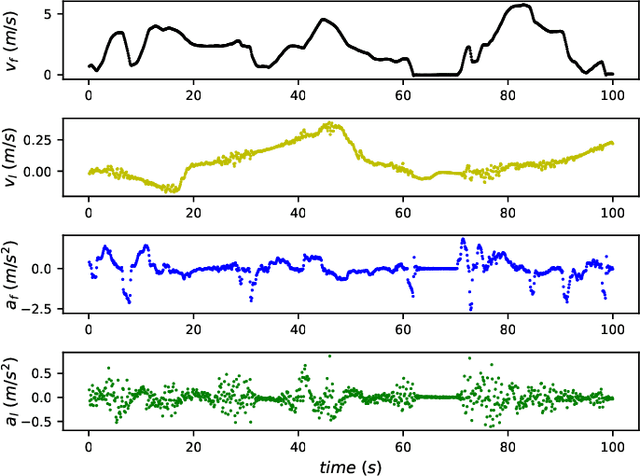

Abstract: To operate safely on the road, autonomous vehicles must not only identify objects in front of them but also automatically estimate the risk level those objects pose. Different objects pose different levels of danger to autonomous vehicles, so an evaluation system is needed to determine each object's danger level automatically; a system defined entirely by humans would be too subjective and incomplete. We therefore propose a framework based on a nonparametric Bayesian learning method, the sticky hierarchical Dirichlet process hidden Markov model (sticky HDP-HMM), and use it to discover the relationship between driving scenarios and driving styles. We use the analysis of autonomous vehicles' driving styles to reflect the risk levels that driving scenarios pose to the vehicles. In this framework, we first use the sticky HDP-HMM to extract driving styles from the dataset and obtain different clusters; we then propose an evaluation system to evaluate and rank the urgency levels of the clusters. Finally, we map the driving scenarios to the ranking results, obtaining clusters of driving scenarios at different risk levels. More importantly, we identify the relationship between driving scenarios and driving styles. The experiments show that our framework can cluster and rank driving styles of different urgency levels and find the relationship between driving scenarios and driving styles, and the conclusions agree with people's common sense about driving. Furthermore, this framework can help autonomous vehicles estimate the risk levels of driving scenarios and make precise and safe decisions.
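
The "sticky" part of the sticky HDP-HMM can be illustrated with its weak-limit (truncated) prior, sketched in plain Python below. This covers only the prior over state-transition rows, not the authors' inference code: with L states, global weights are drawn as β ~ Dir(γ/L, ..., γ/L) and each row as π_j ~ Dir(αβ + κe_j), so the extra mass κ on the self-transition encourages states (here, driving styles) to persist. The function names and parameter values are illustrative assumptions.

```python
import random


def sample_dirichlet(concentrations, rng):
    """Draw from Dirichlet(concentrations) by normalizing independent Gamma(c, 1) draws."""
    draws = [rng.gammavariate(c, 1.0) for c in concentrations]
    total = sum(draws)
    return [d / total for d in draws]


def sample_sticky_transitions(num_states, gamma, alpha, kappa, rng):
    """Sample a transition matrix from the weak-limit sticky HDP-HMM prior.

    beta ~ Dir(gamma/L, ..., gamma/L) holds global state weights, and row j is
    pi_j ~ Dir(alpha * beta + kappa * e_j): kappa adds extra mass to the
    self-transition j -> j, which makes states "sticky".
    """
    beta = sample_dirichlet([gamma / num_states] * num_states, rng)
    matrix = []
    for j in range(num_states):
        conc = [alpha * b + (kappa if k == j else 0.0) for k, b in enumerate(beta)]
        matrix.append(sample_dirichlet(conc, rng))
    return matrix


def mean_self_transition(kappa, num_states=5, draws=200, seed=0):
    """Average diagonal entry pi_jj over many prior draws (illustrative settings)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(draws):
        matrix = sample_sticky_transitions(num_states, gamma=5.0, alpha=5.0,
                                           kappa=kappa, rng=rng)
        total += sum(matrix[j][j] for j in range(num_states))
    return total / (draws * num_states)


print(mean_self_transition(kappa=0.0))   # prior expectation is 1/L = 0.2
print(mean_self_transition(kappa=50.0))  # prior expectation is at least 50/55
```

With κ = 0 the average self-transition probability stays near 1/L, while a large κ pushes it close to 1, which discourages the rapid switching between redundant states that a plain HDP-HMM tends to produce.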
