Siyuan Lin

MapLocNet: Coarse-to-Fine Feature Registration for Visual Re-Localization in Navigation Maps

Jul 11, 2024

Abstract: Robust localization is the cornerstone of autonomous driving, especially in challenging urban environments where GPS signals suffer from multipath errors. Traditional localization approaches rely on high-definition (HD) maps, which consist of precisely annotated landmarks. However, building HD maps is expensive and difficult to scale. Given these limitations, leveraging navigation maps has emerged as a promising low-cost alternative for localization. Current approaches based on navigation maps can achieve highly accurate localization, but their complex matching strategies lead to inference latency too high to meet real-time demands. To address these limitations, we propose a novel transformer-based neural re-localization method. Inspired by image registration, our approach performs a coarse-to-fine neural feature registration between navigation map features and visual bird's-eye-view features. Our method significantly outperforms the current state-of-the-art OrienterNet on both the nuScenes and Argoverse datasets, with improvements of nearly 10%/20% in localization accuracy and 30/16 FPS in inference speed in the single-view and surround-view input settings, respectively. We highlight that our research presents an HD-map-free localization method for autonomous driving, offering cost-effective, reliable, and scalable performance in challenging driving environments.

BLOS-BEV: Navigation Map Enhanced Lane Segmentation Network, Beyond Line of Sight

Jul 11, 2024

Abstract: Bird's-eye-view (BEV) representation is crucial for the perception function in autonomous driving tasks. It is difficult to balance the accuracy, efficiency, and range of a BEV representation. Existing works are restricted to a limited perception range within 50 meters. Extending the BEV representation range can greatly benefit downstream tasks such as topology reasoning, scene understanding, and planning by offering more comprehensive information and more reaction time. Standard-Definition (SD) navigation maps can provide a lightweight representation of road structure topology, characterized by ease of acquisition and low maintenance costs. An intuitive idea is to combine the close-range visual information from onboard cameras with the beyond line-of-sight (BLOS) environmental priors from SD maps to realize expanded perceptual capabilities. In this paper, we propose BLOS-BEV, a novel BEV segmentation model that incorporates SD maps for accurate beyond line-of-sight perception, up to 200m. Our approach is applicable to common BEV architectures and can achieve excellent results by incorporating information derived from SD maps. We explore various feature fusion schemes to effectively integrate the visual BEV representations and semantic features from the SD map, aiming to leverage the complementary information from both sources optimally. Extensive experiments demonstrate that our approach achieves state-of-the-art performance in BEV segmentation on the nuScenes and Argoverse benchmarks. Through multi-modal inputs, BEV segmentation is significantly enhanced at close ranges below 50m, while also demonstrating superior performance in long-range scenarios, surpassing other methods by over 20% mIoU at distances ranging from 50-200m.

Lane Departure Prediction Based on Closed-Loop Vehicle Dynamics

Jan 24, 2022

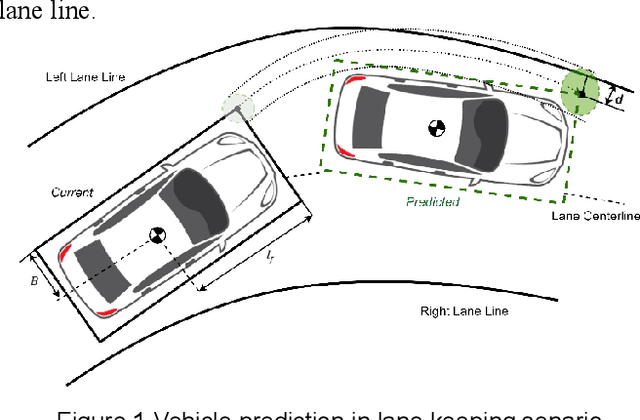

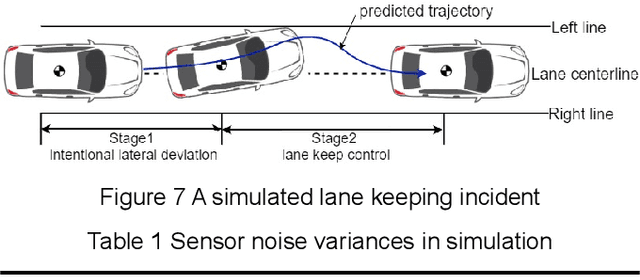

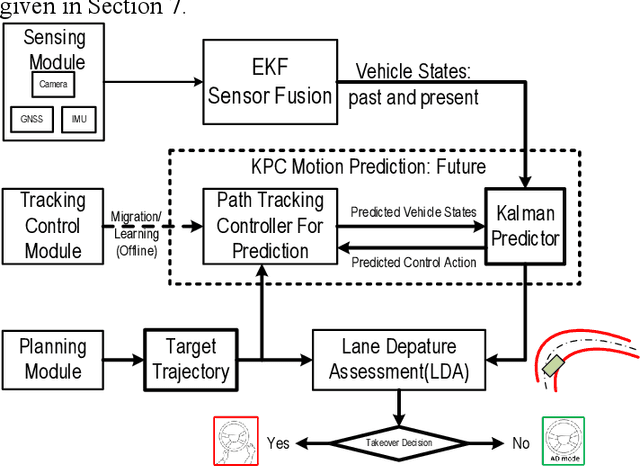

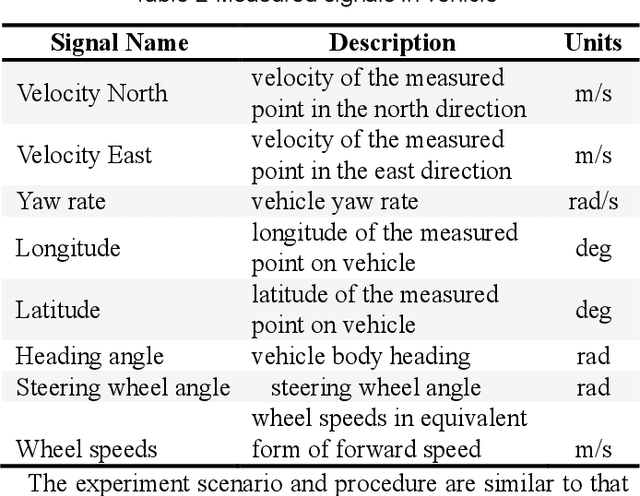

Abstract: An automated driving system should be able to supervise its own performance and to request that the human driver take over when necessary. In the lane keeping scenario, predicting the vehicle's future trajectory is the key to realizing safe and trustworthy driving automation. Previous studies on vehicle trajectory prediction mainly fall into two categories, i.e. physics-based and manoeuvre-based methods. Using a physics-based methodology, this paper proposes a lane departure prediction algorithm based on a closed-loop vehicle dynamics model. We use an extended Kalman filter to estimate the current vehicle states based on sensing module outputs. Then a Kalman predictor with the actual lane keeping control law is used to predict steering actions and vehicle states in the future. A lane departure assessment module evaluates the probabilistic distribution of vehicle corner positions and decides whether to initiate a human takeover request. The prediction algorithm is capable of describing the stochastic characteristics of the future vehicle pose, which is preliminarily verified in simulated tests. Finally, on-road tests at speeds of 15 to 50 km/h further show that the proposed method can accurately predict the vehicle's future trajectory. It may serve as a promising solution to lane departure risk assessment for automated lane keeping functions.
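
As a rough illustration of the Kalman-predictor idea described in this abstract, the sketch below propagates a Gaussian state estimate through a closed-loop linear model and turns the predicted lateral-offset distribution into a departure probability. It is not the paper's implementation. The two-state lateral error model, the feedback gain, the noise levels, the lane margin, and the takeover threshold are all hypothetical values chosen for illustration. The paper evaluates vehicle corner positions, whereas this sketch simplifies to the lateral offset of a single reference point.

```python
import math
import numpy as np


def predict_closed_loop(mean, cov, a_cl, q, steps):
    """Propagate a Gaussian through x[k+1] = a_cl @ x[k] + w, with w ~ N(0, q).

    Returns the predicted means and covariances for each future step.
    """
    means, covs = [], []
    for _ in range(steps):
        mean = a_cl @ mean
        cov = a_cl @ cov @ a_cl.T + q
        means.append(mean)
        covs.append(cov)
    return means, covs


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def departure_probability(mu, var, margin):
    """P(|e_y| > margin) for a lateral offset e_y ~ N(mu, var), var > 0."""
    sigma = math.sqrt(var)
    inside = normal_cdf((margin - mu) / sigma) - normal_cdf((-margin - mu) / sigma)
    return 1.0 - inside


# Assumed state: [lateral offset e_y (m), heading error e_psi (rad)].
v, dt = 10.0, 0.1                      # assumed speed (m/s) and time step (s)
a = np.array([[1.0, v * dt],
              [0.0, 1.0]])             # open-loop kinematic error model
b = np.array([[0.0],
              [dt]])                   # input: commanded yaw rate
k = np.array([[0.5, 2.0]])             # hypothetical lane keeping gain, u = -k @ x
a_cl = a - b @ k                       # closed-loop dynamics used by the predictor

q = np.diag([1e-4, 1e-5])              # assumed process noise covariance
mean0 = np.array([0.3, 0.0])           # current estimate, e.g. from an EKF
cov0 = np.diag([0.01, 0.001])          # current estimate covariance

means, covs = predict_closed_loop(mean0, cov0, a_cl, q, steps=30)
risk = [departure_probability(m[0], p[0, 0], margin=0.8)
        for m, p in zip(means, covs)]

# Hypothetical decision rule: request takeover if any step exceeds 5% risk.
takeover = max(risk) > 0.05
```

Because the control law is folded into `a_cl`, the predicted covariance reflects how the controller is expected to correct deviations, rather than growing as it would under open-loop propagation.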
