Siwang Zhou

Pedestrian Trajectory Prediction Based on Social Interactions Learning With Random Weights

Jan 13, 2025

Abstract: Pedestrian trajectory prediction is a critical technology in the evolution of self-driving cars toward complete artificial intelligence. In recent years, modeling pedestrians' social interactions from their trajectories has attracted great interest as a route to more accurate trajectory prediction. However, existing methods for modeling pedestrian social interactions rely on pre-defined rules and struggle to capture non-explicit social interactions. In this work, we propose a novel framework named DTGAN, which extends the application of Generative Adversarial Networks (GANs) to graph sequence data, with the primary objective of automatically capturing implicit social interactions and achieving precise predictions of pedestrian trajectories. DTGAN incorporates random weights within each graph to eliminate the need for pre-defined interaction rules. We further enhance the performance of DTGAN by exploring diverse task loss functions during adversarial training, which yields improvements of 16.7% and 39.3% on the ADE and FDE metrics, respectively. The effectiveness and accuracy of our framework are verified on two public datasets. The experimental results show that the proposed DTGAN achieves superior performance and is well able to understand pedestrians' intentions.
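For readers unfamiliar with the two metrics named in the abstract, the sketch below computes them for a single pedestrian under their usual definitions: ADE (Average Displacement Error) is the mean Euclidean distance between predicted and ground-truth positions over all predicted time steps, and FDE (Final Displacement Error) is that distance at the last step. The function name `ade_fde` and the list-of-tuples input format are assumptions made for illustration; they are not taken from the DTGAN paper.

```python
import math


def ade_fde(pred, truth):
    """Return (ADE, FDE) for one pedestrian.

    pred, truth: equal-length lists of (x, y) positions, one per time step.
    The input format is an assumption made for this sketch.
    """
    if not pred or len(pred) != len(truth):
        raise ValueError("pred and truth must be non-empty and equally long")
    # Euclidean distance between prediction and ground truth at each step.
    dists = [
        math.hypot(px - tx, py - ty)
        for (px, py), (tx, ty) in zip(pred, truth)
    ]
    ade = sum(dists) / len(dists)  # average over all predicted steps
    fde = dists[-1]                # error at the final predicted step
    return ade, fde


if __name__ == "__main__":
    predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    observed = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
    print(ade_fde(predicted, observed))
```

Lower values are better for both metrics. Results over a dataset are normally averaged across all pedestrians.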

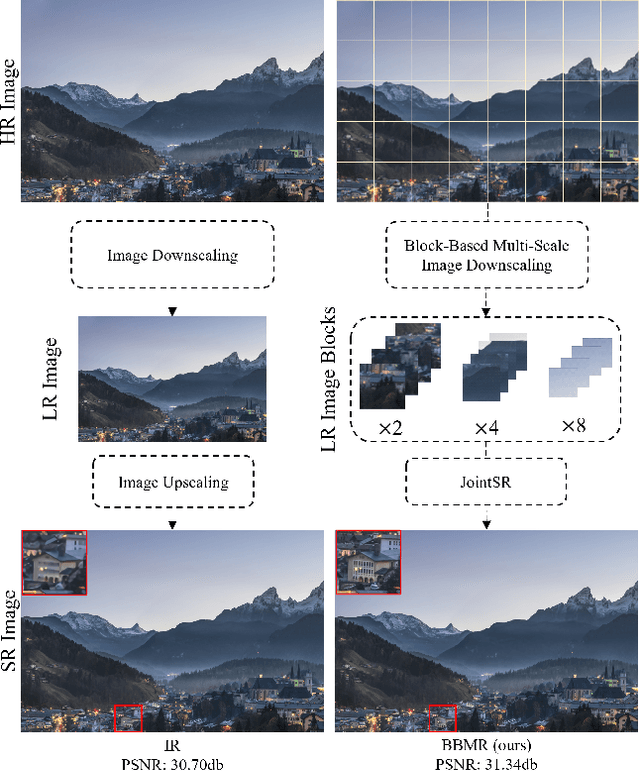

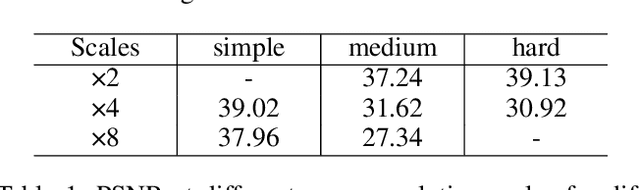

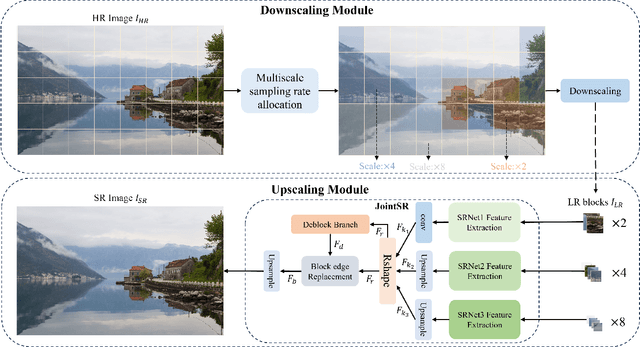

Block-Based Multi-Scale Image Rescaling

Dec 16, 2024

Abstract: Image rescaling (IR) seeks to determine the optimal low-resolution (LR) representation of a high-resolution (HR) image to reconstruct a high-quality super-resolution (SR) image. Typically, HR images with resolutions exceeding 2K possess rich information that is unevenly distributed across the image. Traditional image rescaling methods often fall short because they focus solely on the overall scaling rate, ignoring the varying amounts of information in different parts of the image. To address this limitation, we propose a Block-Based Multi-Scale Image Rescaling Framework (BBMR), tailored for IR tasks involving HR images of 2K resolution and higher. BBMR consists of two main components: the Downscaling Module and the Upscaling Module. In the Downscaling Module, the HR image is segmented into sub-blocks of equal size, with each sub-block receiving a dynamically allocated scaling rate while maintaining a constant overall scaling rate. For the Upscaling Module, we introduce the Joint Super-Resolution method (JointSR), which performs SR on these sub-blocks with varying scaling rates and effectively eliminates blocking artifacts. Experimental results demonstrate that BBMR significantly enhances SR image quality on the 2K and 4K test datasets compared to initial network image rescaling methods.
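The idea of giving each block its own scaling rate while keeping the overall rate constant can be illustrated with a toy allocation. The sketch below is not BBMR's allocation rule, which the abstract does not describe. It assumes three candidate downscaling factors (2, 4, 8), a hypothetical per-block detail score, and a pixel budget equal to uniform 4x downscaling. The function names are also assumptions.

```python
def allocate_scales(scores):
    """Assign a downscaling factor (2, 4, or 8) to each equal-size block.

    scores: hypothetical per-block detail scores (higher = more detail).
    The total number of kept pixels always equals that of uniform 4x
    downscaling, so the overall scaling rate stays constant.
    """
    n = len(scores)
    scales = [4] * n  # start from uniform 4x downscaling
    # Block indices sorted from most detailed to least detailed.
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    hi, lo = 0, n - 1
    # Moving a block from 4x to 2x costs 12/64 of its pixels, and moving a
    # block from 4x to 8x frees 3/64, so four downgrades pay for one upgrade.
    while lo - hi >= 4:
        scales[order[hi]] = 2
        for j in range(4):
            scales[order[lo - j]] = 8
        hi += 1
        lo -= 4
    return scales


def kept_pixels_64ths(scales):
    """Kept pixels per block, in units of 1/64 of a block's pixel count."""
    return sum(64 // (s * s) for s in scales)


if __name__ == "__main__":
    detail = [9, 1, 2, 3, 4, 5, 6, 7, 8, 0]
    factors = allocate_scales(detail)
    print(factors, kept_pixels_64ths(factors), 4 * len(detail))
```

The budget check compares the kept-pixel total against `4 * len(detail)`, which is what uniform 4x downscaling would keep in the same units. Blocks that cannot be paired into an upgrade and four downgrades simply stay at 4x.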

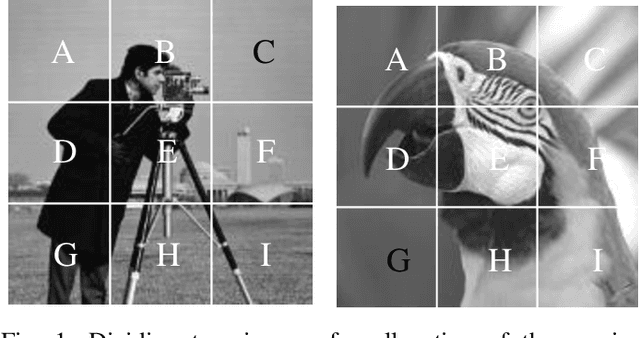

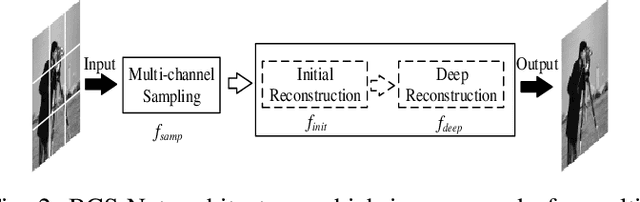

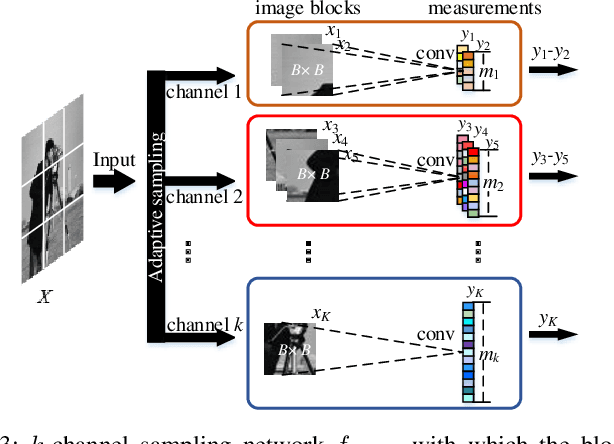

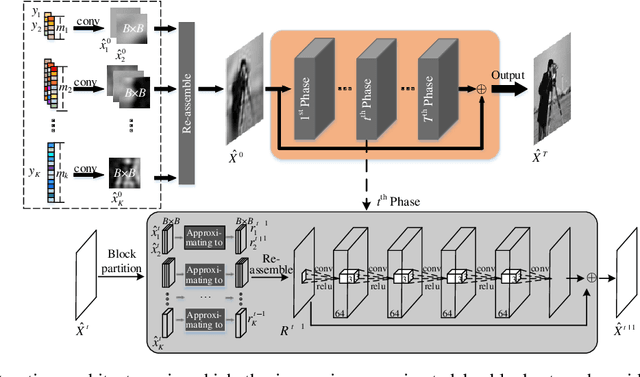

Multi-Channel Deep Networks for Block-Based Image Compressive Sensing

Aug 28, 2019

Abstract: Incorporating deep neural networks into image compressive sensing (CS) has received intensive attention recently. As deep network approaches learn the inverse mapping directly from the CS measurements, a number of models have to be trained, each of which corresponds to a sampling rate. This may potentially degrade the performance of image CS, especially when multiple sampling rates are assigned to different blocks within an image. In this paper, we develop a multi-channel deep network for block-based image CS with performance significantly exceeding the current state-of-the-art methods. The significant performance improvement of the model is attributed to block-based sampling rate allocation and model-level removal of blocking artifacts. Specifically, the image blocks with a variety of sampling rates can be reconstructed in a single model by exploiting inter-block correlation. At the same time, the initially reconstructed blocks are reassembled into a full image to remove blocking artifacts within the network by unrolling a hand-designed block-based CS algorithm. Experimental results demonstrate that the proposed method outperforms the state-of-the-art CS methods by a large margin in terms of the objective metrics PSNR and SSIM as well as subjective visual quality.
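To make "different sampling rates for different blocks" concrete, the sketch below shows the sampling side of generic block-based CS: a flattened block x of n pixels is measured as y = Φx, where Φ has m ≈ rate × n rows. This is a minimal illustration under stated assumptions, not the paper's network. The random Gaussian Φ, the function name `measure_block`, and the example rates are all hypothetical.

```python
import random


def measure_block(block, rate, rng):
    """Compute CS measurements y = Phi @ x for one flattened image block.

    block: list of n pixel values; rate: sampling rate in (0, 1].
    Phi is a random Gaussian matrix here, which is an assumption made
    for illustration; learned sampling matrices are also common.
    """
    n = len(block)
    m = max(1, round(rate * n))  # number of measurements for this block
    sigma = 1.0 / m ** 0.5
    phi = [[rng.gauss(0.0, sigma) for _ in range(n)] for _ in range(m)]
    return [sum(p * x for p, x in zip(row, block)) for row in phi]


if __name__ == "__main__":
    rng = random.Random(0)
    smooth_block = [0.5] * 16                    # low detail: sample sparsely
    busy_block = [(i % 4) / 3 for i in range(16)]  # more detail: sample densely
    print(len(measure_block(smooth_block, 0.25, rng)))
    print(len(measure_block(busy_block, 0.5, rng)))
```

Because each rate produces a measurement vector of a different length, a conventional setup needs one reconstruction model per rate, which is the limitation the abstract says the multi-channel network avoids.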
