Simon Dirmeier

Causal Posterior Estimation

May 27, 2025

Abstract: We present Causal Posterior Estimation (CPE), a novel method for Bayesian inference in simulator models, i.e., models where the evaluation of the likelihood function is intractable or too computationally expensive, but where one can simulate model outputs given parameter values. CPE utilizes a normalizing flow-based (NF) approximation to the posterior distribution which carefully incorporates the conditional dependence structure induced by the graphical representation of the model into the neural network, thereby improving the accuracy of the approximation. We introduce both discrete and continuous NF architectures for CPE and propose a constant-time sampling procedure for the continuous case which reduces the computational complexity of drawing samples to O(1), as for discrete NFs. We show, through an extensive experimental evaluation, that by incorporating the conditional dependencies induced by the graphical model directly into the neural network, rather than learning them from data, CPE is able to conduct highly accurate posterior inference, either outperforming or matching the state of the art in the field.

High Resolution Seismic Waveform Generation using Denoising Diffusion

Oct 25, 2024

Abstract: Accurate prediction and synthesis of seismic waveforms are crucial for seismic hazard assessment and earthquake-resistant infrastructure design. Existing prediction methods, such as Ground Motion Models and physics-based simulations, often fail to capture the full complexity of seismic wavefields, particularly at higher frequencies. This study introduces a novel, efficient, and scalable generative model for high-frequency seismic waveform generation. Our approach leverages a spectrogram representation of seismic waveform data, which is reduced to a lower-dimensional submanifold via an autoencoder. A state-of-the-art diffusion model is trained to generate this latent representation, conditioned on key input parameters: earthquake magnitude, recording distance, site conditions, and faulting type. The model generates waveforms with frequency content up to 50 Hz. Any scalar ground motion statistic, such as peak ground motion amplitudes and spectral accelerations, can be readily derived from the synthesized waveforms. We validate our model using commonly used seismological metrics, and performance metrics from image generation studies. Our results demonstrate that our openly available model can generate distributions of realistic high-frequency seismic waveforms across a wide range of input parameters, even in data-sparse regions. For the scalar ground motion statistics commonly used in seismic hazard and earthquake engineering studies, we show that the model accurately reproduces both the median trends of the real data and its variability. To evaluate and compare the growing number of this and similar 'Generative Waveform Models' (GWM), we argue that they should generally be openly available and that they should be included in community efforts for ground motion model evaluations.

Simulation-based inference with the Python Package sbijax

Sep 28, 2024

Abstract: Neural simulation-based inference (SBI) describes an emerging family of methods for Bayesian inference with intractable likelihood functions that use neural networks as surrogate models. Here we introduce sbijax, a Python package that implements a wide variety of state-of-the-art methods in neural simulation-based inference using a user-friendly programming interface. sbijax offers high-level functionality to quickly construct SBI estimators, and compute and visualize posterior distributions with only a few lines of code. In addition, the package provides functionality for conventional approximate Bayesian computation, to compute model diagnostics, and to automatically estimate summary statistics. By virtue of being entirely written in JAX, sbijax is extremely computationally efficient, allowing rapid training of neural networks and executing code automatically in parallel on both CPU and GPU.
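As a rough illustration of the "conventional approximate Bayesian computation" mentioned in the abstract, the sketch below implements plain rejection ABC using only the Python standard library. It does not use or mirror the sbijax API. The simulator (a Normal with unknown mean), the Uniform(-5, 5) prior, the sample-mean summary statistic, and the tolerance `epsilon` are all assumptions chosen for the example.

```python
import random
import statistics


def simulate(theta, n_obs, rng):
    """Hypothetical simulator: n_obs draws from Normal(theta, 1)."""
    return [rng.gauss(theta, 1.0) for _ in range(n_obs)]


def rejection_abc(y_obs, n_draws, epsilon, rng):
    """Keep prior draws whose simulated summary is within epsilon of the observed one."""
    s_obs = statistics.fmean(y_obs)  # summary statistic: sample mean (assumption)
    accepted = []
    for _ in range(n_draws):
        theta = rng.uniform(-5.0, 5.0)  # assumed prior: Uniform(-5, 5)
        y_sim = simulate(theta, len(y_obs), rng)
        if abs(statistics.fmean(y_sim) - s_obs) < epsilon:
            accepted.append(theta)
    return accepted


rng = random.Random(0)
y_obs = simulate(2.0, 50, rng)  # synthetic "observed" data, true theta = 2
posterior_draws = rejection_abc(y_obs, n_draws=20000, epsilon=0.1, rng=rng)
print(len(posterior_draws), statistics.fmean(posterior_draws))
```

The accepted values of `theta` approximate posterior draws; a smaller `epsilon` tightens the approximation at the cost of fewer accepted samples, which is one motivation for the neural surrogate methods the abstract describes.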

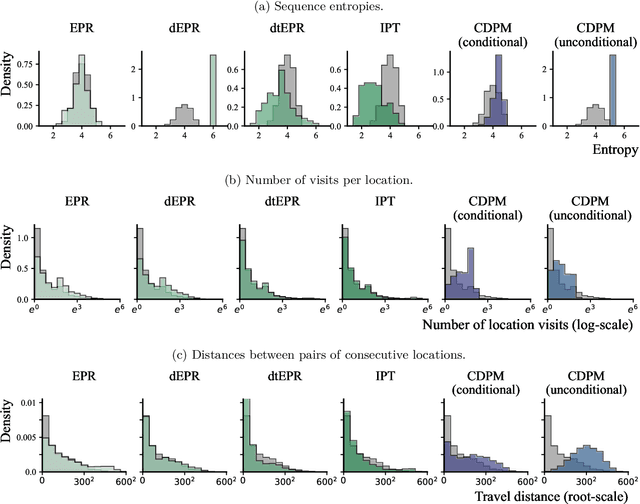

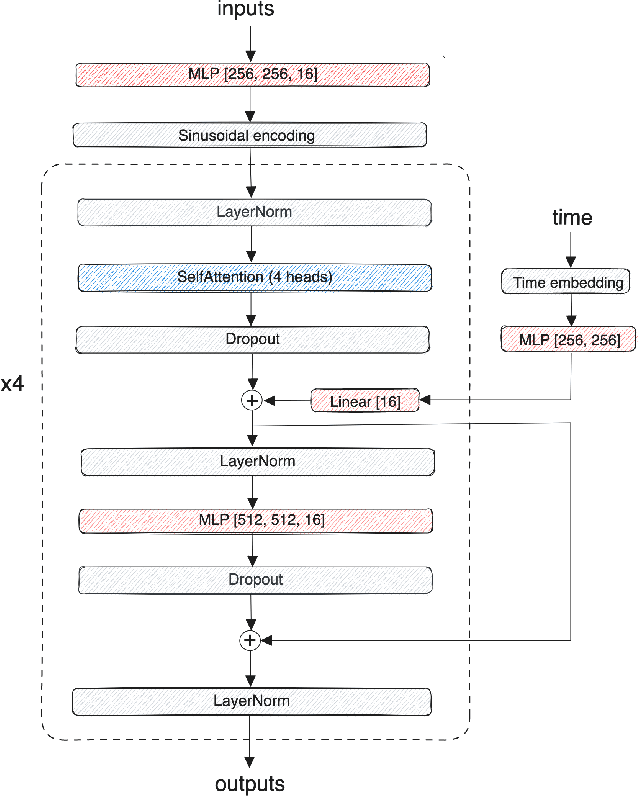

Synthetic location trajectory generation using categorical diffusion models

Feb 19, 2024

Abstract: Diffusion probabilistic models (DPMs) have rapidly evolved to be one of the predominant generative models for the simulation of synthetic data, for instance, for computer vision, audio, natural language processing, or biomolecule generation. Here, we propose using DPMs for the generation of synthetic individual location trajectories (ILTs) which are sequences of variables representing physical locations visited by individuals. ILTs are of major importance in mobility research to understand the mobility behavior of populations and to ultimately inform political decision-making. We represent ILTs as multi-dimensional categorical random variables and propose to model their joint distribution using a continuous DPM by first applying the diffusion process in a continuous unconstrained space and then mapping the continuous variables into a discrete space. We demonstrate that our model can synthesize realistic ILTs by comparing conditionally and unconditionally generated sequences to real-world ILTs from a GNSS tracking data set which suggests the potential use of our model for synthetic data generation, for example, for benchmarking models used in mobility research.
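One way to picture the continuous-to-discrete idea in the abstract is the toy sketch below. It encodes each location as a one-hot vector, applies a single Gaussian forward-noising step in continuous space, and maps the result back to a category with an argmax. The one-hot encoding, the argmax mapping, the noise level `alpha_bar`, and all function names are assumptions made for illustration; the abstract does not specify the encoding or mapping the paper uses, and no learned denoiser is included here.

```python
import math
import random


def one_hot(index, n_categories):
    """Embed a categorical value as a continuous one-hot vector."""
    return [1.0 if k == index else 0.0 for k in range(n_categories)]


def add_noise(x, alpha_bar, rng):
    """Gaussian forward step: sqrt(alpha_bar) * x + sqrt(1 - alpha_bar) * noise."""
    scale = math.sqrt(alpha_bar)
    sigma = math.sqrt(1.0 - alpha_bar)
    return [scale * v + sigma * rng.gauss(0.0, 1.0) for v in x]


def to_category(x):
    """Map a continuous vector back to a discrete category via argmax (assumption)."""
    return max(range(len(x)), key=lambda k: x[k])


rng = random.Random(0)
trajectory = [3, 0, 2, 2, 1]  # hypothetical sequence of visited location IDs
n_locations = 4

encoded = [one_hot(loc, n_locations) for loc in trajectory]
noised = [add_noise(x, alpha_bar=0.999, rng=rng) for x in encoded]  # very light noise
decoded = [to_category(x) for x in noised]
print(decoded)
```

With such light noise the decoded sequence matches the input; at smaller `alpha_bar` the continuous vectors approach pure noise, and a trained reverse process would be needed to recover plausible trajectories.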

Revealing behavioral impact on mobility prediction networks through causal interventions

Nov 20, 2023

Abstract: Deep neural networks are increasingly utilized in mobility prediction tasks, yet their intricate internal workings pose challenges for interpretability, especially in comprehending how various aspects of mobility behavior affect predictions. In this study, we introduce a causal intervention framework to assess the impact of mobility-related factors on neural networks designed for next location prediction -- a task focusing on predicting the immediate next location of an individual. To achieve this, we employ individual mobility models to generate synthetic location visit sequences and control behavior dynamics by intervening in their data generation process. We evaluate the interventional location sequences using mobility metrics and input them into well-trained networks to analyze performance variations. The results demonstrate the effectiveness in producing location sequences with distinct mobility behaviors, thus facilitating the simulation of diverse spatial and temporal changes. These changes result in performance fluctuations in next location prediction networks, revealing impacts of critical mobility behavior factors, including sequential patterns in location transitions, proclivity for exploring new locations, and preferences in location choices at population and individual levels. The gained insights hold significant value for the real-world application of mobility prediction networks, and the framework is expected to promote the use of causal inference for enhancing the interpretability and robustness of neural networks in mobility applications.

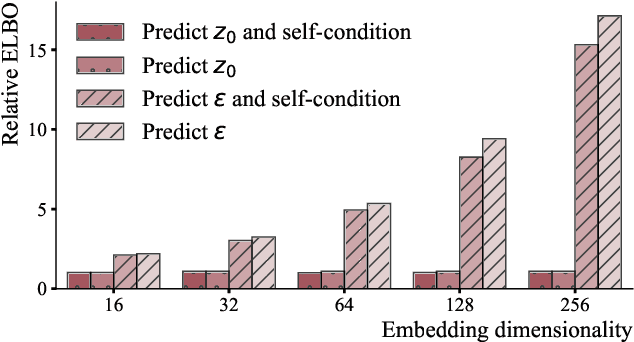

Diffusion models for probabilistic programming

Nov 01, 2023

Abstract: We propose Diffusion Model Variational Inference (DMVI), a novel method for automated approximate inference in probabilistic programming languages (PPLs). DMVI utilizes diffusion models as variational approximations to the true posterior distribution by deriving a novel bound to the marginal likelihood objective used in Bayesian modelling. DMVI is easy to implement, allows hassle-free inference in PPLs without the drawbacks of, e.g., variational inference using normalizing flows, and does not impose any constraints on the underlying neural network model. We evaluate DMVI on a set of common Bayesian models and show that its posterior inferences are in general more accurate than those of contemporary methods used in PPLs while having a similar computational cost and requiring less manual tuning.

Uncertainty quantification and out-of-distribution detection using surjective normalizing flows

Nov 01, 2023

Abstract: Reliable quantification of epistemic and aleatoric uncertainty is of crucial importance in applications where models are trained in one environment but applied to multiple different environments, as is often seen in real-world applications, for example, in climate science or mobility analysis. We propose a simple approach using surjective normalizing flows to identify out-of-distribution data sets in deep neural network models that can be computed in a single forward pass. The method builds on recent developments in deep uncertainty quantification and generative modeling with normalizing flows. We apply our method to a synthetic data set that has been simulated using a mechanistic model from the mobility literature and several data sets simulated from interventional distributions induced by soft and atomic interventions on that model, and demonstrate that our method can reliably discern out-of-distribution data from in-distribution data. We compare the surjective flow model to a Dirichlet process mixture model and a bijective flow and find that the surjections are a crucial component to reliably distinguish in-distribution from out-of-distribution data.

Simulation-based inference using surjective sequential neural likelihood estimation

Aug 02, 2023

Abstract: We present Surjective Sequential Neural Likelihood (SSNL) estimation, a novel method for simulation-based inference in models where the evaluation of the likelihood function is not tractable and only a simulator that can generate synthetic data is available. SSNL fits a dimensionality-reducing surjective normalizing flow model and uses it as a surrogate likelihood function which allows for conventional Bayesian inference using either Markov chain Monte Carlo methods or variational inference. By embedding the data in a low-dimensional space, SSNL solves several issues previous likelihood-based methods had when applied to high-dimensional data sets that, for instance, contain non-informative data dimensions or lie along a lower-dimensional manifold. We evaluate SSNL on a wide variety of experiments and show that it generally outperforms contemporary methods used in simulation-based inference, for instance, on a challenging real-world example from astrophysics which models the magnetic field strength of the sun using a solar dynamo model.
