Shuo Nie

Stop Rewarding Hallucinated Steps: Faithfulness-Aware Step-Level Reinforcement Learning for Small Reasoning Models

Feb 05, 2026

Abstract: As large language models become smaller and more efficient, small reasoning models (SRMs) are crucial for enabling chain-of-thought (CoT) reasoning in resource-constrained settings. However, they are prone to faithfulness hallucinations, especially in intermediate reasoning steps. Existing mitigation methods based on online reinforcement learning rely on outcome-based rewards or coarse-grained CoT evaluation, which can inadvertently reinforce unfaithful reasoning when the final answer is correct. To address these limitations, we propose Faithfulness-Aware Step-Level Reinforcement Learning (FaithRL), introducing step-level supervision via explicit faithfulness rewards from a process reward model, together with an implicit truncated resampling strategy that generates contrastive signals from faithful prefixes. Experiments across multiple SRMs and Open-Book QA benchmarks demonstrate that FaithRL consistently reduces hallucinations in both the CoT and final answers, leading to more faithful and reliable reasoning. Code is available at https://github.com/Easy195/FaithRL.

Subgraph Aggregation for Out-of-Distribution Generalization on Graphs

Oct 29, 2024

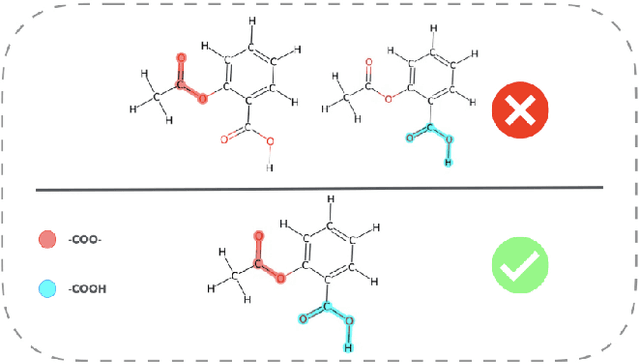

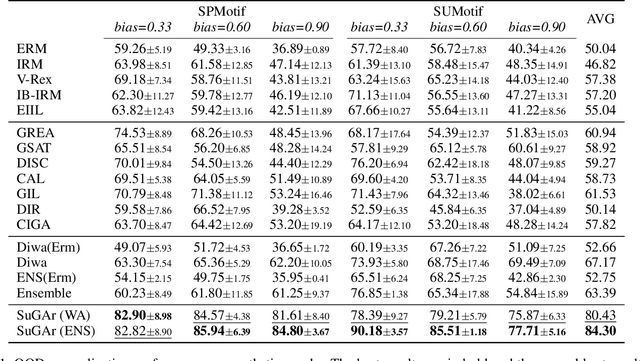

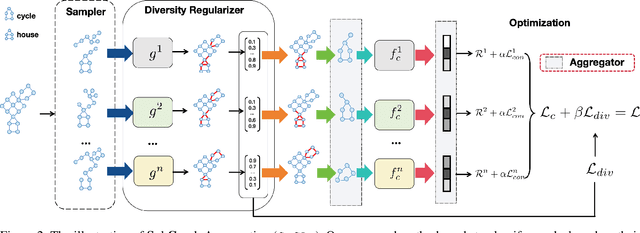

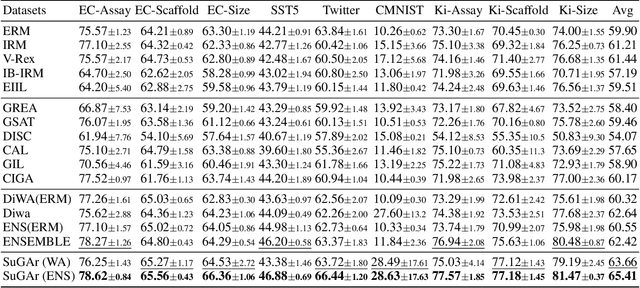

Abstract: Out-of-distribution (OOD) generalization in Graph Neural Networks (GNNs) has gained significant attention due to its critical importance in graph-based predictions in real-world scenarios. Existing methods primarily focus on extracting a single causal subgraph from the input graph to achieve generalizable predictions. However, relying on a single subgraph can lead to susceptibility to spurious correlations and is insufficient for learning invariant patterns behind graph data. Moreover, in many real-world applications, such as molecular property prediction, multiple critical subgraphs may influence the target label property. To address these challenges, we propose a novel framework, SubGraph Aggregation (SuGAr), designed to learn a diverse set of subgraphs that are crucial for OOD generalization on graphs. Specifically, SuGAr employs a tailored subgraph sampler and diversity regularizer to extract a diverse set of invariant subgraphs. These invariant subgraphs are then aggregated by averaging their representations, which enriches the subgraph signals and enhances coverage of the underlying causal structures, thereby improving OOD generalization. Extensive experiments on both synthetic and real-world datasets demonstrate that SuGAr outperforms state-of-the-art methods, achieving up to a 24% improvement in OOD generalization on graphs. To the best of our knowledge, this is the first work to study graph OOD generalization by learning multiple invariant subgraphs.
