Shixing Chen

Scalable Temporal Localization of Sensitive Activities in Movies and TV Episodes

Jun 16, 2022

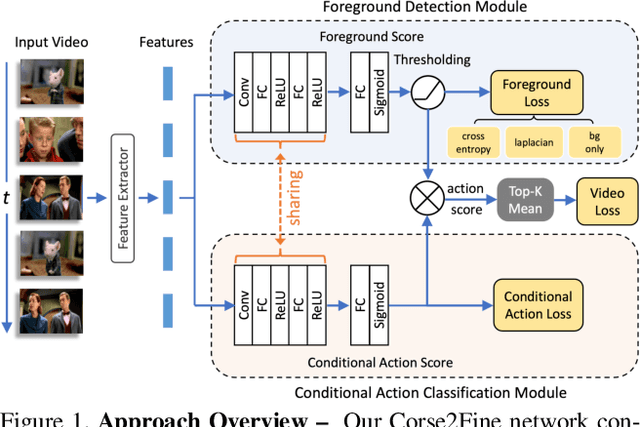

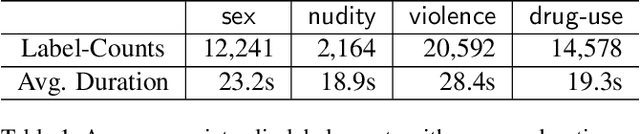

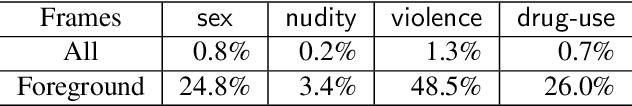

Abstract: To help customers make better-informed viewing choices, video-streaming services try to moderate their content and provide more visibility into which portions of their movies and TV episodes contain age-appropriate material (e.g., nudity, sex, violence, or drug-use). Supervised models to localize these sensitive activities require large amounts of clip-level labeled data, which is hard to obtain, while weakly-supervised models for this task usually do not offer competitive accuracy. To address this challenge, we propose a novel Coarse2Fine network designed to make use of readily obtainable video-level weak labels in conjunction with sparse clip-level labels of age-appropriate activities. Our model aggregates frame-level predictions to make video-level classifications and is therefore able to leverage sparse clip-level labels along with video-level labels. Furthermore, by performing frame-level predictions in a hierarchical manner, our approach is able to overcome the label-imbalance problem caused by the rarity of age-appropriate content. We present comparative results of our approach using 41,234 movies and TV episodes (~3 years of video-content) from 521 sub-genres and 250 countries, making it by far the largest-scale empirical analysis of age-appropriate activity localization in long-form videos ever published. Our approach offers 107.2% relative mAP improvement (from 5.5% to 11.4%) over existing state-of-the-art activity-localization approaches.
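The idea of aggregating frame-level predictions into a video-level classification can be illustrated with a minimal sketch. The abstract does not specify the aggregation rule, so the top-k mean pooling, the threshold, and the names `video_score` and `localize` below are assumptions made for illustration, not the Coarse2Fine network itself.

```python
def video_score(frame_probs, k=3):
    """Pool frame-level probabilities into one video-level score.

    Assumption: mean of the k highest frame scores. The paper's actual
    aggregation rule is not stated in the abstract.
    """
    if not frame_probs:
        raise ValueError("need at least one frame-level prediction")
    top = sorted(frame_probs, reverse=True)[:k]
    return sum(top) / len(top)


def localize(frame_probs, threshold=0.5):
    """Return (start, end) frame-index ranges, end exclusive, where
    consecutive frame scores are at or above the threshold."""
    segments, start = [], None
    for i, p in enumerate(frame_probs):
        if p >= threshold and start is None:
            start = i                      # a segment opens here
        elif p < threshold and start is not None:
            segments.append((start, i))    # the segment closes before frame i
            start = None
    if start is not None:                  # segment runs to the end of the video
        segments.append((start, len(frame_probs)))
    return segments


# Hypothetical frame-level scores for a six-frame video.
probs = [0.1, 0.05, 0.9, 0.8, 0.2, 0.7]
print(video_score(probs, k=3))
print(localize(probs))
```

Because the video-level score is computed from the frame-level scores, a video-level label can supervise the frame-level predictor even when no clip-level labels exist for that video, which is the property the abstract relies on.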

Robust Cross-Modal Representation Learning with Progressive Self-Distillation

Apr 10, 2022

Abstract: The learning objective of CLIP's vision-language approach does not effectively account for the noisy many-to-many correspondences found in web-harvested image captioning datasets, which contributes to its compute and data inefficiency. To address this challenge, we introduce a novel training framework based on cross-modal contrastive learning that uses progressive self-distillation and soft image-text alignments to more efficiently learn robust representations from noisy data. Our model distills its own knowledge to dynamically generate soft-alignment targets for a subset of images and captions in every minibatch, which are then used to update its parameters. Extensive evaluation across 14 benchmark datasets shows that our method consistently outperforms its CLIP counterpart in multiple settings, including: (a) zero-shot classification, (b) linear probe transfer, and (c) image-text retrieval, without incurring added computational cost. Analysis using an ImageNet-based robustness test-bed reveals that our method offers better effective robustness to natural distribution shifts compared to both ImageNet-trained models and CLIP itself. Lastly, pretraining with datasets spanning two orders of magnitude in size shows that our improvements over CLIP tend to scale with the number of training examples.
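A rough sketch of how soft-alignment targets could replace the one-hot targets of a standard contrastive loss is given below. It is a simplified illustration under stated assumptions: the blending weight `alpha`, the function names, and the use of a single image-to-text direction are all hypothetical choices, and the abstract does not give the exact formulation.

```python
import math


def softmax(row, temperature=1.0):
    """Numerically stable softmax over one row of similarity scores."""
    m = max(row)
    exps = [math.exp((x - m) / temperature) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def soft_targets(teacher_sim, alpha):
    """Blend one-hot alignments with the model's own predicted alignments.

    teacher_sim[i][j] is the similarity of image i and caption j, computed
    by the model acting as its own teacher. alpha = 0 recovers the hard
    one-hot targets; a larger alpha trusts the model's predictions more.
    Assumption: alpha would be increased as training progresses.
    """
    n = len(teacher_sim)
    targets = []
    for i, row in enumerate(teacher_sim):
        probs = softmax(row)
        targets.append([
            (1 - alpha) * (1.0 if i == j else 0.0) + alpha * probs[j]
            for j in range(n)
        ])
    return targets


def soft_cross_entropy(student_sim, targets):
    """Mean cross-entropy between soft targets and student predictions."""
    loss = 0.0
    for row, target in zip(student_sim, targets):
        probs = softmax(row)
        loss -= sum(t * math.log(p) for t, p in zip(target, probs))
    return loss / len(student_sim)


# Hypothetical 3x3 image-caption similarity matrix for one minibatch.
sim = [[4.0, 3.5, 0.1],
       [0.2, 5.0, 0.3],
       [0.1, 0.4, 4.5]]
targets = soft_targets(sim, alpha=0.3)
print(soft_cross_entropy(sim, targets))
```

In this toy matrix the first image is nearly as similar to the second caption as to its own, so the soft target assigns that pair some probability mass instead of treating it as a pure negative.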

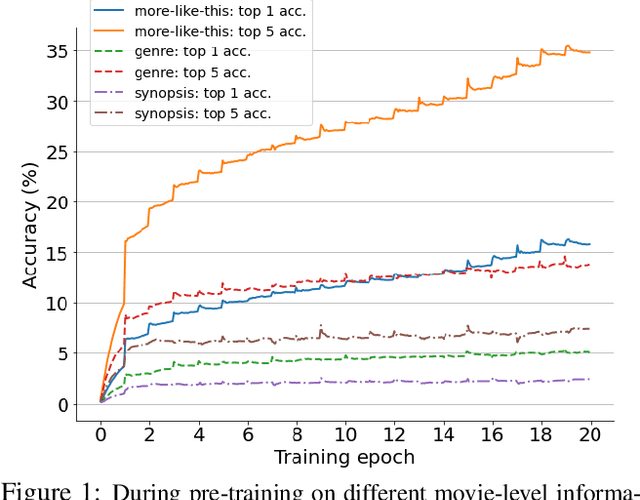

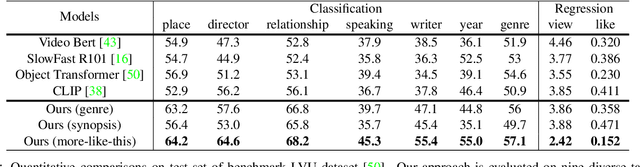

Movies2Scenes: Learning Scene Representations Using Movie Similarities

Mar 12, 2022

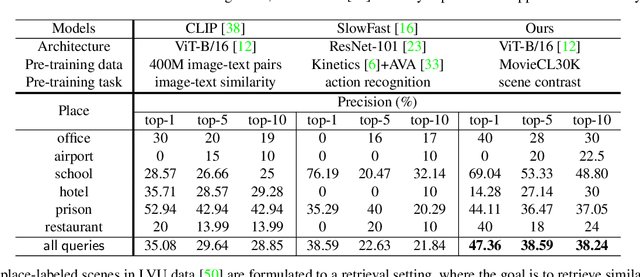

Abstract: Labeling movie-scenes is a time-consuming process, which makes applying end-to-end supervised methods for scene-understanding a challenging problem. Moreover, directly using image-based visual representations for scene-understanding tasks does not prove to be effective given the large gap between the two domains. To address these challenges, we propose a novel contrastive learning approach that uses commonly available movie-level information (e.g., co-watch, genre, synopsis) to learn a general-purpose scene-level representation. Our learned representation comfortably outperforms existing state-of-the-art approaches on eleven downstream tasks evaluated using multiple benchmark datasets. To further demonstrate the generalizability of our learned representation, we present its comparative results on a set of video-moderation tasks evaluated using a newly collected large-scale internal movie dataset.

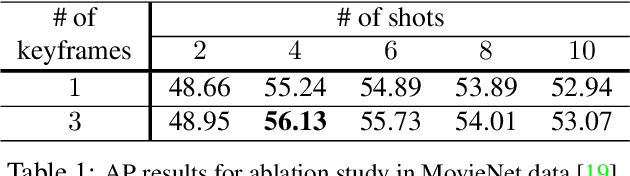

Shot Contrastive Self-Supervised Learning for Scene Boundary Detection

Apr 28, 2021

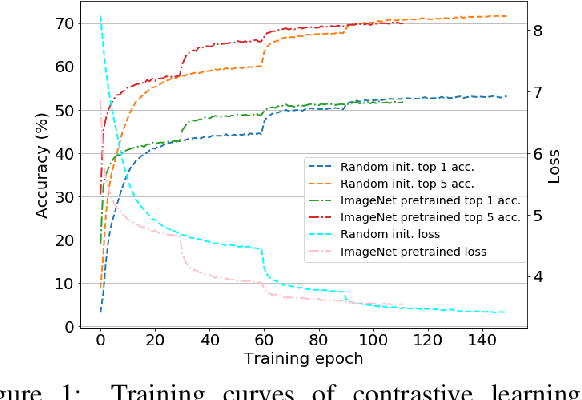

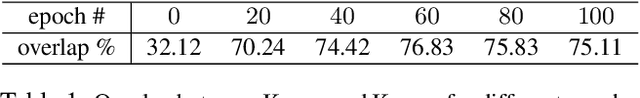

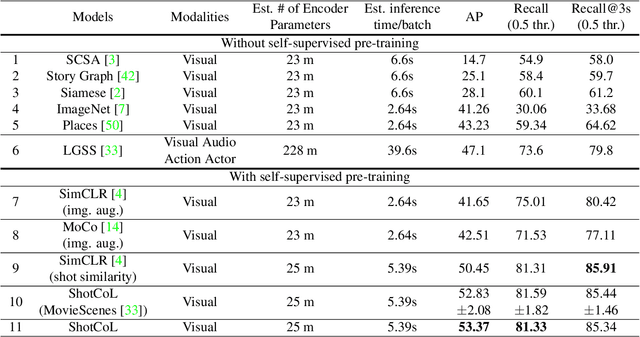

Abstract: Scenes play a crucial role in breaking the storyline of movies and TV episodes into semantically cohesive parts. However, given their complex temporal structure, finding scene boundaries can be a challenging task requiring large amounts of labeled training data. To address this challenge, we present a self-supervised shot contrastive learning approach (ShotCoL) to learn a shot representation that maximizes the similarity between nearby shots compared to randomly selected shots. We show how to apply our learned shot representation for the task of scene boundary detection to offer state-of-the-art performance on the MovieNet dataset while requiring only ~25% of the training labels, using 9x fewer model parameters and offering 7x faster runtime. To assess the effectiveness of ShotCoL on novel applications of scene boundary detection, we take on the problem of finding timestamps in movies and TV episodes where video-ads can be inserted while offering a minimally disruptive viewing experience. To this end, we collected a new dataset called AdCuepoints with 3,975 movies and TV episodes, 2.2 million shots and 19,119 minimally disruptive ad cue-point labels. We present a thorough empirical analysis on this dataset demonstrating the effectiveness of ShotCoL for ad cue-point detection.
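The contrast between nearby shots and randomly selected shots can be sketched with a standard InfoNCE-style loss. The code below is an illustration under assumptions, not the ShotCoL implementation: the positive is taken to be the most similar shot within a small temporal window, and the window size, temperature, and function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two shot feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def pick_positive(shots, i, window=2):
    """Index of the shot most similar to shot i among its temporal
    neighbors (assumed selection rule; requires at least two shots)."""
    lo, hi = max(0, i - window), min(len(shots), i + window + 1)
    candidates = [j for j in range(lo, hi) if j != i]
    return max(candidates, key=lambda j: cosine(shots[i], shots[j]))


def info_nce(query, positive, negatives, temperature=0.1):
    """InfoNCE loss: low when the query is closer to the positive
    than to the (randomly drawn) negatives."""
    logits = [cosine(query, positive) / temperature]
    logits += [cosine(query, neg) / temperature for neg in negatives]
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denominator - logits[0]


# Hypothetical 2-D shot features: two shots from one scene, two from another.
shots = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
pos = pick_positive(shots, 1, window=1)
print(pos, info_nce(shots[1], shots[pos], [shots[3]]))
```

Minimizing this loss pulls each shot toward its neighbor in the same scene and pushes it away from unrelated shots, which is what makes the learned features useful for detecting scene boundaries afterwards.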
