Shihong Wang

Annotation-Free Open-Vocabulary Segmentation for Remote-Sensing Images

Aug 25, 2025

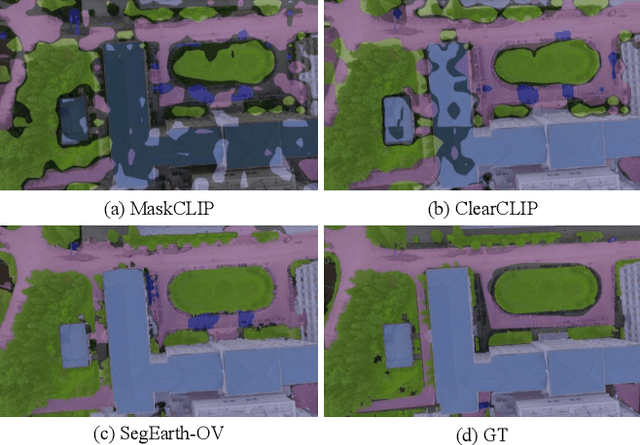

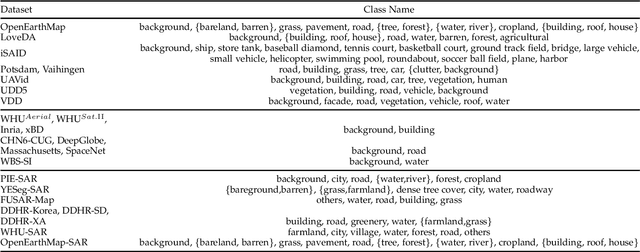

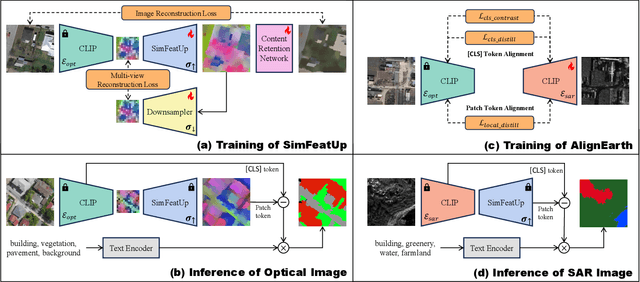

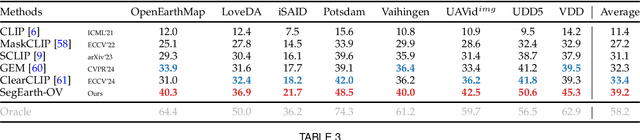

Abstract: Semantic segmentation of remote sensing (RS) images is pivotal for comprehensive Earth observation, but the demand for interpreting new object categories, coupled with the high expense of manual annotation, poses significant challenges. Although open-vocabulary semantic segmentation (OVSS) offers a promising solution, existing frameworks designed for natural images are insufficient for the unique complexities of RS data. They struggle with vast scale variations and fine-grained details, and their adaptation often relies on extensive, costly annotations. To address this critical gap, this paper introduces SegEarth-OV, the first framework for annotation-free open-vocabulary segmentation of RS images. Specifically, we propose SimFeatUp, a universal upsampler that robustly restores high-resolution spatial details from coarse features, correcting distorted target shapes without any task-specific post-training. We also present a simple yet effective Global Bias Alleviation operation that subtracts the inherent global context from patch features, significantly enhancing local semantic fidelity. These components empower SegEarth-OV to effectively harness the rich semantics of pre-trained VLMs, making OVSS possible in optical RS contexts. Furthermore, to extend the framework's universality to other challenging RS modalities such as SAR images, where large-scale VLMs are unavailable and expensive to create, we introduce AlignEarth, a distillation-based strategy that efficiently transfers semantic knowledge from an optical VLM encoder to an SAR encoder, bypassing the need to build SAR foundation models from scratch and enabling universal OVSS across diverse sensor types. Extensive experiments on both optical and SAR datasets validate that SegEarth-OV achieves dramatic improvements over state-of-the-art methods, establishing a robust foundation for annotation-free and open-world Earth observation.
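The Global Bias Alleviation step described in the abstract, subtracting a global context vector from each patch feature, can be illustrated with a minimal sketch. The function name `alleviate_global_bias`, the weight `lam`, and the choice of where the global vector comes from (for example a [CLS]-style token) are assumptions made here for illustration; the abstract does not specify them.

```python
def alleviate_global_bias(patch_feats, global_feat, lam=0.3):
    """Subtract a scaled global feature from every patch feature.

    patch_feats: list of patch feature vectors (lists of floats).
    global_feat: one global context vector of the same length
                 (assumed here to be something like a [CLS] token).
    lam:         hypothetical weight on the global term.
    """
    return [
        [p - lam * g for p, g in zip(patch, global_feat)]
        for patch in patch_feats
    ]


patches = [[1.0, 2.0], [3.0, 4.0]]
global_vec = [1.0, 2.0]
debiased = alleviate_global_bias(patches, global_vec, lam=0.5)
```

With `lam=0`, the patch features are returned unchanged, so the weight controls how strongly the shared context is removed.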

Class Similarity Transition: Decoupling Class Similarities and Imbalance from Generalized Few-shot Segmentation

Apr 08, 2024

Abstract: In Generalized Few-shot Segmentation (GFSS), a model is trained with a large corpus of base class samples and then adapted on limited samples of novel classes. This paper focuses on the relevance between base and novel classes, and improves GFSS in two aspects: 1) mining the similarity between base and novel classes to promote the learning of novel classes, and 2) mitigating the class imbalance issue caused by the volume difference between the support set and the training set. Specifically, we first propose a similarity transition matrix to guide the learning of novel classes with base class knowledge. Then, we apply the Label-Distribution-Aware Margin (LDAM) loss and Transductive Inference to the GFSS task to address the problem of class imbalance as well as overfitting to the support set. In addition, by extending the probability transition matrix, the proposed method can mitigate the catastrophic forgetting of base classes when learning novel classes. With a simple training phase, our proposed method can be applied to any segmentation network trained on base classes. We validated our methods on the adapted version of OpenEarthMap. Compared to existing GFSS baselines, our method outperforms all of them by 3% to 7% and ranked second in the OpenEarthMap Land Cover Mapping Few-Shot Challenge at the time of writing. Code: https://github.com/earth-insights/ClassTrans
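For readers unfamiliar with the LDAM loss mentioned above, the following is a minimal sketch of its standard form: each class gets a margin proportional to n^(-1/4), where n is its sample count, and that margin is subtracted from the target logit before cross-entropy. This is not the code from the linked repository; the function names and the `max_margin` default are illustrative assumptions.

```python
import math


def ldam_margins(class_counts, max_margin=0.5):
    """Per-class margins proportional to n^(-1/4), rescaled so the
    rarest class receives max_margin (an assumed default)."""
    raw = [1.0 / (n ** 0.25) for n in class_counts]
    scale = max_margin / max(raw)
    return [r * scale for r in raw]


def ldam_loss(logits, target, margins):
    """Cross-entropy after subtracting the target class's margin
    from its logit (single-sample version)."""
    shifted = list(logits)
    shifted[target] -= margins[target]
    m = max(shifted)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in shifted))
    return log_sum - shifted[target]


margins = ldam_margins([10000, 16])       # frequent class, rare class
loss = ldam_loss([2.0, 1.0], 1, margins)  # sample from the rare class
```

Because rare classes get larger margins, the loss demands a wider decision margin for novel classes that have only a few support samples.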

E-LMC: Extended Linear Model of Coregionalization for Predictions of Spatial Fields

Mar 01, 2022

Abstract: Physical simulations based on partial differential equations typically generate spatial field results, which are used to calculate specific properties of a system for engineering design and optimization. Due to the intensive computational burden of the simulations, a surrogate model mapping the low-dimensional inputs to the spatial fields is commonly built from a relatively small dataset. To resolve the challenge of predicting the whole spatial field, the popular linear model of coregionalization (LMC) can disentangle complicated correlations within the high-dimensional spatial field outputs and deliver accurate predictions. However, LMC fails if the spatial field cannot be well approximated by a linear combination of basis functions with latent processes. In this paper, we extend LMC by introducing an invertible neural network to linearize the highly complex and nonlinear spatial fields such that the LMC can easily generalize to nonlinear problems while preserving the traceability and scalability. Several real-world applications demonstrate that E-LMC can exploit spatial correlations effectively, showing a maximum improvement of about 40% over the original LMC and outperforming the other state-of-the-art spatial field models.
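The structure described in the abstract can be sketched roughly as follows: LMC writes the field as a linear combination of basis vectors weighted by latent process values, and the extension wraps that combination in an invertible map. In this toy sketch the latent values are simply given (in practice they would come from fitted latent processes), and an elementwise `sinh`/`asinh` pair stands in for the invertible neural network. Both choices, and all function names, are assumptions for illustration only.

```python
import math


def lmc_combine(basis, latent):
    """Linear combination: field[d] = sum_r basis[d][r] * latent[r]."""
    return [sum(b * z for b, z in zip(row, latent)) for row in basis]


def to_linear_space(field):
    """Toy invertible map (stand-in for the invertible network)."""
    return [math.asinh(v) for v in field]


def from_linear_space(field):
    """Exact inverse of to_linear_space."""
    return [math.sinh(v) for v in field]


def elmc_predict(basis, latent):
    """Combine linearly in the transformed space, then map back."""
    return from_linear_space(lmc_combine(basis, latent))


basis = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 field points, 2 latent processes
latent = [2.0, 3.0]
linear_field = lmc_combine(basis, latent)
nonlinear_field = elmc_predict(basis, latent)
```

Because the map is invertible, training targets can be moved into the linear space with `to_linear_space` and predictions moved back without loss of information.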
