Shashank Verma

Enhanced Question-Answering for Skill-based learning using Knowledge-based AI and Generative AI

Apr 10, 2025

Abstract: Supporting learners' understanding of taught skills in online settings is a longstanding challenge. While exercises and chat-based agents can evaluate understanding in limited contexts, this challenge is magnified when learners seek explanations that delve into procedural knowledge (how things are done) and reasoning (why things happen). We hypothesize that an intelligent agent's ability to understand and explain learners' questions about skills can be significantly enhanced using the TMK (Task-Method-Knowledge) model, a Knowledge-based AI framework. We introduce Ivy, an intelligent agent that leverages an LLM and iterative refinement techniques to generate explanations that embody teleological, causal, and compositional principles. Our initial evaluation demonstrates that this approach goes beyond the typical shallow responses produced by an agent with access to unstructured text, thereby substantially improving the depth and relevance of feedback. This could help ensure that learners develop a comprehensive understanding of the skills crucial for effective problem-solving in online environments.
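
As a minimal sketch of how a TMK model can support "how" and "why" questions, the Python below encodes tasks (goals), methods (ordered subtasks), and knowledge (concept definitions) as plain dataclasses. All class and function names and the merge-sort example are hypothetical illustrations, not Ivy's implementation, and the LLM-based generation and iterative refinement steps are omitted.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Task:
    """A task: what is to be achieved (its goal). Hypothetical TMK encoding."""
    name: str
    goal: str
    method: Optional["Method"] = None
    # Back-link to the enclosing task; excluded from repr/eq to avoid cycles.
    parent: Optional["Task"] = field(default=None, repr=False, compare=False)


@dataclass
class Method:
    """A method: how a task is achieved, as an ordered list of subtasks."""
    name: str
    subtasks: List[Task] = field(default_factory=list)


@dataclass
class Knowledge:
    """Domain concepts that tasks and methods refer to."""
    concepts: Dict[str, str] = field(default_factory=dict)


def attach(task: Task, method: Method) -> None:
    """Link a method to its task and record each subtask's parent (composition)."""
    task.method = method
    for sub in method.subtasks:
        sub.parent = task


def explain_how(task: Task) -> str:
    """Compositional answer: the subtasks of the method that achieves the task."""
    if task.method is None:
        return f"'{task.name}' is a primitive step."
    steps = ", then ".join(s.name for s in task.method.subtasks)
    return f"To {task.name}, {steps}."


def explain_why(task: Task) -> str:
    """Teleological answer: a subtask exists to serve its parent task's goal."""
    if task.parent is None:
        return f"'{task.name}' is a top-level task; its goal is that {task.goal}."
    return (f"'{task.name}' is done because the parent task "
            f"'{task.parent.name}' requires that {task.parent.goal}.")


def explain_what(knowledge: Knowledge, term: str) -> str:
    """Look up a concept; unknown terms are reported rather than guessed."""
    return knowledge.concepts.get(term, f"No definition of '{term}' in the model.")


# Hypothetical skill: sorting a list with merge sort.
split = Task("split the list", "the list is divided into two halves")
sort_halves = Task("sort each half", "both halves are sorted")
merge = Task("merge the halves", "the halves are combined in order")
merge_sort = Task("sort a list", "the list is in ascending order")
attach(merge_sort, Method("merge sort", [split, sort_halves, merge]))
kb = Knowledge({"merge": "Combine two sorted lists into one sorted list."})

print(explain_how(merge_sort))
# To sort a list, split the list, then sort each half, then merge the halves.
print(explain_why(merge))
print(explain_what(kb, "merge"))
```

The design choice is that goals live on tasks and subtask order lives on methods, so a "why" question is answered by walking up the hierarchy and a "how" question by walking down it.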

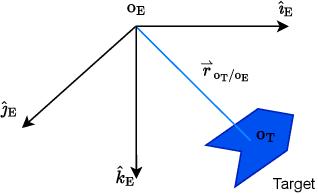

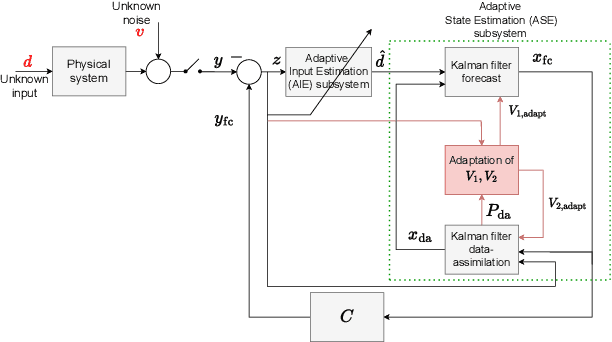

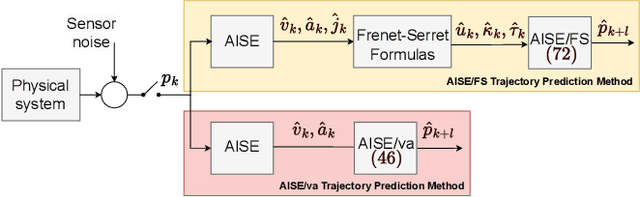

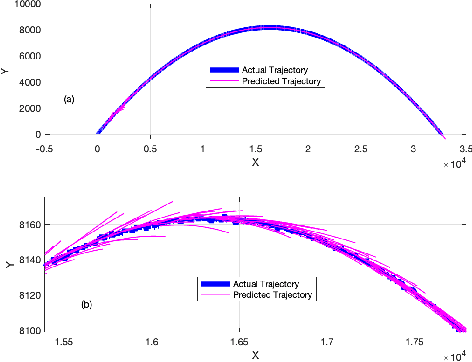

Frenet-Serret-Based Trajectory Prediction

Jan 08, 2025

Abstract: Trajectory prediction is a crucial element of guidance, navigation, and control systems. This paper presents two novel trajectory-prediction methods based on real-time position measurements and adaptive input and state estimation (AISE). The first method, called AISE/va, uses position measurements to estimate the target velocity and acceleration. The second method, called AISE/FS, models the target trajectory as a 3D curve using the Frenet-Serret formulas, which require estimates of velocity, acceleration, and jerk. To estimate velocity, acceleration, and jerk in real time, AISE computes the first, second, and third derivatives of the position measurements. AISE does not rely on assumptions about the target maneuver, measurement noise, or disturbances. For trajectory prediction, both methods use measurements of the target position and estimates of its derivatives to extrapolate from the current position. Numerical comparisons of AISE/va and AISE/FS with the $\alpha$-$\beta$-$\gamma$ filter show that AISE/FS provides more accurate trajectory prediction than AISE/va and traditional methods, especially for complex target maneuvers.
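
The Frenet-Serret quantities that AISE/FS relies on can be computed from velocity, acceleration, and jerk with standard differential-geometry formulas. The sketch below uses exact derivatives of a known helix in place of AISE estimates, and the function name `frenet_serret` is hypothetical; the formulas are undefined for instantaneously straight motion, where $v \times a = 0$.

```python
import numpy as np


def frenet_serret(v, a, j):
    """Return the Frenet-Serret frame (T, N, B), curvature, and torsion.

    v, a, j: velocity, acceleration, and jerk (3-vectors) at one instant.
    Requires v x a != 0, i.e., the motion is not instantaneously straight.
    """
    v, a, j = (np.asarray(x, dtype=float) for x in (v, a, j))
    speed = np.linalg.norm(v)
    vxa = np.cross(v, a)
    vxa_norm = np.linalg.norm(vxa)
    T = v / speed                        # unit tangent
    B = vxa / vxa_norm                   # unit binormal
    N = np.cross(B, T)                   # unit principal normal
    kappa = vxa_norm / speed**3          # curvature
    tau = np.dot(vxa, j) / vxa_norm**2   # torsion
    return T, N, B, kappa, tau


# Helix r(t) = (2 cos t, 2 sin t, t) at t = 0: exact curvature 2/5, torsion 1/5.
T, N, B, kappa, tau = frenet_serret(v=[0, 2, 1], a=[-2, 0, 0], j=[0, -2, 0])
print(kappa, tau)  # approximately 0.4 and 0.2
```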

Target Tracking Using the Invariant Extended Kalman Filter with Numerical Differentiation for Estimating Curvature and Torsion

Jan 08, 2025

Abstract: The goal of target tracking is to estimate target position, velocity, and acceleration in real time using position data. This paper introduces a novel target-tracking technique that uses adaptive input and state estimation (AISE) for real-time numerical differentiation to estimate velocity, acceleration, and jerk from position data. These estimates are used to model the target motion within the Frenet-Serret (FS) frame. By representing the model in SE(3), the position and velocity are estimated using the invariant extended Kalman filter (IEKF). The proposed method, called FS-IEKF-AISE, is illustrated by numerical examples and compared to prior techniques.
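
To illustrate what representing the Frenet-Serret model in SE(3) can look like, the sketch below stores the frame (T, N, B) and position as a 4×4 pose and propagates it with the matrix exponential, assuming speed, curvature, and torsion stay constant over the step. It covers only a kinematic model, not the IEKF or AISE; the helper names are hypothetical, and `expm` is a small scaling-and-squaring routine written here to avoid a SciPy dependency.

```python
import numpy as np


def fs_twist(speed, kappa, tau):
    """4x4 se(3) element for Frenet-Serret motion: dg/dt = g @ fs_twist(...)."""
    xi = np.zeros((4, 4))
    xi[:3, :3] = speed * np.array([[0.0, -kappa, 0.0],
                                   [kappa, 0.0, -tau],
                                   [0.0, tau, 0.0]])
    xi[0, 3] = speed  # position moves along the tangent T at the given speed
    return xi


def expm(A, terms=20):
    """Matrix exponential by scaling and squaring with a truncated Taylor series."""
    norm = np.linalg.norm(A, ord=1)
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    A = A / 2.0**s
    result = np.eye(A.shape[0])
    term = np.eye(A.shape[0])
    for k in range(1, terms):
        term = term @ A / k
        result = result + term
    for _ in range(s):
        result = result @ result
    return result


def fs_pose(T, N, B, p):
    """Pack the frame (columns T, N, B) and position p into an SE(3) matrix."""
    g = np.eye(4)
    g[:3, :3] = np.column_stack([T, N, B])
    g[:3, 3] = p
    return g


def propagate(g, speed, kappa, tau, dt):
    """Advance the pose by dt with constant speed, curvature, and torsion."""
    return g @ expm(fs_twist(speed, kappa, tau) * dt)


# Helix r(t) = (2 cos t, 2 sin t, t): speed sqrt(5), curvature 0.4, torsion 0.2.
s5 = np.sqrt(5.0)
g0 = fs_pose(T=np.array([0.0, 2.0, 1.0]) / s5,
             N=np.array([-1.0, 0.0, 0.0]),
             B=np.array([0.0, -1.0, 2.0]) / s5,
             p=np.array([2.0, 0.0, 0.0]))
g1 = propagate(g0, speed=s5, kappa=0.4, tau=0.2, dt=1.0)
print(g1[:3, 3])  # matches (2 cos 1, 2 sin 1, 1) up to rounding
```

Propagating on SE(3) keeps the rotation block orthonormal by construction, without renormalizing T, N, and B after each step.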

Machine Learning for ALSFRS-R Score Prediction: Making Sense of the Sensor Data

Jul 10, 2024

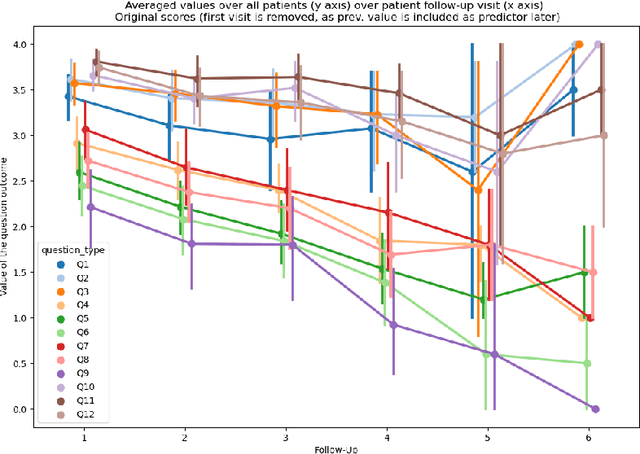

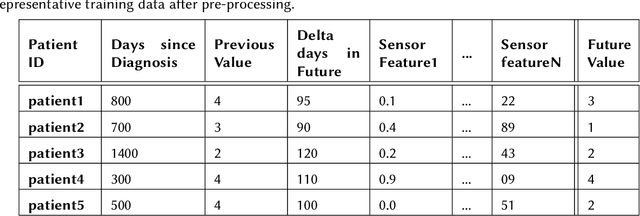

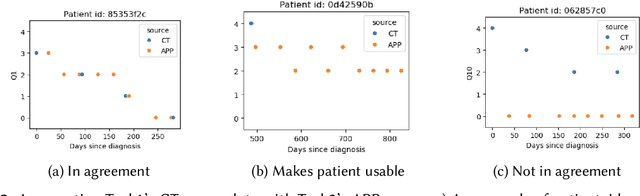

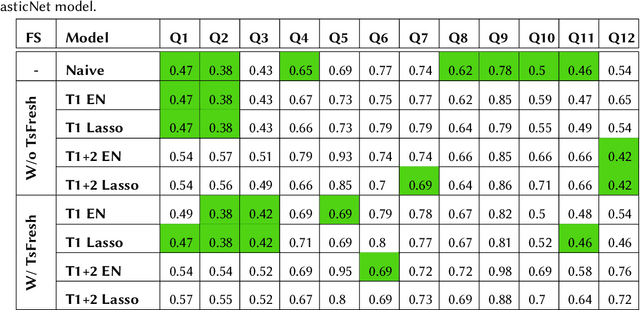

Abstract: Amyotrophic Lateral Sclerosis (ALS) is a rapidly progressive neurodegenerative disease with limited options for medical intervention and therapy. Its onset patterns and progression trajectories vary widely, which makes early detection of functional decline critical for tailoring care strategies and timing therapeutic interventions. The present investigation, conducted as part of the iDPP@CLEF 2024 challenge, uses sensor-derived data collected through an app to build machine learning models that forecast the progression of the ALS Functional Rating Scale-Revised (ALSFRS-R) score, using the dataset provided by the organizers. Multiple predictive models were evaluated to determine their efficacy in handling the ALS sensor data. The temporal dimension of the sensor data was compressed and aggregated using statistical methods, making the information more interpretable and more usable for predictive modeling. The best-performing models were a naive baseline and ElasticNet regression. The naive model achieved a Mean Absolute Error (MAE) of 0.20 and a Root Mean Square Error (RMSE) of 0.49, slightly outperforming the ElasticNet model, which recorded an MAE of 0.22 and an RMSE of 0.50. Our comparative analysis suggests that while the naive approach yielded marginally better predictive accuracy, the ElasticNet model provides a robust framework for understanding feature contributions.
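
As an illustration of compressing a sensor time series into fixed-length statistical features and scoring forecasts with MAE and RMSE, consider the sketch below. The particular statistics (mean, standard deviation, minimum, maximum, linear slope), the last-value naive baseline, and the toy numbers are assumptions for illustration; the abstract does not specify the exact features or how the naive model is defined.

```python
import numpy as np


def summarize(series):
    """Compress one sensor time series into fixed-length summary features."""
    x = np.asarray(series, dtype=float)
    t = np.arange(len(x))
    slope = np.polyfit(t, x, 1)[0] if len(x) > 1 else 0.0  # linear trend per sample
    return np.array([x.mean(), x.std(), x.min(), x.max(), slope])


def naive_forecast(last_scores):
    """Hypothetical naive baseline: each score stays at its last observed value."""
    return np.asarray(last_scores, dtype=float)


def mae(y_true, y_pred):
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))


def rmse(y_true, y_pred):
    return float(np.sqrt(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2)))


# Toy example (made-up values, not challenge data).
features = summarize([1.0, 2.0, 3.0, 4.0])  # mean, std, min, max, slope
y_true = [3, 2, 4]
y_pred = naive_forecast([3, 3, 4])
print(features)
print(mae(y_true, y_pred), rmse(y_true, y_pred))
```

Per-patient feature vectors of this kind could then be stacked into a design matrix for a regularized linear model such as ElasticNet (for example, `sklearn.linear_model.ElasticNet`), whose coefficients indicate which aggregated sensor features contribute to the prediction.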

Adaptive Real-Time Numerical Differentiation with Variable-Rate Forgetting and Exponential Resetting

Sep 28, 2023

Abstract: Digital PID control requires a differencing operation to implement the D gain. To suppress the effects of noisy data, the traditional approach is to filter the data, with the filter's frequency response adjusted manually based on the characteristics of the sensor noise. The present paper considers the case where the characteristics of the sensor noise change over time in an unknown way. This problem is addressed by applying adaptive real-time numerical differentiation based on adaptive input and state estimation (AISE). The contribution of this paper is to extend AISE to include variable-rate forgetting with exponential resetting, which allows AISE to respond more rapidly to changing noise characteristics while enforcing the boundedness of the covariance matrix used in recursive least squares.
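
The two mechanisms can be sketched on a plain recursive least squares (RLS) estimator written in information form, with $R = P^{-1}$. This is an assumption-laden sketch rather than the paper's AISE extension: the exponential-resetting update $R_{k+1} = \lambda_k R_k + (1-\lambda_k) R_\infty + \phi_k \phi_k^\mathrm{T}$ is an assumed form, and the error-driven rule for choosing $\lambda_k$ (`error_driven_forgetting`) is hypothetical. What it illustrates is the boundedness argument: if $R_0 \succeq cI$ and $R_\infty \succeq cI$, then every $R_k \succeq cI$ for $\lambda_k \in [0, 1]$, so the largest eigenvalue of $P_k$ never exceeds $1/c$ even while forgetting.

```python
import numpy as np


def rls_exponential_resetting(phis, ys, forgetting, R0, R_inf):
    """RLS in information form with variable-rate forgetting and exponential resetting.

    Assumed update, with information matrix R = P^{-1}:
        R_{k+1}     = lam_k * R_k + (1 - lam_k) * R_inf + phi_k phi_k^T
        theta_{k+1} = theta_k + R_{k+1}^{-1} phi_k (y_k - phi_k^T theta_k)
    """
    theta = np.zeros(R0.shape[0])
    R = np.array(R0, dtype=float)
    max_cov = []  # largest eigenvalue of P_k = R_k^{-1} at each step
    for phi, y in zip(phis, ys):
        err = y - phi @ theta                  # a priori prediction error
        lam = forgetting(err)                  # forgetting factor chosen from the data
        R = lam * R + (1.0 - lam) * R_inf + np.outer(phi, phi)
        theta = theta + np.linalg.solve(R, phi * err)
        max_cov.append(1.0 / np.linalg.eigvalsh(R).min())
    return theta, max_cov


def error_driven_forgetting(err, threshold=0.1, lam_fast=0.9, lam_slow=0.99):
    """Hypothetical rule: forget faster while the prediction error is large."""
    return lam_fast if abs(err) > threshold else lam_slow


rng = np.random.default_rng(0)
theta_true = np.array([2.0, -1.0])
phis = rng.standard_normal((2000, 2))
ys = phis @ theta_true                        # noise-free data for a clean check
R0 = R_inf = 0.1 * np.eye(2)
theta, max_cov = rls_exponential_resetting(phis, ys, error_driven_forgetting, R0, R_inf)
print(theta)          # close to [2, -1]
print(max(max_cov))   # stays at or below 1 / 0.1 = 10 (up to rounding)
```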

Kinematics-Based Sensor Fault Detection for Autonomous Vehicles Using Real-Time Numerical Differentiation

Sep 10, 2023

Abstract: Sensor fault detection is essential for ensuring the safe operation of vehicles. This paper introduces a novel approach to detecting and identifying faulty sensors. For ground vehicles confined to the horizontal plane, the technique is based on six kinematics-based error metrics computed in real time from onboard compass, radar, rate-gyro, and accelerometer measurements and their derivatives. Real-time numerical differentiation is performed by applying the adaptive input and state estimation (AIE/ASE) algorithm. Numerical examples are provided to assess the efficacy of the proposed methodology.
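
To make the idea of kinematics-based error metrics concrete, the sketch below computes three consistency residuals for planar motion without sideslip: compass heading rate versus rate gyro, radar-speed derivative versus longitudinal acceleration, and lateral acceleration versus yaw rate times speed. These three residuals, the no-sideslip model, treating radar as a speed measurement, and the use of `np.gradient` in place of AIE/ASE differentiation are all assumptions; the paper's six metrics are not reproduced here.

```python
import numpy as np


def kinematic_residuals(t, heading, yaw_rate, speed, accel_long, accel_lat):
    """Hypothetical planar consistency checks (no-sideslip model).

    heading [rad] from compass, yaw_rate [rad/s] from rate gyro,
    speed [m/s] from radar, accel_long/accel_lat [m/s^2] from accelerometer.
    """
    # Finite differences stand in for real-time AIE/ASE differentiation.
    heading_rate = np.gradient(np.unwrap(heading), t)
    speed_rate = np.gradient(speed, t)
    return {
        "compass_vs_gyro": np.abs(heading_rate - yaw_rate),
        "radar_vs_long_accel": np.abs(speed_rate - accel_long),
        "lat_accel_vs_gyro_speed": np.abs(accel_lat - yaw_rate * speed),
    }


# Simulated turn with increasing speed; the rate gyro gains a 0.05 rad/s bias for t > 5 s.
t = np.linspace(0.0, 10.0, 1001)
speed = 10.0 + 0.5 * t
heading = 0.1 * t
fault = t > 5.0
gyro = 0.1 + 0.05 * fault
res = kinematic_residuals(t, heading, gyro, speed,
                          accel_long=np.full_like(t, 0.5),
                          accel_lat=0.1 * speed)
for name, r in res.items():
    print(name, bool(np.any(r > 1e-3)))
```

In this toy case the gyro fault trips the two residuals that involve the gyro but not the third, which is the kind of pattern that lets a faulty sensor be identified rather than only detected.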

Real-Time Numerical Differentiation of Sampled Data Using Adaptive Input and State Estimation

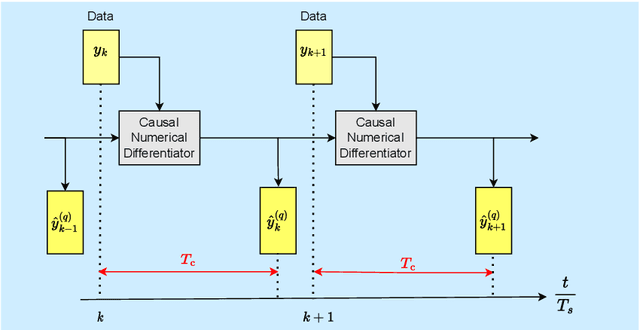

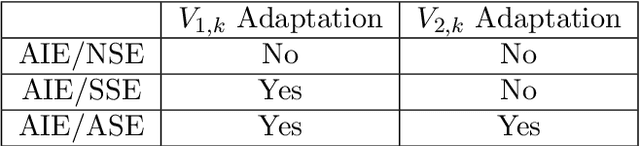

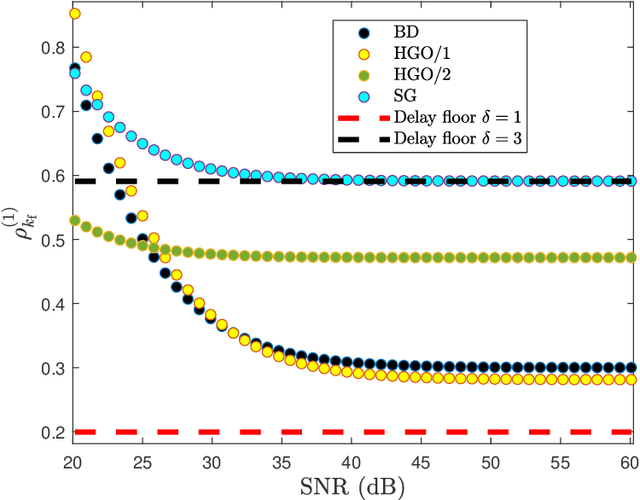

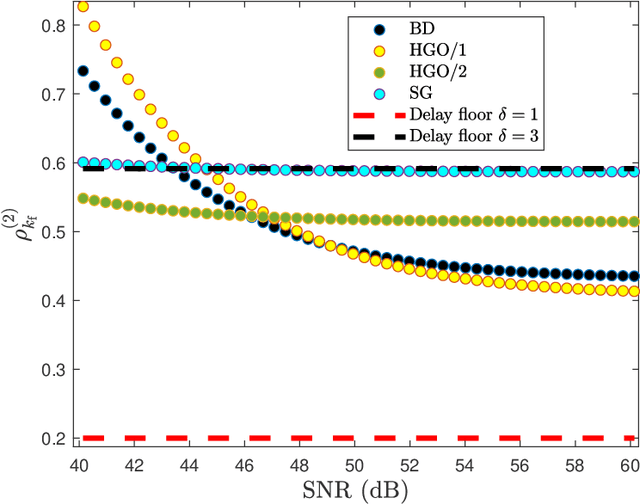

Aug 16, 2023

Abstract: Real-time numerical differentiation plays a crucial role in many digital control algorithms, such as PID control, which requires numerical differentiation to implement derivative action. This paper uses adaptive input and state estimation to address real-time numerical differentiation with minimal prior information about the signal and noise. In adaptive input estimation with adaptive state estimation (AIE/ASE), input estimation is based on retrospective cost input estimation, while state estimation is based on an adaptive Kalman filter in which the input-estimation error covariance and the measurement-noise covariance are updated online. The accuracy of AIE/ASE is compared numerically to that of several conventional numerical differentiation methods. Finally, AIE/ASE is applied to simulated vehicle position data generated with CarSim.
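
To show how state estimation yields a derivative estimate, the sketch below runs a standard Kalman filter with a constant-velocity model on noisy samples of $\sin t$ and reads the velocity state as the derivative. Unlike AIE/ASE, it has no input-estimation component, and its process and measurement noise levels `q` and `r` are fixed by hand; updating such covariances online is what the adaptive method is designed to do. The signal, noise level, and tuning values are illustrative assumptions.

```python
import numpy as np


def kalman_differentiator(y, dt, q, r):
    """Estimate dy/dt from samples y with a fixed-covariance Kalman filter.

    State x = [value, rate]; the rate is driven by piecewise-constant white
    acceleration with variance q, and r is the measurement-noise variance.
    """
    A = np.array([[1.0, dt], [0.0, 1.0]])
    C = np.array([[1.0, 0.0]])
    Q = q * np.array([[dt**4 / 4, dt**3 / 2], [dt**3 / 2, dt**2]])
    x = np.array([y[0], 0.0])
    P = np.eye(2)
    rates = []
    for yk in y:
        x = A @ x                            # predict
        P = A @ P @ A.T + Q
        S = C @ P @ C.T + r                  # innovation variance (1x1)
        K = P @ C.T / S                      # Kalman gain (2x1)
        x = x + (K * (yk - C @ x)).ravel()   # update with the new sample
        P = (np.eye(2) - K @ C) @ P
        rates.append(x[1])
    return np.array(rates)


dt, sigma = 0.01, 0.01
t = np.arange(0.0, 10.0, dt)
rng = np.random.default_rng(1)
y = np.sin(t) + sigma * rng.standard_normal(t.size)

kf_rate = kalman_differentiator(y, dt, q=10.0, r=sigma**2)
bd_rate = np.diff(y) / dt                    # backward difference, for comparison

skip = 300                                   # ignore the filter's start-up transient
kf_rms = np.sqrt(np.mean((kf_rate[skip:] - np.cos(t[skip:])) ** 2))
bd_rms = np.sqrt(np.mean((bd_rate[skip - 1:] - np.cos(t[skip:])) ** 2))
print(kf_rms, bd_rms)
```

With these settings the filter's derivative error should come out far below that of the raw backward difference, whose noise standard deviation is about $\sqrt{2}\,\sigma/\Delta t$.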

Semi Supervised Phrase Localization in a Bidirectional Caption-Image Retrieval Framework

Aug 08, 2019

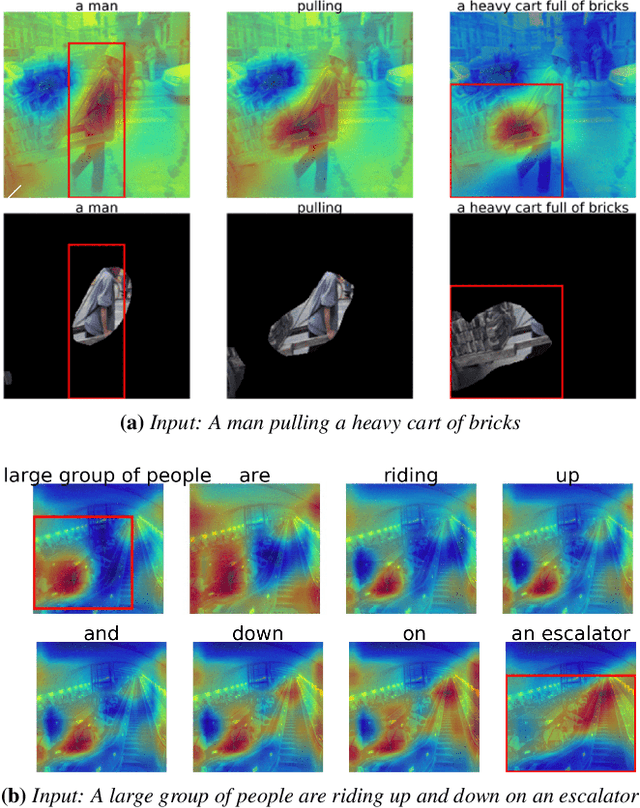

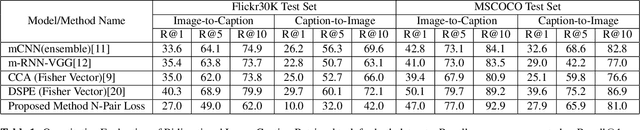

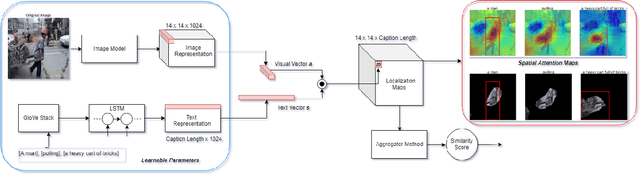

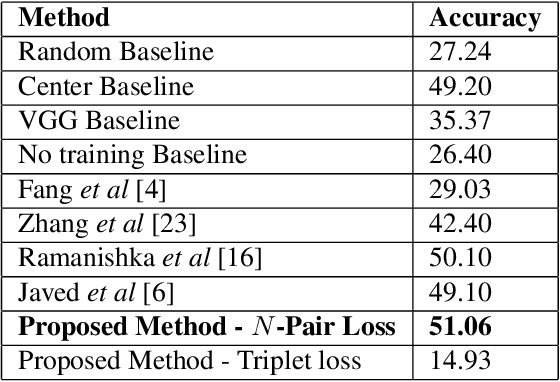

Abstract: We introduce a novel deep neural network architecture that links visual regions to corresponding textual segments, including phrases and words. To accomplish this task, our architecture makes use of the rich semantic information available in a joint embedding space of multi-modal data. From this joint embedding space, we extract the associative localization maps that develop naturally, without explicit supervision for the localization task during training. The joint space is learned using a bidirectional ranking objective that is optimized using an $N$-pair loss formulation. This training mechanism demonstrates that localization information is learned inherently while optimizing a bidirectional retrieval objective. The model's retrieval and localization performance is evaluated on the MSCOCO and Flickr30K Entities datasets. This architecture outperforms the state of the art in the semi-supervised phrase localization setting.
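
A bidirectional $N$-pair objective can be written as softmax cross-entropy over a batch similarity matrix, applied once with images as anchors and once with captions as anchors. The sketch below assumes cosine similarity, no temperature or margin, and a batch in which row $i$ of each embedding matrix forms a matching pair; the paper's network, localization maps, and exact loss weighting are not reproduced.

```python
import numpy as np


def log_softmax(z, axis):
    """Numerically stable log-softmax along one axis."""
    z = z - z.max(axis=axis, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))


def bidirectional_npair_loss(img, txt):
    """img, txt: (N, d) embeddings; row i of each is a matching image-caption pair."""
    img = img / np.linalg.norm(img, axis=1, keepdims=True)
    txt = txt / np.linalg.norm(txt, axis=1, keepdims=True)
    S = img @ txt.T                                   # S[i, j] = cos(image i, caption j)
    i2t = -np.mean(np.diag(log_softmax(S, axis=1)))   # each image should rank its caption first
    t2i = -np.mean(np.diag(log_softmax(S, axis=0)))   # each caption should rank its image first
    return i2t + t2i


aligned = bidirectional_npair_loss(np.eye(4), np.eye(4))
shuffled = bidirectional_npair_loss(np.eye(4), np.eye(4)[[1, 0, 3, 2]])
print(aligned < shuffled)  # True: matched pairs give a lower loss than mismatched ones
```

Each row of the softmax treats the other $N-1$ items in the batch as negatives, which is what makes the $N$-pair formulation cheaper than sampling negatives separately for every anchor.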
