Shashank Sonkar

MalruleLib: Large-Scale Executable Misconception Reasoning with Step Traces for Modeling Student Thinking in Mathematics

Jan 06, 2026

Abstract:Student mistakes in mathematics are often systematic: a learner applies a coherent but wrong procedure and repeats it across contexts. We introduce MalruleLib, a learning-science-grounded framework that translates documented misconceptions into executable procedures, drawing on 67 learning-science and mathematics education sources, and generates step-by-step traces of malrule-consistent student work. We formalize a core student-modeling problem as Malrule Reasoning Accuracy (MRA): infer a misconception from one worked mistake and predict the student's next answer under cross-template rephrasing. Across nine language models (4B-120B), accuracy drops from 66% on direct problem solving to 40% on cross-template misconception prediction. MalruleLib encodes 101 malrules over 498 parameterized problem templates and produces paired dual-path traces for both correct reasoning and malrule-consistent student reasoning. Because malrules are executable and templates are parameterizable, MalruleLib can generate over one million instances, enabling scalable supervision and controlled evaluation. Using MalruleLib, we observe cross-template degradations of 10-21%, while providing student step traces improves prediction by 3-15%. We release MalruleLib as infrastructure for educational AI that models student procedures across contexts, enabling diagnosis and feedback that targets the underlying misconception.
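
To make the idea of an "executable malrule" with paired dual-path traces concrete, here is a minimal Python sketch. It is not MalruleLib's API: the function names, the template string, and the choice of misconception (adding numerators and denominators separately when adding fractions) are all assumptions made for illustration.

```python
# Hypothetical illustration only; none of these names come from MalruleLib.

def render_template(a, b, c, d):
    """A parameterized problem template (assumed wording)."""
    return f"What is {a}/{b} + {c}/{d}?"

def correct_add_fractions(a, b, c, d):
    """Correct path: rewrite over a common denominator, then add numerators."""
    den = b * d
    num = a * d + c * b
    steps = [
        f"Rewrite over common denominator {den}: {a * d}/{den} + {c * b}/{den}",
        f"Add numerators: {num}/{den}",
    ]
    return steps, (num, den)  # answer left unreduced

def malrule_add_fractions(a, b, c, d):
    """Malrule path: add numerators and denominators separately."""
    num, den = a + c, b + d
    steps = [
        f"Add numerators: {a} + {c} = {num}",
        f"Add denominators: {b} + {d} = {den}",
    ]
    return steps, (num, den)

if __name__ == "__main__":
    params = (1, 2, 1, 3)
    print(render_template(*params))
    for name, path in [("correct", correct_add_fractions),
                       ("malrule", malrule_add_fractions)]:
        steps, (num, den) = path(*params)
        print(name, steps, f"{num}/{den}")
```

Because both paths are ordinary functions of the template parameters, sampling new parameter values yields new paired instances, which is the property the abstract relies on for large-scale generation.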

The Imitation Game for Educational AI

Feb 21, 2025

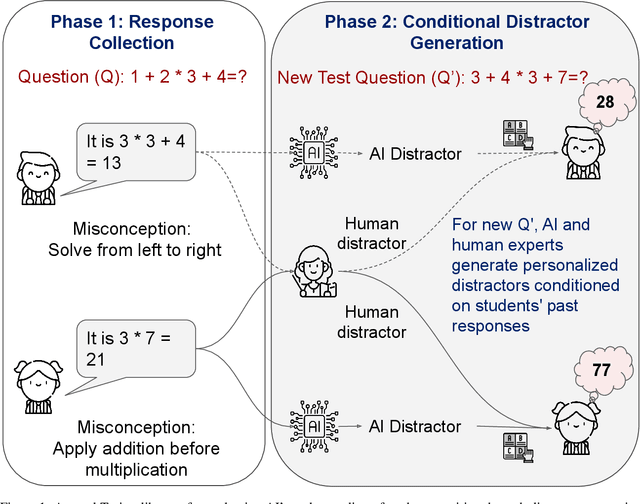

Abstract:As artificial intelligence systems become increasingly prevalent in education, a fundamental challenge emerges: how can we verify if an AI truly understands how students think and reason? Traditional evaluation methods like measuring learning gains require lengthy studies confounded by numerous variables. We present a novel evaluation framework based on a two-phase Turing-like test. In Phase 1, students provide open-ended responses to questions, revealing natural misconceptions. In Phase 2, both AI and human experts, conditioned on each student's specific mistakes, generate distractors for new related questions. By analyzing whether students select AI-generated distractors at rates similar to human expert-generated ones, we can validate if the AI models student cognition. We prove this evaluation must be conditioned on individual responses - unconditioned approaches merely target common misconceptions. Through rigorous statistical sampling theory, we establish precise requirements for high-confidence validation. Our research positions conditioned distractor generation as a probe into an AI system's fundamental ability to model student thinking - a capability that enables adapting tutoring, feedback, and assessments to each student's specific needs.

Do LLMs Make Mistakes Like Students? Exploring Natural Alignment between Language Models and Human Error Patterns

Feb 21, 2025

Abstract:Large Language Models (LLMs) have demonstrated remarkable capabilities in various educational tasks, yet their alignment with human learning patterns, particularly in predicting which incorrect options students are most likely to select in multiple-choice questions (MCQs), remains underexplored. Our work investigates the relationship between LLM generation likelihood and student response distributions in MCQs with a specific focus on distractor selections. We collect a comprehensive dataset of MCQs with real-world student response distributions to explore two fundamental research questions: (1). RQ1 - Do the distractors that students more frequently select correspond to those that LLMs assign higher generation likelihood to? (2). RQ2 - When an LLM selects an incorrect choice, does it choose the same distractor that most students pick? Our experiments reveal moderate correlations between LLM-assigned probabilities and student selection patterns for distractors in MCQs. Additionally, when LLMs make mistakes, they are more likely to select the same incorrect answers that commonly mislead students, which is a pattern consistent across both small and large language models. Our work provides empirical evidence that despite LLMs' strong performance on generating educational content, there remains a gap between LLMs' underlying reasoning process and human cognitive processes in identifying confusing distractors. Our findings also have significant implications for educational assessment development. The smaller language models could be efficiently utilized for automated distractor generation as they demonstrate similar patterns in identifying confusing answer choices as larger language models. This observed alignment between LLMs and student misconception patterns opens new opportunities for generating high-quality distractors that complement traditional human-designed distractors.

LLM-based Cognitive Models of Students with Misconceptions

Oct 17, 2024

Abstract:Accurately modeling student cognition is crucial for developing effective AI-driven educational technologies. A key challenge is creating realistic student models that satisfy two essential properties: (1) accurately replicating specific misconceptions, and (2) correctly solving problems where these misconceptions are not applicable. This dual requirement reflects the complex nature of student understanding, where misconceptions coexist with correct knowledge. This paper investigates whether Large Language Models (LLMs) can be instruction-tuned to meet this dual requirement and effectively simulate student thinking in algebra. We introduce MalAlgoPy, a novel Python library that generates datasets reflecting authentic student solution patterns through a graph-based representation of algebraic problem-solving. Utilizing MalAlgoPy, we define and examine Cognitive Student Models (CSMs) - LLMs instruction-tuned to faithfully emulate realistic student behavior. Our findings reveal that LLMs trained on misconception examples can efficiently learn to replicate errors. However, the training diminishes the model's ability to solve problems correctly, particularly for problem types where the misconceptions are not applicable, thus failing to satisfy the second property of CSMs. We demonstrate that by carefully calibrating the ratio of correct to misconception examples in the training data - sometimes as low as 0.25 - it is possible to develop CSMs that satisfy both properties. Our insights enhance our understanding of AI-based student models and pave the way for effective adaptive learning systems.
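
The calibration of the correct-to-misconception ratio can be sketched as a simple data-mixing step. The sketch below does not use MalAlgoPy and is not taken from the paper; `mix_training_set` and its arguments are hypothetical, and "ratio" is assumed to mean the number of correct examples per misconception example.

```python
import random

def mix_training_set(misconception_examples, correct_examples, ratio, seed=0):
    """Build a training mix with `ratio` correct examples per misconception example.

    Hypothetical helper: the name and the interpretation of `ratio` are
    assumptions, not the paper's implementation.
    """
    n_correct = round(ratio * len(misconception_examples))
    if n_correct > len(correct_examples):
        raise ValueError("not enough correct examples for the requested ratio")
    rng = random.Random(seed)  # seeded so the mix is reproducible
    mixed = list(misconception_examples) + rng.sample(list(correct_examples), n_correct)
    rng.shuffle(mixed)
    return mixed

if __name__ == "__main__":
    misconception = [("misconception", i) for i in range(8)]
    correct = [("correct", i) for i in range(10)]
    mixed = mix_training_set(misconception, correct, ratio=0.25)
    print(len(mixed), sum(1 for kind, _ in mixed if kind == "correct"))
```

Sweeping `ratio` over a range of values and evaluating both properties at each setting would be one way to search for a mix that satisfies them jointly.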

MalAlgoQA: A Pedagogical Approach for Evaluating Counterfactual Reasoning Abilities

Jul 01, 2024

Abstract:This paper introduces MalAlgoQA, a novel dataset designed to evaluate the counterfactual reasoning capabilities of Large Language Models (LLMs) through a pedagogical approach. The dataset comprises mathematics and reading comprehension questions, each accompanied by four answer choices and their corresponding rationales. We focus on the incorrect answer rationales, termed "malgorithms", which highlight flawed reasoning steps leading to incorrect answers and offer valuable insights into erroneous thought processes. We also propose the Malgorithm Identification task, where LLMs are assessed based on their ability to identify the corresponding malgorithm given an incorrect answer choice. To evaluate model performance, we introduce two metrics: Algorithm Identification Accuracy (AIA) for correct answer rationale identification, and Malgorithm Identification Accuracy (MIA) for incorrect answer rationale identification. The task is challenging since state-of-the-art LLMs exhibit significant drops in MIA as compared to AIA. Moreover, we find that the chain-of-thought prompting technique not only fails to consistently enhance MIA, but can also lead to underperformance compared to simple prompting. These findings hold significant implications for the development of more cognitively-inspired LLMs to improve their counterfactual reasoning abilities, particularly through a pedagogical perspective where understanding and rectifying student misconceptions are crucial.

Many-Shot Regurgitation (MSR) Prompting

May 13, 2024

Abstract:We introduce Many-Shot Regurgitation (MSR) prompting, a new black-box membership inference attack framework for examining verbatim content reproduction in large language models (LLMs). MSR prompting involves dividing the input text into multiple segments and creating a single prompt that includes a series of faux conversation rounds between a user and a language model to elicit verbatim regurgitation. We apply MSR prompting to diverse text sources, including Wikipedia articles and open educational resources (OER) textbooks, which provide high-quality, factual content and are continuously updated over time. For each source, we curate two dataset types: one that LLMs were likely exposed to during training ($D_{\rm pre}$) and another consisting of documents published after the models' training cutoff dates ($D_{\rm post}$). To quantify the occurrence of verbatim matches, we employ the Longest Common Substring algorithm and count the frequency of matches at different length thresholds. We then use statistical measures such as Cliff's delta, Kolmogorov-Smirnov (KS) distance, and Kruskal-Wallis H test to determine whether the distribution of verbatim matches differs significantly between $D_{\rm pre}$ and $D_{\rm post}$. Our findings reveal a striking difference in the distribution of verbatim matches between $D_{\rm pre}$ and $D_{\rm post}$, with the frequency of verbatim reproduction being significantly higher when LLMs (e.g. GPT models and LLaMAs) are prompted with text from datasets they were likely trained on. For instance, when using GPT-3.5 on Wikipedia articles, we observe a substantial effect size (Cliff's delta $= -0.984$) and a large KS distance ($0.875$) between the distributions of $D_{\rm pre}$ and $D_{\rm post}$. Our results provide compelling evidence that LLMs are more prone to reproducing verbatim content when the input text is likely sourced from their training data.
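
Two of the measurement steps named above, the Longest Common Substring length and Cliff's delta, can be sketched in plain Python. This is a generic textbook version under stated assumptions, not the authors' code: the function names are illustrative, matching is done at the character level, and the sign of Cliff's delta depends on which sample is passed first, so it may not match the sign convention of the reported value.

```python
def longest_common_substring_len(a: str, b: str) -> int:
    """Length of the longest contiguous substring shared by a and b.

    Standard dynamic programme over character positions, keeping only
    the previous row to save memory.
    """
    prev = [0] * (len(b) + 1)
    best = 0
    for ch_a in a:
        curr = [0] * (len(b) + 1)
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                curr[j] = prev[j - 1] + 1
                best = max(best, curr[j])
        prev = curr
    return best

def cliffs_delta(xs, ys):
    """Cliff's delta: P(x > y) - P(x < y) over all cross-sample pairs."""
    greater = sum(1 for x in xs for y in ys if x > y)
    less = sum(1 for x in xs for y in ys if x < y)
    return (greater - less) / (len(xs) * len(ys))

if __name__ == "__main__":
    source = "the quick brown fox"
    output = "a quick brown dog"
    print(longest_common_substring_len(source, output))
    # Toy match-length samples, invented purely for illustration.
    print(cliffs_delta([1, 2, 3], [4, 5, 6]))
```

In a pipeline of this kind, the first function would be applied to each (source segment, model output) pair, and the second to the two resulting collections of match lengths.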

Regressive Side Effects of Training Language Models to Mimic Student Misconceptions

Apr 23, 2024

Abstract:This paper presents a novel exploration into the regressive side effects of training Large Language Models (LLMs) to mimic student misconceptions for personalized education. We highlight the problem that as LLMs are trained to more accurately mimic student misconceptions, there is a compromise in the factual integrity and reasoning ability of the models. Our work involved training an LLM on a student-tutor dialogue dataset to predict student responses. The results demonstrated a decrease in the model's performance across multiple benchmark datasets, including the ARC reasoning challenge and TruthfulQA, which evaluates the truthfulness of the model's generated responses. Furthermore, the HaluEval Dial dataset, used for hallucination detection, and MemoTrap, a memory-based task dataset, also reported a decline in model accuracy. To combat these side effects, we introduced a "hallucination token" technique. This token, prepended to each student response during training, instructs the model to switch between mimicking student misconceptions and providing factually accurate responses. Despite the significant improvement across all datasets, the technique does not completely restore the LLM's baseline performance, indicating the need for further research in this area. This paper contributes to the ongoing discussion on the use of LLMs for student modeling, emphasizing the need for a balance between personalized education and factual accuracy.

Marking: Visual Grading with Highlighting Errors and Annotating Missing Bits

Apr 22, 2024

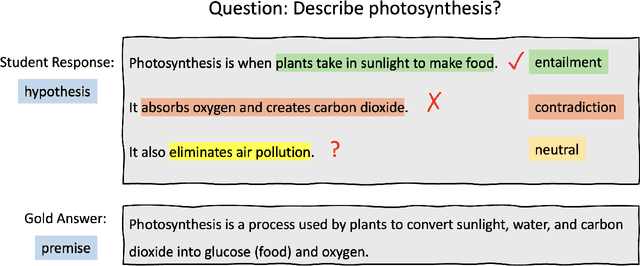

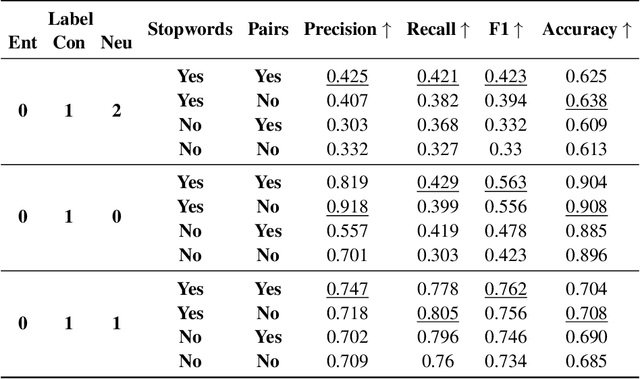

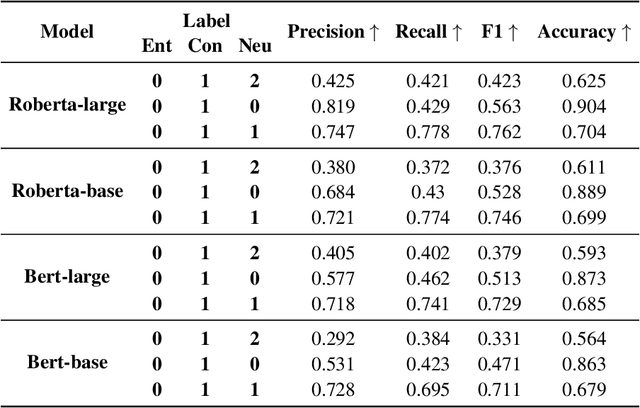

Abstract:In this paper, we introduce "Marking", a novel grading task that enhances automated grading systems by performing an in-depth analysis of student responses and providing students with visual highlights. Unlike traditional systems that provide binary scores, "Marking" identifies and categorizes segments of the student response as correct, incorrect, or irrelevant and detects omissions from gold answers. We introduce a new dataset meticulously curated by Subject Matter Experts specifically for this task. We frame "Marking" as an extension of the Natural Language Inference (NLI) task, which is extensively explored in the field of Natural Language Processing. The gold answer and the student response play the roles of premise and hypothesis in NLI, respectively. We subsequently train language models to identify entailment, contradiction, and neutrality from the student response, akin to NLI, and with the added dimension of identifying omissions from gold answers. Our experimental setup involves the use of transformer models, specifically BERT and RoBERTa, and an intelligent training step using the e-SNLI dataset. We present extensive baseline results highlighting the complexity of the "Marking" task, which sets a clear trajectory for the upcoming study. Our work not only opens up new avenues for research in AI-powered educational assessment tools, but also provides a valuable benchmark for the AI in education community to engage with and improve upon in the future. The code and dataset can be found at https://github.com/luffycodes/marking.

Automated Long Answer Grading with RiceChem Dataset

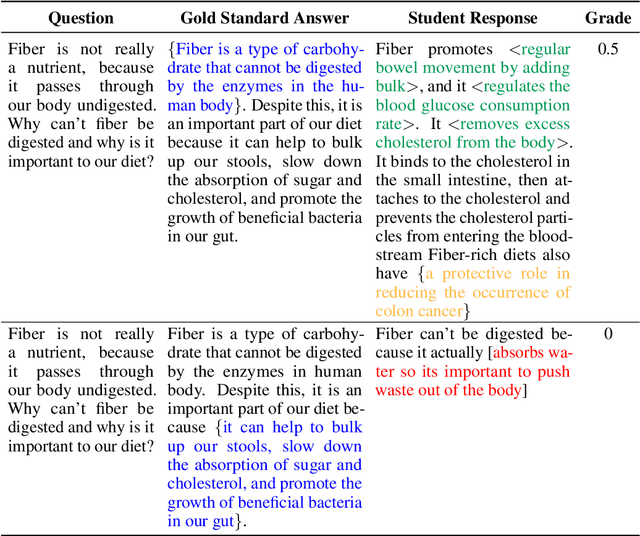

Apr 22, 2024

Abstract:We introduce a new area of study in the field of educational Natural Language Processing: Automated Long Answer Grading (ALAG). Distinguishing itself from Automated Short Answer Grading (ASAG) and Automated Essay Grading (AEG), ALAG presents unique challenges due to the complexity and multifaceted nature of fact-based long answers. To study ALAG, we introduce RiceChem, a dataset derived from a college chemistry course, featuring real student responses to long-answer questions with an average word count notably higher than typical ASAG datasets. We propose a novel approach to ALAG by formulating it as a rubric entailment problem, employing natural language inference models to verify whether each criterion, represented by a rubric item, is addressed in the student's response. This formulation enables the effective use of MNLI for transfer learning, significantly improving the performance of models on the RiceChem dataset. We demonstrate the importance of rubric-based formulation in ALAG, showcasing its superiority over traditional score-based approaches in capturing the nuances of student responses. We also investigate the performance of models in cold start scenarios, providing valuable insights into the practical deployment considerations in educational settings. Lastly, we benchmark state-of-the-art open-sourced Large Language Models (LLMs) on RiceChem and compare their results to GPT models, highlighting the increased complexity of ALAG compared to ASAG. Despite leveraging the benefits of a rubric-based approach and transfer learning from MNLI, the lower performance of LLMs on RiceChem underscores the significant difficulty posed by the ALAG task. With this work, we offer a fresh perspective on grading long, fact-based answers and introduce a new dataset to stimulate further research in this important area. Code: https://github.com/luffycodes/Automated-Long-Answer-Grading.
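
The rubric entailment formulation can be pictured as one entailment check per rubric item, with the student response as premise and the rubric item as hypothesis. The Python sketch below assumes that framing and uses a toy word-overlap function in place of a trained NLI model; `grade_by_rubric`, `toy_entails`, and the example rubric are hypothetical and do not come from the RiceChem code.

```python
from typing import Callable, List

def grade_by_rubric(response: str,
                    rubric: List[str],
                    entails: Callable[[str, str], bool]) -> List[bool]:
    """Return one True/False decision per rubric item.

    `entails(premise, hypothesis)` is any entailment predictor; a real
    system would plug in an NLI model here (assumption).
    """
    return [entails(response, item) for item in rubric]

def toy_entails(premise: str, hypothesis: str) -> bool:
    """Toy stand-in for an NLI model: every hypothesis word occurs in the premise."""
    return set(hypothesis.lower().split()) <= set(premise.lower().split())

if __name__ == "__main__":
    response = "ionization energy increases because the nuclear charge increases"
    rubric = ["nuclear charge increases", "electron shielding decreases"]
    decisions = grade_by_rubric(response, rubric, toy_entails)
    print(decisions, "score:", sum(decisions))
```

Keeping the entailment predictor as a parameter means the per-item decisions, rather than a single holistic score, are what the grader returns, which is the contrast with score-based approaches drawn in the abstract.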

Pedagogical Alignment of Large Language Models

Feb 07, 2024

Abstract:In this paper, we introduce the novel concept of pedagogically aligned Large Language Models (LLMs) that signifies a transformative shift in the application of LLMs within educational contexts. Rather than providing direct responses to user queries, pedagogically aligned LLMs function as scaffolding tools, breaking complex problems into manageable subproblems and guiding students towards the final answer through constructive feedback and hints. The objective is to equip learners with problem-solving strategies that deepen their understanding and internalization of the subject matter. Previous research in this field has primarily applied the supervised finetuning (SFT) approach without framing the objective as an alignment problem, hence not employing reinforcement learning from human feedback (RLHF) methods. This study reinterprets the narrative by viewing the task through the lens of alignment and demonstrates how RLHF methods emerge naturally as a superior alternative for aligning LLM behaviour. Building on this perspective, we propose a novel approach for constructing a reward dataset specifically designed for the pedagogical alignment of LLMs. We apply three state-of-the-art RLHF algorithms and find that they outperform SFT significantly. Our qualitative analyses across model differences and hyperparameter sensitivity further validate the superiority of RLHF over SFT. Also, our study sheds light on the potential of online feedback for enhancing the performance of pedagogically aligned LLMs, thus providing valuable insights for the advancement of these models in educational settings.
