Shaowei Zhang

Self-Motivated Multi-Agent Exploration

Jan 05, 2023

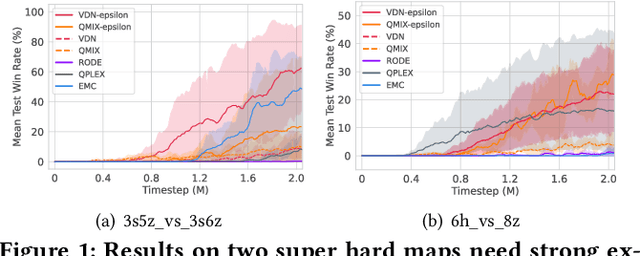

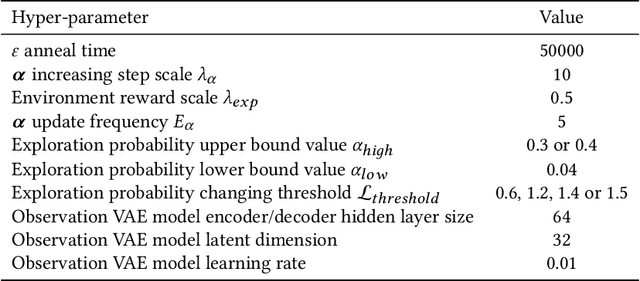

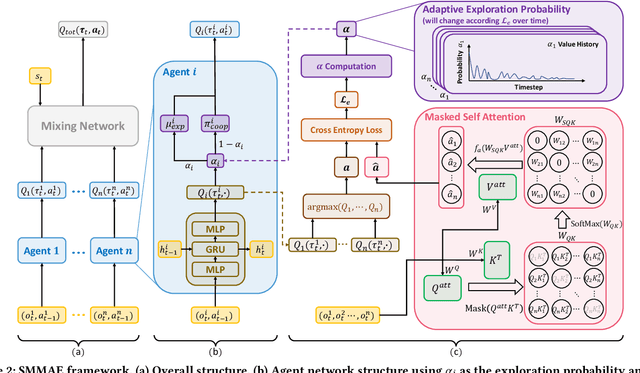

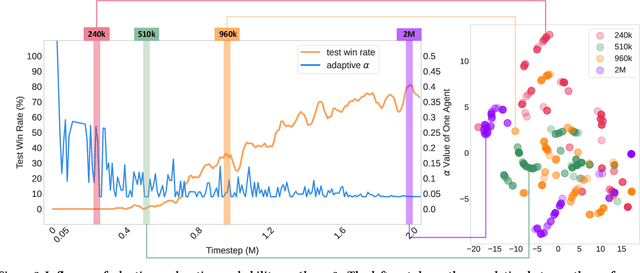

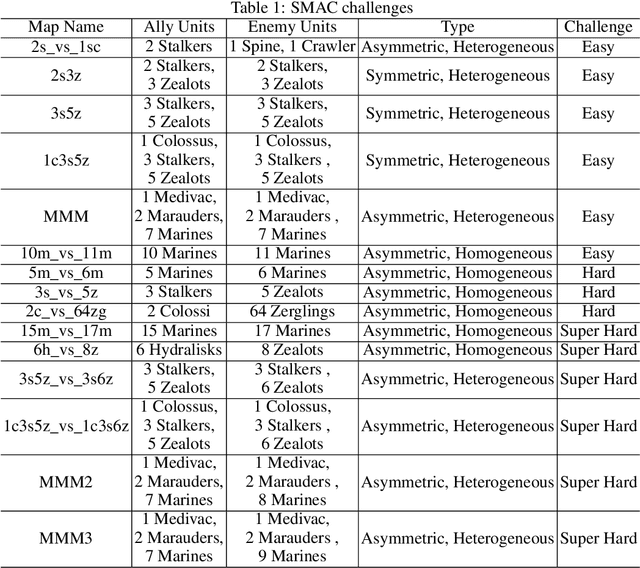

Abstract: In cooperative multi-agent reinforcement learning (CMARL), it is critical for agents to balance self-exploration and team collaboration. Without coordination, agents can hardly accomplish the team task; without enough individual exploration, they become trapped in a local optimum where only easy cooperation is reached. Recent works mainly concentrate on agents' coordinated exploration, which requires exploring a state space that grows exponentially. To address this issue, we propose Self-Motivated Multi-Agent Exploration (SMMAE), which aims to achieve success in team tasks by adaptively finding a trade-off between self-exploration and team cooperation. In SMMAE, we train an independent exploration policy for each agent to maximize its own visited state space. Each agent learns an adjustable exploration probability based on the stability of the joint team policy. Experiments on highly cooperative tasks in the StarCraft II micromanagement benchmark (SMAC) demonstrate that SMMAE can explore task-related states more efficiently, accomplish coordinated behaviours and boost learning performance.
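As a rough illustration of the per-agent exploration probability described in the abstract, the sketch below mixes two action sources: with probability `explore_prob` the agent follows its exploration policy, otherwise it follows the team policy. The function name `select_action`, its parameters, and the string actions are hypothetical, and the abstract does not say how the probability is learned from the stability of the joint policy, so here it is simply passed in as a number.

```python
import random


def select_action(team_action, explore_action, explore_prob, rng):
    """Return explore_action with probability explore_prob, else team_action.

    Assumption: explore_prob is supplied by the caller. In SMMAE it is
    learned from the stability of the joint team policy; that part is
    not modelled here.
    """
    if not 0.0 <= explore_prob <= 1.0:
        raise ValueError("explore_prob must lie in [0, 1]")
    # rng.random() is uniform on [0, 1), so 0.0 never explores
    # and 1.0 always explores.
    if rng.random() < explore_prob:
        return explore_action
    return team_action


# Usage: one agent choosing between two placeholder actions.
rng = random.Random(0)
picks = [select_action("attack", "scout", 0.3, rng) for _ in range(1000)]
explore_fraction = picks.count("scout") / len(picks)
```

Keeping the probability as an explicit input makes the trade-off visible: a value near 0 recovers pure team behaviour, and a value near 1 recovers pure individual exploration.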

Learn From the Past: Experience Ensemble Knowledge Distillation

Feb 25, 2022

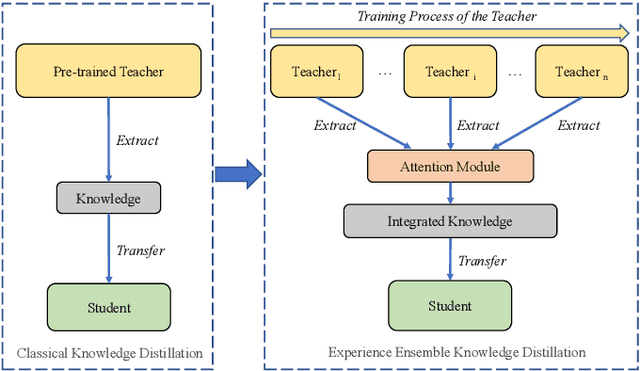

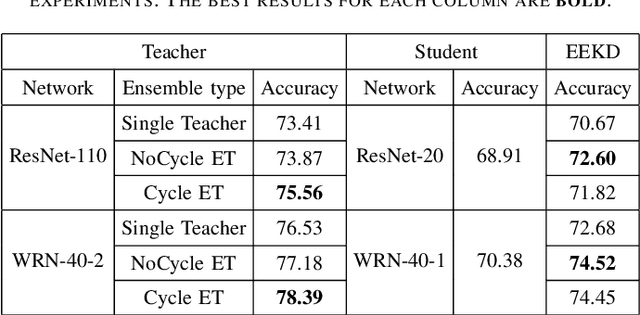

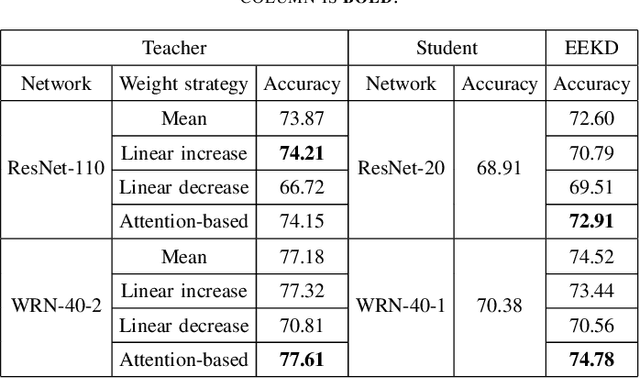

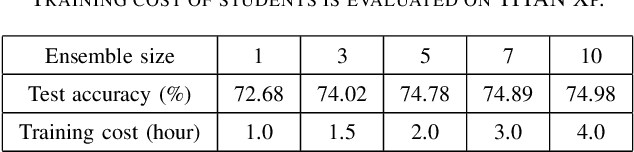

Abstract: Traditional knowledge distillation transfers the "dark knowledge" of a pre-trained teacher network to a student network, and ignores the knowledge in the training process of the teacher, which we call the teacher's experience. However, in realistic educational scenarios, learning experience is often more important than learning results. In this work, we propose a novel knowledge distillation method that integrates the teacher's experience for knowledge transfer, named experience ensemble knowledge distillation (EEKD). We uniformly save a moderate number of intermediate models from the training process of the teacher model, and then integrate the knowledge of these intermediate models with an ensemble technique. A self-attention module is used to adaptively assign weights to different intermediate models in the process of knowledge transfer. Three principles for constructing EEKD, concerning the quality, weights and number of intermediate models, are explored. A surprising finding is that strong ensemble teachers do not necessarily produce strong students. The experimental results on CIFAR-100 and ImageNet show that EEKD outperforms the mainstream knowledge distillation methods and achieves state-of-the-art performance. In particular, EEKD even surpasses standard ensemble distillation while saving training cost.
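The idea of distilling from a weighted ensemble of intermediate teacher checkpoints can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the scaled dot-product scoring in `attention_weights` is a stand-in for the paper's self-attention module, and the temperature value and all function names are hypothetical.

```python
import math


def softmax(values, temperature=1.0):
    """Numerically stable softmax with a temperature."""
    scaled = [v / temperature for v in values]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(student_logits, checkpoint_logits):
    """One weight per checkpoint (assumed scaled dot-product scoring)."""
    scale = math.sqrt(len(student_logits))
    scores = [
        sum(s * t for s, t in zip(student_logits, logits)) / scale
        for logits in checkpoint_logits
    ]
    return softmax(scores)


def ensemble_soft_targets(checkpoint_logits, weights, temperature=4.0):
    """Weighted average of the checkpoints' softened class distributions."""
    probs = [softmax(logits, temperature) for logits in checkpoint_logits]
    n_classes = len(probs[0])
    return [
        sum(w * p[i] for w, p in zip(weights, probs))
        for i in range(n_classes)
    ]


def kd_loss(student_logits, target_probs, temperature=4.0):
    """KL divergence from the ensemble targets to the softened student."""
    student_probs = softmax(student_logits, temperature)
    return sum(
        p * math.log(p / q)
        for p, q in zip(target_probs, student_probs)
        if p > 0.0
    )


# Usage: three saved checkpoints of a 3-class teacher, one student output.
checkpoints = [[1.0, 0.5, 0.1], [2.0, 0.3, -0.5], [3.0, 0.1, -1.0]]
student = [1.5, 0.4, -0.2]
weights = attention_weights(student, checkpoints)
targets = ensemble_soft_targets(checkpoints, weights)
loss = kd_loss(student, targets)
```

In a real training loop the weights would come from a learned module and the loss would be combined with the usual cross-entropy term; both are omitted here to keep the sketch self-contained.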

LINDA: Multi-Agent Local Information Decomposition for Awareness of Teammates

Oct 15, 2021

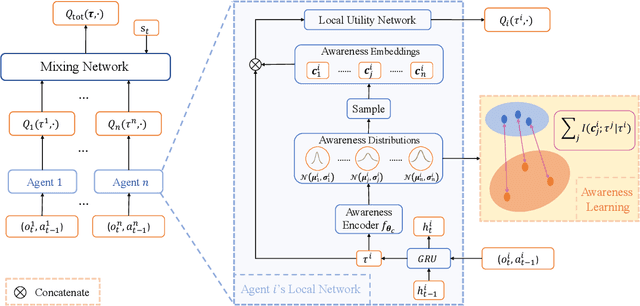

Abstract: In cooperative multi-agent reinforcement learning (MARL), where agents only have access to partial observations, efficiently leveraging local information is critical. Over long periods of observation, agents can build *awareness* of teammates to alleviate the problem of partial observability. However, previous MARL methods usually neglect this use of local information. To address this problem, we propose a novel framework, multi-agent *Local INformation Decomposition for Awareness of teammates* (LINDA), with which agents learn to decompose local information and build awareness of each teammate. We model the awareness as random variables and perform representation learning to ensure the informativeness of awareness representations by maximizing the mutual information between awareness and the actual trajectory of the corresponding agent. LINDA is agnostic to specific algorithms and can be flexibly integrated into different MARL methods. Extensive experiments show that the proposed framework learns informative awareness from local partial observations for better collaboration and significantly improves learning performance, especially on challenging tasks.
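Mutual information is rarely maximized directly; a common route is a variational lower bound. The block below states the generic bound only and is not necessarily the objective used in LINDA. The symbols are assumptions introduced for illustration: $c$ is an awareness variable, $\tau$ is the trajectory of the corresponding teammate, and $q_\phi$ is a learned approximate posterior.

```latex
I(c; \tau) = H(c) - H(c \mid \tau)
           \ge H(c) + \mathbb{E}_{p(c, \tau)}\left[ \log q_\phi(c \mid \tau) \right]
```

The inequality holds because the gap between the two sides is an expected KL divergence between the true posterior $p(c \mid \tau)$ and $q_\phi(c \mid \tau)$, which is non-negative.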
