Shamak Dutta

Hierarchical Informative Path Planning via Graph Guidance and Trajectory Optimization

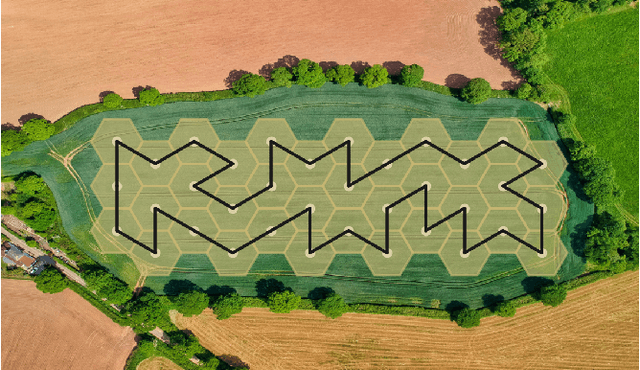

Jan 23, 2026

Abstract: We study informative path planning (IPP) with travel budgets in cluttered environments, where an agent collects measurements of a latent field modeled as a Gaussian process (GP) to reduce uncertainty at target locations. Graph-based solvers provide global guarantees but assume pre-selected measurement locations, while continuous trajectory optimization supports path-based sensing but is computationally intensive and sensitive to initialization in obstacle-dense settings. We propose a hierarchical framework with three stages: (i) graph-based global planning, (ii) segment-wise budget allocation using geometric and kernel bounds, and (iii) spline-based refinement of each segment with hard constraints and obstacle pruning. By combining global guidance with local refinement, our method achieves lower posterior uncertainty than graph-only and continuous baselines, while running faster than continuous-space solvers (up to 9x faster than gradient-based methods and 20x faster than black-box optimizers) across synthetic cluttered environments and Arctic datasets.

Approximation Algorithms for Robot Tours in Random Fields with Guaranteed Estimation Accuracy

Oct 14, 2022

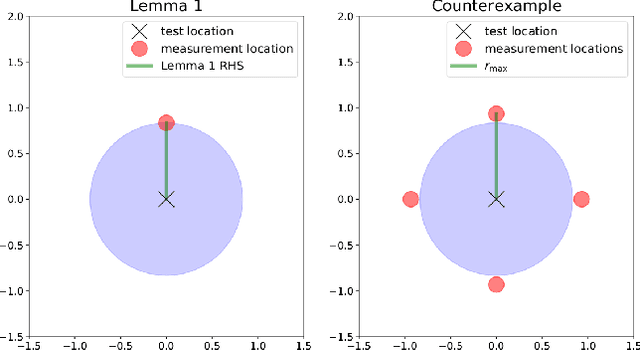

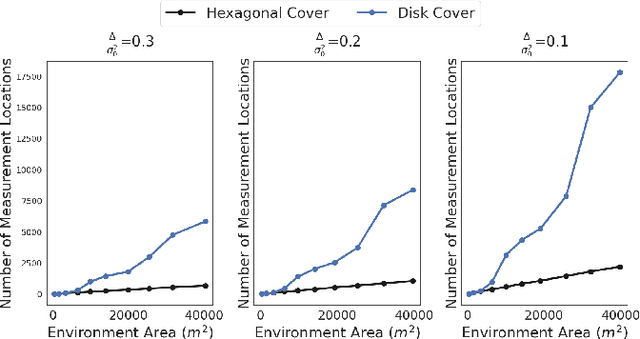

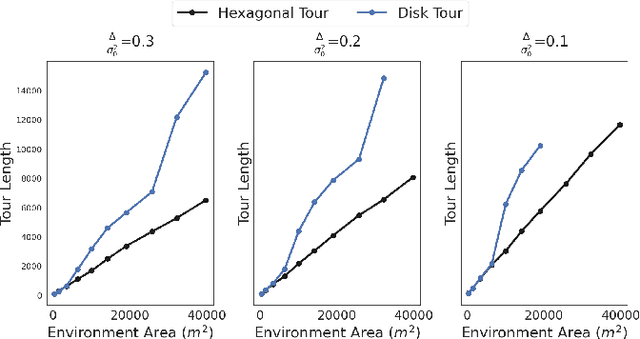

Abstract: We study the sample placement and shortest tour problem for robots tasked with mapping environmental phenomena modeled as stationary random fields. The objective is to minimize the resources used (samples or tour length) while guaranteeing estimation accuracy. We give approximation algorithms for both problems in convex environments. These improve previously known results, both in terms of theoretical guarantees and in simulations. In addition, we disprove an existing claim in the literature on a lower bound for a solution to the sample placement problem.

An Improved Greedy Algorithm for Subset Selection in Linear Estimation

Mar 30, 2022

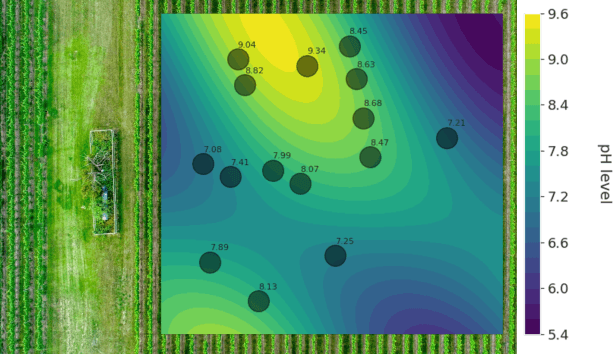

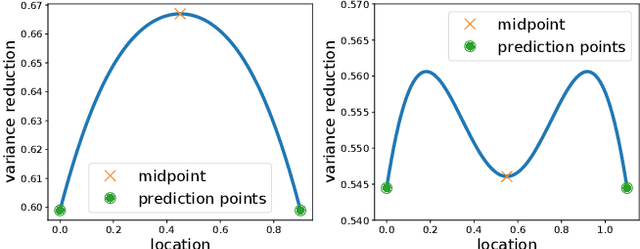

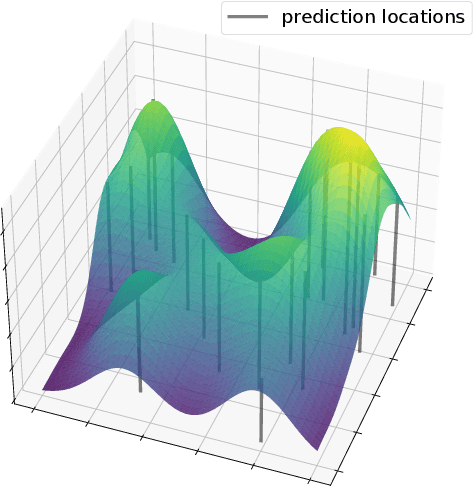

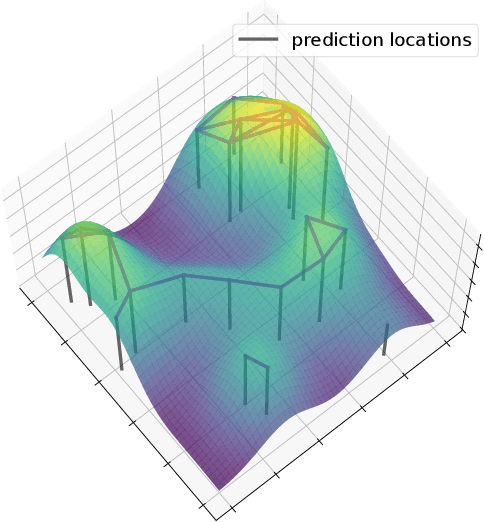

Abstract: In this paper, we consider a subset selection problem in a spatial field where we seek to find a set of k locations whose observations provide the best estimate of the field value at a finite set of prediction locations. The measurements can be taken at any location in the continuous field, and the covariance between the field values at different points is given by the widely used squared exponential covariance function. One approach for observation selection is to perform a grid discretization of the space and obtain an approximate solution using the greedy algorithm. The solution quality improves with a finer grid resolution but at the cost of increased computation. We propose a method to reduce the computational complexity, or conversely to increase solution quality, of the greedy algorithm by considering a search space consisting only of prediction locations and centroids of cliques formed by the prediction locations. We demonstrate the effectiveness of our proposed approach in simulation, both in terms of solution quality and runtime.
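The greedy baseline this abstract refers to can be sketched as follows. This is a minimal illustration, not the paper's implementation: the function names, the noise variance, the length scale, and the choice of total posterior variance at the prediction locations as the objective are all assumptions made for the example. The candidate set is an input, so either a grid or a reduced search space could be passed in.

```python
import numpy as np

def sq_exp_kernel(a, b, length_scale=1.0, signal_var=1.0):
    """Squared exponential covariance between point sets a (n, d) and b (m, d)."""
    sq_dists = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return signal_var * np.exp(-0.5 * sq_dists / length_scale**2)

def posterior_variance_sum(selected, targets, noise_var=1e-2, length_scale=1.0):
    """Total posterior variance at `targets` after noisy observations at `selected`.

    The objective (trace of the posterior covariance) is an assumption of this sketch.
    """
    prior = sq_exp_kernel(targets, targets, length_scale)
    if len(selected) == 0:
        return float(np.trace(prior))
    k_ss = sq_exp_kernel(selected, selected, length_scale)
    k_ss = k_ss + noise_var * np.eye(len(selected))
    k_ts = sq_exp_kernel(targets, selected, length_scale)
    reduction = k_ts @ np.linalg.solve(k_ss, k_ts.T)
    return float(np.trace(prior - reduction))

def greedy_select(candidates, targets, k, **kernel_args):
    """Pick k candidate indices, each time adding the one that lowers the objective most."""
    chosen = []
    for _ in range(k):
        best_idx, best_val = None, np.inf
        for i in range(len(candidates)):
            if i in chosen:
                continue
            val = posterior_variance_sum(candidates[chosen + [i]], targets, **kernel_args)
            if val < best_val:
                best_idx, best_val = i, val
        chosen.append(best_idx)
    return chosen

# Hypothetical example: four prediction locations and a 5 x 5 grid of candidates.
targets = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
axis = np.linspace(0.0, 1.0, 5)
grid = np.array([[x, y] for x in axis for y in axis])
picked = greedy_select(grid, targets, k=2, length_scale=0.5)
```

Each greedy step evaluates every remaining candidate, so the cost grows with the size of the candidate set. That is the trade-off between grid resolution and computation that the abstract describes.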

Convolutional Neural Networks Regularized by Correlated Noise

Apr 03, 2018

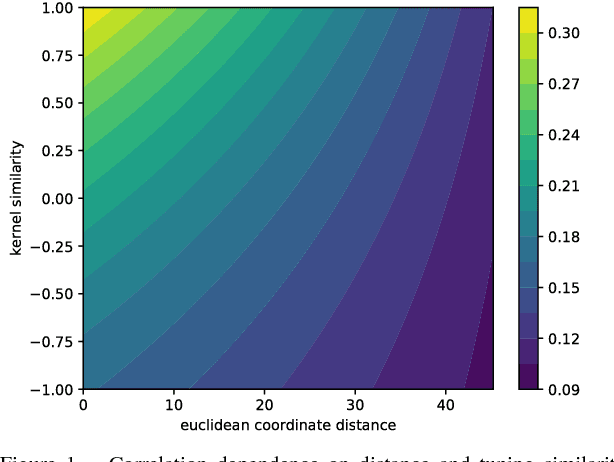

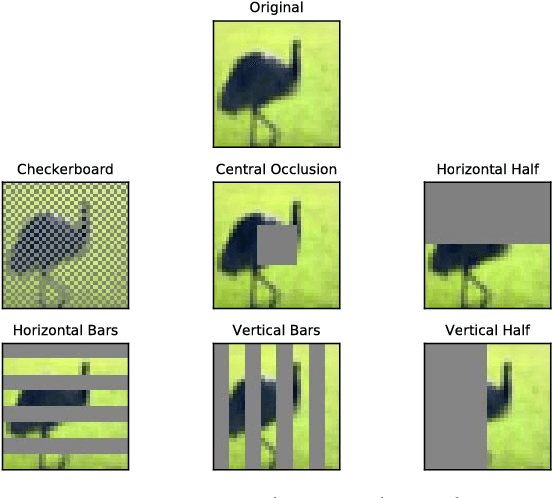

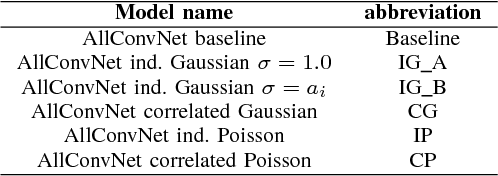

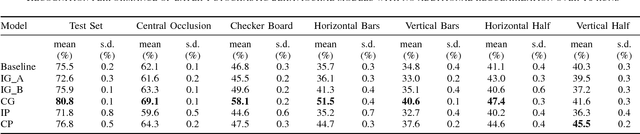

Abstract: Neurons in the visual cortex are correlated in their variability. The presence of correlation impacts cortical processing because noise cannot be averaged out over many neurons. In an effort to understand the functional purpose of correlated variability, we implement and evaluate correlated noise models in deep convolutional neural networks. Inspired by the cortex, correlation is defined as a function of the distance between neurons and their selectivity. We show how to sample from high-dimensional correlated distributions while keeping the procedure differentiable, so that back-propagation can proceed as usual. The impact of correlated variability is evaluated on the classification of occluded and non-occluded images with and without the presence of other regularization techniques, such as dropout. More work is needed to understand the effects of correlations in various conditions; however, in 10 of the 12 cases we studied, the best performance on occluded images was obtained from a model with correlated noise.
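One standard way to keep sampling differentiable is the reparameterization trick: draw independent standard normal noise and map it through a Cholesky factor of the desired covariance. The sketch below illustrates that idea only and is not necessarily the paper's procedure. The exponential distance kernel, the function names, and the jitter term are assumptions, and the dependence of correlation on selectivity is left out.

```python
import numpy as np

def distance_correlation(positions, length_scale=1.0):
    """Correlation that decays exponentially with distance between units (assumed form)."""
    diffs = positions[:, None, :] - positions[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    return np.exp(-dists / length_scale)

def sample_correlated_noise(mean, std, corr, rng, n_samples=1, jitter=1e-6):
    """Draw samples as mean + L @ eps, where L is a Cholesky factor of the covariance.

    All randomness lives in eps, so the output is a deterministic function of
    mean and std given eps. In an autodiff framework, gradients can then flow
    through the same expression.
    """
    cov = corr * np.outer(std, std)
    chol = np.linalg.cholesky(cov + jitter * np.eye(len(mean)))
    eps = rng.standard_normal((n_samples, len(mean)))
    return mean + eps @ chol.T

# Hypothetical example: four units on a line with unit spacing.
positions = np.array([[0.0], [1.0], [2.0], [3.0]])
corr = distance_correlation(positions, length_scale=2.0)
rng = np.random.default_rng(0)
samples = sample_correlated_noise(np.zeros(4), np.ones(4), corr, rng, n_samples=20000)
```

NumPy is used here only to show the arithmetic. Training a network with this noise would need the same expression written in a framework that tracks gradients.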

Barcodes for Medical Image Retrieval Using Autoencoded Radon Transform

Sep 16, 2016

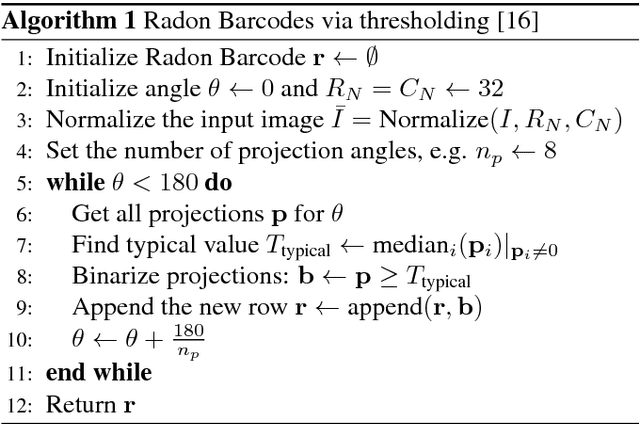

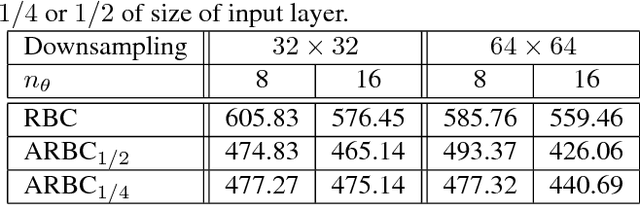

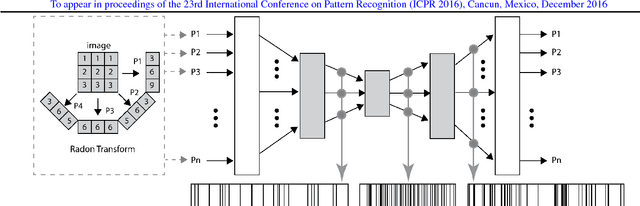

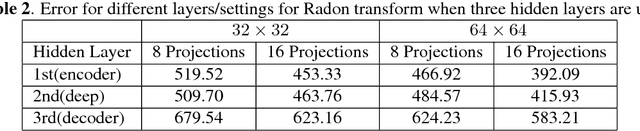

Abstract: Using content-based binary codes to tag digital images has emerged as a promising retrieval technology. Recently, Radon barcodes (RBCs) have been introduced as a new binary descriptor for image search. RBCs are generated by binarization of Radon projections and by assembling them into a vector, namely the barcode. A simple local thresholding has been suggested for binarization. In this paper, we put forward the idea of "autoencoded Radon barcodes". Using images in a training dataset, we autoencode Radon projections to perform binarization on outputs of hidden layers. We employed the mini-batch stochastic gradient descent approach for the training. Each hidden layer of the autoencoder can produce a barcode using a threshold determined based on the range of the logistic function used. The compressing capability of autoencoders apparently reduces the redundancies inherent in Radon projections, leading to more accurate retrieval results. The IRMA dataset with 14,410 X-ray images is used to validate the performance of the proposed method. The experimental results, containing comparison with RBCs, SURF and BRISK, show that autoencoded Radon barcode (ARBC) has the capacity to capture important information and to learn richer representations, resulting in lower retrieval errors for image retrieval measured with the accuracy of the first hit only.
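The plain RBC baseline described in this abstract (project, binarize, concatenate) can be illustrated with a small sketch. It does not cover the autoencoded variant. The four fixed projection angles, the use of the per-projection median as the local threshold, and all function names are assumptions made for the example.

```python
import numpy as np

def projections(image):
    """Line sums of a 2-D image along four directions (0, 45, 90, 135 degrees).

    Restricting to these angles is a simplification: they can be computed
    exactly with row, column, and diagonal sums, with no interpolation.
    """
    height, width = image.shape
    cols = image.sum(axis=0)
    rows = image.sum(axis=1)
    offsets = range(-(height - 1), width)
    diag = np.array([np.trace(image, offset=k) for k in offsets])
    anti = np.array([np.trace(image[:, ::-1], offset=k) for k in offsets])
    return [cols, diag, rows, anti]

def radon_barcode(image):
    """Binarize each projection at its own median and concatenate the bits."""
    bits = [(p > np.median(p)).astype(np.uint8) for p in projections(image)]
    return np.concatenate(bits)

def hamming_distance(code_a, code_b):
    """Number of differing bits between two barcodes of equal length."""
    return int(np.count_nonzero(code_a != code_b))

# Hypothetical example: compare an 8 x 8 test image with its transpose.
image = np.zeros((8, 8))
image[2:5, 1:7] = 1.0
code = radon_barcode(image)
code_t = radon_barcode(image.T)
distance = hamming_distance(code, code_t)
```

Retrieval with such codes reduces to a nearest-neighbor search under Hamming distance, which is what makes binary descriptors cheap to compare.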
