Convolutional Neural Networks Regularized by Correlated Noise

Paper and Code

Apr 03, 2018

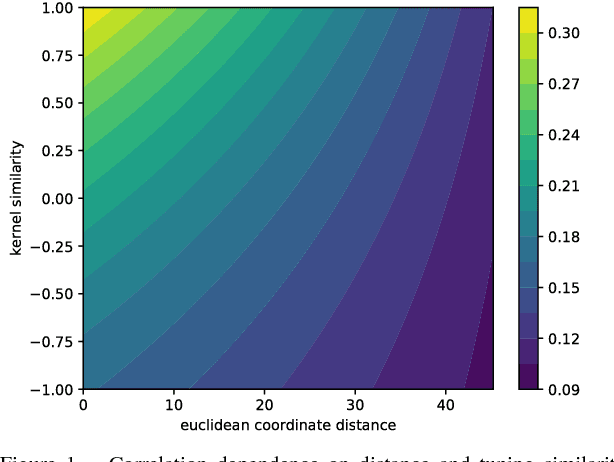

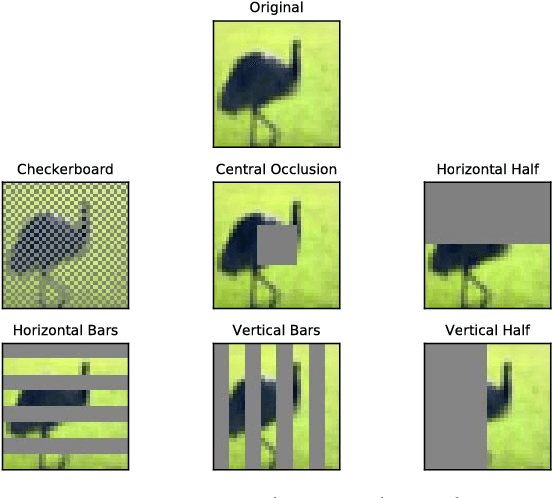

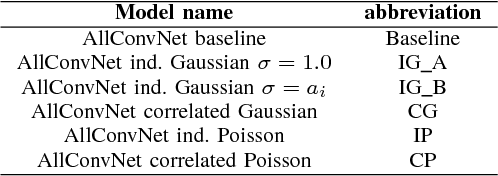

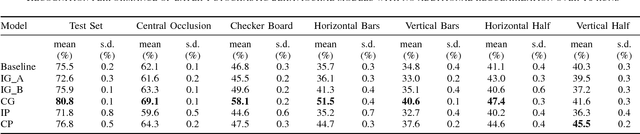

Neurons in the visual cortex are correlated in their variability. The presence of correlation impacts cortical processing because noise cannot be averaged out over many neurons. In an effort to understand the functional purpose of correlated variability, we implement and evaluate correlated noise models in deep convolutional neural networks. Inspired by the cortex, we define correlation as a function of the distance between neurons and of their selectivity. We show how to sample from high-dimensional correlated distributions while keeping the procedure differentiable, so that back-propagation can proceed as usual. The impact of correlated variability is evaluated on the classification of occluded and non-occluded images, with and without other regularization techniques such as dropout. More work is needed to understand the effects of correlations in various conditions; however, in 10 of the 12 cases we studied, the best performance on occluded images was obtained from a model with correlated noise.
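To make the sampling step concrete, here is a minimal NumPy sketch of one common way to draw correlated Gaussian noise so that the randomness stays separate from the parameters. It is not taken from the paper: the kernel in `correlation_matrix` (a Gaussian falloff with distance multiplied by the cosine similarity of selectivity vectors), the parameters `length_scale` and `max_corr`, and both function names are assumptions made for illustration. The sampling uses the standard Cholesky reparameterization: with `L @ L.T` equal to the correlation matrix and `eps` standard normal, `mean + sigma * (L @ eps)` has the desired correlation structure.

```python
import numpy as np


def correlation_matrix(positions, tuning, length_scale=2.0, max_corr=0.5):
    """Hypothetical kernel: correlation falls off with spatial distance and
    follows the cosine similarity of the units' selectivity vectors."""
    # Pairwise Euclidean distances between unit positions.
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    spatial = np.exp(-0.5 * (dist / length_scale) ** 2)

    # Cosine similarity between selectivity (tuning) vectors.
    unit = tuning / np.linalg.norm(tuning, axis=1, keepdims=True)
    similarity = unit @ unit.T

    # The elementwise product of two PSD matrices is PSD; mixing with the
    # identity keeps a unit diagonal and makes the result positive definite.
    n = positions.shape[0]
    return max_corr * spatial * similarity + (1.0 - max_corr) * np.eye(n)


def sample_correlated_noise(mean, sigma, corr, n_samples, rng):
    """Reparameterized sampling: all randomness lives in `eps`, so the
    output is a deterministic, smooth function of `mean` and `sigma`."""
    chol = np.linalg.cholesky(corr)            # corr = chol @ chol.T
    eps = rng.standard_normal((n_samples, mean.shape[0]))
    return mean + sigma * (eps @ chol.T)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    positions = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [3.0, 3.0]])
    tuning = rng.standard_normal((4, 8))       # stand-in selectivity vectors
    corr = correlation_matrix(positions, tuning)
    samples = sample_correlated_noise(np.zeros(4), 1.0, corr, 10_000, rng)
    print(np.round(np.corrcoef(samples, rowvar=False), 2))
```

Because the noise is an affine transform of `eps`, the same expression written in an automatic-differentiation framework lets gradients flow to `mean` and `sigma`, which is the property the abstract describes as keeping the procedure differentiable. For a full convolutional layer the number of units makes a dense Cholesky factor expensive, so this sketch is only practical for small groups of units.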
