Shahin Khobahi

One-Bit Compressive Sensing: Can We Go Deep and Blind?

Mar 13, 2022

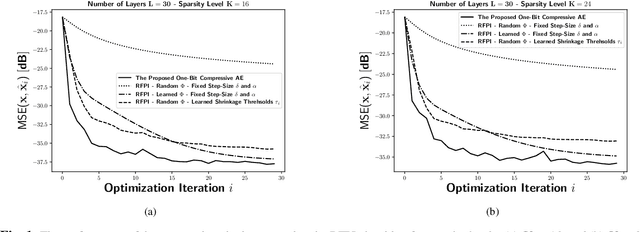

Abstract: One-bit compressive sensing is concerned with the accurate recovery of an underlying sparse signal of interest from its one-bit noisy measurements. Conventional signal recovery approaches for this problem are mainly developed under the assumption that exact knowledge of the sensing matrix is available. In this work, however, we present a novel data-driven and model-based methodology that achieves blind recovery; i.e., signal recovery without requiring knowledge of the sensing matrix. To this end, we make use of the deep unfolding technique and develop a model-driven deep neural architecture designed for this specific task. The proposed deep architecture is able to learn an alternative sensing matrix by taking advantage of the underlying unfolded algorithm, such that the resulting learned recovery algorithm can accurately and quickly (in terms of the number of iterations) recover the underlying compressed signal of interest from its one-bit noisy measurements. In addition, due to the incorporation of domain knowledge and the mathematical model of the system into the proposed deep architecture, the resulting network benefits from enhanced interpretability, has a very small number of trainable parameters, and requires a very small number of training samples compared to the commonly used black-box deep neural network alternatives for the problem at hand.

LoRD-Net: Unfolded Deep Detection Network with Low-Resolution Receivers

Feb 05, 2021

Abstract: The need to recover high-dimensional signals from their noisy low-resolution quantized measurements is widely encountered in communications and sensing. In this paper, we focus on the extreme case of one-bit quantizers, and propose a deep detector entitled LoRD-Net for recovering information symbols from one-bit measurements. Our method is a model-aware data-driven architecture based on deep unfolding of first-order optimization iterations. LoRD-Net has a task-based architecture dedicated to recovering the underlying signal of interest from the one-bit noisy measurements without requiring prior knowledge of the channel matrix through which the one-bit measurements are obtained. The proposed deep detector has far fewer parameters than black-box deep networks due to the incorporation of domain knowledge in the design of its architecture, allowing it to operate in a data-driven fashion while benefiting from the flexibility, versatility, and reliability of model-based optimization methods. LoRD-Net operates in a blind fashion, which requires addressing the non-linear nature of the data-acquisition system as well as identifying a proper optimization objective for signal recovery. Accordingly, we propose a two-stage training method for LoRD-Net, in which the first stage is dedicated to identifying the proper form of the optimization process to unfold, while the second trains the resulting model in an end-to-end manner. We numerically evaluate the proposed receiver architecture for one-bit signal recovery in wireless communications and demonstrate that the proposed hybrid methodology outperforms both data-driven and model-based state-of-the-art methods, while utilizing small datasets, on the order of only 500 samples, for training.

Unfolded Algorithms for Deep Phase Retrieval

Dec 21, 2020

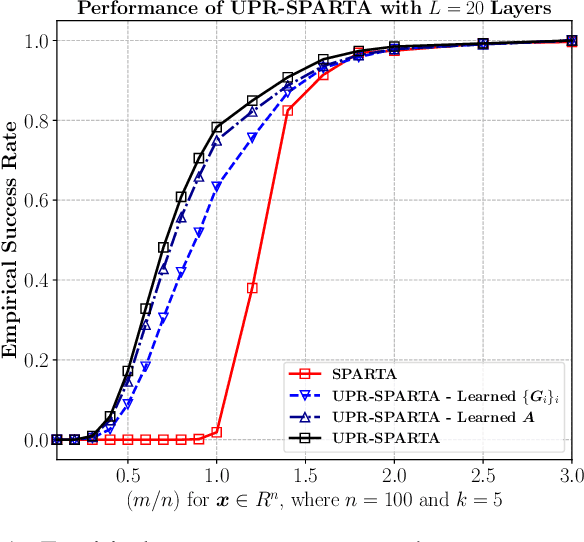

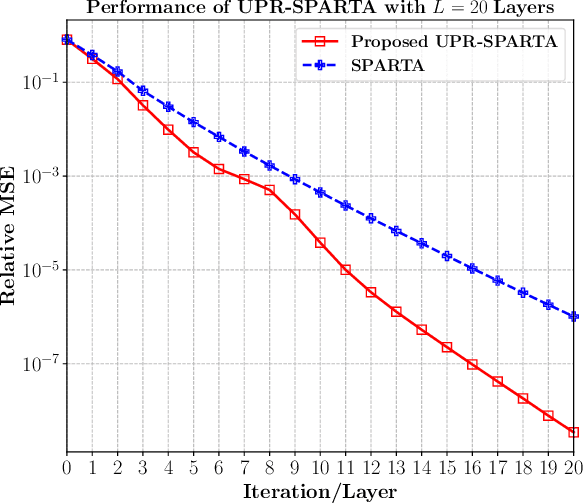

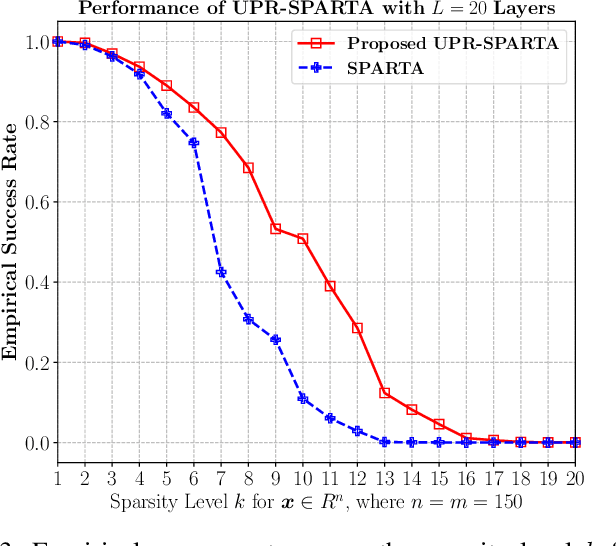

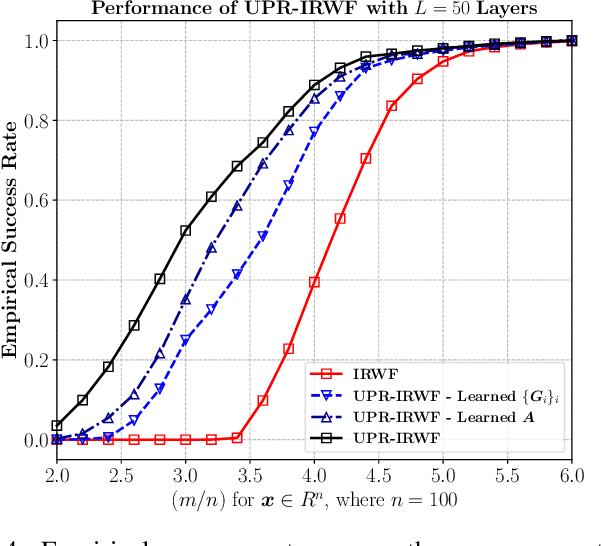

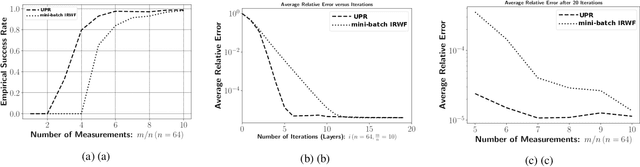

Abstract: The problem of phase retrieval has intrigued researchers for decades, due to its appearance in a wide range of applications. The task of a phase retrieval algorithm is typically to recover a signal from linear phaseless measurements. In this paper, we approach the problem by proposing a hybrid model-based data-driven deep architecture, referred to as Unfolded Phase Retrieval (UPR), that exhibits significant potential in improving the performance of state-of-the-art data-driven and model-based phase retrieval algorithms. The proposed method benefits from the versatility and interpretability of well-established model-based algorithms, while simultaneously benefiting from the expressive power of deep neural networks. In particular, our proposed model-based deep architecture is applied to the conventional phase retrieval problem (via the incremental reshaped Wirtinger flow algorithm) and the sparse phase retrieval problem (via the sparse truncated amplitude flow algorithm), showing promise in both cases. Furthermore, we consider a joint design of the sensing matrix and the signal processing algorithm and utilize the deep unfolding technique in the process. Our numerical results illustrate the effectiveness of such hybrid model-based and data-driven frameworks and showcase the untapped potential of data-aided methodologies to enhance the existing phase retrieval algorithms.
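To make the "linear phaseless measurements" setting concrete, the following is a minimal sketch, not the UPR architecture itself. It assumes a real-valued Gaussian sensing matrix and uses a plain amplitude-based gradient step of the kind that reshaped Wirtinger flow builds on; the function names, the step size `mu`, and the toy dimensions are illustrative assumptions rather than values from the paper.

```python
import random

def matvec(A, x):
    """Dense matrix-vector product A @ x on plain Python lists."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def phaseless_measure(A, x):
    """Phaseless (magnitude-only) measurements y_i = |a_i^T x|."""
    return [abs(v) for v in matvec(A, x)]

def amplitude_loss(A, y, x):
    """Amplitude-based loss (1 / 2m) * sum_i (|a_i^T x| - y_i)^2."""
    m = len(A)
    return sum((abs(v) - yi) ** 2 for v, yi in zip(matvec(A, x), y)) / (2 * m)

def amplitude_step(A, y, x, mu):
    """One gradient step on the amplitude-based loss with step size mu."""
    m, n = len(A), len(x)
    Ax = matvec(A, x)
    # Residual a_i^T x - y_i * sign(a_i^T x), taking sign(0) as +1.
    resid = [v - yi * (1.0 if v >= 0 else -1.0) for v, yi in zip(Ax, y)]
    grad = [sum(A[i][j] * resid[i] for i in range(m)) / m for j in range(n)]
    return [xj - mu * gj for xj, gj in zip(x, grad)]

random.seed(1)
m, n = 30, 5  # assumed toy problem size
A = [[random.gauss(0, 1) for _ in range(n)] for _ in range(m)]
x_true = [1.0, -0.5, 0.3, 0.0, 2.0]
y = phaseless_measure(A, x_true)

# Start near the true signal and take a single step.
x0 = [v + 0.1 for v in x_true]
x1 = amplitude_step(A, y, x0, mu=0.5)
```

Because only magnitudes are observed, `x_true` and its negation produce the same `y`, so recovery is possible only up to a global sign. In an unfolded network, each layer would correspond to one such step, with quantities like `mu` learned from data instead of fixed by hand.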

Deep-RLS: A Model-Inspired Deep Learning Approach to Nonlinear PCA

Nov 18, 2020

Abstract: In this work, we consider the application of model-based deep learning in nonlinear principal component analysis (PCA). Inspired by the deep unfolding methodology, we propose a task-based deep learning approach, referred to as Deep-RLS, that unfolds the iterations of the well-known recursive least squares (RLS) algorithm into the layers of a deep neural network in order to perform nonlinear PCA. In particular, we formulate the nonlinear PCA for the blind source separation (BSS) problem and show through numerical analysis that Deep-RLS results in a significant improvement in the accuracy of recovering the source signals in BSS when compared to the traditional RLS algorithm.

UPR: A Model-Driven Architecture for Deep Phase Retrieval

Mar 09, 2020

Abstract: The problem of phase retrieval has been intriguing researchers for decades due to its appearance in a wide range of applications. The task of a phase retrieval algorithm is typically to recover a signal from linear phaseless measurements. In this paper, we approach the problem by proposing a hybrid model-based data-driven deep architecture, referred to as Unfolded Phase Retrieval (UPR), that shows potential in improving the performance of state-of-the-art phase retrieval algorithms. Specifically, the proposed method benefits from the versatility and interpretability of well-established model-based algorithms, while simultaneously benefiting from the expressive power of deep neural networks. Our numerical results illustrate the effectiveness of such hybrid deep architectures and showcase the untapped potential of data-aided methodologies to enhance the existing phase retrieval algorithms.

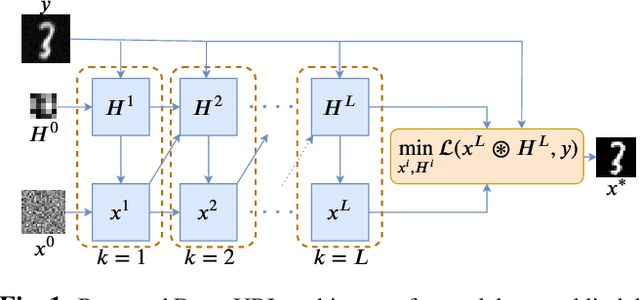

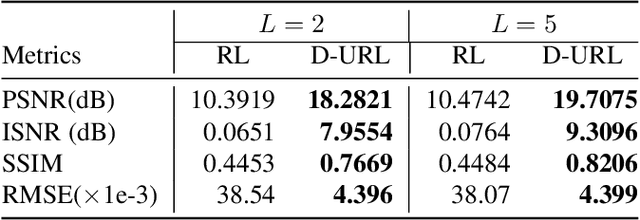

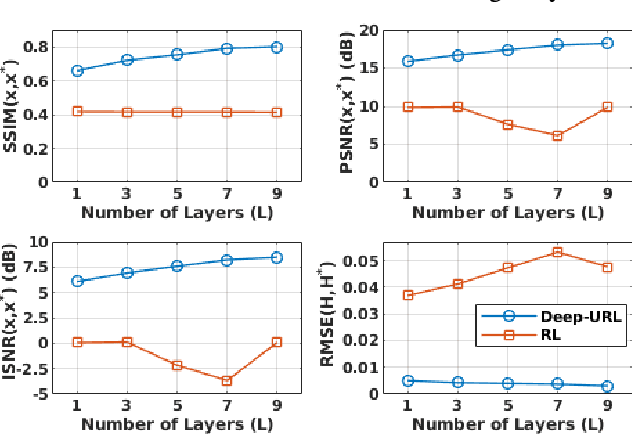

Deep-URL: A Model-Aware Approach To Blind Deconvolution Based On Deep Unfolded Richardson-Lucy Network

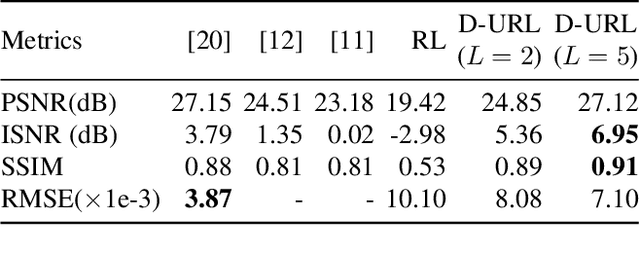

Feb 06, 2020

Abstract: The lack of interpretability in current deep learning models causes serious concerns as they are extensively used for various life-critical applications. Hence, it is of paramount importance to develop interpretable deep learning models. In this paper, we consider the problem of blind deconvolution and propose a novel model-aware deep architecture that allows for the recovery of both the blur kernel and the sharp image from the blurred image. In particular, we propose the Deep Unfolded Richardson-Lucy (Deep-URL) framework -- an interpretable deep-learning architecture that can be seen as an amalgamation of a classical estimation technique and a deep neural network, and consequently leads to improved performance. Our numerical investigations demonstrate significant improvement compared to state-of-the-art algorithms.
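For readers unfamiliar with the classical technique being unfolded, here is a minimal sketch of the standard (non-blind) Richardson-Lucy update on a 1-D signal with a known kernel. It is not the Deep-URL network, which also estimates the blur kernel; the helper names, the zero-padded boundary handling, and the toy signal are assumptions made for illustration.

```python
def convolve_same(signal, kernel):
    """Same-size 1-D convolution with zero padding (odd-length kernel)."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for j, kj in enumerate(kernel):
            idx = i + half - j
            if 0 <= idx < n:
                acc += kj * signal[idx]
        out.append(acc)
    return out

def richardson_lucy(observed, kernel, num_iters, eps=1e-12):
    """Classical Richardson-Lucy iterations with a known blur kernel."""
    flipped = kernel[::-1]  # adjoint of convolution is correlation
    # Normalization term (adjoint applied to all-ones) handles the boundaries.
    norm = convolve_same([1.0] * len(observed), flipped)
    estimate = [1.0] * len(observed)  # flat, strictly positive start
    for _ in range(num_iters):
        blurred = convolve_same(estimate, kernel)
        ratio = [d / max(b, eps) for d, b in zip(observed, blurred)]
        correction = convolve_same(ratio, flipped)
        # Multiplicative update keeps the estimate non-negative.
        estimate = [u * c / max(s, eps)
                    for u, c, s in zip(estimate, correction, norm)]
    return estimate

def squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

kernel = [0.25, 0.5, 0.25]
sharp = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 2.0, 0.0, 0.0]
observed = convolve_same(sharp, kernel)

initial_err = squared_error(convolve_same([1.0] * len(sharp), kernel), observed)
estimate = richardson_lucy(observed, kernel, num_iters=200)
final_err = squared_error(convolve_same(estimate, kernel), observed)
```

Each pass through the loop is one iteration; an unfolded architecture would treat a fixed number of such iterations as network layers and learn some of their components from data.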

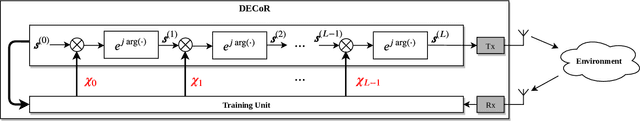

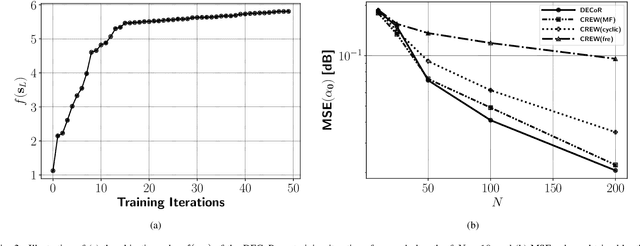

Deep Radar Waveform Design for Efficient Automotive Radar Sensing

Dec 19, 2019

Abstract: In radar systems, unimodular (or constant-modulus) waveform design plays an important role in achieving better clutter/interference rejection, as well as a more accurate estimation of the target parameters. The design of such sequences has been studied widely in the last few decades, with most design algorithms requiring sophisticated a priori knowledge of environmental parameters, which may be difficult to obtain in real-time scenarios. In this paper, we propose a novel hybrid model-driven and data-driven architecture that adapts to the ever-changing environment and allows for adaptive unimodular waveform design. In particular, the approach lays the groundwork for developing extremely low-cost waveform design and processing frameworks for radar systems deployed in autonomous vehicles. The proposed model-based deep architecture imitates a well-known unimodular signal design algorithm in its structure, and can quickly infer statistical information from the environment using the observed data. Our numerical experiments portray the advantages of using the proposed method for efficient radar waveform design in time-varying environments.

Deep One-bit Compressive Autoencoding

Dec 10, 2019

Abstract: Parameterized mathematical models play a central role in the understanding and design of complex information systems. However, they often cannot take into account the intricate interactions innate to such systems. In contrast, purely data-driven approaches do not need explicit mathematical models for data generation and have wider applicability at the cost of interpretability. In this paper, we consider the design of a one-bit compressive autoencoder, and propose a novel hybrid model-based and data-driven methodology that allows us not only to design the sensing matrix for one-bit data acquisition, but also to learn the latent parameters of an iterative optimization algorithm specifically designed for the problem of one-bit sparse signal recovery. Our results demonstrate a significant improvement compared to state-of-the-art model-based algorithms.

Model-Aware Deep Architectures for One-Bit Compressive Variational Autoencoding

Nov 27, 2019

Abstract: Parameterized mathematical models play a central role in the understanding and design of complex information systems. However, they often cannot take into account the intricate interactions innate to such systems. In contrast, purely data-driven approaches do not need explicit mathematical models for data generation and have wider applicability at the cost of interpretability. In this paper, we consider the design of a one-bit compressive variational autoencoder, and propose a novel hybrid model-based and data-driven methodology that allows us not only to design the sensing matrix and the quantization thresholds for one-bit data acquisition, but also to learn the latent parameters of iterative optimization algorithms specifically designed for the problem of one-bit sparse signal recovery. In addition, the proposed method has the ability to adaptively learn the proper quantization thresholds, paving the way for amplitude recovery in one-bit compressive sensing. Our results demonstrate a significant improvement compared to state-of-the-art model-based algorithms.

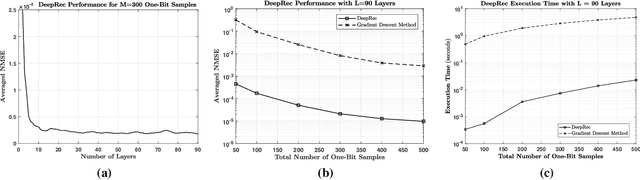

Deep Signal Recovery with One-Bit Quantization

Nov 30, 2018

Abstract: Machine learning, and more specifically deep learning, has shown remarkable performance in sensing, communications, and inference. In this paper, we consider the application of the deep unfolding technique to the problem of signal reconstruction from one-bit noisy measurements. Namely, we propose a model-based machine learning method and unfold the iterations of an inference optimization algorithm into the layers of a deep neural network for one-bit signal recovery. The resulting network, which we refer to as DeepRec, can efficiently handle the recovery of high-dimensional signals from acquired one-bit noisy measurements. As shown through numerical analysis, the proposed method improves accuracy and computational efficiency with respect to the original framework.
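The idea of unfolding iterations into layers can be illustrated with a small sketch. The code below is not DeepRec: it swaps in a binary-iterative-hard-thresholding-style update as a stand-in for the unfolded algorithm, uses noise-free measurements, and fixes the per-layer step sizes by hand where a trained network would learn them. All names, dimensions, and values are illustrative assumptions.

```python
import math
import random

def sign(v):
    """One-bit quantizer; sign(0) is taken as +1."""
    return 1.0 if v >= 0 else -1.0

def one_bit_measure(A, x):
    """One-bit measurements y = sign(A x) (noise-free in this sketch)."""
    return [sign(sum(a * xi for a, xi in zip(row, x))) for row in A]

def hard_threshold(x, k):
    """Keep the k largest-magnitude entries of x and zero out the rest."""
    keep = set(sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)[:k])
    return [x[i] if i in keep else 0.0 for i in range(len(x))]

def unfolded_recovery(A, y, k, step_sizes):
    """Run one 'layer' per entry of step_sizes, then return the estimate."""
    m, n = len(A), len(A[0])
    x = [0.0] * n
    for tau in step_sizes:
        # Sign-consistency residual between observed and predicted bits.
        resid = [yi - si for yi, si in zip(y, one_bit_measure(A, x))]
        grad = [sum(A[i][j] * resid[i] for i in range(m)) for j in range(n)]
        x = hard_threshold([xj + tau / m * gj for xj, gj in zip(x, grad)], k)
        # One-bit data carries no amplitude, so project onto the unit sphere.
        norm = math.sqrt(sum(v * v for v in x))
        if norm > 0:
            x = [v / norm for v in x]
    return x

random.seed(0)
m, n, k = 40, 10, 2  # assumed toy problem size and sparsity
A = [[random.gauss(0, 1) for _ in range(n)] for _ in range(m)]
x_true = [0.0] * n
x_true[1], x_true[6] = 0.6, -0.8
y = one_bit_measure(A, x_true)

# Ten layers with hand-picked step sizes standing in for learned ones.
x_hat = unfolded_recovery(A, y, k, step_sizes=[1.0] * 10)
```

The number of layers equals the length of `step_sizes`, which is what makes the iteration count a fixed architectural choice once the algorithm is unfolded.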
