Seungjoo Shin

Locality-aware Gaussian Compression for Fast and High-quality Rendering

Jan 10, 2025

Abstract: We present LocoGS, a locality-aware 3D Gaussian Splatting (3DGS) framework that exploits the spatial coherence of 3D Gaussians for compact modeling of volumetric scenes. To this end, we first analyze the local coherence of 3D Gaussian attributes and propose a novel locality-aware 3D Gaussian representation that encodes locally coherent Gaussian attributes using a neural field representation with a minimal storage requirement. On top of this representation, LocoGS adds further components to maximize compression performance: dense initialization, an adaptive spherical harmonics bandwidth scheme, and different encoding schemes for different Gaussian attributes. Experimental results demonstrate that our approach surpasses existing compact Gaussian representations in rendering quality on representative real-world 3D datasets while achieving 54.6$\times$ to 96.6$\times$ smaller storage size and 2.1$\times$ to 2.4$\times$ faster rendering than 3DGS. Our approach also renders on average 2.4$\times$ faster than the state-of-the-art compression method at comparable compression performance.

Binary Radiance Fields

Jun 13, 2023

Abstract: In this paper, we propose binary radiance fields (BiRF), a storage-efficient radiance field representation employing binary feature encoding, which encodes local features using binary parameters that take a value of either $+1$ or $-1$. This binarization strategy lets us represent the feature grid with a highly compact feature encoding and a dramatic reduction in storage size. Furthermore, our 2D-3D hybrid feature grid design enhances the compactness of the feature encoding, as the 3D grid captures the main components while the 2D grids capture details. In our experiments, the binary radiance field representation outperforms state-of-the-art (SOTA) efficient radiance field models in reconstruction quality while using less storage. In particular, our model achieves impressive results in static scene reconstruction, with a PSNR of 31.53 dB for Synthetic-NeRF scenes, 34.26 dB for Synthetic-NSVF scenes, and 28.02 dB for Tanks and Temples scenes while using only 0.7 MB, 0.8 MB, and 0.8 MB of storage space, respectively. We hope the proposed binary radiance field representation will make radiance fields more accessible without a storage bottleneck.
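To make the storage argument concrete, here is a minimal sketch of why parameters restricted to $+1$ or $-1$ are cheap to store: each one fits in a single bit. This is an illustration only, not the BiRF implementation; the sign-based `binarize` rule, the helper names, and the tiny grid shape are all assumptions introduced for the example.

```python
import numpy as np

def binarize(weights):
    """Map real-valued parameters to +1 / -1 by sign (assumption: zero maps to +1)."""
    return np.where(weights >= 0, 1, -1).astype(np.int8)

def pack_binary(binary):
    """Pack a +1/-1 array into bytes, one bit per parameter."""
    bits = (binary.ravel() > 0).astype(np.uint8)  # +1 -> 1, -1 -> 0
    return np.packbits(bits), bits.size

def unpack_binary(packed, count):
    """Recover the flat +1/-1 array from its packed bits."""
    bits = np.unpackbits(packed, count=count)
    return bits.astype(np.int8) * 2 - 1  # 1 -> +1, 0 -> -1

# Hypothetical 4 x 8 feature grid of real-valued parameters.
rng = np.random.default_rng(0)
latent = rng.standard_normal((4, 8)).astype(np.float32)

binary = binarize(latent)
packed, count = pack_binary(binary)
restored = unpack_binary(packed, count).reshape(binary.shape)

print(latent.nbytes, "bytes as float32 ->", packed.nbytes, "bytes packed")
```

In this sketch the 32 parameters occupy 128 bytes as `float32` and 4 bytes once packed, a 32-fold reduction before any further design choices such as the 2D-3D hybrid grid are considered.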

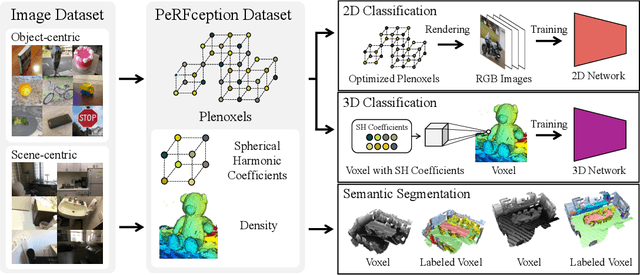

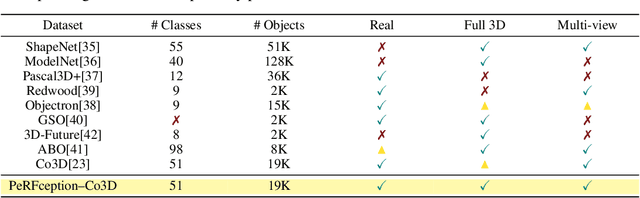

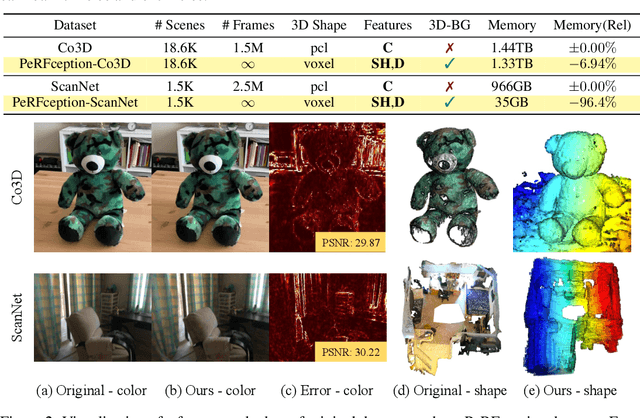

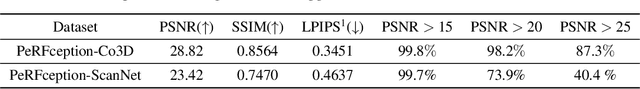

PeRFception: Perception using Radiance Fields

Aug 24, 2022

Abstract: The recent progress in implicit 3D representation, i.e., Neural Radiance Fields (NeRFs), has made accurate and photorealistic 3D reconstruction possible in a differentiable manner. This new representation can effectively convey the information of hundreds of high-resolution images in one compact format and allows photorealistic synthesis of novel views. In this work, using a variant of NeRF called Plenoxels, we create the first large-scale implicit representation datasets for perception tasks, called PeRFception, which consists of two parts that incorporate both object-centric and scene-centric scans for classification and segmentation. It achieves a significant memory compression rate (96.4\%) relative to the original dataset, while containing both 2D and 3D information in a unified form. We construct classification and segmentation models that take this implicit format directly as input, and we also propose a novel augmentation technique to avoid overfitting on image backgrounds. The code and data are publicly available at https://postech-cvlab.github.io/PeRFception .
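The 96.4\% figure can be compared with the multiplicative factors quoted in the other abstracts by converting it to a ratio. The sketch below assumes that "compression rate" means the fraction of the original size that is removed; the abstract does not define the term, and the function name is hypothetical.

```python
def reduction_to_ratio(reduction_percent):
    """Convert a percentage size reduction into an 'N times smaller' factor.

    Assumption: reduction_percent is the share of the original size removed,
    so the compressed data keeps (100 - reduction_percent) percent of it.
    """
    kept_fraction = 1.0 - reduction_percent / 100.0
    return 1.0 / kept_fraction

ratio = reduction_to_ratio(96.4)
print(f"{ratio:.1f}x smaller")
```

Under that reading, a 96.4\% reduction leaves 3.6\% of the original size, which corresponds to roughly a 27.8-fold reduction.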
