Seunghyeon Jeon

MIMO Detection under Hardware Impairments: Learning with Noisy Labels

Jun 08, 2023

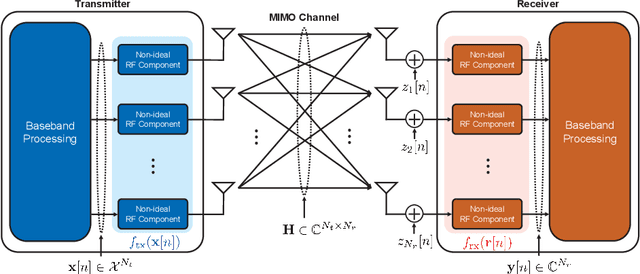

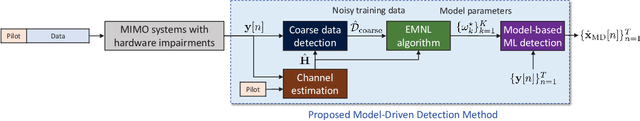

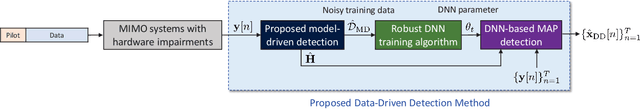

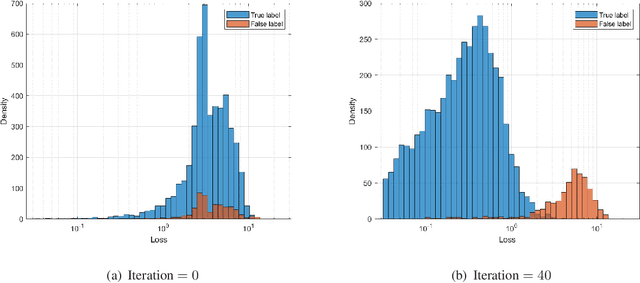

Abstract: This paper considers a data detection problem in multiple-input multiple-output (MIMO) communication systems with hardware impairments. To address challenges posed by nonlinear and unknown distortion in received signals, two learning-based detection methods, referred to as model-driven and data-driven, are presented. The model-driven method employs a generalized Gaussian distortion model to approximate the conditional distribution of the distorted received signal. By using the outputs of coarse data detection as noisy training data, the model-driven method avoids the need for additional training overhead beyond traditional pilot overhead for channel estimation. An expectation-maximization algorithm is devised to accurately learn the parameters of the distortion model from noisy training data. To resolve a model mismatch problem in the model-driven method, the data-driven method employs a deep neural network (DNN) for approximating a-posteriori probabilities for each received signal. This method uses the outputs of the model-driven method as noisy labels and therefore does not require extra training overhead. To avoid the overfitting problem caused by noisy labels, a robust DNN training algorithm is devised, which involves a warm-up period, sample selection, and loss correction. Simulation results demonstrate that the two proposed methods outperform existing solutions with the same overhead under various hardware impairment scenarios.

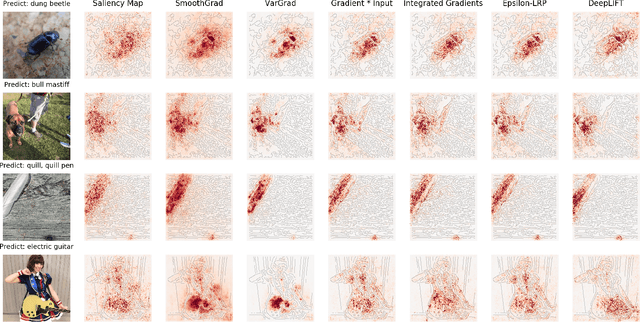

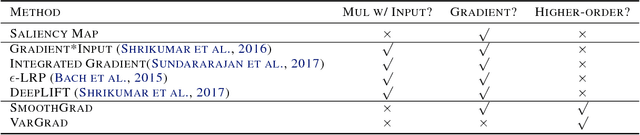

Noise-adding Methods of Saliency Map as Series of Higher Order Partial Derivative

Jun 08, 2018

Abstract: SmoothGrad and VarGrad are techniques that enhance the empirical quality of standard saliency maps by adding noise to the input. However, few works have provided a rigorous theoretical interpretation of these methods. We analytically formalize the result of these noise-adding methods, which yields two observations. First, SmoothGrad does not make the gradient of the score function smooth. Second, VarGrad is independent of the gradient of the score function. We believe that our findings provide a clue to reveal the relationship between local explanation methods of deep neural networks and higher-order partial derivatives of the score function.
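
For readers unfamiliar with the two noise-adding methods named in the abstract, the following is a minimal sketch of how they are commonly defined: SmoothGrad averages the gradient of the score function over Gaussian-perturbed copies of the input, and VarGrad takes the elementwise variance of the same gradients. This is not the paper's code. The helper `smoothgrad_vargrad`, the toy score functions, and the values of `sigma` and `n_samples` are all assumptions chosen for illustration.

```python
import numpy as np

def smoothgrad_vargrad(grad_fn, x, sigma=0.1, n_samples=50, seed=0):
    """Hypothetical helper: return (SmoothGrad, VarGrad) maps for input x.

    grad_fn maps an input array to the gradient of a scalar score
    function at that input (same shape as the input).
    """
    rng = np.random.default_rng(seed)
    # Draw n_samples Gaussian perturbations with the same shape as x.
    noise = rng.normal(0.0, sigma, size=(n_samples,) + x.shape)
    # Evaluate the gradient at each noisy copy of the input.
    grads = np.stack([grad_fn(x + eps) for eps in noise])
    smooth = grads.mean(axis=0)  # SmoothGrad: mean of noisy gradients
    var = grads.var(axis=0)      # VarGrad: variance of noisy gradients
    return smooth, var

# Toy score functions with known gradients (illustrative assumptions).
w = np.array([1.0, -2.0, 0.5])

def linear_grad(x):
    # Gradient of S(x) = w . x is constant in x.
    return w

def quad_grad(x):
    # Gradient of S(x) = sum(x**2) is 2x.
    return 2.0 * x

x0 = np.array([0.3, -0.1, 0.8])
sg_lin, vg_lin = smoothgrad_vargrad(linear_grad, x0)
sg_quad, vg_quad = smoothgrad_vargrad(quad_grad, x0)
```

In this toy setting, the linear score function has a constant gradient, so SmoothGrad returns `w` and VarGrad is zero. For the quadratic score function, VarGrad is determined by the noise level and the second derivative rather than by the gradient value at `x0`, which is at least in line with the abstract's second observation.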
