Seung-Ik Lee

Exploiting Autoencoder's Weakness to Generate Pseudo Anomalies

May 09, 2024

Abstract: Due to the rare occurrence of anomalous events, a typical approach to anomaly detection is to train an autoencoder (AE) with normal data only so that it learns the patterns or representations of the normal training data. At test time, the trained AE is expected to reconstruct normal data well but anomalous data poorly. However, contrary to this expectation, anomalous data is often reconstructed well too. To further separate the reconstruction quality of normal and anomalous data, we propose creating pseudo anomalies from learned adaptive noise by exploiting this weakness of the AE, i.e., reconstructing anomalies too well. The generated noise is added to the normal data to create pseudo anomalies. Extensive experiments on the Ped2, Avenue, ShanghaiTech, CIFAR-10, and KDDCUP datasets demonstrate the effectiveness and generic applicability of our approach in improving the discriminative capability of AEs for anomaly detection.

* SharedIt link: https://rdcu.be/dGOrh
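
The noise-addition step mentioned in the abstract above can be illustrated with a very small sketch. It is not the paper's method: the paper learns the noise adaptively, whereas here a fixed Gaussian sample stands in for it. The function name `make_pseudo_anomaly` and the clipping to a [0, 1] pixel range are assumptions made for illustration.

```python
import numpy as np

def make_pseudo_anomaly(x, noise):
    """Add noise to a normal sample and keep the result in the assumed [0, 1] range."""
    return np.clip(x + noise, 0.0, 1.0)

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(8, 8))      # stand-in for a normal image
noise = rng.normal(0.0, 0.2, size=x.shape)  # fixed Gaussian stand-in for the learned noise
pseudo = make_pseudo_anomaly(x, noise)
```

In a training loop, `pseudo` would be fed to the AE alongside real normal samples; how the two are mixed and what loss is applied to each is left to the paper.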

Constricting Normal Latent Space for Anomaly Detection with Normal-only Training Data

Mar 24, 2024

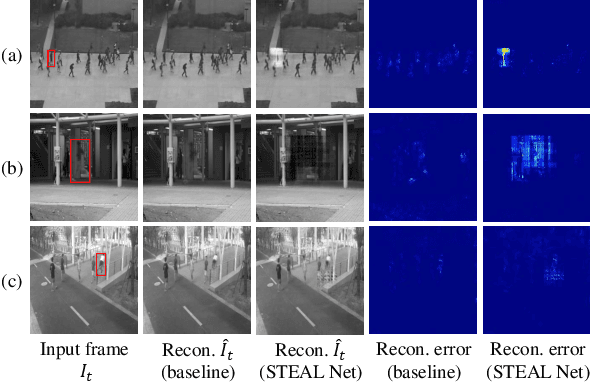

Abstract: To devise an anomaly detection model using only normal training data, an autoencoder (AE) is typically trained to reconstruct the data. As a result, the AE can extract normal representations in its latent space. At test time, since the AE has not been trained on real anomalies, it is expected to reconstruct anomalous data poorly. However, several researchers have observed that this is not the case. In this work, we propose to limit the reconstruction capability of the AE by introducing a novel latent constriction loss, which is added to the existing reconstruction loss. Our method adds no extra computational cost to the AE at test time. Evaluations on three video anomaly detection benchmark datasets, i.e., Ped2, Avenue, and ShanghaiTech, demonstrate the effectiveness of our method in limiting the reconstruction capability of the AE, which leads to a better anomaly detection model.

PseudoBound: Limiting the anomaly reconstruction capability of one-class classifiers using pseudo anomalies

Mar 19, 2023

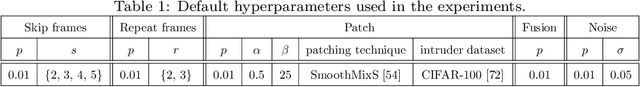

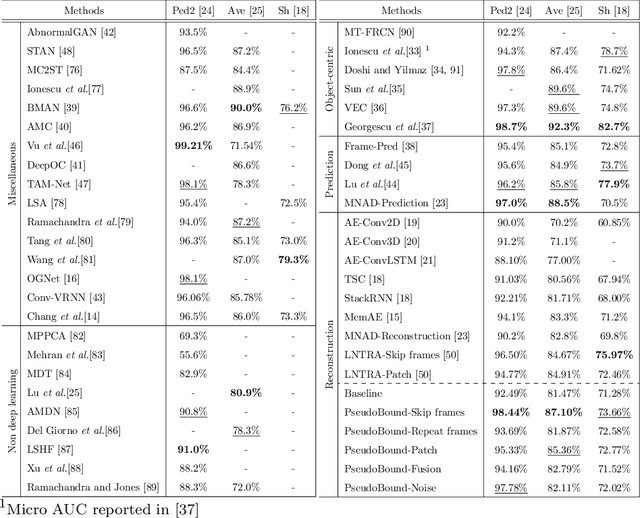

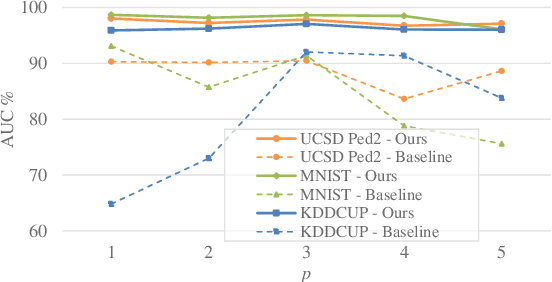

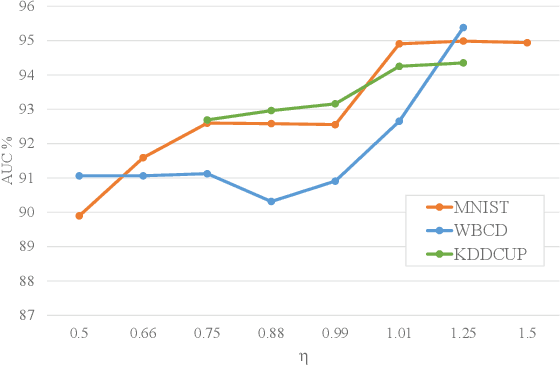

Abstract: Due to the rarity of anomalous events, video anomaly detection is typically approached as a one-class classification (OCC) problem. Typically in OCC, an autoencoder (AE) is trained to reconstruct the normal-only training data with the expectation that, at test time, it will reconstruct anomalous data poorly. However, previous studies have shown that, even when trained with only normal data, AEs can often reconstruct anomalous data well too, resulting in decreased performance. To mitigate this problem, we propose to limit the anomaly reconstruction capability of AEs by incorporating pseudo anomalies during the training of an AE. Extensive experiments using five types of pseudo anomalies show the robustness of our training mechanism towards any kind of pseudo anomaly. Moreover, we demonstrate the effectiveness of our proposed pseudo anomaly based training approach against several existing state-of-the-art (SOTA) methods on three benchmark video anomaly datasets, outperforming all the other reconstruction-based approaches on two datasets and showing the second best performance on the other dataset.

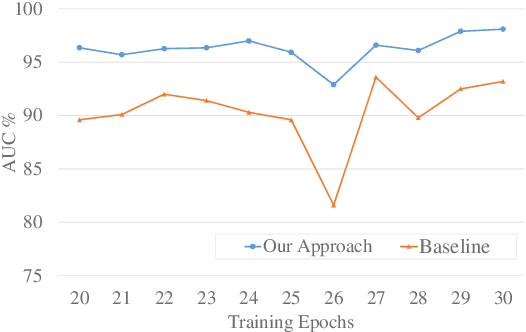

Stabilizing Adversarially Learned One-Class Novelty Detection Using Pseudo Anomalies

Mar 25, 2022

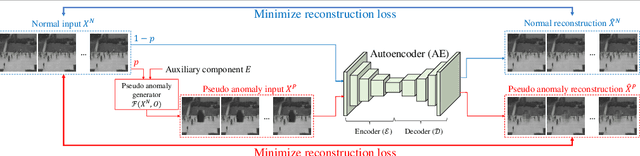

Abstract: Recently, anomaly scores have been formulated using the reconstruction loss of adversarially learned generators and/or the classification loss of discriminators. The unavailability of anomaly examples in the training data makes optimization of such networks challenging. Owing to the adversarial training, the performance of such models fluctuates drastically with each training step, making it difficult to halt the training at an optimal point. In the current study, we propose a robust anomaly detection framework that overcomes such instability by transforming the fundamental role of the discriminator from identifying real vs. fake data to distinguishing good vs. bad quality reconstructions. For this purpose, we propose a method that utilizes the current state as well as an old state of the same generator to create good and bad quality reconstruction examples. The discriminator is trained on these examples to detect the subtle distortions that are often present in the reconstructions of anomalous data. In addition, we propose an efficient generic criterion to stop the training of our model, ensuring elevated performance. Extensive experiments performed on six datasets across multiple domains, including image and video based anomaly detection, medical diagnosis, and network security, have demonstrated excellent performance of our approach.

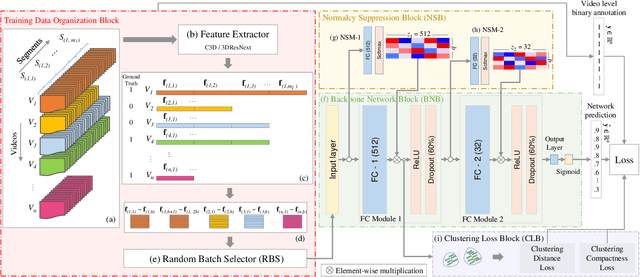

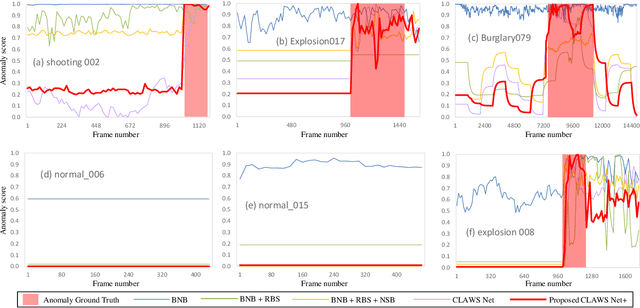

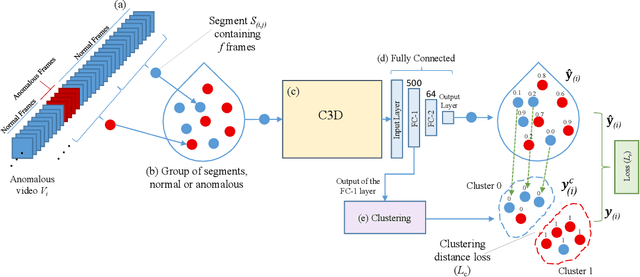

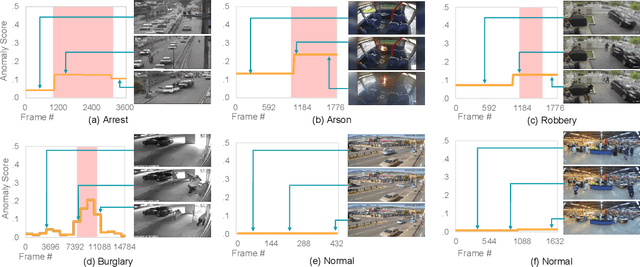

Clustering Aided Weakly Supervised Training to Detect Anomalous Events in Surveillance Videos

Mar 25, 2022

Abstract: Formulating learning systems for the detection of real-world anomalous events using only video-level labels is a challenging task, mainly due to the presence of noisy labels as well as the rare occurrence of anomalous events in the training data. We propose a weakly supervised anomaly detection system with multiple contributions, including a random batch selection mechanism to reduce inter-batch correlation and a normalcy suppression block which learns to minimize anomaly scores over normal regions of a video by utilizing the overall information available in a training batch. In addition, a clustering loss block is proposed to mitigate the label noise and to improve the representation learning for the anomalous and normal regions. This block encourages the backbone network to produce two distinct feature clusters representing normal and anomalous events. Extensive analysis of the proposed approach is provided using three popular anomaly detection datasets: UCF-Crime, ShanghaiTech, and UCSD Ped2. The experiments demonstrate the superior anomaly detection capability of our approach.

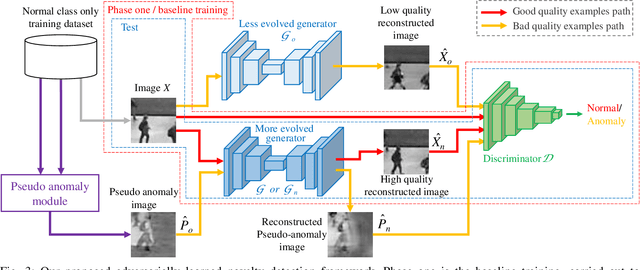

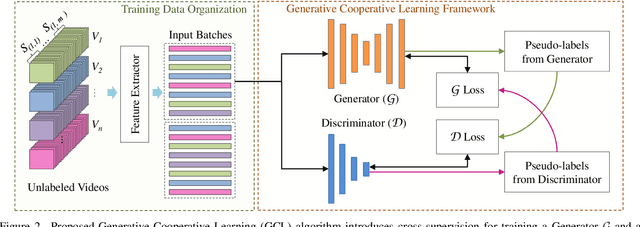

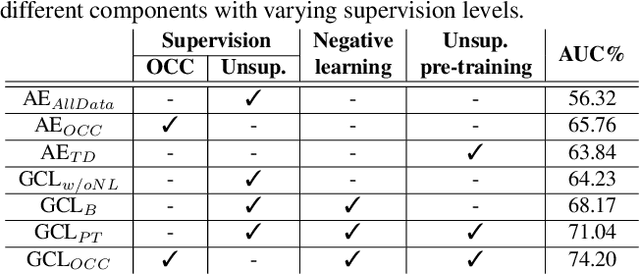

Generative Cooperative Learning for Unsupervised Video Anomaly Detection

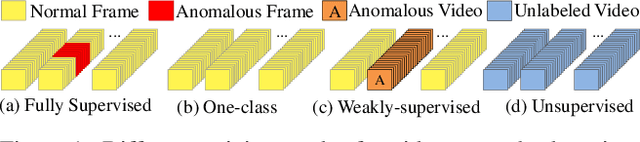

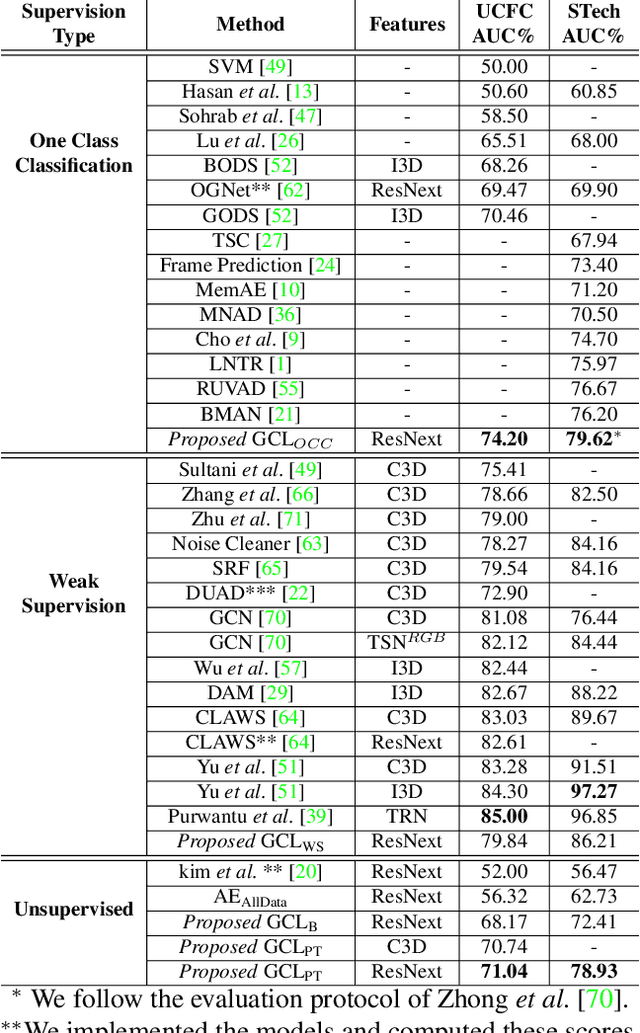

Mar 08, 2022

Abstract: Video anomaly detection is well investigated in weakly-supervised and one-class classification (OCC) settings. However, unsupervised video anomaly detection methods are quite sparse, likely because anomalies are less frequent in occurrence and usually not well-defined, which, when coupled with the absence of ground truth supervision, could adversely affect the performance of the learning algorithms. This problem is challenging yet rewarding, as solving it can completely eradicate the costs of obtaining laborious annotations and enable such systems to be deployed without human intervention. To this end, we propose a novel unsupervised Generative Cooperative Learning (GCL) approach for video anomaly detection that exploits the low frequency of anomalies towards building a cross-supervision between a generator and a discriminator. In essence, both networks get trained in a cooperative fashion, thereby allowing unsupervised learning. We conduct extensive experiments on two large-scale video anomaly detection datasets, UCF-Crime and ShanghaiTech. Consistent improvement over the existing state-of-the-art unsupervised and OCC methods corroborates the effectiveness of our approach.

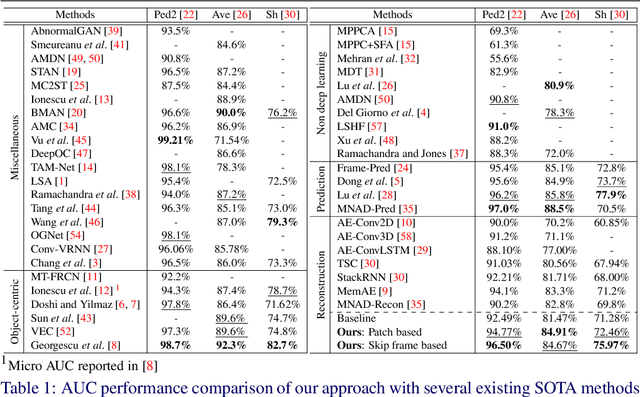

Learning Not to Reconstruct Anomalies

Oct 24, 2021

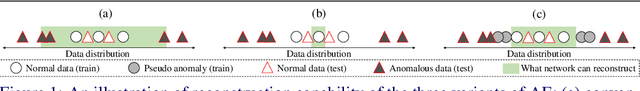

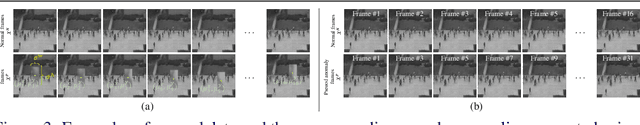

Abstract: Video anomaly detection is often seen as a one-class classification (OCC) problem due to the limited availability of anomaly examples. Typically, to tackle this problem, an autoencoder (AE) is trained to reconstruct the input, with a training set consisting only of normal data. At test time, the AE is then expected to reconstruct the normal data well while reconstructing the anomalous data poorly. However, several studies have shown that, even when trained with only normal data, AEs can often start reconstructing anomalies as well, which degrades the anomaly detection performance. To mitigate this problem, we propose a novel methodology to train AEs with the objective of reconstructing only normal data, regardless of the input (i.e., normal or abnormal). Since no real anomalies are available in the OCC setting, the training is assisted by pseudo anomalies that are generated by manipulating normal data to simulate the out-of-normal-data distribution. We additionally propose two ways to generate pseudo anomalies: patch based and skip frame based. Extensive experiments on three challenging video anomaly datasets demonstrate the effectiveness of our method in improving conventional AEs, achieving state-of-the-art performance.
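
The skip-frame idea named in the abstract above can be sketched as follows. This is an illustration under assumptions, not the paper's implementation: the function name `skip_frame_sequence`, the sequence length of 5, and the stride of 3 are hypothetical choices. A stride of 1 yields an ordinary (normal) clip; a larger stride yields a clip with unusually fast apparent motion, which serves as a pseudo anomaly.

```python
import numpy as np

def skip_frame_sequence(video, start, length, stride):
    """Return `length` frames from `video`, taking every `stride`-th frame from `start`."""
    idx = start + stride * np.arange(length)
    if idx[-1] >= len(video):
        raise ValueError("sequence runs past the end of the video")
    return video[idx]

# Toy video: 100 frames of 4x4 pixels, where frame t is filled with the value t.
video = np.arange(100, dtype=np.float32)[:, None, None] * np.ones((1, 4, 4), dtype=np.float32)

normal = skip_frame_sequence(video, start=10, length=5, stride=1)  # frames 10..14
pseudo = skip_frame_sequence(video, start=10, length=5, stride=3)  # frames 10, 13, 16, 19, 22
```

Both clips have the same shape, so they can be passed to the same AE without architectural changes.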

Synthetic Temporal Anomaly Guided End-to-End Video Anomaly Detection

Oct 19, 2021

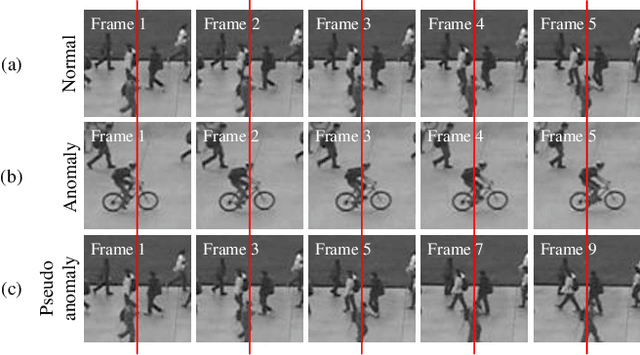

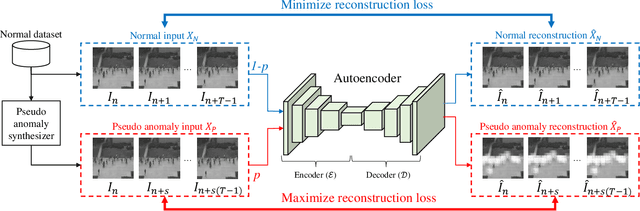

Abstract: Due to the limited availability of anomaly examples, video anomaly detection is often seen as a one-class classification (OCC) problem. A popular way to tackle this problem is by utilizing an autoencoder (AE) trained only on normal data. At test time, the AE is then expected to reconstruct the normal input well while reconstructing the anomalies poorly. However, several studies show that, even when trained with only normal data, AEs can often start reconstructing anomalies as well, which degrades their anomaly detection performance. To mitigate this, we propose a temporal pseudo anomaly synthesizer that generates fake anomalies using only normal data. An AE is then trained to maximize the reconstruction loss on pseudo anomalies while minimizing this loss on normal data. This way, the AE is encouraged to produce distinguishable reconstructions for normal and anomalous frames. Extensive experiments and analysis on three challenging video anomaly datasets demonstrate the effectiveness of our approach in improving basic AEs, achieving superiority over several existing state-of-the-art models.
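
The training objective described in the abstract above (minimize the reconstruction loss on normal data, maximize it on pseudo anomalies) can be sketched with a sign flip. This is a minimal sketch, not the paper's formulation: the names `mse` and `training_loss`, the choice of mean squared error, and the plain negation without any weighting or bounding term are all assumptions.

```python
import numpy as np

def mse(a, b):
    """Mean squared error between two arrays of the same shape."""
    return float(np.mean((a - b) ** 2))

def training_loss(recon, target, is_pseudo):
    """Loss to be minimized: +MSE for normal inputs, -MSE for pseudo anomalies.

    Minimizing the negated MSE is equivalent to maximizing the
    reconstruction error on pseudo anomalies.
    """
    loss = mse(recon, target)
    return -loss if is_pseudo else loss

target = np.zeros((2, 2))
recon = np.ones((2, 2))
loss_normal = training_loss(recon, target, is_pseudo=False)
loss_pseudo = training_loss(recon, target, is_pseudo=True)
```

In practice an unbounded negated term can dominate training, so a real implementation would likely need a weight or a cap on it; the abstract does not say which is used.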

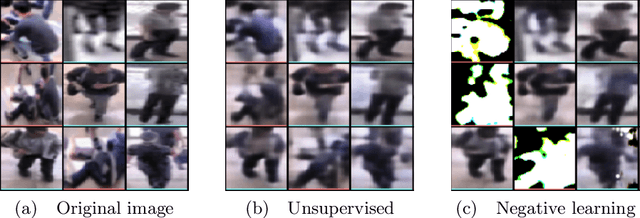

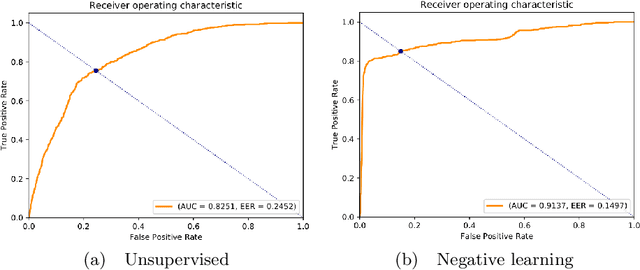

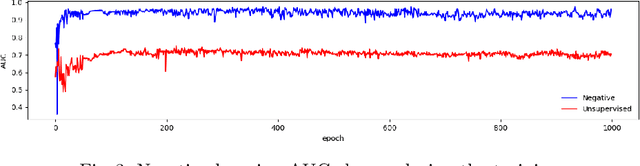

Deep Visual Anomaly detection with Negative Learning

May 24, 2021

Abstract: With the increase in the learning capability of deep convolution-based architectures, various applications of such models have been proposed over time. In the field of anomaly detection, improvements in deep learning opened new prospects of exploration for researchers who tried to automate the labor-intensive aspects of data collection. First, in terms of data collection, it is impossible to anticipate all the anomalies that might exist in a given environment. Second, even if we limit the possible anomalies, it is still hard to record all these scenarios for the sake of training a model. Third, even if we manage to record a significant amount of abnormal data, it is laborious to annotate this data at the pixel or even frame level. Various approaches address the problem by proposing one-class classification using generative models trained on only normal data, which is abundantly available and does not require significant human input. However, because these models are trained with only normal data, at test time they may often generate normal-looking output even when given abnormal data as input. This happens due to the hallucination characteristic of generative models. Moreover, these systems are designed not to use abnormal examples during training. In this paper, we propose anomaly detection with negative learning (ADNL), which employs the negative learning concept to enhance anomaly detection by utilizing, during training, a very small number of labeled anomaly examples compared with the normal data. The idea is to limit the reconstruction capability of a generative model using the small number of given anomaly examples. This way, the network not only learns to reconstruct normal data but also encloses the normal distribution far from the possible distribution of anomalies.

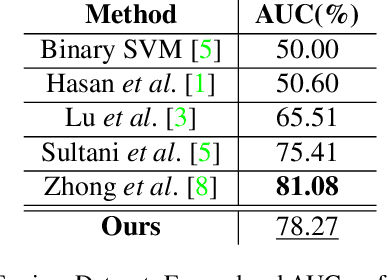

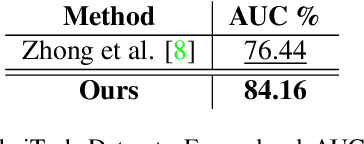

Cleaning Label Noise with Clusters for Minimally Supervised Anomaly Detection

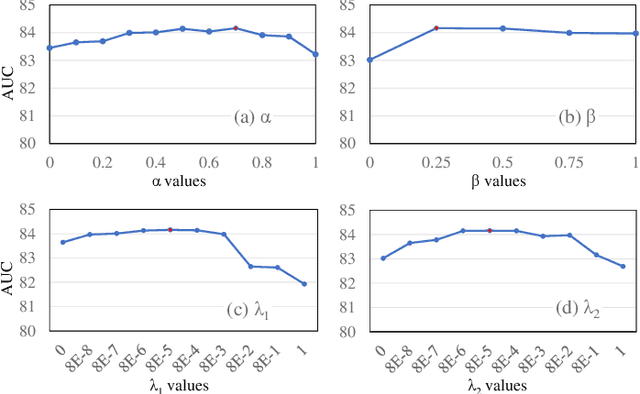

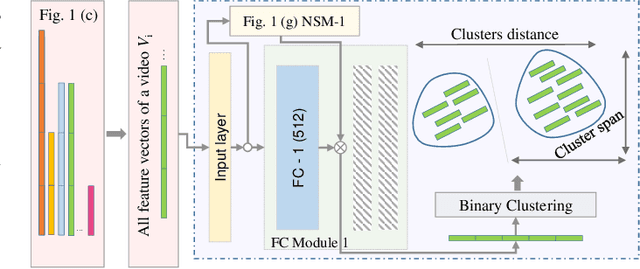

Apr 30, 2021

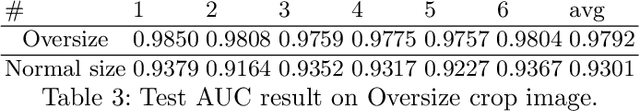

Abstract: Learning to detect real-world anomalous events using video-level annotations is a difficult task, mainly because of the noise present in the labels. A video labelled as anomalous may actually contain an anomaly for only a short duration, while the rest of the video is normal. In the current work, we formulate a weakly supervised anomaly detection method that is trained using only video-level labels. To this end, we propose to utilize binary clustering, which helps in mitigating the noise present in the labels of anomalous videos. Our formulation encourages both the main network and the clustering to complement each other in achieving the goal of weakly supervised training. The proposed method yields 78.27% and 84.16% frame-level AUC on the UCF-Crime and ShanghaiTech datasets, respectively, demonstrating its superiority over existing state-of-the-art algorithms.

* Presented in the CVPR20 Workshop Learning from Unlabeled Videos. An archival version of this research work, published in SPL, can be accessed at: https://ieeexplore.ieee.org/document/9204830. arXiv admin note: substantial text overlap with arXiv:2008.11887
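
The binary clustering mentioned in the abstract above can be illustrated with plain 2-means over per-segment features of a single video labelled anomalous. This is only a sketch of the general idea: the paper's clustering procedure, feature extractor, and the way clusters feed back into training are not specified here. The function name `two_means`, the deterministic initialization, and the synthetic 8-dimensional features are assumptions.

```python
import numpy as np

def two_means(features, iters=20):
    """Plain 2-means; returns a 0/1 cluster label for each row of `features`."""
    first = features[0]
    # Deterministic init: the first point and the point farthest from it.
    far = features[np.argmax(np.linalg.norm(features - first, axis=1))]
    centers = np.stack([first, far]).astype(float)
    for _ in range(iters):
        dists = np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        for k in range(2):
            if np.any(labels == k):
                centers[k] = features[labels == k].mean(axis=0)
    return labels

# Synthetic video: 28 normal-looking segments and 4 anomalous-looking ones.
rng = np.random.default_rng(0)
normal_like = rng.normal(0.0, 0.1, size=(28, 8))
anomalous_like = rng.normal(3.0, 0.1, size=(4, 8))
segments = np.concatenate([normal_like, anomalous_like])
labels = two_means(segments)
```

The video-level label says only that the video contains an anomaly somewhere; the two clusters give a segment-level guess at where, which is one way such label noise could be reduced.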
