Sergiy Bogomolov

Safe Reach Set Computation via Neural Barrier Certificates

Apr 29, 2024Abstract:We present a novel technique for online safety verification of autonomous systems, which performs reachability analysis efficiently for both bounded and unbounded horizons by employing neural barrier certificates. Our approach uses barrier certificates given by parameterized neural networks that depend on a given initial set, unsafe sets, and time horizon. Such networks are trained efficiently offline using system simulations sampled from regions of the state space. We then employ a meta-neural network to generalize the barrier certificates to state space regions that are outside the training set. These certificates are generated and validated online as sound over-approximations of the reachable states, thus either ensuring system safety or activating appropriate alternative actions in unsafe scenarios. We demonstrate our technique on case studies from linear models to nonlinear control-dependent models for online autonomous driving scenarios.

Instrumenting an SMT Solver to Solve Hybrid Network Reachability Problems

Sep 13, 2016

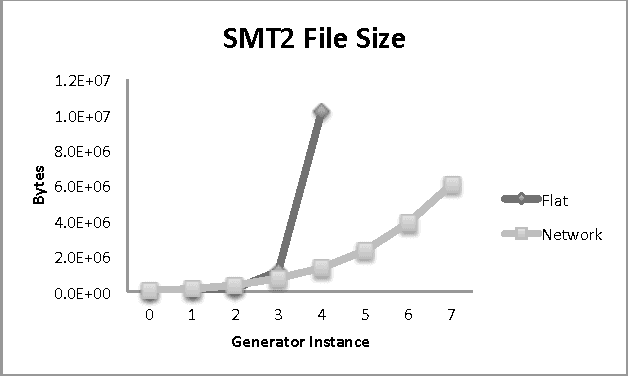

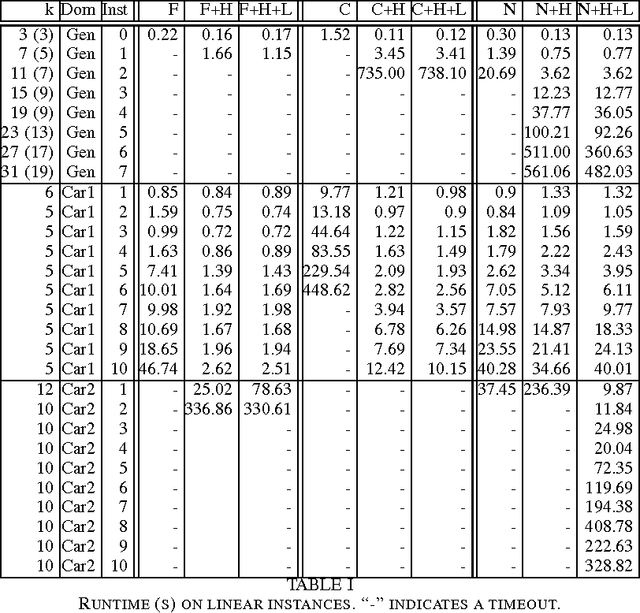

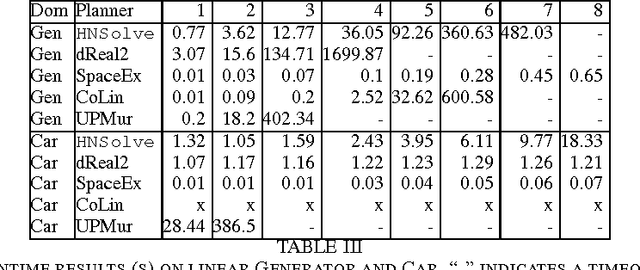

Abstract:PDDL+ planning has its semantics rooted in hybrid automata (HA) and recent work has shown that it can be modeled as a network of HAs. Addressing the complexity of nonlinear PDDL+ planning as HAs requires both space and time efficient reasoning. Unfortunately, existing solvers either do not address nonlinear dynamics or do not natively support networks of automata. We present a new algorithm, called HNSolve, which guides the variable selection of the dReal Satisfiability Modulo Theories (SMT) solver while reasoning about network encodings of nonlinear PDDL+ planning as HAs. HNSolve tightly integrates with dReal by solving a discrete abstraction of the HA network. HNSolve finds composite runs on the HA network that ignore continuous variables, but respect mode jumps and synchronization labels. HNSolve admissibly detects dead-ends in the discrete abstraction, and posts conflict clauses that prune the SMT solver's search. We evaluate the benefits of our HNSolve algorithm on PDDL+ benchmark problems and demonstrate its performance with respect to prior work.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge