Saurabh Bodhe

E2ESlack: An End-to-End Graph-Based Framework for Pre-Routing Slack Prediction

Jan 14, 2025

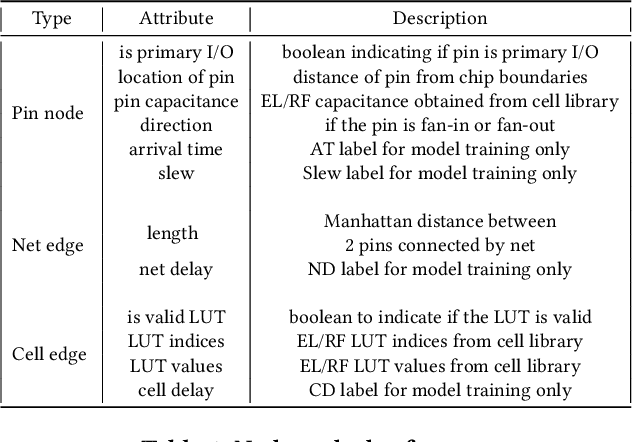

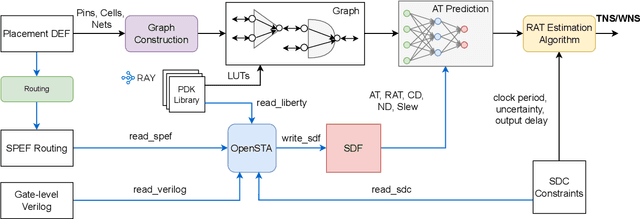

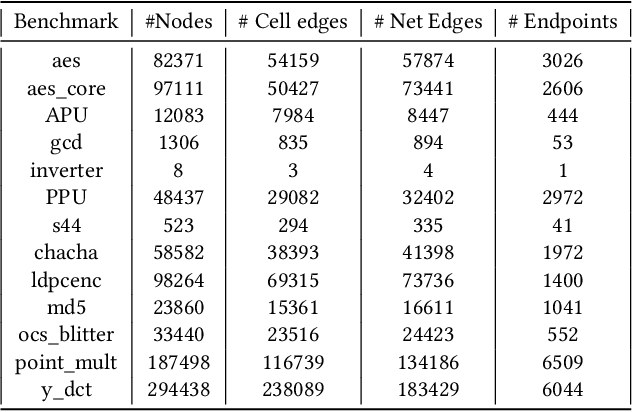

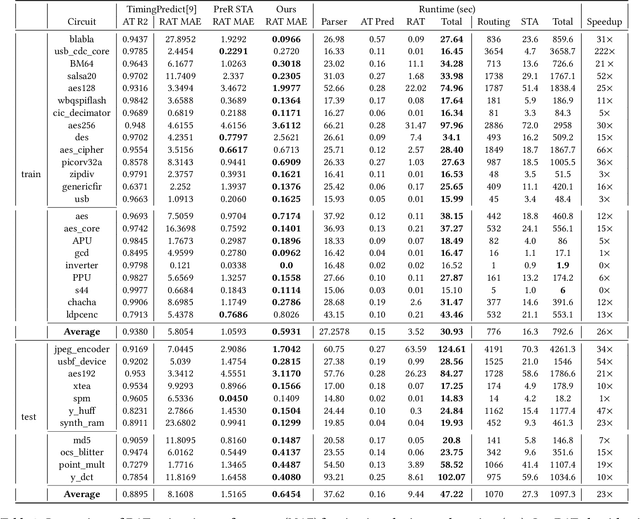

Abstract: Pre-routing slack prediction remains a critical area of research in Electronic Design Automation (EDA). Despite numerous machine learning-based approaches targeting this task, there is still no truly end-to-end framework that engineers can use to obtain total negative slack (TNS) and worst negative slack (WNS) metrics from raw circuit data at the placement stage. Existing works have demonstrated effectiveness in Arrival Time (AT) prediction but lack a mechanism for Required Arrival Time (RAT) prediction, which is essential for slack prediction and for obtaining TNS/WNS metrics. In this work, we propose E2ESlack, an end-to-end graph-based framework for pre-routing slack prediction. The framework includes a TimingParser that supports DEF, SDF and LIB files for feature extraction and graph construction, an arrival time prediction model, and a fast RAT estimation module. To the best of our knowledge, this is the first work capable of predicting path-level slacks at the pre-routing stage. We perform extensive experiments and demonstrate that our proposed RAT estimation method outperforms both the SOTA ML-based prediction method and the pre-routing STA tool. Additionally, the proposed E2ESlack framework achieves TNS/WNS values comparable to post-routing STA results while saving up to 23x runtime.
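To make the role of RAT concrete, the following is a minimal sketch of how slack, WNS, and TNS are conventionally derived once arrival and required arrival times are known at each timing endpoint. It is not the E2ESlack implementation: the function name `slack_metrics`, the endpoint names, and the timing values are hypothetical, and it assumes the common setup-analysis convention that slack = RAT - AT, WNS is the most negative endpoint slack (reported as 0 when nothing is violated), and TNS is the sum of all negative endpoint slacks.

```python
def slack_metrics(arrival, required):
    """Compute per-endpoint slack, WNS, and TNS.

    arrival, required: dicts mapping an endpoint name to its arrival time
    (AT) and required arrival time (RAT), in the same time unit.
    Assumed convention (setup analysis): slack = RAT - AT.
    """
    slacks = {pin: required[pin] - arrival[pin] for pin in arrival}
    # WNS: most negative slack, clamped to 0 when no endpoint is violated.
    wns = min(min(slacks.values(), default=0.0), 0.0)
    # TNS: sum of the negative slacks only.
    tns = sum(s for s in slacks.values() if s < 0)
    return slacks, wns, tns


# Hypothetical endpoint timings (ns), for illustration only.
arrival = {"ff1/D": 1.25, "ff2/D": 0.75, "ff3/D": 2.0}
required = {"ff1/D": 1.0, "ff2/D": 1.0, "ff3/D": 1.5}

slacks, wns, tns = slack_metrics(arrival, required)
print(slacks)  # per-endpoint slack
print(wns)     # worst negative slack
print(tns)     # total negative slack
```

Under these definitions, an accurate AT predictor alone is not enough: without a RAT value per endpoint there is no slack to aggregate, which is the gap the abstract describes.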

The Graph's Apprentice: Teaching an LLM Low Level Knowledge for Circuit Quality Estimation

Oct 30, 2024

Abstract: Logic synthesis is a crucial phase in the circuit design process, responsible for transforming hardware description language (HDL) designs into optimized netlists. However, traditional logic synthesis methods are computationally intensive, restricting their iterative use in refining chip designs. Recent advancements in large language models (LLMs), particularly those fine-tuned on programming languages, present a promising alternative. In this paper, we introduce VeriDistill, the first end-to-end machine learning model that directly processes raw Verilog code to predict circuit quality-of-result metrics. Our model employs a novel knowledge distillation method that transfers low-level circuit insights, represented as graphs, into the LLM-based predictor. Experiments show that VeriDistill outperforms state-of-the-art baselines on large-scale Verilog datasets and demonstrates robust performance when evaluated on out-of-distribution datasets.
