Sasa Misailovic

University of Illinois at Urbana-Champaign

Enforcing Temporal Constraints for LLM Agents

Dec 25, 2025Abstract:LLM-based agents are deployed in safety-critical applications, yet current guardrail systems fail to prevent violations of temporal safety policies, requirements that govern the ordering and sequencing of agent actions. For instance, agents may access sensitive data before authenticating users or process refunds to unauthorized payment methods, violations that require reasoning about sequences of action rather than an individual action. Existing guardrails rely on imprecise natural language instructions or post-hoc monitoring, and provide no formal guarantees that agents will satisfy temporal constraints. We present Agent-C, a novel framework that provides run-time guarantees ensuring LLM agents adhere to formal temporal safety properties. Agent-C introduces a domain-specific language for expressing temporal properties (e.g., authenticate before accessing data), translates specifications to first-order logic, and uses SMT solving to detect non-compliant agent actions during token generation. When the LLM attempts to generate a non-compliant tool call, Agent-C leverages constrained generation techniques to ensure that every action generated by the LLM complies with the specification, and to generate a compliant alternative to a non-compliant agent action. We evaluate Agent-C across two real-world applications: retail customer service and airline ticket reservation system, and multiple language models (open and closed-source). Our results demonstrate that Agent-C achieves perfect safety (100% conformance, 0% harm), while improving task utility compared to state-of-the-art guardrails and unrestricted agents. On SoTA closed-source models, Agent-C improves conformance (77.4% to 100% for Claude Sonnet 4.5 and 83.7% to 100% for GPT-5), while simultaneously increasing utility (71.8% to 75.2% and 66.1% to 70.6%, respectively), representing a new SoTA frontier for reliable agentic reasoning.

DINGO: Constrained Inference for Diffusion LLMs

May 29, 2025Abstract:Diffusion LLMs have emerged as a promising alternative to conventional autoregressive LLMs, offering significant potential for improved runtime efficiency. However, existing diffusion models lack the ability to provably enforce user-specified formal constraints, such as regular expressions, which makes them unreliable for tasks that require structured outputs, such as fixed-schema JSON generation. Unlike autoregressive models that generate tokens sequentially, diffusion LLMs predict a block of tokens in parallel. This parallelism makes traditional constrained decoding algorithms, which are designed for sequential token prediction, ineffective at preserving the true output distribution. To address this limitation, we propose DINGO, a dynamic programming-based constrained decoding strategy that is both efficient and provably distribution-preserving. DINGO enables sampling of output strings with the highest probability under the model's predicted distribution, while strictly satisfying any user-specified regular expression. On standard symbolic math and JSON generation benchmarks, DINGO achieves up to a 68 percentage point improvement over unconstrained inference

CRANE: Reasoning with constrained LLM generation

Feb 13, 2025Abstract:Code generation, symbolic math reasoning, and other tasks require LLMs to produce outputs that are both syntactically and semantically correct. Constrained LLM generation is a promising direction to enforce adherence to formal grammar, but prior works have empirically observed that strict enforcement of formal constraints often diminishes the reasoning capabilities of LLMs. In this work, we first provide a theoretical explanation for why constraining LLM outputs to very restrictive grammars that only allow syntactically valid final answers reduces the reasoning capabilities of the model. Second, we demonstrate that by augmenting the output grammar with carefully designed additional rules, it is always possible to preserve the reasoning capabilities of the LLM while ensuring syntactic and semantic correctness in its outputs. Building on these theoretical insights, we propose a reasoning-augmented constrained decoding algorithm, CRANE, which effectively balances the correctness of constrained generation with the flexibility of unconstrained generation. Experiments on multiple open-source LLMs and benchmarks show that CRANE significantly outperforms both state-of-the-art constrained decoding strategies and standard unconstrained decoding, showing up to 10% points accuracy improvement over baselines on challenging symbolic reasoning benchmarks GSM-symbolic and FOLIO.

ARQ: A Mixed-Precision Quantization Framework for Accurate and Certifiably Robust DNNs

Oct 31, 2024

Abstract:Mixed precision quantization has become an important technique for enabling the execution of deep neural networks (DNNs) on limited resource computing platforms. Traditional quantization methods have primarily concentrated on maintaining neural network accuracy, either ignoring the impact of quantization on the robustness of the network, or using only empirical techniques for improving robustness. In contrast, techniques for robustness certification, which can provide strong guarantees about the robustness of DNNs have not been used during quantization due to their high computation cost. This paper introduces ARQ, an innovative mixed-precision quantization method that not only preserves the clean accuracy of the smoothed classifiers but also maintains their certified robustness. ARQ uses reinforcement learning to find accurate and robust DNN quantization, while efficiently leveraging randomized smoothing, a popular class of statistical DNN verification algorithms, to guide the search process. We compare ARQ with multiple state-of-the-art quantization techniques on several DNN architectures commonly used in quantization studies: ResNet-20 on CIFAR-10, ResNet-50 on ImageNet, and MobileNetV2 on ImageNet. We demonstrate that ARQ consistently performs better than these baselines across all the benchmarks and the input perturbation levels. In many cases, the performance of ARQ quantized networks can reach that of the original DNN with floating-point weights, but with only 1.5% instructions.

IterGen: Iterative Structured LLM Generation

Oct 09, 2024

Abstract:Large Language Models (LLMs) are widely used for tasks such as natural language and code generation. Still, their outputs often suffer from issues like privacy violations, and semantically inaccurate code generation. Current libraries for LLM generation rely on left-to-right decoding without systematic support for backtracking, limiting the ability to correct or refine outputs mid-generation. To address this issue, we introduce IterGen, an intuitive framework for iterative, grammar-guided LLM generation that enables users to move both forward and backward within the generated output based on grammar symbols. By leveraging a symbol-to-position mapping, IterGen ensures efficient and structured generation while allowing for corrections during the process. We demonstrate IterGen's effectiveness in two important applications: reducing privacy leakage in LLM outputs and improving the accuracy of LLM-generated SQL queries. Our code is available at https://github.com/uiuc-arc/itergen

Is Watermarking LLM-Generated Code Robust?

Mar 24, 2024

Abstract:We present the first study of the robustness of existing watermarking techniques on Python code generated by large language models. Although existing works showed that watermarking can be robust for natural language, we show that it is easy to remove these watermarks on code by semantic-preserving transformations.

Improving LLM Code Generation with Grammar Augmentation

Mar 03, 2024Abstract:We present SynCode a novel framework for efficient and general syntactical decoding of code with large language models (LLMs). SynCode leverages the grammar of a programming language, utilizing an offline-constructed efficient lookup table called DFA mask store based on language grammar terminals. We demonstrate SynCode's soundness and completeness given the context-free grammar (CFG) of the programming language, presenting its ability to retain syntactically valid tokens while rejecting invalid ones. The framework seamlessly integrates with any language defined by CFG, as evidenced by experiments on CFGs for Python and Go. The results underscore the significant reduction of 96.07% of syntax errors achieved when SynCode is combined with state-of-the-art LLMs, showcasing its substantial impact on enhancing syntactical precision in code generation. Our code is available at https://github.com/uiuc-focal-lab/syncode.

Incremental Randomized Smoothing Certification

May 31, 2023Abstract:Randomized smoothing-based certification is an effective approach for obtaining robustness certificates of deep neural networks (DNNs) against adversarial attacks. This method constructs a smoothed DNN model and certifies its robustness through statistical sampling, but it is computationally expensive, especially when certifying with a large number of samples. Furthermore, when the smoothed model is modified (e.g., quantized or pruned), certification guarantees may not hold for the modified DNN, and recertifying from scratch can be prohibitively expensive. We present the first approach for incremental robustness certification for randomized smoothing, IRS. We show how to reuse the certification guarantees for the original smoothed model to certify an approximated model with very few samples. IRS significantly reduces the computational cost of certifying modified DNNs while maintaining strong robustness guarantees. We experimentally demonstrate the effectiveness of our approach, showing up to 3x certification speedup over the certification that applies randomized smoothing of the approximate model from scratch.

Incremental Verification of Neural Networks

Apr 04, 2023

Abstract:Complete verification of deep neural networks (DNNs) can exactly determine whether the DNN satisfies a desired trustworthy property (e.g., robustness, fairness) on an infinite set of inputs or not. Despite the tremendous progress to improve the scalability of complete verifiers over the years on individual DNNs, they are inherently inefficient when a deployed DNN is updated to improve its inference speed or accuracy. The inefficiency is because the expensive verifier needs to be run from scratch on the updated DNN. To improve efficiency, we propose a new, general framework for incremental and complete DNN verification based on the design of novel theory, data structure, and algorithms. Our contributions implemented in a tool named IVAN yield an overall geometric mean speedup of 2.4x for verifying challenging MNIST and CIFAR10 classifiers and a geometric mean speedup of 3.8x for the ACAS-XU classifiers over the state-of-the-art baselines.

Estimating Uncertainty of Autonomous Vehicle Systems with Generalized Polynomial Chaos

Aug 09, 2022

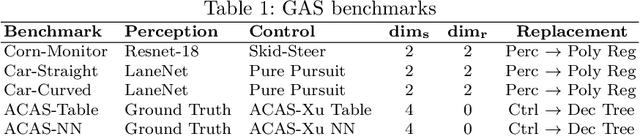

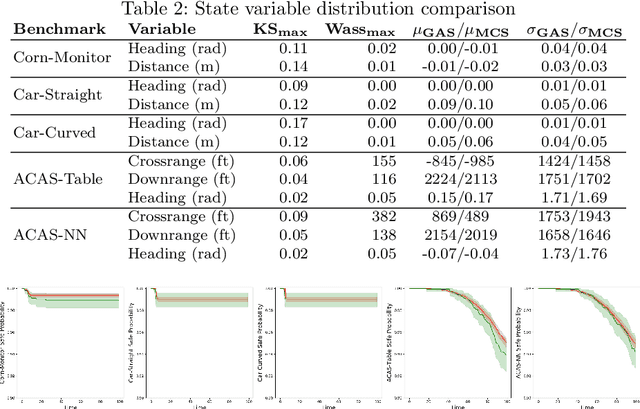

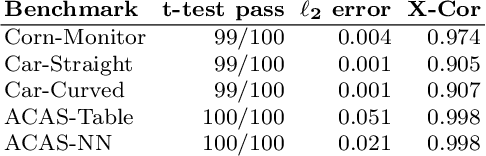

Abstract:Modern autonomous vehicle systems use complex perception and control components and must cope with uncertain data received from sensors. To estimate the probability that such vehicles remain in a safe state, developers often resort to time-consuming simulation methods. This paper presents an alternative methodology for analyzing autonomy pipelines in vehicular systems, based on Generalized Polynomial Chaos (GPC). We also present GAS, the first algorithm for creating and using GPC models of complex vehicle systems. GAS replaces complex perception components with a perception model to reduce complexity. Then, it constructs the GPC model and uses it for estimating state distribution and/or probability of entering an unsafe state. We evaluate GAS on five scenarios used in crop management vehicles, self driving cars, and aerial drones - each system uses at least one complex perception or control component. We show that GAS calculates state distributions that closely match those produced by Monte Carlo Simulation, while also providing 2.3x-3.0x speedups.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge