Sannara Ek

FedAli: Personalized Federated Learning with Aligned Prototypes through Optimal Transport

Nov 15, 2024

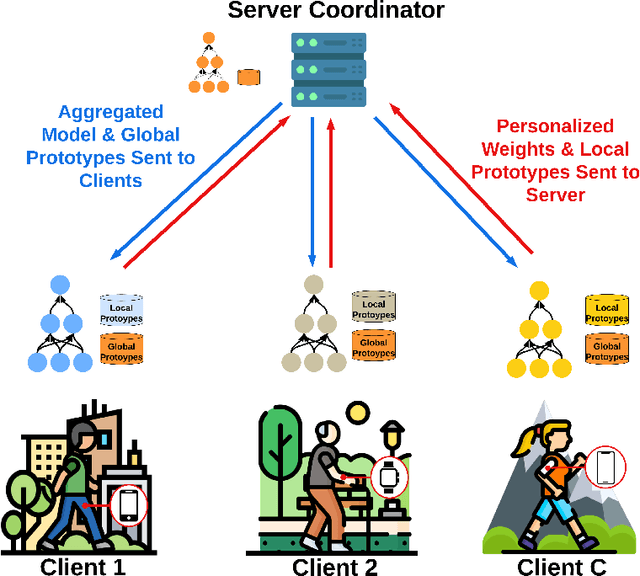

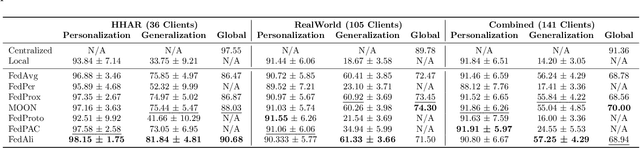

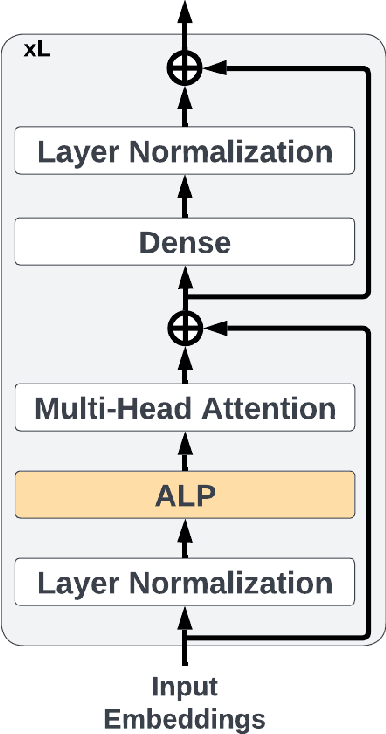

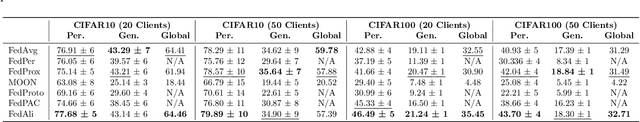

Abstract: Federated Learning (FL) enables collaborative, personalized model training across multiple devices without sharing raw data, making it ideal for pervasive computing applications that optimize user-centric performance in diverse environments. However, data heterogeneity among clients poses a significant challenge, leading to inconsistencies among trained client models and reduced performance. To address this, we introduce the Alignment with Prototypes (ALP) layers, which align incoming embeddings closer to learnable prototypes through an optimal transport plan. During local training, the ALP layer updates local prototypes and aligns embeddings toward global prototypes aggregated from all clients using our novel FL framework, Federated Alignment (FedAli). At inference time, embeddings are guided toward local prototypes to better reflect the client's local data distribution. We evaluate FedAli on heterogeneous sensor-based human activity recognition and vision benchmark datasets, demonstrating that it outperforms existing FL strategies. We publicly release our source code to facilitate reproducibility and further research.
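As a rough illustration of what "aligning embeddings to prototypes through an optimal transport plan" can look like, the sketch below computes an entropy-regularized transport plan with Sinkhorn iterations and then moves each embedding to the plan-weighted mean of the prototypes. This is not the released FedAli code: the function names `sinkhorn_plan` and `align_to_prototypes`, the uniform marginals, the squared Euclidean cost, the value of `epsilon`, and the barycentric projection step are all assumptions made for the example.

```python
import math

def sinkhorn_plan(cost, epsilon=0.5, n_iters=100):
    """Entropy-regularized transport plan between uniform marginals (assumed setup)."""
    n, m = len(cost), len(cost[0])
    kernel = [[math.exp(-c / epsilon) for c in row] for row in cost]
    a, b = [1.0 / n] * n, [1.0 / m] * m   # uniform mass on embeddings and prototypes
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(kernel[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]

def align_to_prototypes(embeddings, prototypes, epsilon=0.5):
    """Hypothetical alignment step: project each embedding onto the prototypes."""
    # Squared Euclidean cost between every embedding and every prototype.
    cost = [[sum((x - p) ** 2 for x, p in zip(emb, proto)) for proto in prototypes]
            for emb in embeddings]
    plan = sinkhorn_plan(cost, epsilon)
    dim = len(prototypes[0])
    aligned = []
    for row in plan:
        mass = sum(row)
        # Barycentric projection: plan-weighted mean of the prototypes.
        aligned.append([sum(w * proto[d] for w, proto in zip(row, prototypes)) / mass
                        for d in range(dim)])
    return plan, aligned

embeddings = [[0.0, 0.0], [1.0, 1.0]]
prototypes = [[0.0, 0.1], [1.0, 0.9]]
plan, aligned = align_to_prototypes(embeddings, prototypes)
```

In this toy case each embedding sends most of its mass to its nearest prototype, so the aligned vectors land close to those prototypes. A smaller `epsilon` sharpens the assignment, at the cost of numerical underflow in `math.exp` for large costs.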

Combining Public Human Activity Recognition Datasets to Mitigate Labeled Data Scarcity

Jun 23, 2023

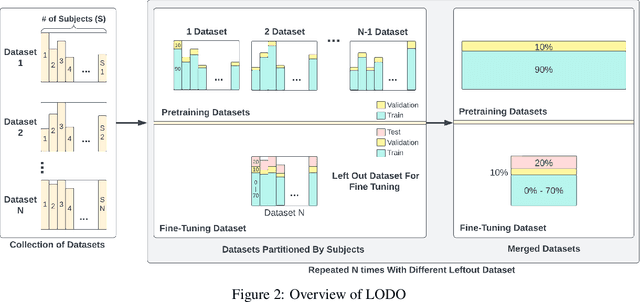

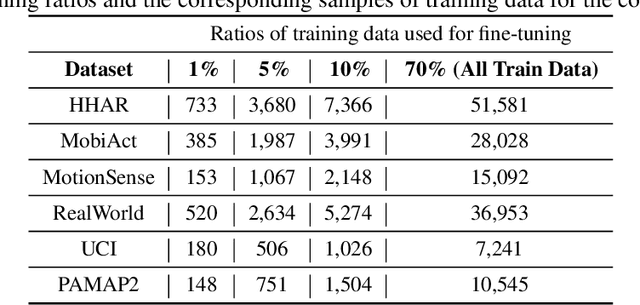

Abstract: The use of supervised learning for Human Activity Recognition (HAR) on mobile devices leads to strong classification performance. Such an approach, however, requires large amounts of labeled data, both for the initial training of the models and for their customization on specific clients (whose data often differ greatly from the training data). Such data are impractical to obtain because annotation is costly, intrusive, and time-consuming. Moreover, even with a significant amount of labeled data, models deployed on heterogeneous clients have difficulty generalizing to unseen data. Other domains, like Computer Vision or Natural Language Processing, have proposed the notion of pre-trained models, leveraging large corpora, to reduce the need for annotated data and better manage heterogeneity. This promising approach has not been implemented in the HAR domain so far because of the lack of public datasets of sufficient size. In this paper, we propose a novel strategy to combine publicly available datasets with the goal of learning a generalized HAR model that can be fine-tuned using a limited amount of labeled data on an unseen target domain. Our experimental evaluation, which covers several state-of-the-art neural network architectures, shows that combining public datasets can significantly reduce the number of labeled samples required to achieve satisfactory performance on an unseen target domain.

Evaluation and comparison of federated learning algorithms for Human Activity Recognition on smartphones

Oct 30, 2022

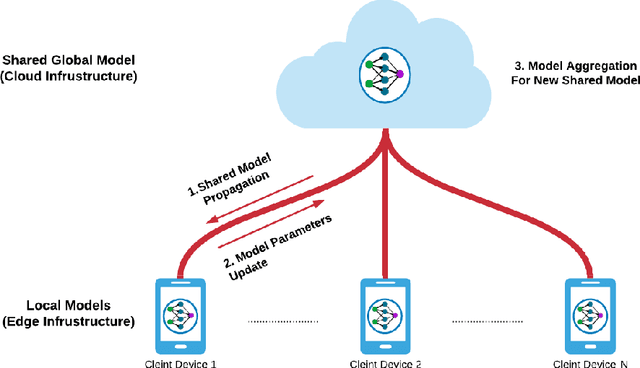

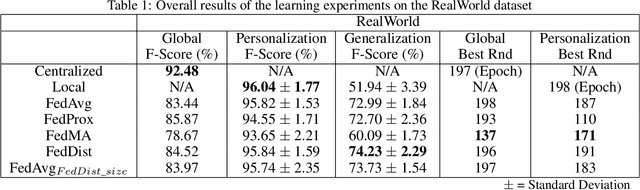

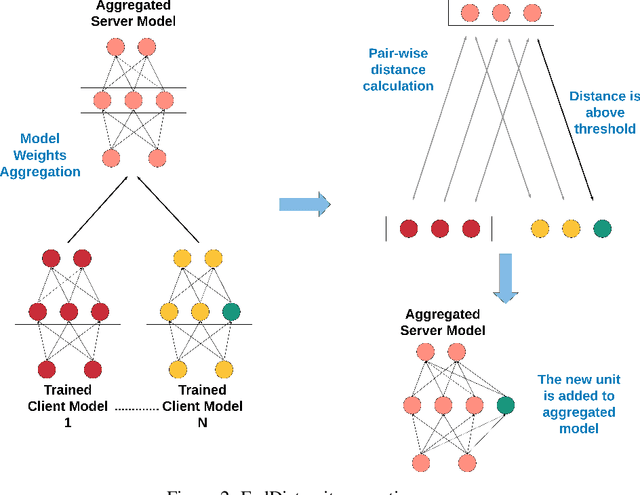

Abstract: Pervasive computing promotes the integration of smart devices in our living spaces to develop services providing assistance to people. Such smart devices are increasingly relying on cloud-based Machine Learning, which raises questions in terms of security (data privacy), reliance (latency), and communication costs. In this context, Federated Learning (FL) has been introduced as a new machine learning paradigm enhancing the use of local devices. At the server level, FL aggregates models learned locally on distributed clients to obtain a more general model. In this way, no private data is sent over the network, and the communication cost is reduced. However, the most popular federated learning algorithms have been shown to be poorly suited to some highly heterogeneous pervasive computing environments. In this paper, we propose a new FL algorithm, termed FedDist, which can modify models (here, deep neural networks) during training by identifying dissimilarities between neurons among the clients. This makes it possible to account for clients' specificity without impairing generalization. FedDist is evaluated against three state-of-the-art federated learning algorithms on three large heterogeneous mobile Human Activity Recognition datasets. Results show the ability of FedDist to adapt to heterogeneous data and the capability of FL to deal with asynchronous situations.

* arXiv admin note: substantial text overlap with arXiv:2110.10223

Federated Self-Supervised Learning in Heterogeneous Settings: Limits of a Baseline Approach on HAR

Jul 17, 2022

Abstract: Federated Learning is a new machine learning paradigm dealing with distributed model learning on independent devices. One of the many advantages of federated learning is that training data stay on devices (such as smartphones), and only learned models are shared with a centralized server. In the case of supervised learning, labeling is entrusted to the clients. However, acquiring such labels can be prohibitively expensive and error-prone for many tasks, such as human activity recognition. Hence, a wealth of data remains unlabelled and unexploited. Most existing federated learning approaches focus on supervised learning and have largely ignored this mass of unlabelled data. Furthermore, it is unclear whether standard federated learning approaches are suited to self-supervised learning. The few studies that have dealt with the problem have limited themselves to the favorable situation of homogeneous datasets. This work lays the groundwork for a reference evaluation of federated learning with Semi-Supervised Learning in a realistic setting. We show that a standard lightweight autoencoder and standard Federated Averaging fail to learn a robust representation for Human Activity Recognition with several realistic heterogeneous datasets. These findings advocate for a more intensive research effort in Federated Self-Supervised Learning to exploit the mass of heterogeneous unlabelled data present on mobile devices.

A Federated Learning Aggregation Algorithm for Pervasive Computing: Evaluation and Comparison

Oct 19, 2021

Abstract: Pervasive computing promotes the installation of connected devices in our living spaces in order to provide services. Two major developments have gained significant momentum recently: an advanced use of edge resources and the integration of machine learning techniques for engineering applications. This evolution raises major challenges, in particular related to the appropriate distribution of computing elements along an edge-to-cloud continuum. In this regard, Federated Learning has recently been proposed for distributed model training at the edge. The principle of this approach is to aggregate models learned on distributed clients in order to obtain a new, more general model. The resulting model is then redistributed to clients for further training. To date, the most popular federated learning algorithm uses coordinate-wise averaging of the model parameters for aggregation. However, it has been shown that this method is poorly suited to heterogeneous environments where data is not independently and identically distributed (non-iid). This corresponds directly to some pervasive computing scenarios where heterogeneity of devices and users challenges machine learning with the double objective of generalization and personalization. In this paper, we propose a novel aggregation algorithm, termed FedDist, which is able to modify its model architecture (here, deep neural networks) by identifying dissimilarities between specific neurons amongst the clients. This makes it possible to account for clients' specificity without impairing generalization. Furthermore, we define a complete method to evaluate federated learning in a realistic way, taking generalization and personalization into account. Using this method, FedDist is extensively tested and compared with three state-of-the-art federated learning algorithms on the pervasive domain of Human Activity Recognition with smartphones.
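The coordinate-wise averaging mentioned in this abstract can be sketched as follows. This is a minimal illustration of Federated Averaging style aggregation, not the FedDist algorithm: the function name `federated_average`, the flat-list representation of model parameters, and the weighting by local sample count are assumptions made for the example.

```python
def federated_average(client_params, client_sizes):
    """Coordinate-wise average of client parameters, weighted by local sample count."""
    total = sum(client_sizes)
    n_params = len(client_params[0])
    averaged = [0.0] * n_params
    for params, size in zip(client_params, client_sizes):
        if len(params) != n_params:
            raise ValueError("all clients must share the same model architecture")
        for i, value in enumerate(params):
            # Each coordinate is averaged independently of all the others.
            averaged[i] += (size / total) * value
    return averaged

# Two hypothetical clients with flattened parameter vectors and 100 / 300 samples.
client_params = [[1.0, 2.0, 0.0], [3.0, 4.0, 1.0]]
client_sizes = [100, 300]
global_params = federated_average(client_params, client_sizes)
```

Because each coordinate is averaged in isolation, the scheme implicitly assumes that parameter `i` plays the same role on every client. With non-iid data that assumption can fail, which is the limitation the abstract points to.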
