Sangjun Han

Data Intelligence Laboratory, LG AI Research

Detail Loss in Super-Resolution Models Based on the Laplacian Pyramid and Repeated Upscaling and Downscaling Process

Jan 14, 2026

Abstract: With advances in artificial intelligence, image processing has gained significant interest. Image super-resolution is a vital technology closely related to real-world applications, as it enhances the quality of existing images. Since enhancing fine details is crucial for the super-resolution task, pixels that contribute to high-frequency information should be emphasized. This paper proposes two methods to enhance high-frequency details in super-resolution images: a Laplacian pyramid-based detail loss and a repeated upscaling and downscaling process. The total loss, which includes our detail loss, guides the model to generate and control the super-resolution image and the detail image separately. This approach allows the model to focus more effectively on high-frequency components, resulting in improved super-resolution images. Additionally, repeated upscaling and downscaling amplifies the effectiveness of the detail loss by extracting diverse information from multiple low-resolution features. We conduct two types of experiments. First, we design a CNN-based model incorporating our methods. This model achieves state-of-the-art results, surpassing all currently available CNN-based and even some attention-based models. Second, we apply our methods to existing attention-based models on a small scale. In all our experiments, attention-based models augmented with our detail loss show improvements over the originals. These results demonstrate that our approaches effectively enhance super-resolution images across different model structures.
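To make the idea of a Laplacian pyramid-based detail loss concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: the 2x2 average pooling, the nearest-neighbour upsampling, the L1 distance, and the `detail_weight` parameter are all assumptions chosen for brevity, and the abstract does not state how the model's detail image is produced or weighted. Images are assumed to be lists of rows with even height and width.

```python
def downsample(img):
    """Halve height and width by averaging each 2x2 block."""
    h, w = len(img), len(img[0])
    return [
        [(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
         for c in range(0, w, 2)]
        for r in range(0, h, 2)
    ]


def upsample(img):
    """Double height and width by nearest-neighbour repetition."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def detail(img):
    """One Laplacian-pyramid detail band: the image minus its down-then-up copy."""
    coarse = upsample(downsample(img))
    return [[a - b for a, b in zip(r1, r2)] for r1, r2 in zip(img, coarse)]


def l1(a, b):
    """Mean absolute difference between two equally sized images."""
    n = len(a) * len(a[0])
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n


def total_loss(sr, predicted_detail, hr, detail_weight=1.0):
    """Pixel loss on the super-resolved image plus a weighted loss between the
    predicted detail image and the detail band of the ground truth.
    (The weighting scheme is hypothetical.)"""
    return l1(sr, hr) + detail_weight * l1(predicted_detail, detail(hr))
```

For a flat image the detail band is all zeros, so the second term only penalizes errors where the ground truth has high-frequency content, which is the intuition behind emphasizing detail separately from the pixel loss.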

* Accepted for publication in IET Image Processing. This is the authors' final accepted manuscript.

Adaptive Information Routing for Multimodal Time Series Forecasting

Dec 23, 2025

Abstract: Time series forecasting is a critical task for artificial intelligence with numerous real-world applications. Traditional approaches primarily rely on historical time series data to predict future values. However, in practical scenarios, this is often insufficient for accurate predictions due to the limited information available. To address this challenge, multimodal time series forecasting methods, which incorporate additional data modalities (mainly text data) alongside time series data, have been explored. In this work, we introduce the Adaptive Information Routing (AIR) framework, a novel approach for multimodal time series forecasting. Unlike existing methods that treat text data on par with time series data as interchangeable auxiliary features for forecasting, AIR leverages text information to dynamically guide the time series model by controlling how and to what extent multivariate time series information should be combined. We also present a text-refinement pipeline that employs a large language model to convert raw text data into a form suitable for multimodal forecasting, and we introduce a benchmark that facilitates multimodal forecasting experiments based on this pipeline. Experimental results on real-world market data, such as crude oil prices and exchange rates, demonstrate that AIR effectively modulates the behavior of the time series model using textual inputs, significantly enhancing forecasting accuracy in various time series forecasting tasks.
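The idea of letting text control how multivariate information is combined can be illustrated with a toy gating function. This is only a sketch under strong assumptions, not the AIR architecture: the function `route`, the linear scoring of a text embedding, and the softmax gate are all hypothetical choices made to show the general pattern of text-conditioned mixing.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(series_features, text_embedding, routing_weights):
    """Mix per-variable feature vectors using gates computed from text.

    series_features: one feature vector per time series variable.
    routing_weights: one weight vector per variable, each the same length
                     as text_embedding (hypothetical learned parameters).
    Returns the mixed feature vector and the gates that produced it.
    """
    # Score each variable by a dot product between its weights and the text.
    scores = [sum(w * t for w, t in zip(row, text_embedding))
              for row in routing_weights]
    gates = softmax(scores)
    dim = len(series_features[0])
    mixed = [sum(g * feat[d] for g, feat in zip(gates, series_features))
             for d in range(dim)]
    return mixed, gates
```

With an all-zero text embedding the gates are uniform, so every variable contributes equally; a text embedding that aligns with one variable's routing weights shifts the mixture toward that variable.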

Simplifying Formal Proof-Generating Models with ChatGPT and Basic Searching Techniques

Feb 05, 2025

Abstract: The challenge of formal proof generation has a rich history, but with modern techniques, we may finally be at the stage of making actual progress in real-life mathematical problems. This paper explores the integration of ChatGPT and basic searching techniques to simplify generating formal proofs, with a particular focus on the miniF2F dataset. We demonstrate how combining a large language model like ChatGPT with a formal language such as Lean, which has the added advantage of being verifiable, enhances the efficiency and accessibility of formal proof generation. Despite its simplicity, our best-performing Lean-based model surpasses all known benchmarks with a 31.15% pass rate. We extend our experiments to include other datasets and employ alternative language models, showcasing our models' comparable performance in diverse settings and allowing for a more nuanced analysis of our results. Our findings offer insights into AI-assisted formal proof generation, suggesting a promising direction for future research in formal mathematical proof.

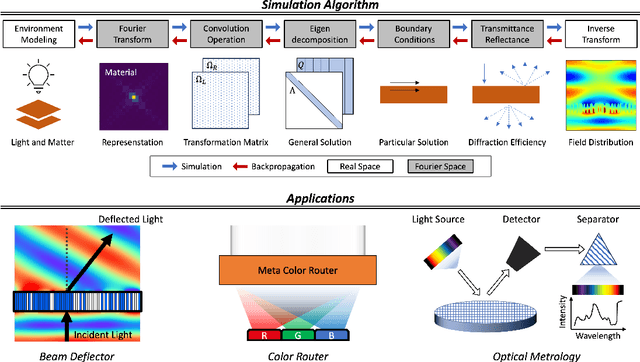

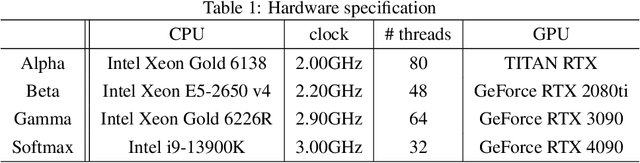

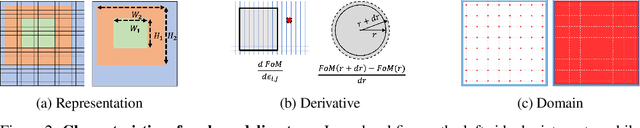

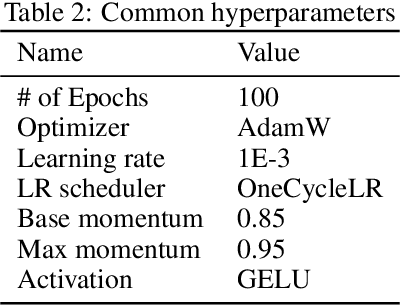

Meent: Differentiable Electromagnetic Simulator for Machine Learning

Jun 11, 2024

Abstract: Electromagnetic (EM) simulation plays a crucial role in analyzing and designing devices with sub-wavelength scale structures such as solar cells, semiconductor devices, image sensors, future displays, and integrated photonic devices. Specifically, optics problems such as estimating semiconductor device structures and designing nanophotonic devices provide intriguing research topics with far-reaching real-world impact. Traditional algorithms for such tasks require iteratively refining parameters through simulations, which often yields sub-optimal results due to the high computational cost of both the algorithms and EM simulations. Machine learning (ML) has emerged as a promising candidate to mitigate these challenges, and the optics research community has increasingly adopted ML algorithms to obtain results surpassing classical methods across various tasks. To foster a synergistic collaboration between the optics and ML communities, it is essential to have EM simulation software that is user-friendly for both research communities. To this end, we present Meent, an EM simulation software that employs rigorous coupled-wave analysis (RCWA). Developed in Python and equipped with automatic differentiation (AD) capabilities, Meent serves as a versatile platform for integrating ML into optics research and vice versa. To demonstrate its utility as a research platform, we present three applications of Meent: 1) generating a dataset for training a neural operator, 2) serving as an environment for the reinforcement learning of nanophotonic device optimization, and 3) providing a solution for inverse problems with gradient-based optimizers. These applications highlight Meent's potential to advance both EM simulation and ML methodologies. The code is available at https://github.com/kc-ml2/meent with the MIT license to promote the cross-pollination of ideas among academic researchers and industry practitioners.

Can We Utilize Pre-trained Language Models within Causal Discovery Algorithms?

Nov 19, 2023

Abstract: Scaling laws have brought pre-trained language models (PLMs) into the field of causal reasoning. Causal reasoning by PLMs relies solely on text-based descriptions, in contrast to causal discovery, which aims to determine the causal relationships between variables from data. Recent research has explored a method that mimics causal discovery by aggregating the outcomes of repeated causal reasoning, achieved through specifically designed prompts. It highlights the usefulness of PLMs in discovering cause and effect, which is often limited by a lack of data, especially when dealing with multiple variables. Conversely, PLMs do not analyze data and are highly dependent on prompt design, which is a crucial limitation for using them directly in causal discovery. Accordingly, PLM-based causal reasoning depends heavily on the prompt design and carries the risk of overconfidence and false predictions in determining causal relationships. In this paper, we empirically demonstrate the aforementioned limitations of PLM-based causal reasoning through experiments on physics-inspired synthetic data. Then, we propose a new framework that integrates prior knowledge obtained from a PLM with a causal discovery algorithm. This is accomplished by initializing an adjacency matrix for causal discovery and incorporating regularization using prior knowledge. Our proposed framework not only demonstrates improved performance through the integration of PLMs and causal discovery but also suggests how to leverage PLM-extracted prior knowledge with existing causal discovery algorithms.
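The two mechanisms named in the abstract, initializing the adjacency matrix from prior knowledge and regularizing toward it, can be sketched as follows. This is an illustrative assumption rather than the paper's formulation: the squared-difference penalty, the `strength` parameter, and the function names are hypothetical, and `data_fit` stands in for whatever score the underlying causal discovery algorithm computes.

```python
def init_adjacency(prior):
    """Start the search from a copy of the PLM-derived prior matrix.

    prior[i][j] is assumed to be the prior belief (0 to 1) that
    variable i causes variable j.
    """
    return [row[:] for row in prior]


def prior_penalty(adj, prior, strength=0.1):
    """Penalize the squared deviation of the current adjacency matrix
    from the prior (one of several plausible regularizers)."""
    return strength * sum(
        (a - p) ** 2
        for row_a, row_p in zip(adj, prior)
        for a, p in zip(row_a, row_p)
    )


def regularized_objective(data_fit, adj, prior, strength=0.1):
    """Combine a data-driven score (lower is better) with the prior penalty."""
    return data_fit + prior_penalty(adj, prior, strength)
```

Because the penalty is zero at initialization and grows only as the data pulls the matrix away from the prior, the prior acts as a soft constraint that the data can override, which is one way to limit the impact of overconfident PLM predictions.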

Systematic Analysis of Music Representations from BERT

Jun 06, 2023

Abstract: There have been numerous attempts to represent raw data as numerical vectors that effectively capture semantic and contextual information. However, in the field of symbolic music, previous works have attempted to validate their music embeddings by observing the performance improvement of various fine-tuning tasks. In this work, we directly analyze embeddings from BERT and BERT with contrastive learning trained on bar-level MIDI, inspecting their musical information that can be obtained from MIDI events. We observe that the embeddings exhibit distinct characteristics of information depending on the contrastive objectives and the choice of layers. Our code is available at https://github.com/sjhan91/MusicBERT.

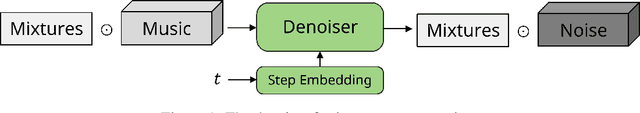

Instrument Separation of Symbolic Music by Explicitly Guided Diffusion Model

Sep 05, 2022

Abstract: Similar to colorization in computer vision, instrument separation assigns instrument labels (e.g., piano, guitar) to notes from unlabeled mixtures that contain only performance information. To address the problem, we adopt diffusion models and explicitly guide them to preserve consistency between mixtures and music. The quantitative results show that our proposed model can generate high-fidelity samples for multitrack symbolic music with creativity.

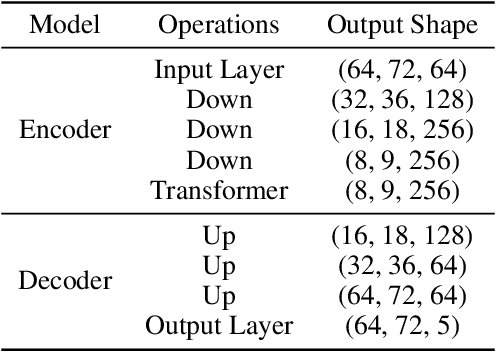

Laplacian Pyramid-like Autoencoder

Aug 26, 2022

Abstract: In this paper, we develop the Laplacian pyramid-like autoencoder (LPAE) by incorporating the Laplacian pyramid (LP) concept, which is widely used for image analysis in signal processing. LPAE decomposes an image into an approximation image and a detail image in the encoder part and then tries to reconstruct the original image in the decoder part using the two components. We use LPAE for experiments on classification and super-resolution. Using the detail image and the smaller-sized approximation image as inputs to a classification network, our LPAE makes the model lighter. Moreover, we show that the performance of the connected classification networks remains substantially high. For super-resolution, we show that the decoder part produces a high-quality reconstructed image when it is structured to resemble the LP. Consequently, LPAE improves the original results by combining the decoder part of the autoencoder with the super-resolution network.

* 20 pages, 3 figures, 5 tables, Science and Information Conference 2022, Intelligent Computing

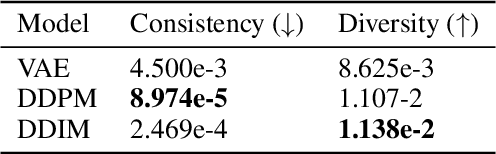

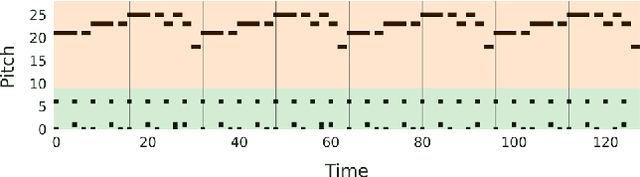

Symbolic Music Loop Generation with Neural Discrete Representations

Aug 11, 2022

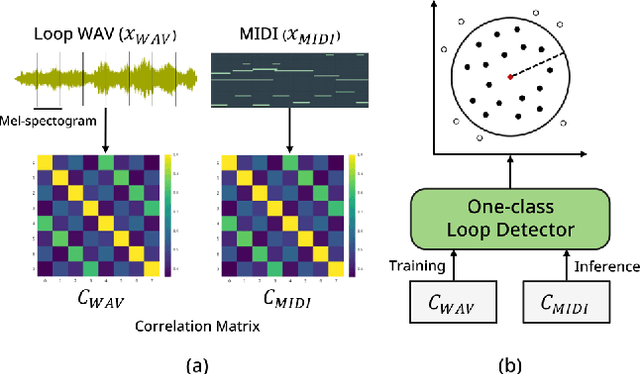

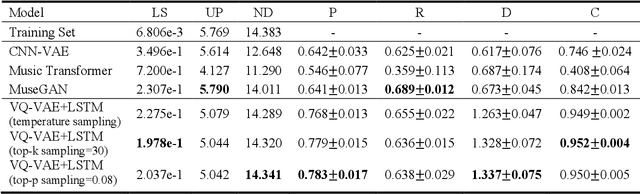

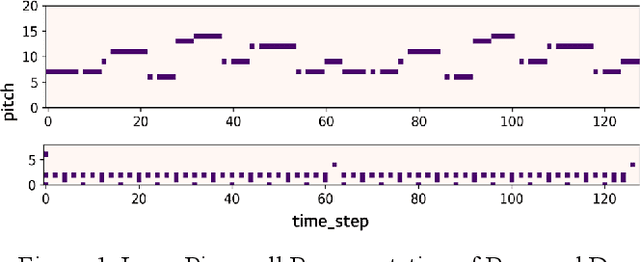

Abstract: Since most music has repetitive structures, from motifs to phrases, repeating musical ideas can be a basic operation for music composition. The basic block that we focus on is conceptualized as loops, which are essential ingredients of music. Furthermore, meaningful note patterns can be formed in a finite space, so it is sufficient to represent them with combinations of discrete symbols, as done in other domains. In this work, we propose symbolic music loop generation via learning discrete representations. We first extract loops from MIDI datasets using a loop detector and then train an autoregressive model on the discrete latent codes of the extracted loops. We show that our model outperforms well-known music generative models in terms of both fidelity and diversity, evaluating on random space. Our code and supplementary materials are available at https://github.com/sjhan91/Loop_VQVAE_Official.

Symbolic Music Loop Generation with VQ-VAE

Nov 15, 2021

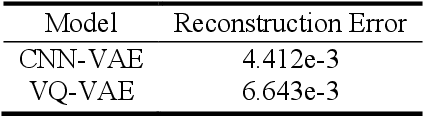

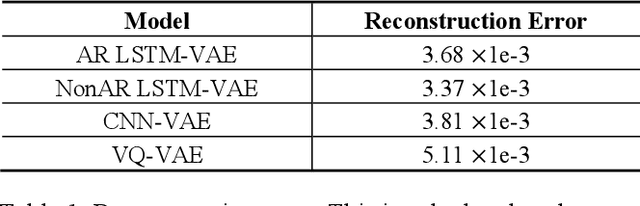

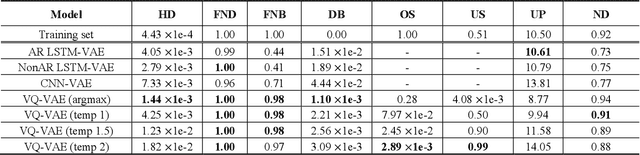

Abstract: Music is a repetition of patterns and rhythms. It can be composed by repeating a certain number of bars in a structured way. In this paper, the objective is to generate a loop of 8 bars that can be used as a building block of music. Even considering musical diversity, we assume that music patterns familiar to humans can be defined in a finite set. With explicit rules to extract loops from music, we found that discrete representations are sufficient to model symbolic music sequences. Among the VAE family, musical properties are better observed with VQ-VAE than with other models. Further, to emphasize musical structure, we have manipulated discrete latent features to be repetitive so that these properties are strengthened. Quantitative and qualitative experiments are extensively conducted to verify our assumptions.
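The discrete representation at the heart of a VQ-VAE is a nearest-neighbour lookup into a codebook. The sketch below shows only that generic quantization step in plain Python; the function names and the tiny codebook are hypothetical, and the encoder, decoder, training losses, and the paper's manipulation of latent features for repetition are all omitted.

```python
def quantize(latents, codebook):
    """Map each latent vector to the index of its nearest codebook entry
    (squared Euclidean distance)."""
    def sq_dist(u, v):
        return sum((a - b) ** 2 for a, b in zip(u, v))

    return [
        min(range(len(codebook)), key=lambda k: sq_dist(z, codebook[k]))
        for z in latents
    ]


def lookup(indices, codebook):
    """Replace each discrete index with its codebook vector, which is
    what a decoder would receive as input."""
    return [codebook[k] for k in indices]
```

After quantization, a bar of music is described by a short sequence of integer codes, which is what makes it possible to model loops as combinations of symbols from a finite set.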
