Hyeongrae Ihm

Systematic Analysis of Music Representations from BERT

Jun 06, 2023

Abstract: There have been numerous attempts to represent raw data as numerical vectors that effectively capture semantic and contextual information. However, in the field of symbolic music, previous works have attempted to validate their music embeddings by observing the performance improvement of various fine-tuning tasks. In this work, we directly analyze embeddings from BERT and BERT with contrastive learning trained on bar-level MIDI, inspecting their musical information that can be obtained from MIDI events. We observe that the embeddings exhibit distinct characteristics of information depending on the contrastive objectives and the choice of layers. Our code is available at https://github.com/sjhan91/MusicBERT.

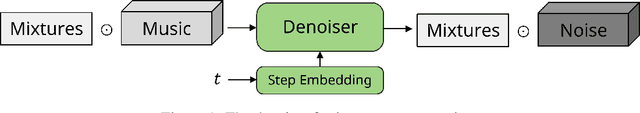

Instrument Separation of Symbolic Music by Explicitly Guided Diffusion Model

Sep 05, 2022

Abstract: Similar to colorization in computer vision, instrument separation assigns instrument labels (e.g., piano, guitar) to notes from unlabeled mixtures that contain only performance information. To address the problem, we adopt diffusion models and explicitly guide them to preserve consistency between mixtures and music. The quantitative results show that our proposed model can generate high-fidelity samples for multitrack symbolic music with creativity.

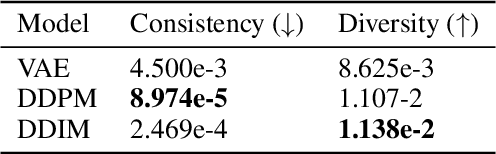

Symbolic Music Loop Generation with Neural Discrete Representations

Aug 11, 2022

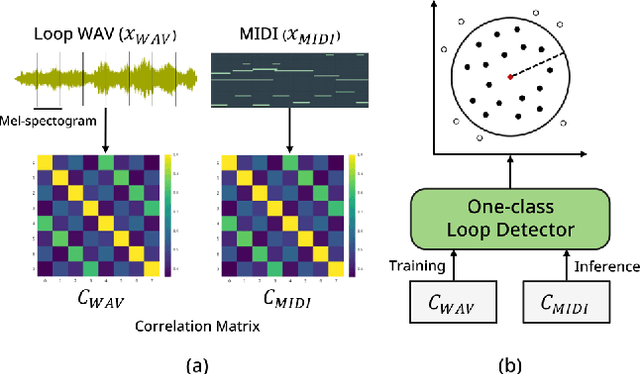

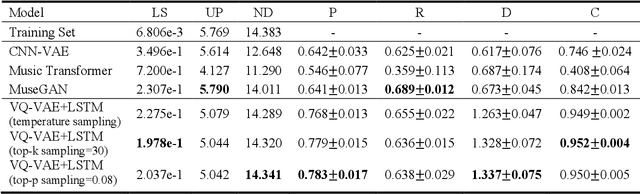

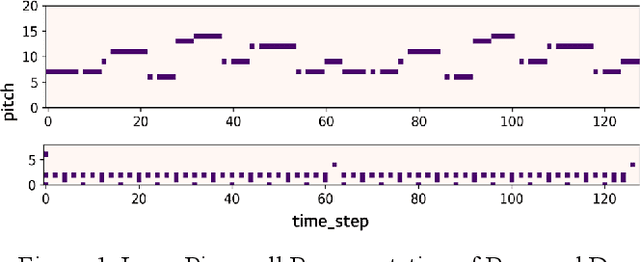

Abstract: Since most music has repetitive structures, from motifs to phrases, repeating musical ideas can be a basic operation for music composition. The basic block that we focus on is conceptualized as loops, which are essential ingredients of music. Furthermore, meaningful note patterns can be formed in a finite space, so it is sufficient to represent them with combinations of discrete symbols, as done in other domains. In this work, we propose symbolic music loop generation via learning discrete representations. We first extract loops from MIDI datasets using a loop detector and then train an autoregressive model on the discrete latent codes of the extracted loops. We show that our model outperforms well-known music generative models in terms of both fidelity and diversity, evaluating on random space. Our code and supplementary materials are available at https://github.com/sjhan91/Loop_VQVAE_Official.

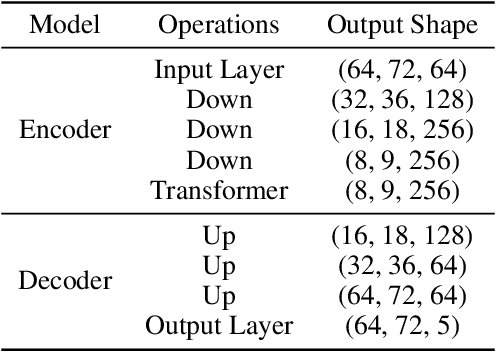

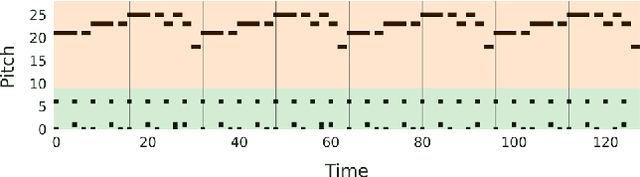

Symbolic Music Loop Generation with VQ-VAE

Nov 15, 2021

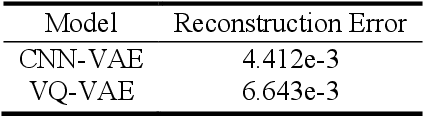

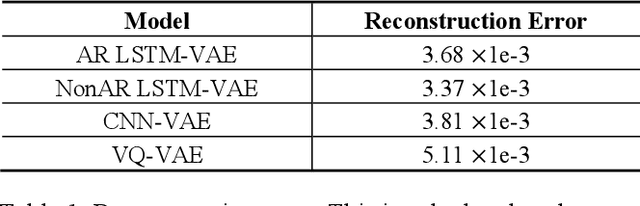

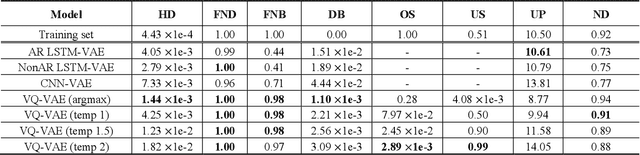

Abstract: Music is a repetition of patterns and rhythms. It can be composed by repeating a certain number of bars in a structured way. In this paper, the objective is to generate a loop of 8 bars that can be used as a building block of music. Even considering musical diversity, we assume that music patterns familiar to humans can be defined in a finite set. With explicit rules to extract loops from music, we found that discrete representations are sufficient to model symbolic music sequences. Within the VAE family, VQ-VAE exhibits musical properties more clearly than other models. Further, to emphasize musical structure, we manipulated the discrete latent features to be repetitive so that these properties are strengthened. Quantitative and qualitative experiments are extensively conducted to verify our assumptions.
