Samuel W. Blair

Soybean Maturity Prediction using 2D Contour Plots from Drone based Time Series Imagery

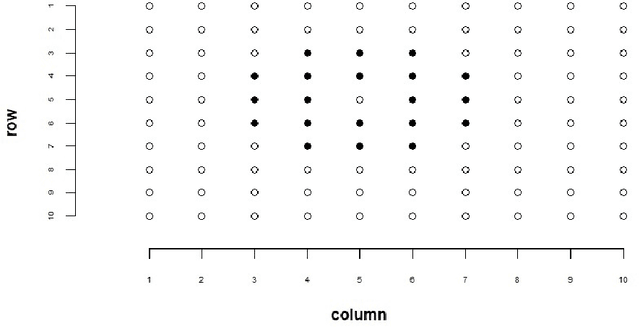

Dec 12, 2024

Abstract: Plant breeding programs require assessments of days to maturity for accurate selection and placement of entries in appropriate tests. In the early stages of the breeding pipeline, soybean breeding programs assign relative maturity ratings to experimental varieties that indicate their suitable maturity zones. Traditionally, breeders have estimated the maturity of breeding varieties by manually inspecting fields and rating maturity visually. This approach relies heavily on rater judgment, making it subjective and time-consuming. This study aimed to develop a machine-learning model for evaluating soybean maturity using UAV-based time-series imagery. Images were captured at three-day intervals, beginning as the earliest varieties started maturing and continuing until the last varieties fully matured. The dataset comprised 22,043 plots collected across three years (2021 to 2023), representing relative maturity groups 1.6 to 3.9. We used contour plot images extracted from the time-series UAV RGB imagery as input for a neural network model. This contour plot approach encoded the temporal and spatial variation within each plot into a single image. A deep learning model was trained to predict maturity ratings from this contour plot. This model significantly improves robustness and achieves up to 85% accuracy. We also evaluate the model's accuracy as we reduce the number of time points, quantifying the trade-off between temporal resolution and maturity prediction. The predictive model offers a scalable, objective, and efficient means of assessing crop maturity, enabling phenomics and ML approaches to reduce the reliance on manual inspection and subjective assessment. This approach enables the automatic prediction of relative maturity ratings in a breeding program, saving time and resources.
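As a rough illustration of how temporal and spatial variation within a plot might be packed into a single 2D input, the sketch below builds a time-by-position grid of a greenness index from a sequence of plot images. The abstract does not say how the contour plots were actually derived, so everything here is an assumption: the choice of the normalized excess green index, the column-wise averaging, and the hypothetical function names `greenness`, `column_profile`, and `time_by_position_grid`. Rendering such a grid as a filled contour image would be a separate plotting step.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # rows of pixels for one plot on one flight date


def greenness(pixel: Pixel) -> float:
    """Normalized excess green index, 2g - r - b, on chromatic coordinates.

    Assumption: this index stands in for whatever canopy signal the
    authors used; it is not taken from the abstract.
    """
    r, g, b = pixel
    total = r + g + b
    if total == 0:
        return 0.0
    return (2 * g - r - b) / total


def column_profile(image: Image) -> List[float]:
    """Mean greenness of each image column: a 1D spatial profile across the plot."""
    n_rows = len(image)
    n_cols = len(image[0])
    return [
        sum(greenness(image[row][col]) for row in range(n_rows)) / n_rows
        for col in range(n_cols)
    ]


def time_by_position_grid(images: Sequence[Image]) -> List[List[float]]:
    """Stack one spatial profile per flight date.

    Rows are time points (in flight order) and columns are positions
    across the plot, so a single 2D grid carries both kinds of variation.
    """
    return [column_profile(image) for image in images]


# Toy example: a fully green canopy on the first flight, a brown one on the second.
green_plot: Image = [[(0, 255, 0), (0, 255, 0)], [(0, 255, 0), (0, 255, 0)]]
brown_plot: Image = [[(150, 100, 50), (150, 100, 50)], [(150, 100, 50), (150, 100, 50)]]
grid = time_by_position_grid([green_plot, brown_plot])
```

In this toy case the first row of `grid` holds high greenness values and the second row holds zeros, which is the kind of green-to-senesced transition a maturity model would need to locate in time.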

Robust soybean seed yield estimation using high-throughput ground robot videos

Dec 03, 2024

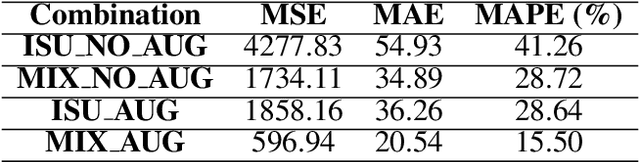

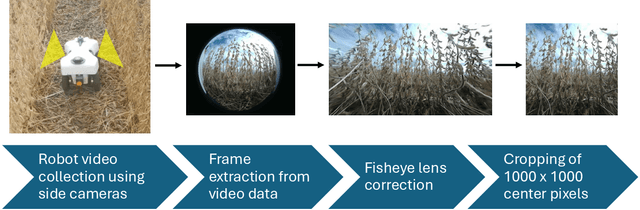

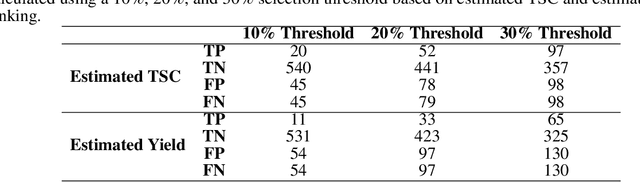

Abstract: We present a novel method for soybean (Glycine max (L.) Merr.) yield estimation leveraging high-throughput seed counting via computer vision and deep learning techniques. Traditional methods for collecting yield data are labor-intensive, costly, prone to equipment failures at critical data collection times, and require transportation of equipment across field sites. Computer vision, the field of teaching computers to interpret visual data, allows us to extract detailed yield information directly from images. By treating yield estimation as a computer vision task, we report a more efficient alternative, employing a ground robot equipped with fisheye cameras to capture comprehensive videos of soybean plots from which images are extracted in a variety of development programs. These images are processed through the P2PNet-Yield model, a deep learning framework in which we combined a Feature Extraction Module (the backbone of P2PNet-Soy) and a Yield Regression Module to estimate seed yields of soybean plots. Our results are built on three years of yield-testing plot data: 8,500 plots in 2021, 2,275 in 2022, and 650 in 2023. With these datasets, our approach incorporates several innovations to further improve the accuracy and generalizability of the seed counting and yield estimation architecture, such as fisheye image correction and data augmentation with random sensor effects. The P2PNet-Yield model achieved a genotype ranking accuracy score of up to 83%. It demonstrates up to a 32% reduction in the time to collect yield data, as well as in the costs associated with traditional yield estimation, offering a scalable solution for breeding programs and agricultural productivity enhancement.
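The abstract reports a genotype ranking accuracy score without defining it. One plausible reading, shown here purely as an assumption, is the share of top-ranked genotypes by observed yield that the model also places in its own top group. The function name `top_fraction_overlap`, the selection fraction, and the toy yield values are all hypothetical and are not taken from the paper.

```python
from typing import Sequence, Set


def top_fraction_overlap(
    observed: Sequence[float],
    predicted: Sequence[float],
    fraction: float = 0.2,
) -> float:
    """Share of the top genotypes by observed yield that are also top by predicted yield.

    Assumption: this overlap metric is only one possible definition of
    "ranking accuracy"; the abstract does not specify the one used.
    """
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")

    n = len(observed)
    k = max(1, round(n * fraction))  # number of genotypes a breeder would advance

    def top_k(values: Sequence[float]) -> Set[int]:
        # Indices of the k highest values.
        order = sorted(range(n), key=lambda i: values[i], reverse=True)
        return set(order[:k])

    return len(top_k(observed) & top_k(predicted)) / k


# Toy example with five genotypes (made-up yields, arbitrary units).
observed_yield = [50.0, 40.0, 30.0, 20.0, 10.0]
predicted_yield = [48.0, 25.0, 35.0, 22.0, 5.0]
score = top_fraction_overlap(observed_yield, predicted_yield, fraction=0.4)
```

A metric of this kind rewards a model for agreeing with field data on which entries to advance, even when its absolute yield estimates are off.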
