Salvador Aguinaga

Citations and Trust in LLM Generated Responses

Jan 02, 2025

Abstract: Question answering systems are rapidly advancing, but their opaque nature may impact user trust. We explored trust through an anti-monitoring framework, where trust is predicted to be correlated with the presence of citations and inversely related to checking citations. We tested this hypothesis with a live question-answering experiment that presented text responses generated using a commercial chatbot along with varying citations (zero, one, or five), both relevant and random, and recorded whether participants checked the citations and their self-reported trust in the generated responses. We found a significant increase in trust when citations were present, a result that held true even when the citations were random; we also found a significant decrease in trust when participants checked the citations. These results highlight the importance of citations in enhancing trust in AI-generated content.

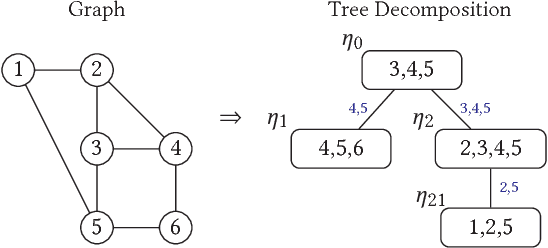

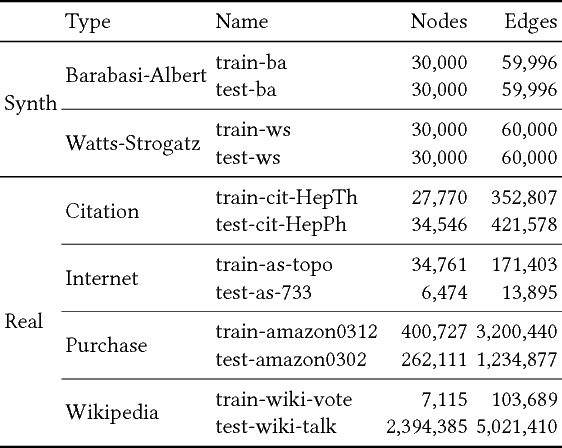

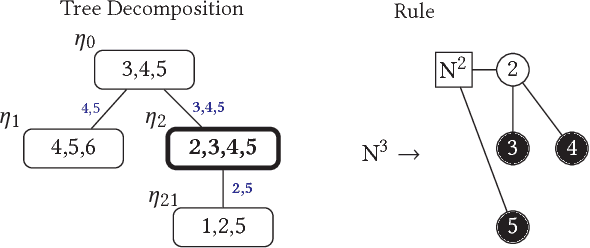

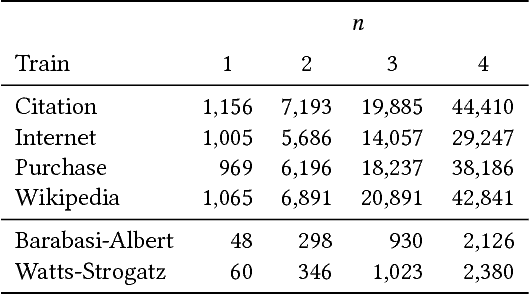

Growing Better Graphs With Latent-Variable Probabilistic Graph Grammars

Jun 11, 2018

Abstract: Recent work in graph models has found that probabilistic hyperedge replacement grammars (HRGs) can be extracted from graphs and used to generate new random graphs with graph properties and substructures close to the original. In this paper, we show how to add latent variables to the model, trained using Expectation-Maximization, to generate still better graphs, that is, ones that generalize better to the test data. We evaluate the new method by separating training and test graphs, building the model on the former and measuring the likelihood of the latter, as a more stringent test of how well the model can generalize to new graphs. On this metric, we find that our latent-variable HRGs consistently outperform several existing graph models and provide interesting insights into the building blocks of real-world networks.
