Salehe Erfanian Ebadi

AnthroNet: Conditional Generation of Humans via Anthropometrics

Sep 07, 2023

Abstract: We present a novel human body model formulated by an extensive set of anthropocentric measurements, which is capable of generating a wide range of human body shapes and poses. The proposed model enables direct modeling of specific human identities through a deep generative architecture, which can produce humans in any arbitrary pose. It is the first of its kind to have been trained end-to-end using only synthetically generated data, which not only provides highly accurate human mesh representations but also allows for precise anthropometry of the body. Moreover, using a highly diverse animation library, we articulated our synthetic humans' body and hands to maximize the diversity of the learnable priors for model training. Our model was trained on a dataset of $100k$ procedurally-generated posed human meshes and their corresponding anthropometric measurements. Our synthetic data generator can be used to generate millions of unique human identities and poses for non-commercial academic research purposes.

PSP-HDRI$+$: A Synthetic Dataset Generator for Pre-Training of Human-Centric Computer Vision Models

Jul 11, 2022

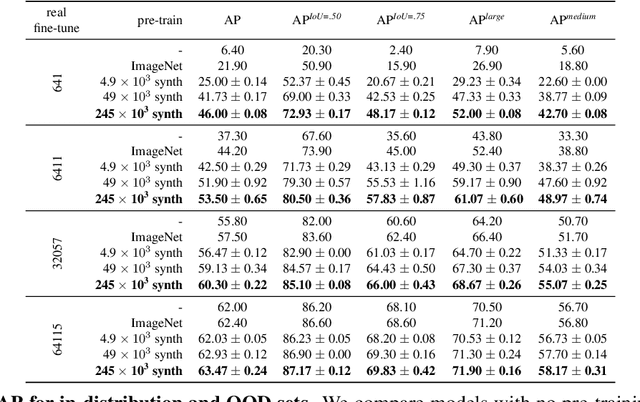

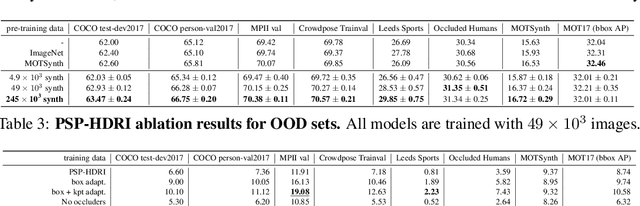

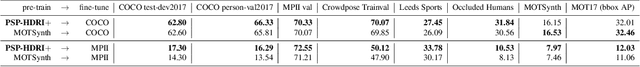

Abstract: We introduce a new synthetic data generator, PSP-HDRI$+$, that proves to be a superior pre-training alternative to ImageNet and other large-scale synthetic data counterparts. We demonstrate that pre-training with our synthetic data yields a more general model that performs better than alternatives even when tested on out-of-distribution (OOD) sets. Furthermore, using ablation studies guided by person keypoint estimation metrics with an off-the-shelf model architecture, we show how to manipulate our synthetic data generator to further improve model performance.

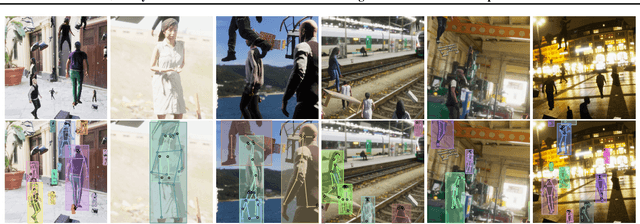

PeopleSansPeople: A Synthetic Data Generator for Human-Centric Computer Vision

Dec 17, 2021

Abstract: In recent years, person detection and human pose estimation have made great strides, helped by large-scale labeled datasets. However, these datasets had no guarantees or analysis of human activities, poses, or context diversity. Additionally, privacy, legal, safety, and ethical concerns may limit the ability to collect more human data. An emerging alternative to real-world data that alleviates some of these issues is synthetic data. However, creating synthetic data generators is incredibly challenging, which prevents researchers from exploring their usefulness. Therefore, we release a human-centric synthetic data generator, PeopleSansPeople, which contains simulation-ready 3D human assets and a parameterized lighting and camera system, and generates 2D and 3D bounding box, instance and semantic segmentation, and COCO pose labels. Using PeopleSansPeople, we performed benchmark synthetic data training using a Detectron2 Keypoint R-CNN variant [1]. We found that pre-training a network using synthetic data and fine-tuning on target real-world data (few-shot transfer to limited subsets of COCO-person train [2]) resulted in a keypoint AP of $60.37 \pm 0.48$ (COCO test-dev2017), outperforming models trained with the same real data alone (keypoint AP of $55.80$) and pre-trained with ImageNet (keypoint AP of $57.50$). This freely-available data generator should enable a wide range of research into the emerging field of simulation to real transfer learning in the critical area of human-centric computer vision.

Approximated Robust Principal Component Analysis for Improved General Scene Background Subtraction

Mar 18, 2016

Abstract: The research reported in this paper addresses the fundamental task of separating locally moving or deforming image areas from a static or globally moving background. It builds on the latest developments in the field of robust principal component analysis, specifically, the recently reported practical solutions for the long-standing problem of recovering the low-rank and sparse parts of a large matrix made up of the sum of these two components. This article addresses a few critical issues, including: embedding global motion parameters in the matrix decomposition model, i.e., estimating global motion parameters simultaneously with the foreground/background separation task; considering matrix block-sparsity rather than generic matrix sparsity as a natural feature in video processing applications; attenuating background ghosting effects when the foreground is subtracted; and, more critically, providing an extremely efficient algorithm to solve the low-rank/sparse matrix decomposition task. The first aspect is important for background/foreground separation in generic video sequences, where the background usually obeys global displacements caused by camera motion during capture. The second aspect exploits the fact that in video processing applications the sparse matrix has a very particular structure, where the non-zero matrix entries are not randomly distributed but form small blocks within the sparse matrix. The next feature of the proposed approach addresses the removal of ghosting effects originating from foreground silhouettes and the lack of information in the occluded background regions of the image. Finally, the proposed model also tackles algorithmic complexity by introducing an extremely efficient "SVD-free" technique that can be applied in most background/foreground separation tasks for conventional video processing.
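
To make the low-rank plus sparse idea concrete, here is a minimal sketch in plain Python. It is not the algorithm from the paper: it assumes a static background (so the low-rank part is rank 1), fits it with alternating least squares rather than an SVD, and recovers the sparse foreground by entrywise soft thresholding. It does not model global motion, block-sparsity, or ghosting. The names `soft_threshold`, `rank1_sparse_split`, `tau`, and `iters` are hypothetical and chosen only for illustration.

```python
def soft_threshold(x, tau):
    """Shrink x toward zero by tau (proximal operator of tau * |x|)."""
    if x > tau:
        return x - tau
    if x < -tau:
        return x + tau
    return 0.0


def rank1_sparse_split(M, tau=0.5, iters=200):
    """Split M (rows = pixels, columns = frames) into L + S.

    L = u v^T is a rank-1 background estimate and S is a sparse
    foreground estimate. Illustrative simplification only: each pass
    does one least-squares update of u, one of v, then thresholds the
    residual to update S.
    """
    n_rows, n_cols = len(M), len(M[0])
    S = [[0.0] * n_cols for _ in range(n_rows)]
    u = [0.0] * n_rows
    v = [1.0] * n_cols
    for _ in range(iters):
        # Background-only data under the current foreground estimate.
        R = [[M[i][j] - S[i][j] for j in range(n_cols)] for i in range(n_rows)]
        vv = sum(x * x for x in v)
        if vv == 0.0:
            break
        u = [sum(R[i][j] * v[j] for j in range(n_cols)) / vv for i in range(n_rows)]
        uu = sum(x * x for x in u)
        if uu == 0.0:
            break
        v = [sum(R[i][j] * u[i] for i in range(n_rows)) / uu for j in range(n_cols)]
        # Whatever the rank-1 model cannot explain, above tau, is foreground.
        S = [[soft_threshold(M[i][j] - u[i] * v[j], tau) for j in range(n_cols)]
             for i in range(n_rows)]
    L = [[u[i] * v[j] for j in range(n_cols)] for i in range(n_rows)]
    return L, S


if __name__ == "__main__":
    # Toy "video": 4 pixels, 5 frames, static background, one bright foreground entry.
    frames = [[b] * 5 for b in (1.0, 2.0, 3.0, 4.0)]
    frames[1][2] += 5.0
    background, foreground = rank1_sparse_split(frames)
    for row in foreground:
        print([round(x, 2) for x in row])
```

In this toy setting the threshold `tau` trades off sensitivity against noise: smaller values let more residual leak into the foreground estimate, larger values shrink genuine foreground entries more strongly.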
