Sahil Modi

NVIDIA Nemotron 3: Efficient and Open Intelligence

Dec 24, 2025

Abstract:We introduce the Nemotron 3 family of models: Nano, Super, and Ultra. These models deliver strong agentic, reasoning, and conversational capabilities. The Nemotron 3 family uses a Mixture-of-Experts hybrid Mamba-Transformer architecture to provide best-in-class throughput and context lengths of up to 1M tokens. Super and Ultra models are trained with NVFP4 and incorporate LatentMoE, a novel approach that improves model quality. The two larger models also include MTP layers for faster text generation. All Nemotron 3 models are post-trained using multi-environment reinforcement learning, which enables reasoning and multi-step tool use, and they support granular reasoning budget control. Nano, the smallest model, outperforms comparable models in accuracy while remaining extremely cost-efficient for inference. Super is optimized for collaborative agents and high-volume workloads such as IT ticket automation. Ultra, the largest model, provides state-of-the-art accuracy and reasoning performance. Nano is released together with its technical report and this white paper, while Super and Ultra will follow in the coming months. We will openly release the model weights, pre- and post-training software, recipes, and all data for which we hold redistribution rights.

Nemotron 3 Nano: Open, Efficient Mixture-of-Experts Hybrid Mamba-Transformer Model for Agentic Reasoning

Dec 23, 2025

Abstract:We present Nemotron 3 Nano 30B-A3B, a Mixture-of-Experts hybrid Mamba-Transformer language model. Nemotron 3 Nano was pretrained on 25 trillion text tokens, including more than 3 trillion new unique tokens over Nemotron 2, followed by supervised fine tuning and large-scale RL on diverse environments. Nemotron 3 Nano achieves better accuracy than our previous generation Nemotron 2 Nano while activating less than half of the parameters per forward pass. It achieves up to 3.3x higher inference throughput than similarly-sized open models like GPT-OSS-20B and Qwen3-30B-A3B-Thinking-2507, while also being more accurate on popular benchmarks. Nemotron 3 Nano demonstrates enhanced agentic, reasoning, and chat abilities and supports context lengths up to 1M tokens. We release both our pretrained Nemotron 3 Nano 30B-A3B Base and post-trained Nemotron 3 Nano 30B-A3B checkpoints on Hugging Face.

Wobble control of a pendulum actuated spherical robot

Jan 16, 2023

Abstract:Spherical robots can conduct surveillance in hostile, cluttered environments without being damaged, as their protective shell can safely house sensors such as cameras. However, lateral oscillations, also known as wobble, occur when these sphere-shaped robots operate at low speeds, leading to shaky camera feedback. These oscillations in a pendulum-actuated spherical robot are caused by the coupling between the forward and steering motions due to nonholonomic constraints. Designing a controller to limit wobbling in these robots is challenging due to their underactuated nature. We propose a model-based controller to navigate a pendulum-actuated spherical robot using wobble-free turning maneuvers consisting of circular arcs and straight lines. The model is developed using Lagrange-D'Alembert equations and accounts for the coupled forward and steering motions. The model is further analyzed to derive expressions for radius of curvature, precession rate, wobble amplitude, and wobble frequency during circular motions. Finally, we design an input-output feedback linearization-based controller to control the robot's heading direction and wobble. Overall, the proposed controller enables a teleoperator to command a specific forward velocity and pendulum angle as per the desired turning radius while limiting the robot's lateral oscillations to enhance the quality of camera feedback.

Pendulum Actuated Spherical Robot: Dynamic Modeling & Analysis for Wobble & Precession

Jan 14, 2023

Abstract:A spherical robot has many practical advantages, as all of its electronics are protected within a hull and it can be carried easily by any Unmanned Aerial Vehicle (UAV). However, its use is limited by the difficulty of finding mounts for sensors. A pendulum-actuated spherical robot provides space for mounting sensors at the yoke. We study the nonlinear dynamics of a pendulum-actuated spherical robot to analyze the dynamics of the internal assembly (yoke) used for mounting sensors. For such robots, we provide a coupled dynamic model that captures the relationship between forward and sideways motion. We further demonstrate the effects of wobbling and precession captured by our model when the robot is controlled to execute a turning maneuver while moving with a moderate forward velocity, a practical situation encountered by spherical robots moving in an indoor setting. A simulation setup based on the developed model provides visualization of the spherical robot's motion.

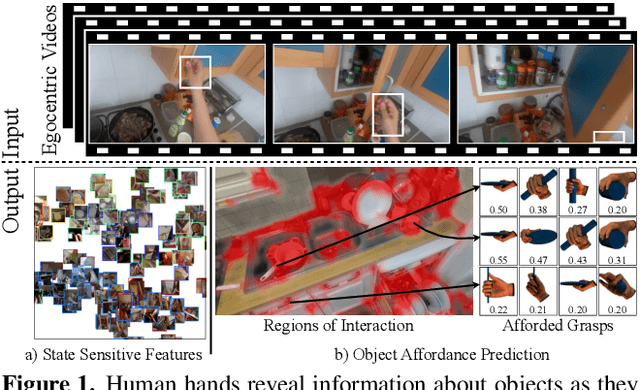

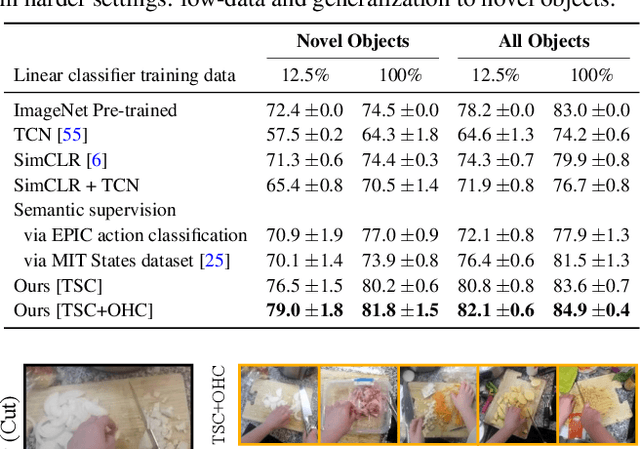

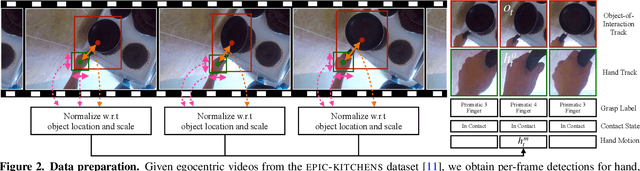

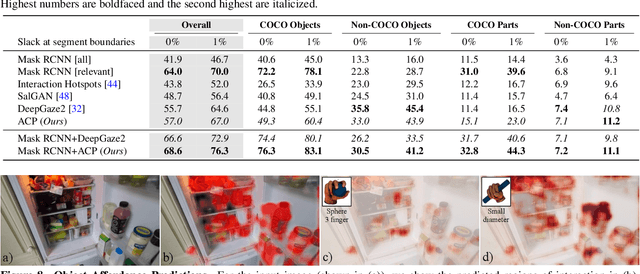

Human Hands as Probes for Interactive Object Understanding

Dec 16, 2021

Abstract:Interactive object understanding, or what we can do to objects and how, is a long-standing goal of computer vision. In this paper, we tackle this problem through observation of human hands in in-the-wild egocentric videos. We demonstrate that observation of what human hands interact with and how can provide both the relevant data and the necessary supervision. Attending to hands readily localizes and stabilizes active objects for learning and reveals places where interactions with objects occur. Analyzing the hands shows what we can do to objects and how. We apply these basic principles to the EPIC-KITCHENS dataset and successfully learn state-sensitive features and object affordances (regions of interaction and afforded grasps), purely by observing hands in egocentric videos.

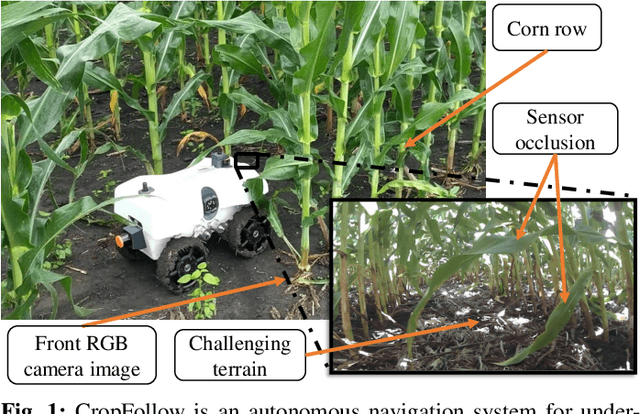

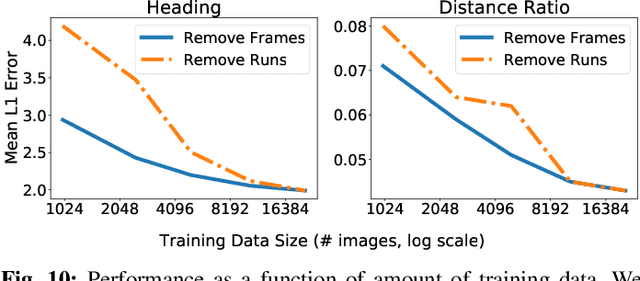

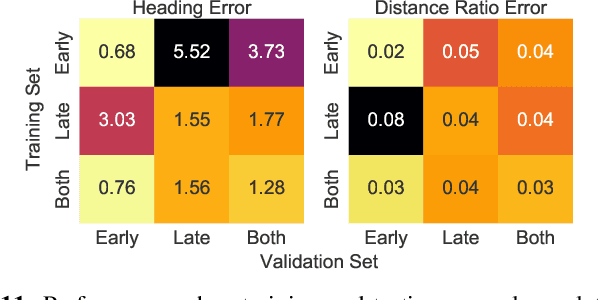

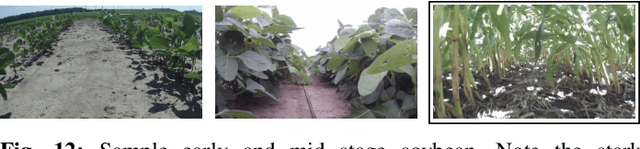

Learned Visual Navigation for Under-Canopy Agricultural Robots

Jul 06, 2021

Abstract:We describe a system for visually guided autonomous navigation of under-canopy farm robots. Low-cost under-canopy robots can drive between crop rows under the plant canopy and accomplish tasks that are infeasible for over-the-canopy drones or larger agricultural equipment. However, autonomously navigating them under the canopy presents a number of challenges: unreliable GPS and LiDAR, high cost of sensing, challenging farm terrain, clutter due to leaves and weeds, and large variability in appearance over the season and across crop types. We address these challenges by building a modular system that leverages machine learning for robust and generalizable perception from monocular RGB images from low-cost cameras, and model predictive control for accurate control in challenging terrain. Our system, CropFollow, is able to autonomously drive 485 meters per intervention on average, outperforming a state-of-the-art LiDAR-based system (286 meters per intervention) in extensive field testing spanning over 25 km.
