Sahar Almahfouz Nasser

SAGE: Agentic Framework for Interpretable and Clinically Translatable Computational Pathology Biomarker Discovery

Feb 01, 2026

Abstract:Despite significant progress in computational pathology, many AI models remain black-box and difficult to interpret, posing a major barrier to clinical adoption due to limited transparency and explainability. This has motivated continued interest in engineered image-based biomarkers, which offer greater interpretability but are often proposed based on anecdotal evidence or fragmented prior literature rather than systematic biological validation. We introduce SAGE (Structured Agentic system for hypothesis Generation and Evaluation), an agentic AI system designed to identify interpretable, engineered pathology biomarkers by grounding them in biological evidence. SAGE integrates literature-anchored reasoning with multimodal data analysis to correlate image-derived features with molecular biomarkers, such as gene expression, and clinically relevant outcomes. By coordinating specialized agents for biological contextualization and empirical hypothesis validation, SAGE prioritizes transparent, biologically supported biomarkers and advances the clinical translation of computational pathology.

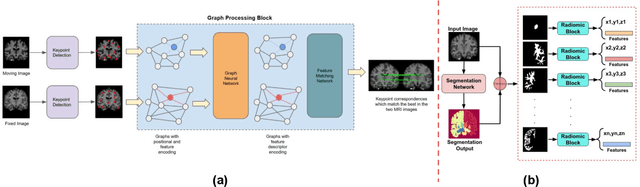

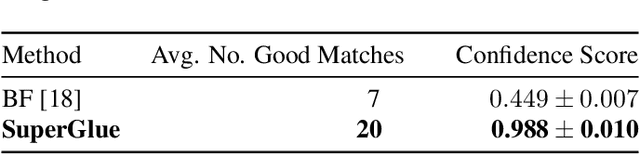

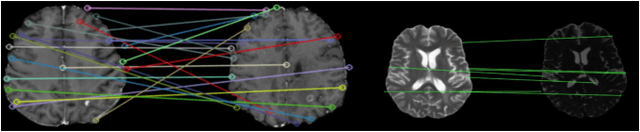

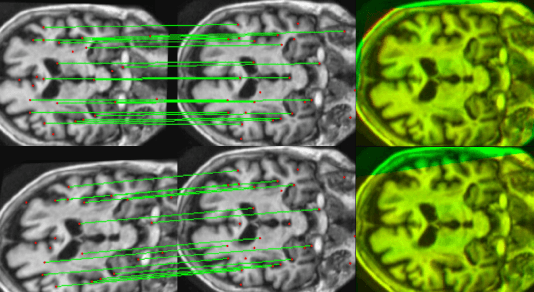

Utilizing Radiomic Feature Analysis For Automated MRI Keypoint Detection: Enhancing Graph Applications

Nov 30, 2023

Abstract:Graph neural networks (GNNs) present a promising alternative to CNNs and transformers in certain image processing applications due to their parameter efficiency in modeling spatial relationships. A major area of current research involves converting non-graph input data for GNN-based models, notably when the data originates from images. One approach converts images into nodes by identifying significant keypoints within them. SuperRetina, a semi-supervised technique, has been used to detect keypoints in retinal images. However, it depends on a small initial set of ground truth keypoints, which is progressively expanded to detect more keypoints. Having encountered difficulties in detecting consistent initial keypoints in brain images using SIFT and LoFTR, we propose a new approach: radiomic feature-based keypoint detection. We demonstrate the anatomical significance of the detected keypoints by showing that they improve registration guided by these keypoints. Subsequently, these keypoints were employed as the ground truth for the keypoint detection method (LK-SuperRetina). Furthermore, the study showcases the application of GNNs in image matching, highlighting their superior performance in terms of both the number of good matches and confidence scores. This research sets the stage for extending GNNs to other tasks, including but not limited to image classification, segmentation, and registration.

Transforming Breast Cancer Diagnosis: Towards Real-Time Ultrasound to Mammogram Conversion for Cost-Effective Diagnosis

Aug 10, 2023

Abstract:Ultrasound (US) imaging is better suited for intraoperative settings because it is real-time and more portable than other imaging techniques, such as mammography. However, US images are characterized by lower spatial resolution and noise-like artifacts. This research aims to address these limitations by providing surgeons with mammogram-like image quality in real time from noisy US images. Unlike previous approaches for improving US image quality that aim to reduce artifacts by treating them as speckle noise, we recognize their value as informative wave interference patterns (WIP). To achieve this, we utilize the Stride software to numerically solve the forward model, generating ultrasound images from mammogram images by solving wave equations. Additionally, we leverage domain adaptation to enhance the realism of the simulated ultrasound images. Then, we utilize generative adversarial networks (GANs) to tackle the inverse problem of generating mammogram-quality images from ultrasound images. The resultant images have considerably more discernible details than the original US images.

Reverse Knowledge Distillation: Training a Large Model using a Small One for Retinal Image Matching on Limited Data

Jul 21, 2023

Abstract:Retinal image matching plays a crucial role in monitoring disease progression and treatment response. However, datasets with matched keypoints between temporally separated pairs of images are not available in abundance to train transformer-based models. We propose a novel approach based on reverse knowledge distillation to train large models with limited data while preventing overfitting. Firstly, we propose architectural modifications to a CNN-based semi-supervised method called SuperRetina that help us improve its results on a publicly available dataset. Then, we train a computationally heavier model based on a vision transformer encoder using the lighter CNN-based model, which is counter-intuitive in the field of knowledge distillation research, where training lighter models based on heavier ones is the norm. Surprisingly, such reverse knowledge distillation improves generalization even further. Our experiments suggest that high-dimensional fitting in representation space may prevent overfitting, unlike training directly to match the final output. We also provide a public dataset with annotations for retinal image keypoint detection and matching to help the research community develop algorithms for retinal image applications.

Improving Mitosis Detection Via UNet-based Adversarial Domain Homogenizer

Sep 15, 2022

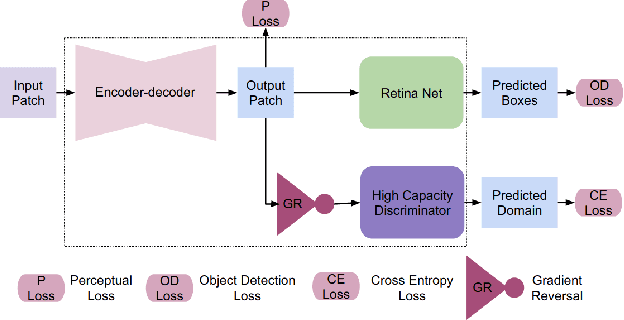

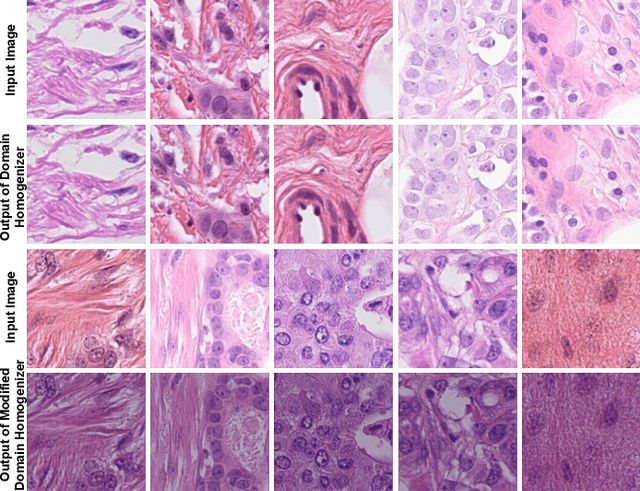

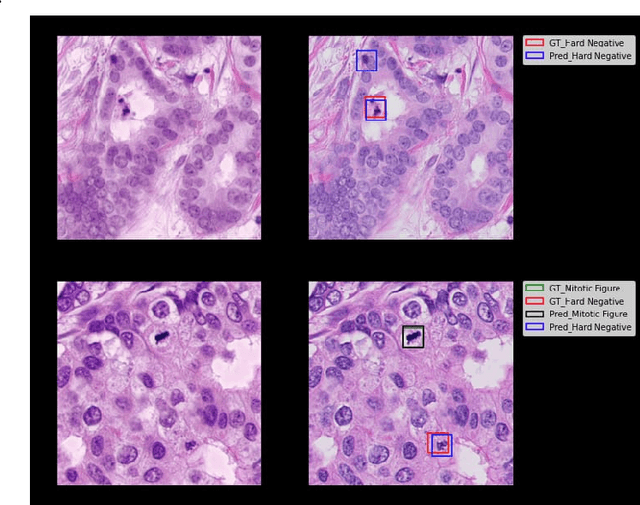

Abstract:The effective localization of mitosis is a critical precursory task for deciding tumor prognosis and grade. Automated mitosis detection through deep learning-based image analysis often fails on unseen patient data due to inherent domain biases. This paper proposes a domain homogenizer for mitosis detection that attempts to alleviate domain differences in histology images via adversarial reconstruction of input images. The proposed homogenizer is based on a U-Net architecture and can effectively reduce domain differences commonly seen with histology imaging data. We demonstrate our domain homogenizer's effectiveness by observing the reduction in domain differences between the preprocessed images. Using this homogenizer, along with a subsequent RetinaNet object detector, we were able to outperform the baselines of the 2021 MIDOG challenge in terms of average precision of the detected mitotic figures.

WSSAMNet: Weakly Supervised Semantic Attentive Medical Image Registration Network

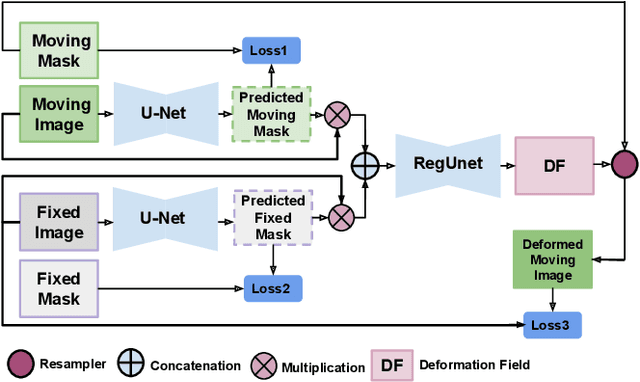

Mar 05, 2022

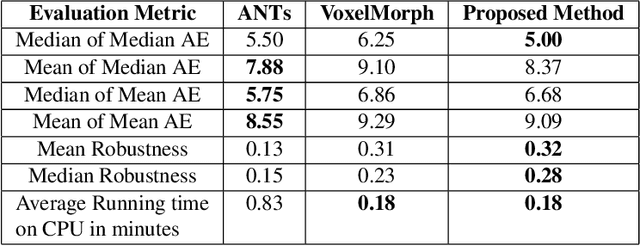

Abstract:We present WSSAMNet, a weakly supervised method for medical image registration. Ours is a two-step method, with the first step being the computation of segmentation masks of the fixed and moving volumes. These masks are then used to attend to the input volumes, which are then provided as inputs to a registration network in the second step. The registration network computes the deformation field to perform the alignment between the fixed and the moving volumes. We study the effectiveness of our technique on the BraTSReg challenge data against ANTs and VoxelMorph, where we demonstrate that our method performs competitively.

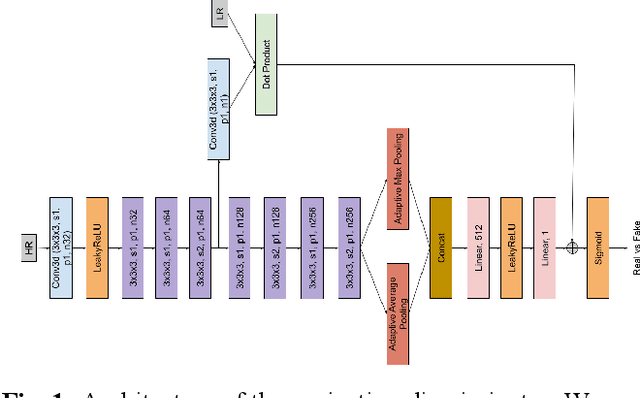

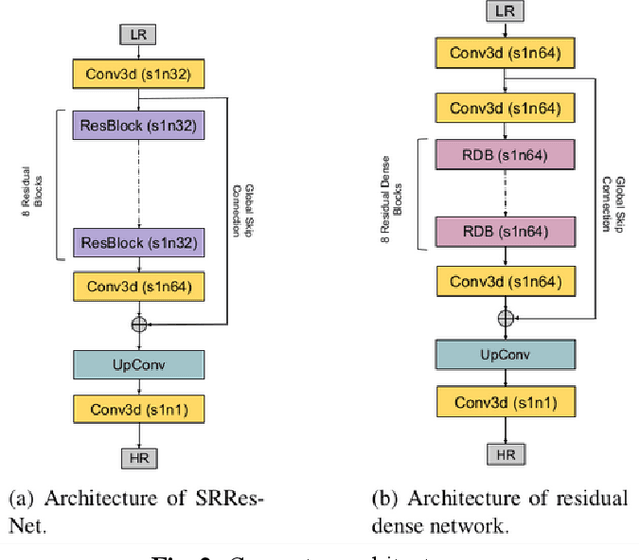

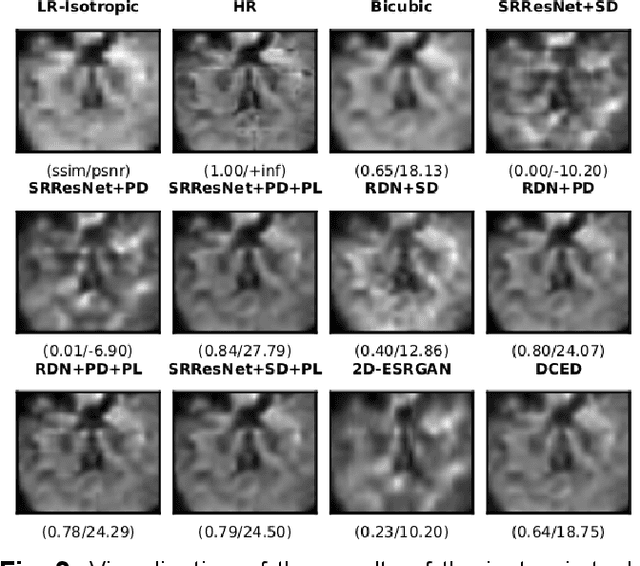

Perceptual cGAN for MRI Super-resolution

Jan 23, 2022

Abstract:Capturing high-resolution magnetic resonance (MR) images is a time-consuming process, which makes it unsuitable for medical emergencies and pediatric patients. Low-resolution MR imaging, by contrast, is faster than its high-resolution counterpart, but it compromises on fine details necessary for a more precise diagnosis. Super-resolution (SR), when applied to low-resolution MR images, can help increase their utility by synthetically generating high-resolution images with little additional time. In this paper, we present an SR technique for MR images that is based on generative adversarial networks (GANs), which have proven to be quite useful in generating sharp-looking details in SR. We introduce a conditional GAN with perceptual loss that is conditioned upon the input low-resolution image and improves performance for isotropic and anisotropic MRI super-resolution.
