SMA Sharif

The Tenth NTIRE 2025 Image Denoising Challenge Report

Apr 16, 2025

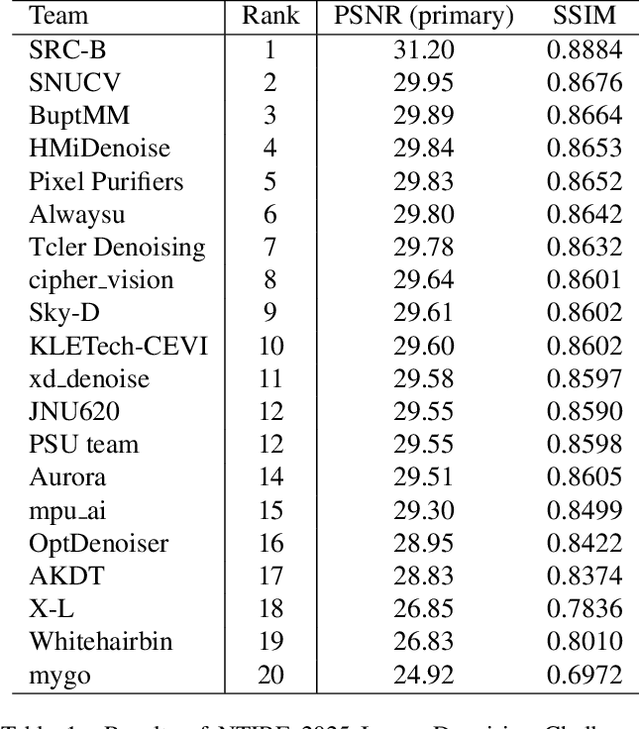

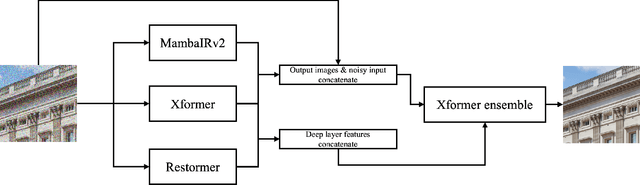

Abstract:This paper presents an overview of the NTIRE 2025 Image Denoising Challenge ({\sigma} = 50), highlighting the proposed methodologies and corresponding results. The primary objective is to develop a network architecture capable of achieving high-quality denoising performance, quantitatively evaluated using PSNR, without constraints on computational complexity or model size. The task assumes independent additive white Gaussian noise (AWGN) with a fixed noise level of 50. A total of 290 participants registered for the challenge, with 20 teams successfully submitting valid results, providing insights into the current state-of-the-art in image denoising.

Beyond Joint Demosaicking and Denoising: An Image Processing Pipeline for a Pixel-bin Image Sensor

Apr 19, 2021

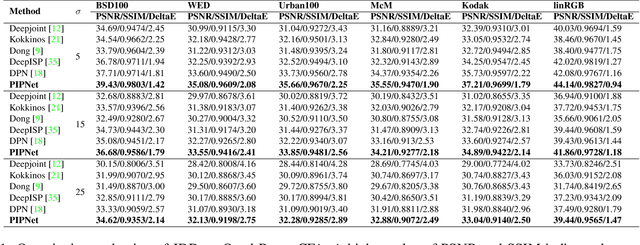

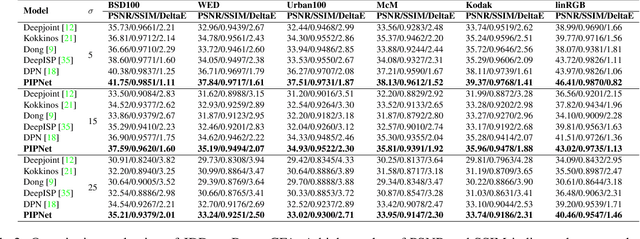

Abstract:Pixel binning is considered one of the most prominent solutions to tackle the hardware limitation of smartphone cameras. Despite numerous advantages, such an image sensor has to appropriate an artefact-prone non-Bayer colour filter array (CFA) to enable the binning capability. Contrarily, performing essential image signal processing (ISP) tasks like demosaicking and denoising, explicitly with such CFA patterns, makes the reconstruction process notably complicated. In this paper, we tackle the challenges of joint demosaicing and denoising (JDD) on such an image sensor by introducing a novel learning-based method. The proposed method leverages the depth and spatial attention in a deep network. The proposed network is guided by a multi-term objective function, including two novel perceptual losses to produce visually plausible images. On top of that, we stretch the proposed image processing pipeline to comprehensively reconstruct and enhance the images captured with a smartphone camera, which uses pixel binning techniques. The experimental results illustrate that the proposed method can outperform the existing methods by a noticeable margin in qualitative and quantitative comparisons. Code available: https://github.com/sharif-apu/BJDD_CVPR21.

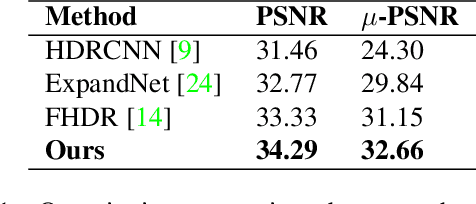

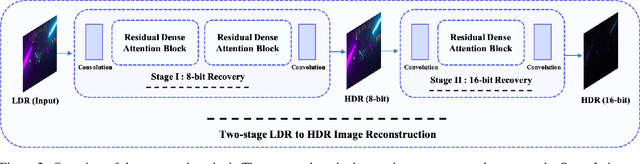

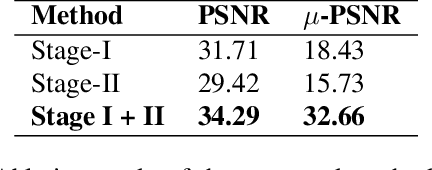

A Two-stage Deep Network for High Dynamic Range Image Reconstruction

Apr 19, 2021

Abstract:Mapping a single exposure low dynamic range (LDR) image into a high dynamic range (HDR) is considered among the most strenuous image to image translation tasks due to exposure-related missing information. This study tackles the challenges of single-shot LDR to HDR mapping by proposing a novel two-stage deep network. Notably, our proposed method aims to reconstruct an HDR image without knowing hardware information, including camera response function (CRF) and exposure settings. Therefore, we aim to perform image enhancement task like denoising, exposure correction, etc., in the first stage. Additionally, the second stage of our deep network learns tone mapping and bit-expansion from a convex set of data samples. The qualitative and quantitative comparisons demonstrate that the proposed method can outperform the existing LDR to HDR works with a marginal difference. Apart from that, we collected an LDR image dataset incorporating different camera systems. The evaluation with our collected real-world LDR images illustrates that the proposed method can reconstruct plausible HDR images without presenting any visual artefacts. Code available: https://github. com/sharif-apu/twostageHDR_NTIRE21.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge