Beyond Joint Demosaicking and Denoising: An Image Processing Pipeline for a Pixel-bin Image Sensor

Paper and Code

Apr 19, 2021

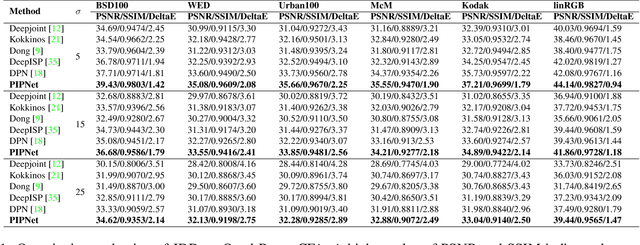

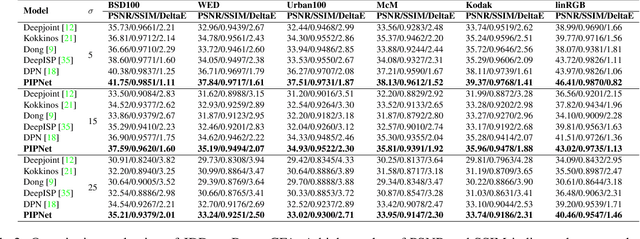

Pixel binning is considered one of the most prominent solutions to tackle the hardware limitation of smartphone cameras. Despite numerous advantages, such an image sensor has to appropriate an artefact-prone non-Bayer colour filter array (CFA) to enable the binning capability. Contrarily, performing essential image signal processing (ISP) tasks like demosaicking and denoising, explicitly with such CFA patterns, makes the reconstruction process notably complicated. In this paper, we tackle the challenges of joint demosaicing and denoising (JDD) on such an image sensor by introducing a novel learning-based method. The proposed method leverages the depth and spatial attention in a deep network. The proposed network is guided by a multi-term objective function, including two novel perceptual losses to produce visually plausible images. On top of that, we stretch the proposed image processing pipeline to comprehensively reconstruct and enhance the images captured with a smartphone camera, which uses pixel binning techniques. The experimental results illustrate that the proposed method can outperform the existing methods by a noticeable margin in qualitative and quantitative comparisons. Code available: https://github.com/sharif-apu/BJDD_CVPR21.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge