Ryuhei Takahashi

RETR: Multi-View Radar Detection Transformer for Indoor Perception

Nov 15, 2024

Abstract: Indoor radar perception has seen rising interest due to affordable costs driven by emerging automotive imaging radar developments and the benefits of reduced privacy concerns and reliability under hazardous conditions (e.g., fire and smoke). However, existing radar perception pipelines fail to account for distinctive characteristics of the multi-view radar setting. In this paper, we propose Radar dEtection TRansformer (RETR), an extension of the popular DETR architecture, tailored for multi-view radar perception. RETR inherits the advantages of DETR, eliminating the need for hand-crafted components for object detection and segmentation in the image plane. More importantly, RETR incorporates carefully designed modifications such as 1) depth-prioritized feature similarity via a tunable positional encoding (TPE); 2) a tri-plane loss from both radar and camera coordinates; and 3) a learnable radar-to-camera transformation via reparameterization, to account for the unique multi-view radar setting. Evaluated on two indoor radar perception datasets, our approach outperforms existing state-of-the-art methods by a margin of 15.38+ AP for object detection and 11.77+ IoU for instance segmentation, respectively.

SIRA: Scalable Inter-frame Relation and Association for Radar Perception

Nov 04, 2024

Abstract: Conventional radar feature extraction faces limitations due to low spatial resolution, noise, multipath reflection, the presence of ghost targets, and motion blur. Such limitations can be exacerbated by nonlinear object motion, particularly from an ego-centric viewpoint. To address these challenges, the key lies in exploiting temporal feature relations over an extended horizon and enforcing spatial motion consistency for effective association. To this end, this paper proposes SIRA (Scalable Inter-frame Relation and Association) with two designs. First, inspired by Swin Transformer, we introduce extended temporal relation, generalizing the existing temporal relation layer from two consecutive frames to multiple inter-frames with temporally regrouped window attention for scalability. Second, we propose a motion consistency track built on the concept of a pseudo-tracklet generated from observational data for better trajectory prediction and subsequent object association. Our approach achieves 58.11 mAP@0.5 for oriented object detection and 47.79 MOTA for multiple object tracking on the Radiate dataset, surpassing the previous state of the art by a margin of +4.11 mAP@0.5 and +9.94 MOTA, respectively.
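For readers unfamiliar with the tracking metric quoted above, the following is a minimal sketch of the standard MOTA (Multiple Object Tracking Accuracy) definition, MOTA = 1 - (FN + FP + IDSW) / GT, with counts summed over all frames. The function name and the example counts are hypothetical and are not taken from the paper or its evaluation code.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """Standard MOTA: 1 - (FN + FP + IDSW) / GT, with counts summed over all frames.

    num_gt is the total number of ground-truth objects across all frames.
    The score is at most 1 and can be negative when errors exceed num_gt.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_gt


# Hypothetical counts, chosen only for illustration (not results from the paper).
score = mota(false_negatives=30, false_positives=15, id_switches=5, num_gt=100)
print(f"MOTA = {score:.2f}")
```

Abstracts usually report MOTA scaled by 100, so a reported value such as 47.79 corresponds to 0.4779 under this definition.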

Mutual Interference Mitigation for MIMO-FMCW Automotive Radar

Apr 06, 2023

Abstract: This paper considers mutual interference mitigation among automotive radars using frequency-modulated continuous wave (FMCW) signals and multiple-input multiple-output (MIMO) virtual arrays. For the first time, we derive a general interference signal model that fully accounts for not only the time-frequency incoherence, e.g., different FMCW configuration parameters and time offsets, but also the slow-time code MIMO incoherence and array configuration differences between the victim and interfering radars. Along with a standard MIMO-FMCW object signal model, we turn the interference mitigation into a spatial-domain object detection under incoherent MIMO-FMCW interference described by the explicit interference signal model, and propose a constant false alarm rate (CFAR) detector. More specifically, the proposed detector exploits the structural property of the derived interference model in both the transmit and receive steering vector spaces. We also derive analytical closed-form expressions for probabilities of detection and false alarm. Performance evaluation using both synthetic-level and phased array system-level simulation confirms the effectiveness of our proposed detector over selected baseline methods.
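As background on the CFAR idea mentioned above, the following is a minimal sketch of the classic one-dimensional cell-averaging CFAR (CA-CFAR). It is not the detector proposed in the paper, which works in the spatial domain on the derived interference model. The threshold factor assumes exponentially distributed noise power (a square-law detector in Gaussian noise), and the function name, parameter names, and test profile are hypothetical.

```python
def ca_cfar(power, num_train=8, num_guard=2, pfa=1e-3):
    """Return indices of cells whose power exceeds the CA-CFAR threshold.

    power:     list of non-negative power samples (e.g., one range profile).
    num_train: training cells on EACH side of the cell under test.
    num_guard: guard cells on EACH side, excluded from the noise estimate.
    pfa:       desired probability of false alarm.
    """
    n = 2 * num_train
    # Threshold factor for CA-CFAR, assuming exponentially distributed noise power.
    alpha = n * (pfa ** (-1.0 / n) - 1.0)
    half = num_train + num_guard
    detections = []
    # Edge cells without a full window on both sides are skipped.
    for i in range(half, len(power) - half):
        left = power[i - half : i - num_guard]
        right = power[i + num_guard + 1 : i + half + 1]
        noise = (sum(left) + sum(right)) / n  # local noise-level estimate
        if power[i] > alpha * noise:
            detections.append(i)
    return detections


# Hypothetical profile: flat noise floor with one strong target at index 32.
profile = [1.0] * 64
profile[32] = 50.0
hits = ca_cfar(profile)
print(hits)
```

Because the threshold scales with the local noise estimate, the false alarm rate stays approximately constant when the noise floor changes, which is the property the CFAR name refers to.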

Partially-Shared Variational Auto-encoders for Unsupervised Domain Adaptation with Target Shift

Jan 25, 2020

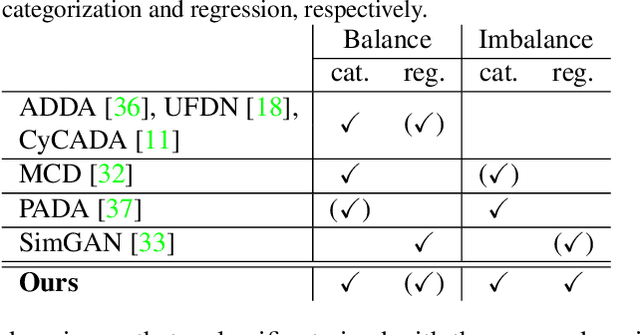

Abstract: This paper proposes a novel approach for unsupervised domain adaptation (UDA) with target shift. Target shift is a mismatch in label distribution between the source and target domains. Typically it appears as class imbalance in the target domain. In practice, this is an important problem in UDA: because we do not know the labels in target domain datasets, we do not know whether their distribution is identical to that in the source domain dataset. Many traditional approaches achieve UDA with distribution matching by minimizing maximum mean discrepancy or by adversarial training; however, these approaches implicitly assume that the distributions coincide and do not work under target shift. Some recent UDA approaches focus on class boundaries, and some of them are robust to target shift, but they are only applicable to classification and not to regression. To overcome the target shift problem in UDA, the proposed method, partially shared variational autoencoders (PS-VAEs), uses pair-wise feature alignment instead of feature distribution matching. PS-VAEs convert each sample between domains with a CycleGAN-based architecture while preserving its label-related content. To evaluate the performance of PS-VAEs, we carried out two experiments: UDA with class-imbalanced digits datasets (classification), and UDA from synthesized data to real observation in human-pose-estimation (regression). The proposed method demonstrated robustness against class imbalance in the classification task, and outperformed the other methods in the regression task by a large margin.
