Ruth Stock-Homburg

Building the Web for Agents: A Declarative Framework for Agent-Web Interaction

Nov 14, 2025

Abstract: The increasing deployment of autonomous AI agents on the web is hampered by a fundamental misalignment: agents must infer affordances from human-oriented user interfaces, leading to brittle, inefficient, and insecure interactions. To address this, we introduce VOIX, a web-native framework that enables websites to expose reliable, auditable, and privacy-preserving capabilities for AI agents through simple, declarative HTML elements. VOIX introduces <tool> and <context> tags, allowing developers to explicitly define available actions and relevant state, thereby creating a clear, machine-readable contract for agent behavior. This approach shifts control to the website developer while preserving user privacy by disconnecting the conversational interactions from the website. We evaluated the framework's practicality, learnability, and expressiveness in a three-day hackathon study with 16 developers. The results demonstrate that participants, regardless of prior experience, were able to rapidly build diverse and functional agent-enabled web applications. Ultimately, this work provides a foundational mechanism for realizing the Agentic Web, enabling a future of seamless and secure human-AI collaboration on the web.

MoVEInt: Mixture of Variational Experts for Learning Human-Robot Interactions from Demonstrations

Jul 10, 2024

Abstract: Shared dynamics models are important for capturing the complexity and variability inherent in Human-Robot Interaction (HRI). Therefore, learning such shared dynamics models can enhance coordination and adaptability to enable successful reactive interactions with a human partner. In this work, we propose a novel approach for learning a shared latent space representation for HRIs from demonstrations in a Mixture of Experts fashion for reactively generating robot actions from human observations. We train a Variational Autoencoder (VAE) to learn robot motions regularized using an informative latent space prior that captures the multimodality of the human observations via a Mixture Density Network (MDN). We show how our formulation derives from a Gaussian Mixture Regression formulation that is typically used in approaches for learning HRI from demonstrations, such as using an HMM/GMM to learn a joint distribution over the actions of the human and the robot. We further incorporate an additional regularization to prevent "mode collapse", a common phenomenon when using latent space mixture models with VAEs. We find that our approach of using an informative MDN prior from human observations for a VAE generates more accurate robot motions compared to previous HMM-based or recurrent approaches of learning shared latent representations, which we validate on various HRI datasets involving interactions such as handshakes, fistbumps, waving, and handovers. Further experiments in a real-world human-to-robot handover scenario show the efficacy of our approach for generating successful interactions with four different human interaction partners.

Learning Multimodal Latent Dynamics for Human-Robot Interaction

Nov 27, 2023

Abstract: This article presents a method for learning well-coordinated Human-Robot Interaction (HRI) from Human-Human Interactions (HHI). We devise a hybrid approach using Hidden Markov Models (HMMs) as the latent space priors for a Variational Autoencoder to model a joint distribution over the interacting agents. We leverage the interaction dynamics learned from HHI to learn HRI and incorporate the conditional generation of robot motions from human observations into the training, thereby predicting more accurate robot trajectories. The generated robot motions are further adapted with Inverse Kinematics to ensure the desired physical proximity with a human, combining the ease of joint space learning and accurate task space reachability. For contact-rich interactions, we modulate the robot's stiffness using HMM segmentation for a compliant interaction. We verify the effectiveness of our approach deployed on a humanoid robot via a user study. Our method generalizes well to various humans despite being trained on data from just two humans. We find that users perceive our method as more human-like, timely, and accurate and rank our method with a higher degree of preference over other baselines.

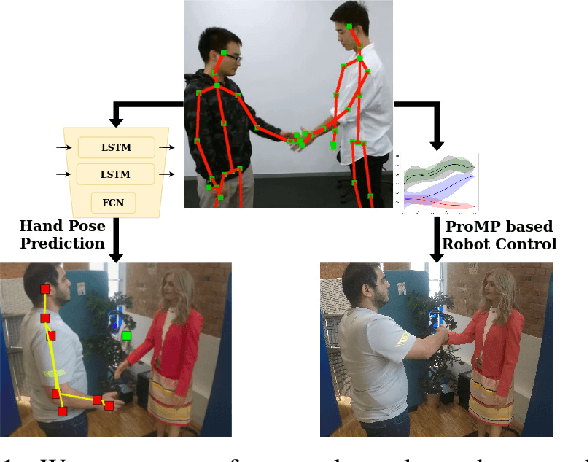

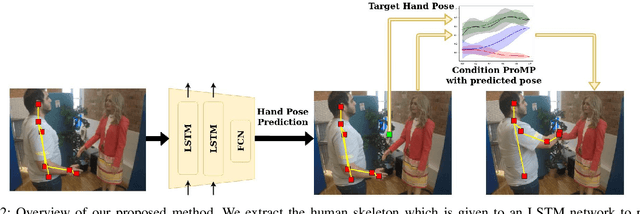

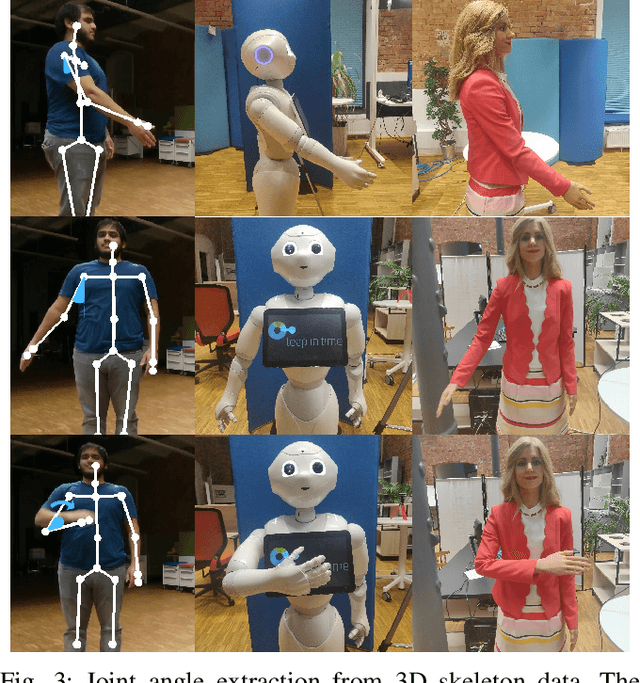

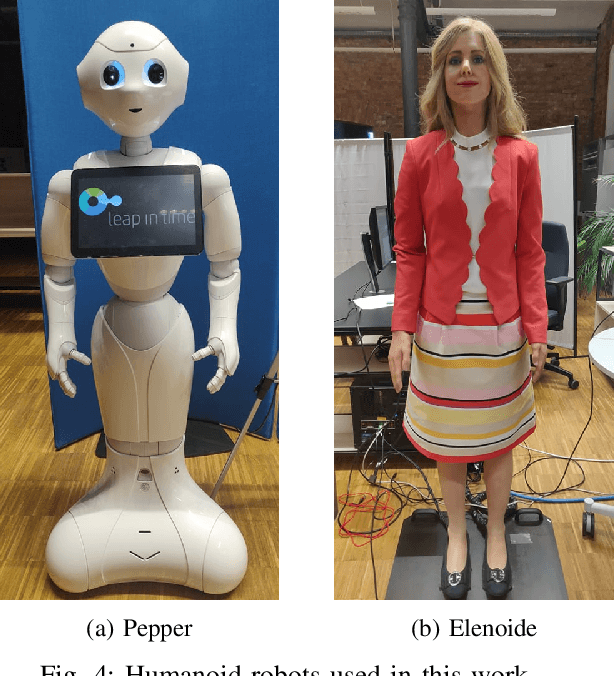

Learning Human-like Hand Reaching for Human-Robot Handshaking

Feb 28, 2021

Abstract: One of the first and foremost non-verbal interactions that humans perform is a handshake. It has an impact on first impressions as touch can convey complex emotions. This makes handshaking an important skill for the repertoire of a social robot. In this paper, we present a novel framework for learning human-robot handshaking behaviours for humanoid robots solely using third-person human-human interaction data. This is especially useful for non-backdrivable robots that cannot be taught by demonstrations via kinesthetic teaching. Our approach can be easily executed on different humanoid robots. This removes the need for re-training, which is especially tedious when training with human-interaction partners. We show this by applying the learnt behaviours on two different humanoid robots with similar degrees of freedom but different shapes and control limits.

Human-Robot Handshaking: A Review

Feb 14, 2021

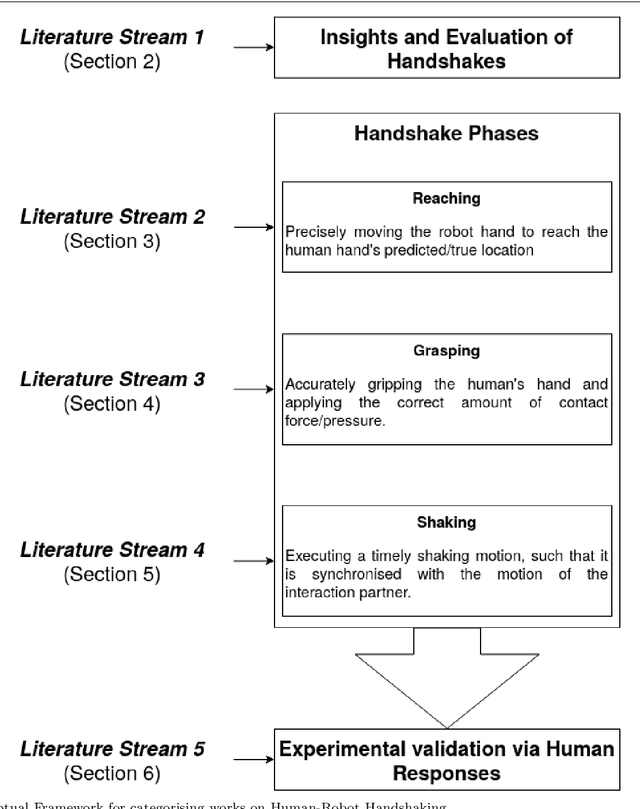

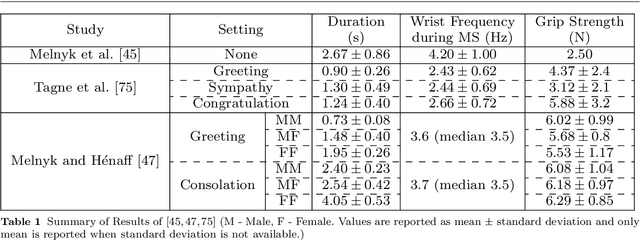

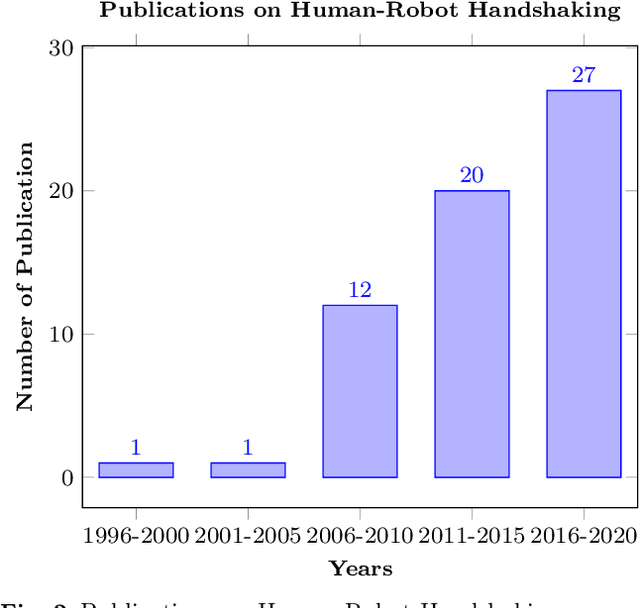

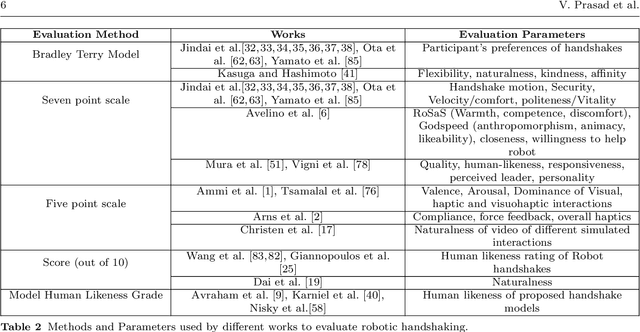

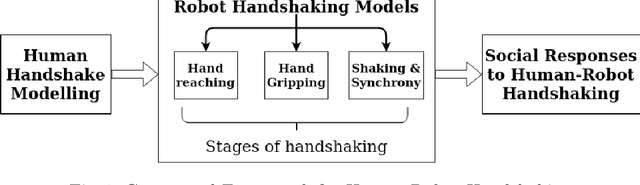

Abstract: For some years now, the use of social, anthropomorphic robots in various situations has been on the rise. These are robots developed to interact with humans and are equipped with corresponding extremities. They already support human users in various industries, such as retail, gastronomy, hotels, education and healthcare. During such Human-Robot Interaction (HRI) scenarios, physical touch plays a central role in the various applications of social robots as interactive non-verbal behaviour is a key factor in making the interaction more natural. Shaking hands is a simple, natural interaction used commonly in many social contexts and is seen as a symbol of greeting, farewell and congratulations. In this paper, we take a look at the existing state of Human-Robot Handshaking research, categorise the works based on their focus areas, and draw out the major findings of these areas while analysing their pitfalls. We mainly see that some form of synchronisation exists during the different phases of the interaction. In addition to this, we also find that additional factors like gaze, voice, facial expressions, etc. can affect the perception of a robotic handshake and that internal factors like personality and mood can affect the way in which handshaking behaviours are executed by humans. Based on the findings and insights, we finally discuss possible ways forward for research on such physically interactive behaviours.

Advances in Human-Robot Handshaking

Aug 26, 2020

Abstract: The use of social, anthropomorphic robots to support humans in various industries has been on the rise. During Human-Robot Interaction (HRI), physically interactive non-verbal behaviour is key for more natural interactions. Handshaking is one such natural interaction used commonly in many social contexts. It is one of the first non-verbal interactions which takes place and should, therefore, be part of the repertoire of a social robot. In this paper, we explore the existing state of Human-Robot Handshaking and discuss possible ways forward for such physically interactive behaviours.

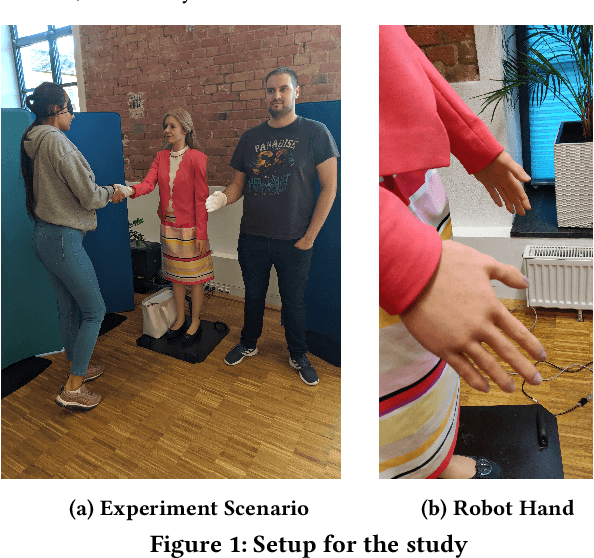

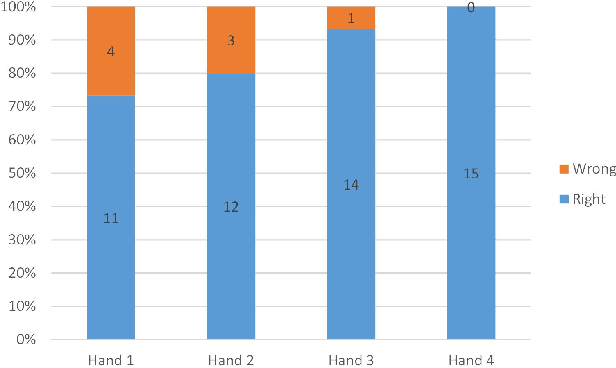

Evaluation of the Handshake Turing Test for anthropomorphic Robots

Jan 28, 2020

Abstract: Handshakes are fundamental and common greeting and parting gestures among humans. They are important in shaping first impressions as people tend to associate character traits with a person's handshake. To widen the social acceptability of robots and make a lasting first impression, a good handshaking ability is an important skill for social robots. Therefore, to test the human-likeness of a robot handshake, we propose an initial Turing-like test, primarily for the hardware interface to future AI agents. We evaluate the test on an android robot's hand to determine if it can pass for a human hand. This is an important aspect of Turing tests for motor intelligence where humans have to interact with a physical device rather than a virtual one. We also propose some modifications to the definition of a Turing test for such scenarios taking into account that a human needs to interact with a physical medium.
